A dockerized MLflow server using an S3 server for artifacts (see minio-s3-server) and PostgreSQL for backend storage.

- Copy the provided example environment file and update it with your values, especially `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD`. Those values will serve as `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`.

      cp .env.example .env

- Start the service with docker-compose:

      docker-compose up -d

- Log in to your MinIO console and create a bucket that will be the root of the MLflow runs:
  - go to http://your-minio-host:9001
  - log in using `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD`
  - create a `mlflow` bucket; its name must match the `ARTIFACT_ROOT_URI` in the `.env`
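Before starting the stack, it can help to sanity-check the `.env` file against the steps above. The following is only a sketch and is not part of this repository: it assumes `ARTIFACT_ROOT_URI` has the form `s3://<bucket>/...` (the format in `.env.example` may differ), and the helper names `parse_env` and `check_env` are hypothetical.

```python
from urllib.parse import urlparse

REQUIRED = ("MINIO_ROOT_USER", "MINIO_ROOT_PASSWORD", "ARTIFACT_ROOT_URI")


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def check_env(values, bucket="mlflow"):
    """Return a list of human-readable problems (empty when all is fine)."""
    problems = [
        f"{name} is missing or empty" for name in REQUIRED if not values.get(name)
    ]
    uri = values.get("ARTIFACT_ROOT_URI", "")
    if uri:
        parsed = urlparse(uri)
        # Assumption: the bucket is the host part of an s3:// URI.
        if parsed.scheme != "s3" or parsed.netloc != bucket:
            problems.append(f"ARTIFACT_ROOT_URI should point at s3://{bucket}")
    return problems
```

Calling `check_env(parse_env(open(".env").read()))` returns an empty list when the three values are set and the URI points at the `mlflow` bucket.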

Your MLflow server is up!

I recommend testing that you can log metrics and artifacts from a simple client before moving on to advanced artifact logging scenarios. The `test_client/` folder offers just this; see the client-side part below.

Now that your MLflow server is running, you may want to test that a client can write metrics to the metrics store and artifacts to the artifact store. Some configuration is required on any client that logs to the MLflow server. The `test_client/` folder provides a minimal example of Python scikit-learn code logging its training to MLflow.

To push your training results to an MLflow server that uses an S3-like interface as its artifact store, your client needs to:

- have mlflow installed (`pip install mlflow`)
- have `aws` local settings configured to interact with the S3 bucket.
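On the Python side, the two requirements above can also be expressed as a handful of environment variables. This is a minimal sketch and not the code shipped in `test_client/`: `MLFLOW_TRACKING_URI`, `MLFLOW_S3_ENDPOINT_URL`, `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` are variables that MLflow and boto3 read, while the helper names and any host names or ports you pass in are assumptions to adapt to your setup.

```python
import os
import tempfile


def client_env(tracking_uri, s3_endpoint, access_key, secret_key):
    """Environment variables an MLflow client needs for an S3-like artifact store."""
    return {
        "MLFLOW_TRACKING_URI": tracking_uri,
        "MLFLOW_S3_ENDPOINT_URL": s3_endpoint,
        "AWS_ACCESS_KEY_ID": access_key,
        "AWS_SECRET_ACCESS_KEY": secret_key,
    }


def log_smoke_run(env):
    """Log one metric and one small artifact; requires mlflow and boto3."""
    import mlflow  # imported lazily so client_env works without mlflow installed

    os.environ.update(env)
    mlflow.set_tracking_uri(env["MLFLOW_TRACKING_URI"])
    with mlflow.start_run():
        # The metric is sent to the tracking server.
        mlflow.log_metric("smoke_test", 1.0)
        with tempfile.TemporaryDirectory() as tmp:
            path = os.path.join(tmp, "hello.txt")
            with open(path, "w") as handle:
                handle.write("hello from the client\n")
            # The artifact is uploaded to the S3 bucket.
            mlflow.log_artifact(path)
```

For example, `log_smoke_run(client_env("http://your-mlflow-host:5000", "http://your-minio-host:9000", user, password))` would create one run, where the hosts and ports shown are placeholders rather than values taken from this repository.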

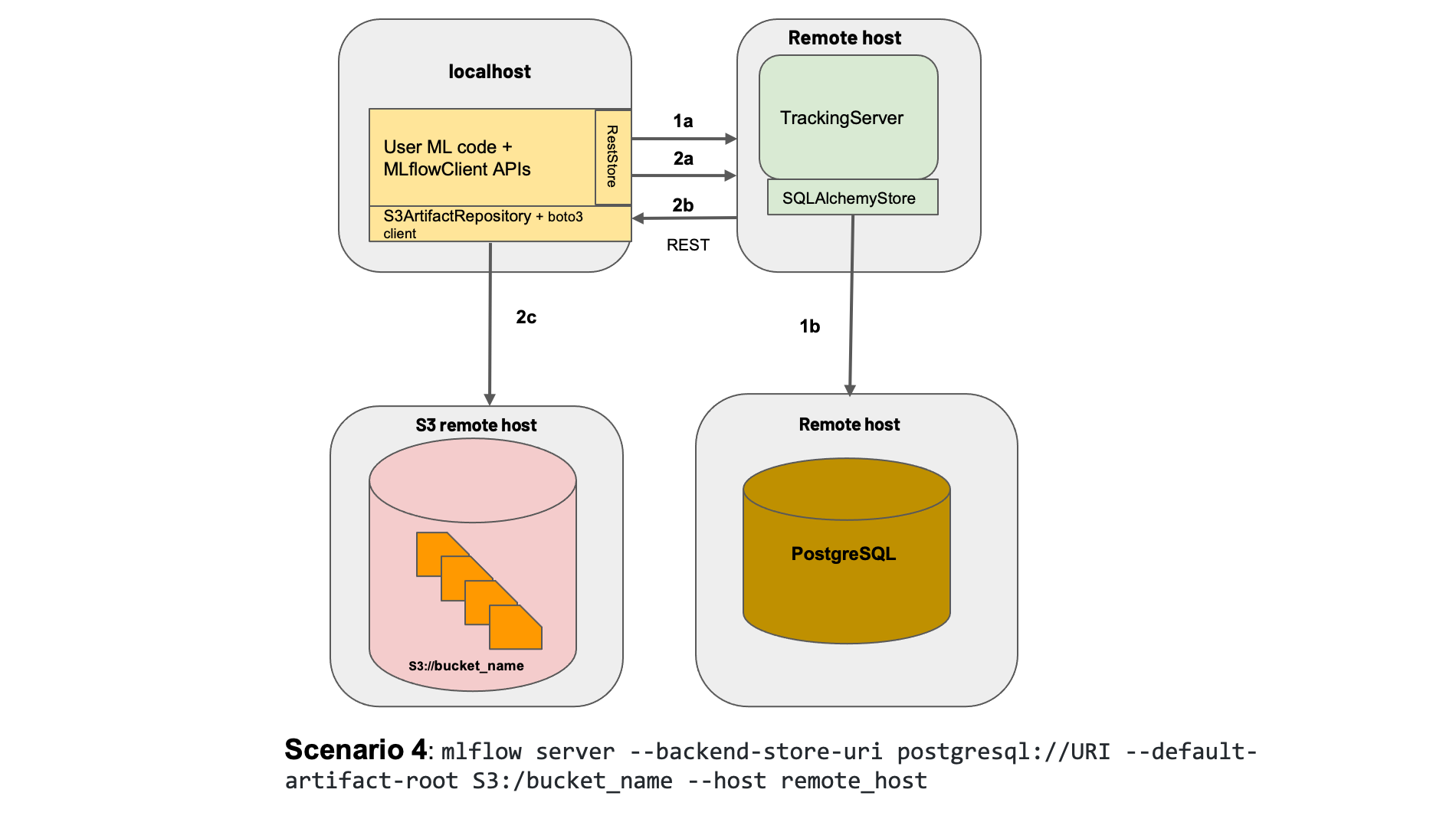

:info: When pushing artifacts from the client (e.g. saving a model), the artifacts are pushed directly to the S3 bucket, not proxied through the MLflow server. Your client therefore needs to know the S3 endpoint to push the artifacts to and have the credentials to do so. See the architecture diagram.

Source: MLflow.org, https://mlflow.org/docs/latest/tracking.html#concepts
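Because the upload bypasses the MLflow server, one way to confirm that an artifact arrived is to list the bucket directly. The sketch below assumes `boto3` is installed and that a run's artifact URI looks like `s3://mlflow/<experiment>/<run>/artifacts`; the helper names `split_s3_uri` and `list_artifacts` are hypothetical, and the endpoint and credentials are placeholders you supply.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split s3://bucket/some/prefix into (bucket, prefix)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def list_artifacts(artifact_uri, endpoint_url, access_key, secret_key):
    """Return the object keys stored under a run's artifact URI."""
    import boto3  # lazy import: only needed when actually talking to the bucket

    bucket, prefix = split_s3_uri(artifact_uri)
    s3 = boto3.client(
        "s3",
        endpoint_url=endpoint_url,
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
    # A single call returns at most 1000 keys, which is plenty for a smoke test.
    response = s3.list_objects_v2(Bucket=bucket, Prefix=prefix)
    return [item["Key"] for item in response.get("Contents", [])]
```

If the list comes back empty after a run that logged a model, the client most likely could not reach the S3 endpoint or lacked valid credentials.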


Note: We use the AWS CLI because S3 is a standard interface for object storage, and you can interact with a self-hosted MinIO server just as you would with Amazon Simple Storage Service (S3).

- Install `awscli` and `awscli-plugin-endpoint` using `pip`:

      python3 -m pip install awscli awscli-plugin-endpoint

- Create the file `~/.aws/config` by running the following command:

      aws configure set plugins.endpoint awscli_plugin_endpoint

- Open `~/.aws/config` in a text editor and edit it as follows:

      [plugins]
      endpoint = awscli_plugin_endpoint

      [default]
      region = fr-par
      s3 =
        endpoint_url = https://your-minio-host:your-minio-port
        signature_version = s3v4
        max_concurrent_requests = 100
        max_queue_size = 1000
        multipart_threshold = 50MB
        # Edit the multipart_chunksize value according to the file sizes that you want to upload. The present configuration allows uploading files up to 10 GB (1000 requests * 10 MB). For example, setting it to 5GB allows you to upload files up to 5 TB.
        multipart_chunksize = 10MB
      s3api =
        endpoint_url = https://your-minio-host:your-minio-port

- Generate a credentials file using:

      aws configure

- Open the `~/.aws/credentials` file and configure your API key as follows:

      [default]
      aws_access_key_id=<ACCESS_KEY_THE_VALUE_CAN_BE_YOUR_MINIO_ROOT_USER>
      aws_secret_access_key=<SECRET_KEY_CAN_BE_YOUR_MINIO_ROOT_PASSWORD>

- Test your cluster:

      aws s3 ls

Kudos to the Scaleway documentation for this AWS configuration setup.
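The upload limit quoted in the `multipart_chunksize` comment above is simple arithmetic: the largest file is the number of parts times the chunk size. The sketch below takes the 1000-part figure from that comment as given, so check the actual limit of your own MinIO deployment; `max_upload_mb` and `min_chunk_mb` are hypothetical helper names.

```python
import math

# Taken from the config comment; an assumption about the server, not a verified limit.
MAX_PARTS = 1000


def max_upload_mb(chunk_mb, max_parts=MAX_PARTS):
    """Largest file (in MB) that fits in max_parts chunks of chunk_mb each."""
    return chunk_mb * max_parts


def min_chunk_mb(file_mb, max_parts=MAX_PARTS):
    """Smallest whole-MB chunk size that lets a file of file_mb be uploaded."""
    return math.ceil(file_mb / max_parts)


print(max_upload_mb(10))     # 10000 MB, i.e. about 10 GB
print(min_chunk_mb(25_000))  # 25 MB chunks for a 25 GB file
```

In practice this means that a model file larger than about 10 GB needs a bigger `multipart_chunksize` than the 10MB shown in the config.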