This repository is a minimal PyTorch implementation of two sequence-to-sequence (seq2seq) models:

- Luong seq2seq model: used in NLP sequence-to-sequence translation

- Pointer networks: an important building block in many neural networks for combinatorial problems.

This repo is written in a non-technical way to explain the intuition behind these models. However, the code follows the papers that describe the models. A more technical description will be published soon.

sequence to sequence; Luong; NLP; optimization; pointer networks

- PyTorch 1.4.0

- Python 3.7.6

We used the date generation code from https://github.com/tensorflow/tfjs-examples/tree/master/date-conversion-attention to create a dataset and followed the same steps as that example, but our implementation uses NLLLoss instead of the categorical softmax loss function. The current implementation is meant for learning purposes and might not be efficient in terms of speed.
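The two loss formulations are closely related: a negative log-likelihood loss applied to log-softmax outputs gives the same value as a categorical cross-entropy computed from the softmax probabilities. The following is a minimal, framework-free sketch of that equivalence. The logits and target are made-up values, and the sketch assumes the model emits log-probabilities before the loss is applied, which the notebooks may or may not do in exactly this way.

```python
import math

def log_softmax(logits):
    # Numerically stable log-softmax: subtract the max before exponentiating.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]

def nll_loss(log_probs, target):
    # Negative log-likelihood of the target class, given log-probabilities.
    return -log_probs[target]

def softmax_cross_entropy(logits, target):
    # Categorical cross-entropy against a one-hot target, via explicit softmax.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    one_hot = [1.0 if i == target else 0.0 for i in range(len(logits))]
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))

logits = [2.0, 0.5, -1.0]  # hypothetical decoder scores for one output step
target = 0                 # hypothetical index of the correct class

loss_a = nll_loss(log_softmax(logits), target)
loss_b = softmax_cross_entropy(logits, target)
print(loss_a, loss_b)  # the two values agree up to floating-point error
```

In PyTorch terms, this mirrors the documented relationship in which `CrossEntropyLoss` combines `LogSoftmax` and `NLLLoss`.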

See Loung_seq2seq_example.ipynb.

This model is similar to the previous one, but here we use the softmax output to point back to the input. This keeps the output and input lengths consistent and removes the need to predefine a fixed output length.
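As a rough illustration of "pointing", the sketch below scores each encoder state against the current decoder state and applies a softmax over the input positions, so the distribution always has exactly as many entries as the input. It is a simplification under stated assumptions: the scoring here is a plain dot product chosen for brevity, whereas the pointer network paper uses a learned additive scoring function, and all state vectors are made-up numbers.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def pointer_distribution(decoder_state, encoder_states):
    # One score per input position (dot product used as a stand-in scorer).
    scores = [
        sum(d * e for d, e in zip(decoder_state, enc))
        for enc in encoder_states
    ]
    # The softmax runs over input positions, not over a fixed vocabulary.
    return softmax(scores)

# Hypothetical encoder states, one per input item, and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_state = [0.2, 2.0]

probs = pointer_distribution(decoder_state, encoder_states)
pointer = max(range(len(probs)), key=probs.__getitem__)
print(probs, pointer)
```

Adding or removing an input item changes the length of `probs` automatically, which is why no output size has to be fixed in advance.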

I will use a number sorting example to demonstrate how a pointer network works.

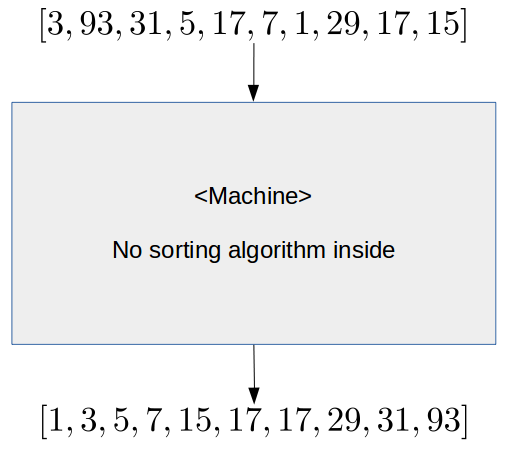

Let us assume a pointer net is a black-box machine that we feed with an array (sequence) of unsorted numbers. The machine sorts the input array. We are sure there is no sorting algorithm (such as bubble sort) inside the machine. How does it do it?

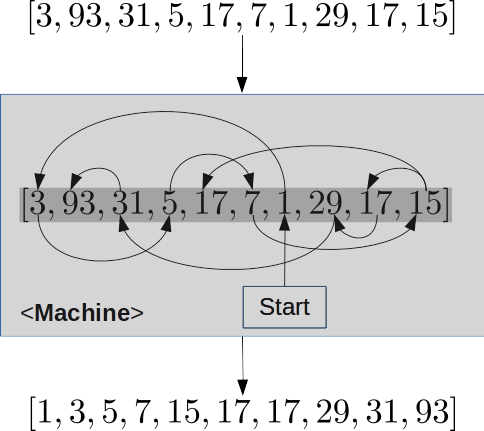

Now assume we can obtain an estimation of what the machine actually does:

Each one of these arrows points to an item in the input. For each input the machine will generate a sequence of these arrows (pointers). Therefore, the machine must learn a mechanism that generates these arrows.

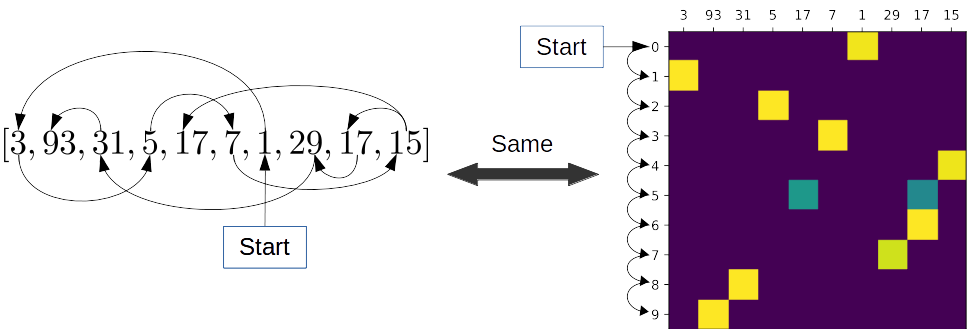

Let us create a better representation than the one in Figure 1 by using a one-hot encoding matrix, where each row tells us which input (x axis) to point to in the current step (y axis).

Where: yellow squares = 1, green = 0.5, blue = 0.

Please note that, since there are two 17s in the input, we can point to any one of them with the same probability.
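The target matrix described above can be sketched in a few lines. The helper name `pointer_targets` and the example input are hypothetical (the actual input in the figure is not reproduced here); the sketch only assumes that duplicated values share the probability mass equally, as in the note about the two 17s.

```python
def pointer_targets(values):
    """Build one row per output step; each row is a distribution over inputs."""
    rows = []
    for v in sorted(values):
        # All input positions holding this value are equally valid targets.
        matches = [i for i, x in enumerate(values) if x == v]
        row = [0.0] * len(values)
        for i in matches:
            row[i] = 1.0 / len(matches)
        rows.append(row)
    return rows

values = [17, 3, 17, 9]  # hypothetical input containing a duplicate
for row in pointer_targets(values):
    print(row)
```

Rows for unique values are one-hot, while the two rows that correspond to the duplicated value each put 0.5 on both of its positions.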

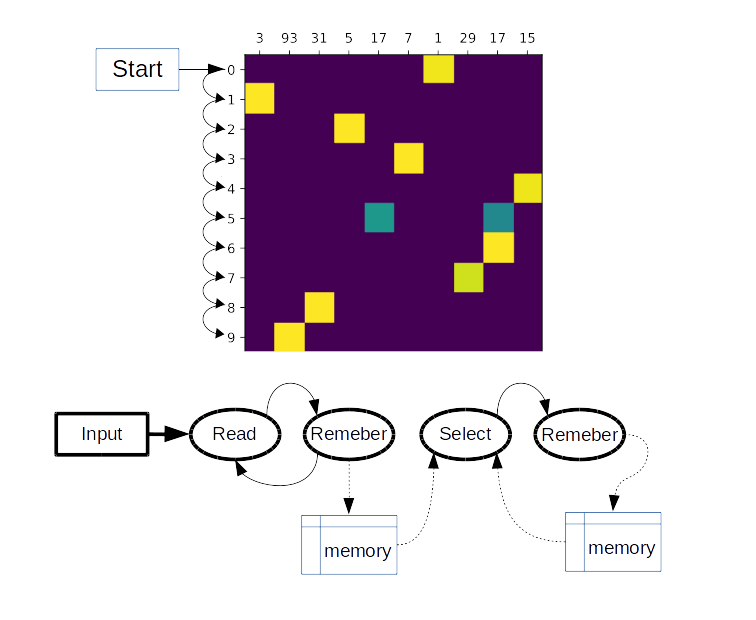

So far we have seen an estimation of what the machine is doing, but is that what a pointer network actually does? To find out, let us sketch how a human would do it:

- Repeat these steps:

  - Read an item from the sequence.

  - Remember it. Why? Because we are looking for the smallest number, so we need to remember all the numbers we have visited.

- After we have read and remembered all items from the input, we can start generating the arrows in Figure 2. We repeat the following steps until we have generated all arrows:

  - Pick the smallest number that has not been picked yet, then draw an arrow to it.

  - Remember it. Why? Because we will use it to find the next smallest number.

We need to remember both the input and the sequence generated so far, and we use both memories to generate the next arrow. That is, (memory(input), memory(output so far)) -> next arrow.
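The human procedure above can be written down directly. This is only an illustration of the steps, not the network itself: the `select` function below is a hand-written rule, whereas a pointer network has to learn an equivalent mechanism from data. The function names and the example sequence are hypothetical.

```python
def encode(sequence):
    # Input side: read each item and remember it.
    input_memory = []
    for item in sequence:
        input_memory.append(item)
    return input_memory

def select(input_memory, output_memory):
    # Use both memories: skip positions already pointed to,
    # then pick the smallest remaining number.
    candidates = [i for i in range(len(input_memory)) if i not in output_memory]
    return min(candidates, key=lambda i: input_memory[i])

def decode(input_memory):
    # Output side: generate one arrow (input index) per step and remember it.
    output_memory = []
    while len(output_memory) < len(input_memory):
        arrow = select(input_memory, output_memory)
        output_memory.append(arrow)
    return output_memory

sequence = [8, 2, 5, 1]  # hypothetical unsorted input
arrows = decode(encode(sequence))
print(arrows)                          # indices into the input
print([sequence[i] for i in arrows])   # the input read in arrow order
```

Each call to `select` receives (memory(input), memory(output so far)) and returns the next arrow, matching the mapping described above.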

Therefore, we can sketch the following components from the above steps:

The main component is select, which uses both the memory from the input side and the memory from the output side to generate the next arrow. The remember component might apply different preprocessing steps before it stores the data it receives.

Let us combine the components on the input side (read, remember, and memory) and call the result the encoder. Likewise, let us combine the components on the output side (select, remember, and memory) and call the result the decoder. Therefore, the pointer network is a neural network architecture that encodes the input into a latent memory and uses a decoder step to generate a pointer to the input.

However, the representation in Figure 2 is what we actually try to teach the pointer network to produce. During training, the pointer network starts by making random guesses and improves over time until it makes clear decisions.

Training a pointer network until convergence is shown in Figure 5. The figure is generated from masked_pointer_net_example.ipynb.

- see pointer_net_example.ipynb for the unmasked pointer network

- see masked_pointer_net_example.ipynb for the masked pointer network; notice the radically improved performance!

- see pointer_net_multi_features_example.ipynb for the masked pointer network applied to input vectors with higher dimensions.
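One common reading of "masked" in this setting is that input positions already pointed to are excluded from the softmax at later steps. The text above does not spell out the exact masking used in the notebooks, so the sketch below is an assumption along those lines, with made-up scores and hypothetical function names.

```python
import math

def masked_softmax(scores, mask):
    # mask[i] is True when position i must not be selected again.
    kept = [s for s, blocked in zip(scores, mask) if not blocked]
    m = max(kept)
    exps = [0.0 if blocked else math.exp(s - m)
            for s, blocked in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_decode(score_rows):
    # score_rows[t][i]: hypothetical score for pointing to input i at step t.
    mask = [False] * len(score_rows[0])
    pointers = []
    for scores in score_rows:
        probs = masked_softmax(scores, mask)
        choice = max(range(len(probs)), key=probs.__getitem__)
        pointers.append(choice)
        mask[choice] = True  # block this input for all later steps
    return pointers

# At step 2 the raw scores still favour input 0; the mask forces a new choice.
score_rows = [
    [2.0, 1.9, 0.1],
    [2.1, 1.0, 0.3],
    [0.5, 0.4, 0.2],
]
print(greedy_decode(score_rows))
```

Under this assumption the decoder can never point to the same input twice, which removes a whole class of errors the unmasked network has to learn to avoid on its own.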

- [1] Luong model paper: https://arxiv.org/abs/1508.04025

- [2] Pointer network paper: https://arxiv.org/abs/1506.03134

- [3] TensorFlow 2.0 implementation: https://github.com/AndreMaz/deep-attention