Technical report: DCASE. Workshop paper with more analysis of the parameter reduction methods: DCASE. The code in this repo is based on the CP JKU submission for DCASE 2019. The architectures are based on Receptive-Field-Regularized CNNs (The Receptive Field as a Regularizer in Deep Convolutional Neural Networks for Acoustic Scene Classification; Receptive-Field-Regularized CNN Variants for Acoustic Scene Classification).

Feel free to open an issue or contact the authors if you run into any problems.

cpjku_dcase20_colab.ipynb contains the code required to run feature extraction and training on Google Colab. It also contains a low-complexity baseline (65K non-zero parameters) for DCASE 2021 Task 1a with an accuracy of 0.623.
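The 65K figure counts non-zero parameters, which is the quantity that pruning reduces. The following is an illustrative sketch only, not the pruning code used in this repo. In plain Python, with each parameter tensor assumed to be a flat list of floats under a hypothetical layer name, it counts non-zero weights, applies global magnitude pruning to a target count (similar in spirit to --prune_target_params, whose actual implementation may differ), and estimates storage at 2 bytes per float16 parameter:

```python
"""Sketch: count non-zero parameters, prune by magnitude, estimate float16 size.

Assumption: each parameter tensor is represented as a flat list of floats,
keyed by a hypothetical layer name; with PyTorch you would iterate over
model.named_parameters() instead. This is not the repo's pruning code.
"""
from typing import Dict, List

Params = Dict[str, List[float]]


def count_nonzero(params: Params) -> int:
    """Count weights that are not exactly zero (the "non-zero parameters")."""
    return sum(1 for values in params.values() for v in values if v != 0.0)


def float16_size_kb(num_params: int) -> float:
    """Storage in KB (1 KB = 1000 bytes) at 2 bytes per float16 value."""
    return num_params * 2 / 1000


def magnitude_prune(params: Params, target_nonzero: int) -> Params:
    """Global magnitude pruning: zero all but the target_nonzero largest |w|.

    The sort is stable, so ties are broken by position in params.
    The input dict is not modified; a new dict is returned.
    """
    ranked = sorted(
        ((abs(v), name, i) for name, values in params.items()
         for i, v in enumerate(values)),
        key=lambda t: t[0],
        reverse=True,
    )
    keep = {(name, i) for _, name, i in ranked[:target_nonzero]}
    return {
        name: [v if (name, i) in keep else 0.0 for i, v in enumerate(values)]
        for name, values in params.items()
    }


if __name__ == "__main__":
    params = {"conv1": [0.5, -0.01, 0.2, 0.0], "fc": [-0.9, 0.03]}
    pruned = magnitude_prune(params, target_nonzero=3)
    print(pruned)                   # {'conv1': [0.5, 0.0, 0.2, 0.0], 'fc': [-0.9, 0.0]}
    print(count_nonzero(pruned))    # 3
    print(float16_size_kb(250000))  # 500.0
```

With 1 KB taken as 1000 bytes, 250000 float16 parameters come to 500 KB, which matches the size quoted for Submission 2.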

Conda should be installed on the system.

install_dependencies.sh installs the following:

- Python 3

- PyTorch

- torchvision

- tensorboard-pytorch

- etc.

- Install Anaconda or conda.

- Run the install dependencies script:

./install_dependencies.sh

This creates the conda environment cpjku_dcase20 with all the dependencies.
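To confirm that the environment is usable, you can check that its main packages can be found by the environment's Python. This is a hypothetical helper, not part of the repo; torch and torchvision are the standard import names for PyTorch and torchvision, and tensorboard-pytorch is left out because its import name is not stated above:

```python
"""Sketch: check that the main dependencies are importable in the active env."""
import importlib.util
from typing import Dict, Iterable

# Import names for the packages listed above (tensorboard-pytorch omitted).
DEFAULT_MODULES = ("torch", "torchvision")


def check_modules(names: Iterable[str] = DEFAULT_MODULES) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name}: {'ok' if found else 'MISSING'}")
```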

Running

You need to run source activate cpjku_dcase20 before running any exp*.py script.
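If you write your own wrapper scripts around exp*.py, they can fail early when this environment is not active. A hypothetical sketch, not part of the repo: it reads CONDA_DEFAULT_ENV, which conda typically sets on activation (to the environment name when activated by name, or to its path when activated by path):

```python
"""Sketch: exit early unless the cpjku_dcase20 conda environment is active."""
import os
import sys
from typing import Mapping, Optional

EXPECTED_ENV = "cpjku_dcase20"


def active_conda_env(environ: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return CONDA_DEFAULT_ENV (set by conda on activation) or None if unset."""
    env = os.environ if environ is None else environ
    return env.get("CONDA_DEFAULT_ENV")


def require_env(expected: str = EXPECTED_ENV,
                environ: Optional[Mapping[str, str]] = None) -> None:
    """Raise SystemExit with a hint if a different (or no) env is active."""
    name = active_conda_env(environ)
    if name != expected:
        sys.exit(f"expected conda env {expected!r}, found {name!r}; "
                 f"run: source activate {expected}")
```

Call require_env() at the top of such a script, before importing heavier dependencies.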

After installing the dependencies:

- Activate the conda environment created by ./install_dependencies.sh:

$ source activate cpjku_dcase20

- Download the dataset:

$ python download_dataset.py --version 2020b

You can also download previous versions of the DCASE datasets with --version year, where year is one of 2016, 2017, 2018, 2019, 2020a, or any dataset from dcase_util.

Alternatively, if you already have the dataset downloaded:

- Create a symbolic link to the dataset:

ln -s ~/some_shared_folder/TAU-urban-acoustic-scenes-2019-development ./datasets/TAU-urban-acoustic-scenes-2019-development

- Or change the paths in config/[experiment_name].json.

- Run the experiment script:

$ CUDA_VISIBLE_DEVICES=0 python exp_[experiment_name].py

- The output of each run is stored in outdir. You can also monitor the experiments with TensorBoard, using the logs stored in the TensorBoard runs directory, runsdir. For example:

tensorboard --logdir ./runsdir/cp_resnet/exp_Aug20_14.11.28

The exact command is printed when you run the experiment script.
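If you start several experiments, a small helper can pick the newest run directory to pass to TensorBoard. This is a hypothetical sketch, not part of the repo: it assumes runs live in subdirectories named exp_* under ./runsdir/cp_resnet, as in the example path above, and treats the most recently modified one as the newest:

```python
"""Sketch: find the newest TensorBoard run directory and print the command."""
from pathlib import Path
from typing import Optional

# Assumed layout, taken from the example path above: runsdir/cp_resnet/exp_*
RUNS_ROOT = Path("./runsdir/cp_resnet")


def latest_run(root: Path = RUNS_ROOT) -> Optional[Path]:
    """Return the most recently modified exp_* subdirectory of root, or None."""
    if not root.is_dir():
        return None
    runs = [p for p in root.glob("exp_*") if p.is_dir()]
    return max(runs, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    run = latest_run()
    if run is None:
        print(f"no runs found under {RUNS_ROOT}")
    else:
        print(f"tensorboard --logdir {run}")
```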

Default adapted receptive field RN1 (as in Koutini2019Receptive, cited below):

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py

For a list of arguments:

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --help

Large receptive field:

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --rho 15

Very small maximum receptive field:

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --rho 2

The argument --arch selects the CNN architecture; the available architectures are stored in models/.

For example, Frequency-Aware CP_ResNet:

CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --arch cp_faresnet --rho 5

Frequency-Damped CP_ResNet:

CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --arch cp_resnet_freq_damp --rho 7
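To see which values --arch may accept, you can list the modules in models/. This is a hypothetical sketch that assumes each architecture lives in its own .py file named after its --arch value (as cp_resnet or cp_faresnet would be); check the --help output to confirm:

```python
"""Sketch: list candidate --arch values by scanning the models/ directory."""
from pathlib import Path
from typing import List


def list_architectures(models_dir: Path = Path("models")) -> List[str]:
    """Return sorted module names in models_dir, skipping private/dunder files.

    Assumption: one architecture per .py file, named like its --arch value.
    """
    if not models_dir.is_dir():
        return []
    return sorted(p.stem for p in models_dir.glob("*.py")
                  if not p.stem.startswith("_"))


if __name__ == "__main__":
    print("\n".join(list_architectures()))
```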

Using a smaller width:

CUDA_VISIBLE_DEVICES=0 python3 exp_cp_resnet.py --width 64 --rho 5

Additionally removing some of the trailing layers:

CUDA_VISIBLE_DEVICES=0 python3 exp_cp_resnet.py --width 64 --depth_restriction "0,0,3" --rho 5

And pruning 90% of the parameters:

CUDA_VISIBLE_DEVICES=0 python3 exp_cp_resnet.py --arch cp_resnet_prune --rho 5 --width 64 --depth_restriction "0,0,3" --prune --prune_ratio=0.9

Submission 1: Decomposed CP-ResNet (rho=3) with 95.83% accuracy on the development set (94.7% on the unseen evaluation set), 18740 trainable parameters (34.21875 KB in float16):

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --arch cp_resnet_decomp --rho 3 --width 48 --depth_restriction "0,4,4"

Submission 2: Pruned Frequency-Damped CP-ResNet (rho=4) with 97.3% accuracy on the development set (96.5% on the unseen evaluation set), 250000 trainable parameters (500 KB in float16):

$ CUDA_VISIBLE_DEVICES=1 python exp_cp_resnet.py --arch cp_resnet_df_prune --rho 4 --width 64 --depth_restriction "0,2,4" --prune --prune_method=all --prune_target_params 250000

Submission 3: Frequency-Damped CP-ResNet (width and depth restriction) (rho=4) with 97.3% accuracy on the development set (96.5% on the unseen evaluation set), 247316 trainable parameters (500 KB in float16):

$ CUDA_VISIBLE_DEVICES=2 python exp_cp_resnet.py --arch cp_resnet_freq_damp --rho 4 --width 48 --depth_restriction "0,1,3"

Reproducing Results from the Paper with the DCASE 2018 Dataset

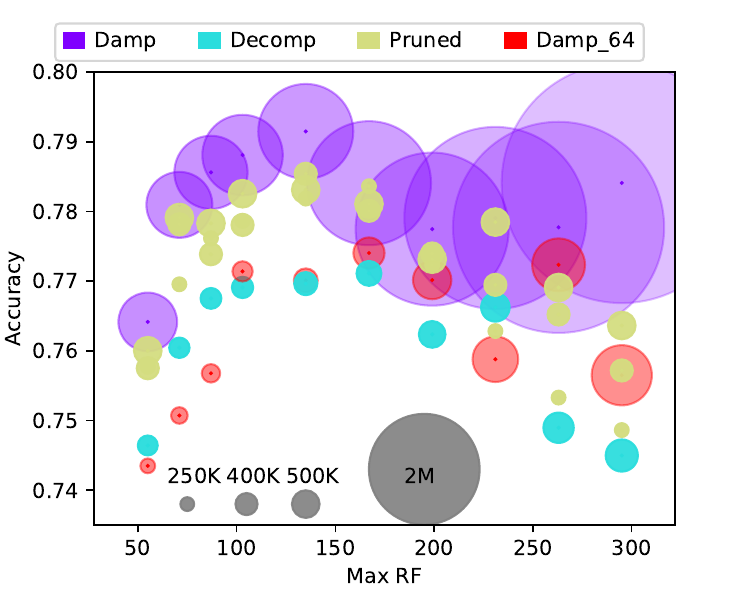

The plots use different values of rho; in the examples below we set rho=0.
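rho controls the maximum receptive field of the network (a larger rho gives a larger receptive field, as in the --rho 15 and --rho 2 examples above). The exact mapping from rho to layer configuration is defined in the model code and is not reproduced here. As a generic sketch, the receptive field of a stack of layers along one axis can be computed from their kernel sizes and strides, which also shows how 1x1 kernels in later layers would stop the receptive field from growing:

```python
"""Sketch: receptive field of a stack of conv/pooling layers along one axis."""
from typing import Sequence, Tuple


def receptive_field(layers: Sequence[Tuple[int, int]]) -> int:
    """layers: (kernel_size, stride) pairs, ordered from input to output.

    Each layer adds (kernel_size - 1) * jump input positions, where jump is
    the product of the strides of all earlier layers.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


if __name__ == "__main__":
    print(receptive_field([(3, 1)] * 4))                        # 9
    # Swapping the last two 3x3 convs for 1x1 convs caps the growth.
    print(receptive_field([(3, 1), (3, 1), (1, 1), (1, 1)]))  # 5
    # A stride-2 pooling layer doubles what each later 3x3 conv adds.
    print(receptive_field([(3, 1), (2, 2), (3, 1)]))          # 8
```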

CP-ResNet:

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --dataset dcase2018.json --arch cp_resnet --depth_restriction "0,1,4" --rho 0

CP-ResNet with Frequency-Damped layers:

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --dataset dcase2018.json --arch cp_resnet_freq_damp --depth_restriction "0,1,4" --rho 0

CP-ResNet Decomposed with a decomposition factor of 4 (--decomp_factor 4):

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --dataset dcase2018.json --arch cp_resnet_decomp --depth_restriction "0,1,4" --rho 0 --decomp_factor 4

Frequency-Damped CP-ResNet Decomposed with a decomposition factor of 4 (--decomp_factor 4):

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --dataset dcase2018.json --arch cp_resnet_decomp_freq_damp --depth_restriction "0,1,4" --rho 0 --decomp_factor 4

CP-ResNet pruned to a target number of parameters of 500k (--prune_target_params 500000):

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --dataset dcase2018.json --arch cp_resnet_prune --depth_restriction "0,1,4" --rho 0 --prune --prune_target_params 500000

Frequency-Damped CP-ResNet pruned to a target number of parameters of 500k (--prune_target_params 500000):

$ CUDA_VISIBLE_DEVICES=0 python exp_cp_resnet.py --dataset dcase2018.json --arch cp_resnet_df_prune --depth_restriction "0,1,4" --rho 0 --prune --prune_target_params 500000

Download the evaluation set:

$ python download_dataset.py --version 2020b_eval

Download the trained models for DCASE 2020 (DCASE 2019) and extract the model directories into the "pretrained_models" directory.

Check the eval_pretrained.ipynb notebook.

If you want to predict on a different dataset, you need to add the dataset to the config file.

For an example, look at the DCASE 2020 evaluation dataset in configs/datasets/dcase2020b_eval.json.
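Since the evaluation dataset has its own JSON file, one way to start is to copy that file and change its values. This is a hypothetical helper, not part of the repo: it copies a JSON dataset config, applies top-level overrides, and writes the result under a new name. The key name in the commented example is a placeholder, so inspect dcase2020b_eval.json for the keys it actually uses:

```python
"""Sketch: derive a new dataset config from an existing JSON config."""
import json
from pathlib import Path
from typing import Any, Dict


def derive_config(src: Path, dst: Path, overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Load src, apply top-level key overrides, write to dst, return the result.

    Nested keys are replaced wholesale, not merged. src is left unchanged.
    """
    with src.open() as f:
        config = json.load(f)
    config.update(overrides)
    with dst.open("w") as f:
        json.dump(config, f, indent=2)
    return config


# Example (the key "audio_path" is a hypothetical placeholder):
# derive_config(Path("configs/datasets/dcase2020b_eval.json"),
#               Path("configs/datasets/my_dataset.json"),
#               {"audio_path": "/data/my_dataset/audio"})
```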

This repo is used to publish our submissions to DCASE 2019, DCASE 2020, and MediaEval 2019. If some feature, architecture, or dataset is missing, feel free to contact the authors or open an issue.

The Receptive Field as a Regularizer, Receptive-Field-Regularized CNN Variants for Acoustic Scene Classification, Low-Complexity Models for Acoustic Scene Classification Based on Receptive Field Regularization and Frequency Damping

@inproceedings{Koutini2019Receptive,
  author = {Koutini, Khaled and Eghbal-zadeh, Hamid and Dorfer, Matthias and Widmer, Gerhard},
  title = {{The Receptive Field as a Regularizer in Deep Convolutional Neural Networks for Acoustic Scene Classification}},
  booktitle = {Proceedings of the European Signal Processing Conference (EUSIPCO)},
  address = {A Coru\~{n}a, Spain},
  year = {2019}
}

@inproceedings{KoutiniDCASE2019CNNVars,
  title = {Receptive-Field-Regularized CNN Variants for Acoustic Scene Classification},
  booktitle = {Preprint},
  date = {2019-10},
  author = {Koutini, Khaled and Eghbal-zadeh, Hamid and Widmer, Gerhard}
}

@inproceedings{Koutini2020,
  author = {Koutini, Khaled and Henkel, Florian and Eghbal-Zadeh, Hamid and Widmer, Gerhard},
  title = {Low-Complexity Models for Acoustic Scene Classification Based on Receptive Field Regularization and Frequency Damping},
  booktitle = {Proceedings of the Detection and Classification of Acoustic Scenes and Events 2020 Workshop (DCASE2020)},
  address = {Tokyo, Japan},
  month = {November},
  year = {2020},
  pages = {86--90}
}