Official implementation of "SCIPaD: Incorporating Spatial Clues into Unsupervised Pose-Depth Joint Learning" (T-IV 2024).

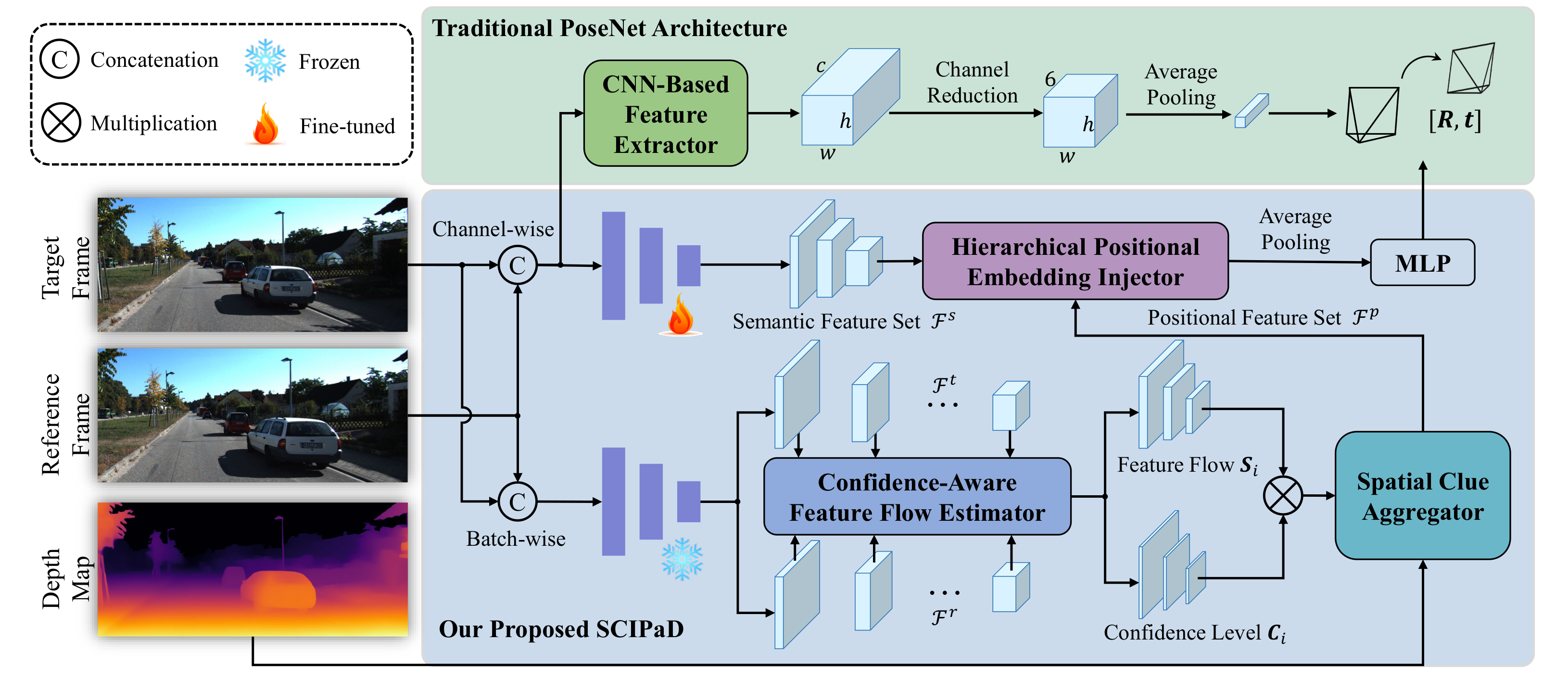

An illustration of our proposed SCIPaD framework. Compared with the traditional PoseNet architecture, it comprises three main parts: (1) a confidence-aware feature flow estimator, (2) a spatial clue aggregator, and (3) a hierarchical positional embedding injector.

Create a conda environment:

```bash
conda create -n scipad python==3.9
conda activate scipad
```

Install PyTorch, torchvision, and CUDA:

```bash
conda install pytorch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 pytorch-cuda=11.7 -c pytorch -c nvidia
```

Install the dependencies:

```bash
pip install -r requirements.txt
```

Note that we ran our experiments with PyTorch 2.0.1, CUDA 11.7, Python 3.9, and Ubuntu 20.04. Newer versions of PyTorch can also be used, but the metrics may differ slightly (~0.01%) from those reported in the paper.

You can download the pretrained model weights from Google Drive or Baidu Cloud Drive:

| Methods | WxH | abs rel | sq rel | RMSE | RMSE log | δ < 1.25 | δ < 1.25² | δ < 1.25³ |
|---|---|---|---|---|---|---|---|---|
| KITTI Raw | 640x192 | 0.090 | 0.650 | 4.056 | 0.166 | 0.918 | 0.970 | 0.985 |
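The depth columns above are the standard metrics used by Monodepth2-style evaluation: four error measures (lower is better) and three threshold accuracies δ (higher is better), where δ = max(gt/pred, pred/gt). The sketch below shows how they are computed for matched, positive depth values; it is an illustration written for this README, not SCIPaD's evaluation code, and `depth_errors` is a hypothetical name.

```python
import math
from typing import Dict, Sequence


def depth_errors(gt: Sequence[float], pred: Sequence[float]) -> Dict[str, float]:
    """Standard monocular depth metrics over matched, strictly positive depths.

    Lower is better for abs_rel, sq_rel, rmse and rmse_log; higher is better
    for the threshold accuracies a1, a2, a3 (delta < 1.25, 1.25**2, 1.25**3).
    """
    if len(gt) == 0 or len(gt) != len(pred):
        raise ValueError("gt and pred must be non-empty and have the same length")
    n = len(gt)
    pairs = list(zip(gt, pred))
    # delta = max(gt/pred, pred/gt) for every pixel
    ratios = [max(g / p, p / g) for g, p in pairs]
    return {
        "abs_rel": sum(abs(g - p) / g for g, p in pairs) / n,
        "sq_rel": sum((g - p) ** 2 / g for g, p in pairs) / n,
        "rmse": math.sqrt(sum((g - p) ** 2 for g, p in pairs) / n),
        "rmse_log": math.sqrt(sum((math.log(g) - math.log(p)) ** 2 for g, p in pairs) / n),
        "a1": sum(r < 1.25 for r in ratios) / n,
        "a2": sum(r < 1.25 ** 2 for r in ratios) / n,
        "a3": sum(r < 1.25 ** 3 for r in ratios) / n,
    }


if __name__ == "__main__":
    print(depth_errors([10.0, 20.0], [10.0, 25.0]))
```

In Monodepth2 these metrics are computed per test image and then averaged over the split, which is how a single row such as the one above is usually obtained.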

| Methods | WxH | Seq09 t_err (%) | Seq09 r_err (°/100m) | Seq09 ATE (m) | Seq10 t_err (%) | Seq10 r_err (°/100m) | Seq10 ATE (m) |
|---|---|---|---|---|---|---|---|
| KITTI Odom | 640x192 | 7.43 | 2.46 | 26.15 | 9.82 | 3.87 | 15.51 |
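In the odometry table, t_err and r_err follow the KITTI odometry benchmark convention (average translational drift in % and rotational drift in degrees per 100 m over sub-sequences of 100 m to 800 m), while ATE is the absolute trajectory error: the root-mean-square distance between estimated and ground-truth camera positions after alignment. The sketch below computes ATE for trajectories that are already aligned; `ate_rmse` is a hypothetical helper written for illustration, and the alignment step itself is assumed to have been done beforehand.

```python
import math
from typing import Sequence, Tuple

Position = Tuple[float, float, float]


def ate_rmse(gt: Sequence[Position], est: Sequence[Position]) -> float:
    """Root-mean-square of per-frame position errors (same units as the input).

    Assumes `est` has already been aligned to `gt` (e.g. with a 7-DoF
    similarity transform for monocular methods) and that frames correspond 1:1.
    """
    if len(gt) == 0 or len(gt) != len(est):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [sum((g - e) ** 2 for g, e in zip(pg, pe)) for pg, pe in zip(gt, est)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.3), (2.0, 0.4, 0.0)]
    print(f"ATE: {ate_rmse(gt, est):.4f} m")
```

Because ATE squares each per-frame error, a few frames with large drift dominate the result, which is one reason it is reported alongside the segment-based t_err and r_err.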

Create a ./checkpoints/ folder and place the pretrained models inside it.

- KITTI Raw Dataset: You can download the KITTI raw dataset and convert the PNG images to JPEG following the instructions in Monodepth2:

```bash
wget -i splits/kitti_archives_to_download.txt -P kitti_data/
cd kitti_data
unzip "*.zip"
cd ..
find kitti_data/ -name '*.png' | parallel 'convert -quality 92 -sampling-factor 2x2,1x1,1x1 {.}.png {.}.jpg && rm {}'
```

We also need the pre-computed segmentation images provided by TriDepth for training (they are not needed for evaluation). Download them from here and organize the dataset as follows:

```
kitti_raw
├── 2011_09_26
│   ├── 2011_09_26_drive_0001_sync
│   ├── ...
│   ├── calib_cam_to_cam.txt
│   ├── calib_imu_to_velo.txt
│   └── calib_velo_to_cam.txt
├── ...
├── 2011_10_03
│   ├── ...
└── segmentation
    ├── 2011_09_26
    ├── ...
    └── 2011_10_03
```

- KITTI Odometry Dataset: Download the KITTI odometry dataset (color, 65GB) and the ground truth poses, and organize the dataset as follows:

```
kitti_odom
├── poses
│   ├── 00.txt
│   ├── ...
│   └── 10.txt
└── sequences
    ├── 00
    ├── ...
    └── 21
```
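Each file in `poses/` follows the KITTI odometry format: one line per frame with 12 numbers, the row-major 3x4 matrix [R | t] that maps points from that frame's left-camera coordinates into the coordinates of frame 0. The sketch below parses such a file and extracts camera positions, which is what trajectory plots and ATE use; `parse_kitti_poses` and `camera_positions` are hypothetical names written for illustration.

```python
from typing import List, Tuple

Matrix3x4 = List[List[float]]


def parse_kitti_poses(text: str) -> List[Matrix3x4]:
    """Parse a KITTI odometry pose file into 3x4 [R | t] matrices.

    Each non-empty line holds 12 numbers: the first three rows of a 4x4
    homogeneous transform from frame i to frame 0, flattened row by row.
    """
    poses: List[Matrix3x4] = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue
        values = [float(v) for v in line.split()]
        if len(values) != 12:
            raise ValueError(f"line {line_no}: expected 12 values, got {len(values)}")
        poses.append([values[0:4], values[4:8], values[8:12]])
    return poses


def camera_positions(poses: List[Matrix3x4]) -> List[Tuple[float, float, float]]:
    """The translation column of each pose is the camera position in frame-0 coordinates."""
    return [(p[0][3], p[1][3], p[2][3]) for p in poses]


if __name__ == "__main__":
    sample = (
        "1 0 0 0 0 1 0 0 0 0 1 0\n"
        "1 0 0 0.5 0 1 0 -0.1 0 0 1 2.0\n"
    )
    print(camera_positions(parse_kitti_poses(sample)))
```

For a real sequence, read the file first, e.g. `parse_kitti_poses(Path("kitti_odom/poses/09.txt").read_text())`.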

On KITTI Raw:

```bash
python train.py --config configs/kitti_raw.yaml
```

On KITTI Odometry:

```bash
python train.py --config configs/kitti_odom.yaml
```

This project uses the yacs configuration library. You can customize the configuration structure in `./utils/config/defaults.py` and the configuration values in `./configs/*.yaml`.
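The evaluation commands in this README append `key value` pairs after the YAML file (e.g. `load_weights_folder checkpoints/KITTI eval.batch_size 1`). This matches the yacs pattern of `CfgNode.merge_from_list`, where dotted keys select nested options and string values are decoded as Python literals; we assume, without having checked the scripts, that they forward these trailing arguments that way. The stdlib sketch below mimics that behaviour on plain nested dicts to show how an override is resolved; `apply_overrides` and the default values are hypothetical, and the type check is a simplified stand-in for yacs's own.

```python
import ast
from typing import Any, Dict, List


def apply_overrides(cfg: Dict[str, Any], opts: List[str]) -> Dict[str, Any]:
    """Apply `key value` pairs such as `eval.batch_size 1` to a nested dict in place."""
    if len(opts) % 2 != 0:
        raise ValueError("overrides must come in key/value pairs")
    for full_key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = full_key.split(".")
        node = cfg
        for key in parents:
            if key not in node:
                raise KeyError(f"unknown config section: {full_key}")
            node = node[key]
        if leaf not in node:
            raise KeyError(f"unknown config key: {full_key}")
        try:
            # "1" -> 1, "0.5" -> 0.5, "True" -> True, "[1, 2]" -> [1, 2]
            value = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            # Paths and bare words such as "checkpoints/KITTI" stay strings.
            value = raw
        old = node[leaf]
        if old is not None and type(value) is not type(old):
            raise TypeError(f"{full_key}: expected {type(old).__name__}, got {type(value).__name__}")
        node[leaf] = value
    return cfg


if __name__ == "__main__":
    # Hypothetical defaults; the real ones live in ./utils/config/defaults.py.
    cfg = {"load_weights_folder": "", "eval": {"batch_size": 16, "split": "eigen"}}
    apply_overrides(cfg, ["load_weights_folder", "checkpoints/KITTI",
                          "eval.batch_size", "1", "eval.split", "eigen_benchmark"])
    print(cfg)
```

Rejecting unknown keys and type changes, as yacs does, means a typo such as `eval.batchsize` fails loudly instead of being silently ignored.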

First, download the KITTI ground truth and the improved ground truth from here, and put them into ./split/eigen and ./split/eigen_benchmark, respectively. You can also obtain them by following the instructions provided in Monodepth2.
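For reference, Monodepth2's depth evaluation on the Eigen split keeps only pixels inside a fixed fractional crop whose ground-truth depth lies between 1e-3 m and 80 m, and, for scale-ambiguous monocular predictions, rescales each prediction by median(gt) / median(pred) before clamping it to the same range. We assume `evaluate_depth.py` follows this protocol but have not confirmed it; the sketch below only illustrates the steps, with hypothetical helper names and plain Python lists standing in for image arrays.

```python
import statistics
from typing import List, Sequence, Tuple

MIN_DEPTH, MAX_DEPTH = 1e-3, 80.0
# Fractional crop (top, bottom, left, right) that Monodepth2 applies on the Eigen split.
EIGEN_CROP = (0.40810811, 0.99189189, 0.03594771, 0.96405229)

Grid = Sequence[Sequence[float]]


def eigen_valid_pairs(gt: Grid, pred: Grid) -> Tuple[List[float], List[float]]:
    """Keep pixels inside the crop whose ground-truth depth lies in (MIN_DEPTH, MAX_DEPTH).

    `gt` and `pred` are depth maps (rows of floats) of the same size; sparse
    LiDAR ground truth uses 0 for pixels without a measurement.
    """
    h, w = len(gt), len(gt[0])
    top, bottom = int(EIGEN_CROP[0] * h), int(EIGEN_CROP[1] * h)
    left, right = int(EIGEN_CROP[2] * w), int(EIGEN_CROP[3] * w)
    gt_vals, pred_vals = [], []
    for y in range(top, bottom):
        for x in range(left, right):
            if MIN_DEPTH < gt[y][x] < MAX_DEPTH:
                gt_vals.append(gt[y][x])
                pred_vals.append(pred[y][x])
    return gt_vals, pred_vals


def median_scale(gt_vals: List[float], pred_vals: List[float]) -> Tuple[List[float], float]:
    """Rescale scale-ambiguous predictions by median(gt) / median(pred), then clamp."""
    ratio = statistics.median(gt_vals) / statistics.median(pred_vals)
    scaled = [min(max(p * ratio, MIN_DEPTH), MAX_DEPTH) for p in pred_vals]
    return scaled, ratio
```

The metrics are then computed per image on the rescaled pairs and averaged over the split. In Monodepth2, the crop is applied only to the `eigen` split; other splits simply mask out pixels without ground truth.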

- Depth estimation results using the KITTI Eigen split:

```bash
python evaluate_depth.py --config configs/kitti_raw.yaml load_weights_folder checkpoints/KITTI eval.batch_size 1
```

- Depth estimation results using the improved KITTI ground truth:

```bash
python evaluate_depth.py --config configs/kitti_raw.yaml \
    load_weights_folder checkpoints/KITTI \
    eval.batch_size 1 \
    eval.split eigen_benchmark
```

- Visual odometry evaluation using Seqs. 09-10:

```bash
python evaluate_pose.py --config configs/kitti_odom.yaml \
    load_weights_folder checkpoints/KITTI_Odom \
    eval.split odom_09

python evaluate_pose.py --config configs/kitti_odom.yaml \
    load_weights_folder checkpoints/KITTI_Odom/ \
    eval.split odom_10
```

- To compute evaluation results and visualize the trajectories, run:

```bash
python ./utils/kitti_odom_eval/eval_odom.py --result=checkpoints/KITTI_Odom/ --align='7dof'
```

You can refer to `./eval.sh` for more information.
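Monocular pose networks recover trajectories only up to scale, so before computing ATE the estimated positions are commonly aligned to the ground truth with a 7-DoF similarity transform (3 rotation, 3 translation, 1 scale). The sketch below implements the standard closed-form solution of Umeyama (1991) with NumPy and then computes the position RMSE. We have not verified that `eval_odom.py` implements its `'7dof'` option exactly this way; `umeyama_sim3` and `aligned_ate` are hypothetical names.

```python
import numpy as np


def umeyama_sim3(src: np.ndarray, dst: np.ndarray):
    """Similarity transform (s, R, t) minimising sum_i ||dst_i - (s R src_i + t)||^2.

    src, dst: (N, 3) arrays of corresponding positions (N >= 3, not collinear).
    """
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 3:
        raise ValueError("src and dst must both have shape (N, 3)")
    n = src.shape[0]
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_src, dst - mu_dst
    cov = xd.T @ xs / n                      # 3x3 cross-covariance
    u, d, vt = np.linalg.svd(cov)
    sign = np.eye(3)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        sign[2, 2] = -1.0                    # avoid returning a reflection
    rot = u @ sign @ vt
    var_src = (xs ** 2).sum() / n
    scale = float(np.trace(np.diag(d) @ sign) / var_src)
    trans = mu_dst - scale * rot @ mu_src
    return scale, rot, trans


def aligned_ate(gt_xyz: np.ndarray, est_xyz: np.ndarray) -> float:
    """Align est to gt with a 7-DoF transform, then return the position RMSE."""
    s, r, t = umeyama_sim3(est_xyz, gt_xyz)
    aligned = s * est_xyz @ r.T + t          # apply s * R * p + t to every row
    return float(np.sqrt(((aligned - gt_xyz) ** 2).sum(axis=1).mean()))
```

With `N x 3` arrays of ground-truth and predicted positions (for instance the translation columns of the KITTI pose files), `aligned_ate(gt, est)` returns the ATE in the units of the ground truth. A 6-DoF alignment would instead fix the scale to 1.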

If you find our work useful in your research, please consider citing our paper:

```bibtex
@article{feng2024scipad,
  title={SCIPaD: Incorporating Spatial Clues into Unsupervised Pose-Depth Joint Learning},
  author={Feng, Yi and Guo, Zizhan and Chen, Qijun and Fan, Rui},
  journal={IEEE Transactions on Intelligent Vehicles},
  year={2024},
  publisher={IEEE}
}
```