Code for the CVPR 2023 paper "Regularizing Second-Order Influences for Continual Learning".

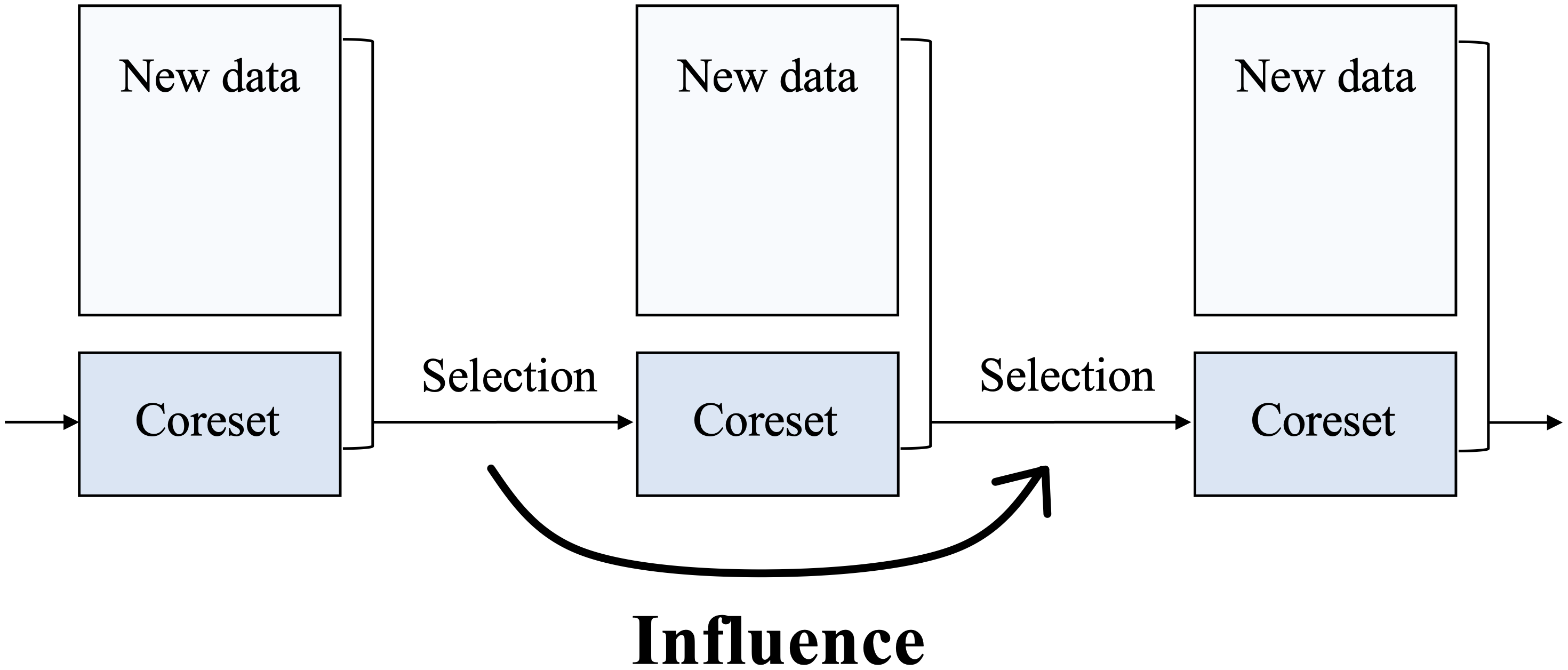

In continual learning, earlier coreset selection exerts a profound influence on subsequent steps through the data flow. Our proposed scheme regularizes the future influence of each selection. In its absence, a greedy selection strategy would degrade over time.

    pip install -r requirements.txt

Please specify the CUDA and cuDNN versions for jax explicitly. If you are using CUDA 10.2 or older, you will also need to manually choose older versions of tf2jax and neural-tangents.
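After installation, it can help to confirm which backend jax actually picked up, since a CUDA/cuDNN mismatch often shows up as a silent fallback to CPU. The following is a minimal sketch, not part of this repository; the helper name `describe_jax_backend` is hypothetical.

```python
import importlib.util


def describe_jax_backend():
    """Return a short description of the installed jax and its active backend."""
    # Avoid an ImportError if jax is missing from the current environment.
    if importlib.util.find_spec("jax") is None:
        return "jax is not installed"
    import jax

    # default_backend() reports e.g. "cpu" or "gpu"; devices() lists the visible devices.
    return f"jax {jax.__version__} on {jax.default_backend()}: {jax.devices()}"


print(describe_jax_backend())
```

If the reported backend is `cpu` on a GPU machine, the installed jax build probably does not match the local CUDA/cuDNN versions.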

Train and evaluate models through utils/main.py. For example, to train our model on Split CIFAR-10 with a fixed-size buffer of 500 samples, run:

    python utils/main.py --model soif --load_best_args --dataset seq-cifar10 --buffer_size 500

To compare with the result of vanilla influence functions, simply run:

    python utils/main.py --model soif --load_best_args --dataset seq-cifar10 --buffer_size 500 --nu 0

More datasets and methods are supported. You can find the available options by running:

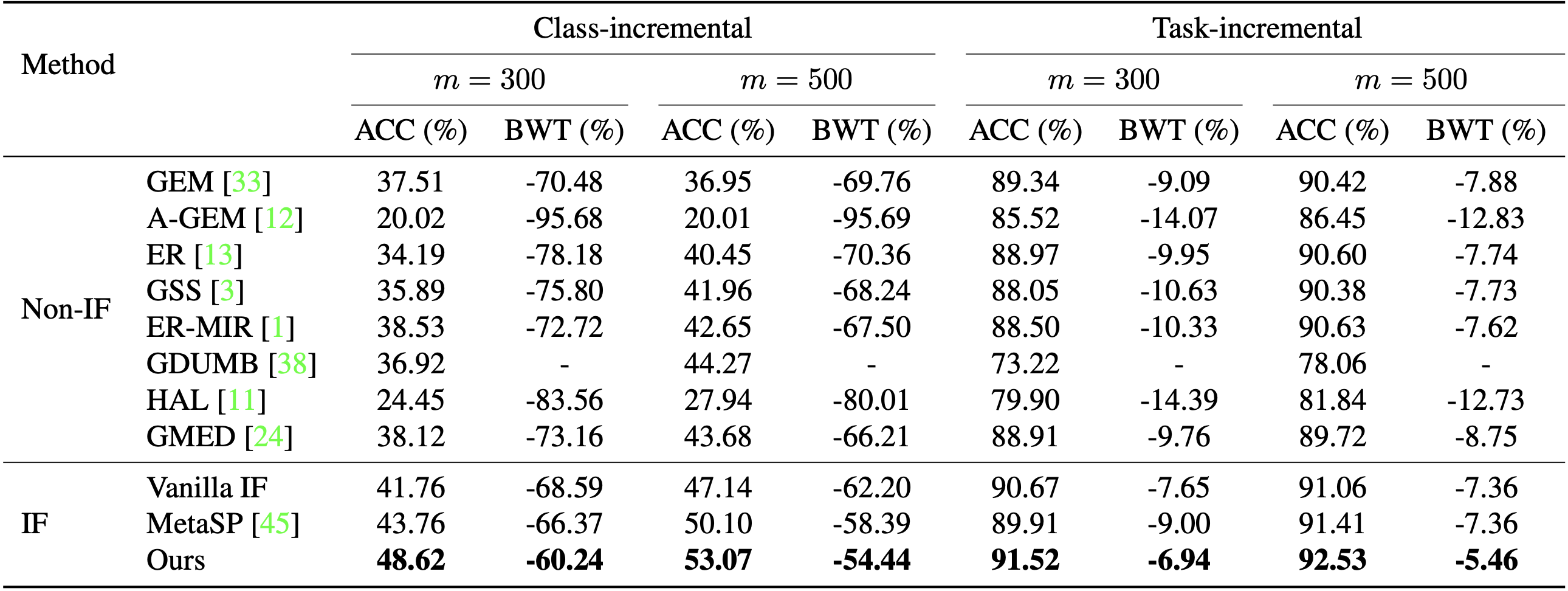

python utils/main.py --helpThe following results on Split CIFAR-10 were obtained with single NVIDIA 2080 Ti GPU:
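To reproduce a comparison of this kind, the two runs described earlier (our model, and vanilla influence functions via `--nu 0`) can be scripted. This is a minimal sketch that assumes it is launched from the repository root; the helpers `build_command` and `run_comparison` are hypothetical and only assemble the flags shown in this README.

```python
import subprocess


def build_command(dataset="seq-cifar10", buffer_size=500, nu=None):
    """Assemble the utils/main.py invocation using the flags from this README."""
    cmd = [
        "python", "utils/main.py",
        "--model", "soif",
        "--load_best_args",
        "--dataset", dataset,
        "--buffer_size", str(buffer_size),
    ]
    # --nu is only passed when given; --nu 0 selects vanilla influence functions.
    if nu is not None:
        cmd += ["--nu", str(nu)]
    return cmd


def run_comparison(dry_run=True):
    """Print both commands and, unless dry_run is set, execute them in sequence."""
    runs = {
        "soif": build_command(),
        "vanilla influence": build_command(nu=0),
    }
    for name, cmd in runs.items():
        print(f"[{name}] {' '.join(cmd)}")
        if not dry_run:
            subprocess.run(cmd, check=True)
    return runs


# Dry run by default: only prints the two commands.
run_comparison(dry_run=True)
```

Calling `run_comparison(dry_run=False)` would launch both training runs one after the other.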

If you find this code useful, please consider citing:

    @inproceedings{sun2023regularizing,
      title={Regularizing Second-Order Influences for Continual Learning},
      author={Sun, Zhicheng and Mu, Yadong and Hua, Gang},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={20166--20175},
      year={2023},
    }

Our implementation is based on Mammoth. We also refer to bilevel_coresets and Example_Influence_CL.