-

The commands below are quite specific. This means that the following things should be avoided

-

DO NOT use sudo where not specified. If you are facing issues, re-evaluate your actions and its consequences. Make sure that you followed the steps.

-

Make sure that you are using virtual environment where specified. Virtual environment, while it seems insignificant, is actually mandatory.

git clone https://github.com/clearbothk/botmlcode.gitcurl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

python get-pip.pyAfter installation is finished, restart the Jetson Nano

pip install virtualenv --user

cd ~/botmlcode

virtualenv .venv -p python3Then, to activate the virtual environment, run:

cd ~/botmlcode

source .venv/bin/activatepip install numpycd ~

git clone https://github.com/opencv/opencv.git

git clone https://github.com/opencv/opencv_contrib.gitIf the clone for opencv is slow, download the zip from GitHub. Extract and rename the folder to opencv

Useful links when doing that:

https://itsfoss.com/mount-exfat/

Install un-installed dependencies in Jetson Nano

sudo apt install python3-dev

sudo apt-get install libjpeg-dev libpng-dev libtiff-dev

sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev

sudo apt-get install libxvidcore-dev libx264-dev

sudo apt-get install libgtk-3-dev

sudo apt-get install libatlas-base-dev gfortrancd ~/opencv

mkdir build

cmake -D CMAKE_BUILD_TYPE=RELEASE \

-D WITH_CUDA=ON \

-D CUDA_ARCH_PTX="" \

-D CUDA_ARCH_BIN="5.3,6.2,7.2" \

-D WITH_CUBLAS=ON \

-D WITH_LIBV4L=ON \

-D BUILD_opencv_python3=ON \

-D BUILD_opencv_python2=OFF \

-D BUILD_opencv_java=OFF \

-D WITH_GSTREAMER=ON \

-D WITH_GTK=ON \

-D BUILD_TESTS=OFF \

-D BUILD_PERF_TESTS=OFF \

-D BUILD_EXAMPLES=OFF \

-D OPENCV_ENABLE_NONFREE=ON \

-D OPENCV_EXTRA_MODULES_PATH=/home/`whoami`/opencv_contrib/modules ..Now make OpenCV

# Use nproc to check no. of threads

nproc

# 4

make -j4Once its built, go to the botmlcode folder and symlink the built OpenCV python:

cd .venv/lib/python3.6/site-packages

ln -s /home/clearbot/opencv/build/lib/python3/cv2.cpython-36m-aarch64-linux-gnu.so cv2.soPull the model data using Git LFS. In the botmlcode repo, run:

git lfs pullInstall Git LFS using:

sudo apt install git-lfs

git lfs installNext, install DroneKit and MAVProxy:

References:

https://dronekit-python.readthedocs.io/en/latest/guide/quick_start.html

https://brisbaneroboticsclub.id.au/connect-nvidia-nano-to-pixhawk/

sudo apt install libxml2-dev libxslt1-dev

# Make sure that you have activated the .venv using: $ source .venv/bin/activate

pip install matplotlib lxml

pip install future pymavlink mavproxy

pip install dronekit dronekit-sitlInstall MQTT for ThingSpeak

pip install paho-mqttwe are using DroneKit-Python API as an Onboard app between Jetson Nano and Pixhawk.

Make sure your linux userid has the permission to use your tty port device

connection port = dev/ttyTHS1

Assume our userid is user

sudo usermod -a -G dialout userlet's try running testing.py to get a brief introduction with Dronekit ( in botmlcode/ directory )

python testing.pywe are aware that we need to wait for around 10 seconds or more to get the above's print statement be executed. At first, we though this was an issue( listed below )

- Note ( 14th July, 2020): Optimise Pixhawk integration to the Jetson #5 Track the issue here

In pixhawk.py script, below is the line code to establish Dronekit connectivity to the connected device. it is recommended to set wait_ready=True to waits until some vehicle parameters and attributes are populated so that it is initialized successfully.

def __init__(self, connection_port="/dev/ttyTHS1", baud=57600):

try:

self.vehicle = connect(connection_port, wait_ready=True, baud=baud)

self.vehicle.mode = VehicleMode("MANUAL")

except serialutil.SerialException as e:

logging.error(e)Thus we need to first initialize the dronekit.connect() and make it as a constructor rather than repeatedly run the scripts so that we do not need to re run the script for everytime the Dronekit attributes functions

get called.

The intuition behind Botmlcode is to integrate Clearbot AI vision, Clearbot autonomous system, and the reporting system.

To run the botmlcode main.py, we have the flexibility to conifgure which AI model that we want to use by using -m tiny or full. moreover, we also have the option to generate the video out and actiavte the debug fucntion by indcating -v True and --debug True respectively.

There are two main function inside Botmlcode:

-

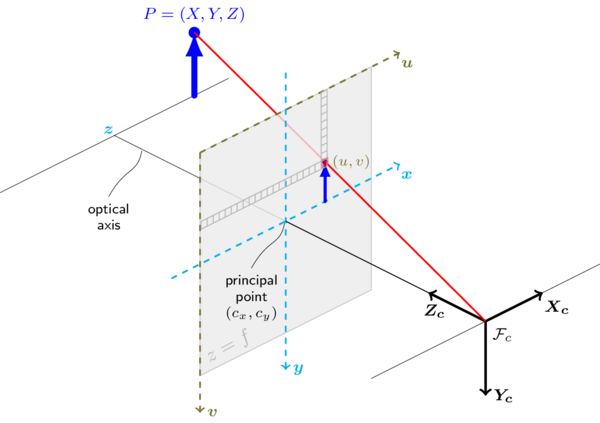

The first one is the Object detection feature where the functions returns a list of all objects that are detected and also the Angle between the object and the optical axis of the camera using

get_angle()function explained on theAngle Measurement sectionbelow. -

The second function is the

pixhawk_controller(), make sure Jetson Nano is already configured with Pixhawk. the function return a Json log file where it consists of various data and status about the ClearBot such as the GPS location, velocity, battery status, and etc.

Both functions runs using Multiprocessing module to prevent dependency as it will risk the performances of the AI model.

- OpenCV (latest version from the repo: see setup instructions for details)

- Python 3.6 or above

- Numpy (latest version)

Make sure the clearbot camera has already been calibrated. If you are using a different camera or you want to calibrate the camera again, click here for reference. Make sure to calculate the distance between the camera to the calibration checkboard and calculate the half of the value of horizontal length of the calibration checkboard. You can find the Check_board.pdf image inside imgsrc/ directory.

Below is the get_angle() function. It is located in detector.py

def get_angle(self, x_axis):

real_distance = 0

angle = 0

if (x_axis < 316):

x_axis = 316 - x_axis

real_distance = (14.5 * x_axis)/316

angle = np.degrees(np.math.atan2(real_distance, 45))

elif (x_axis > 316):

x_axis = x_axis - 316

real_distance = (14.5 * x_axis)/316

angle = np.degrees(np.math.atan2(real_distance, 45))

else:

angle = 0

return angle-

Let the distance between the camera to the calibration checkboard as X

-

Let the half of the value its horizontal length of the calibration checkboard as Y

In this current configuration, X = 45 and Y = 14.5. By using the inverse tangent function we can get the maximum angle that the camera gets. We can use NumPy library by using np.degrees(np.math.atan2(real_distance, 45)). real_distance depends on where the object at.

If the object's x_axis is located in the 2nd and 3rd quadrant, then the real_distance is:

(Y * ((horizantal pixel length / 2) - x_axis)/ (horizantal pixel length / 2).

On the other hand, if the if the object's x_axis is located in the 1st and 4th quadrant, then the real_distance is:

(Y * (x_axis - (horizantal pixel length / 2)))/ (horizantal pixel length / 2).