Dataset: You can download the dataset here.

Presentation Deck: View the presentation deck here.

A digital wallet company has a large amount of online transaction data. The company wants to acknowledge the limitations and uncertainties of this data, such as inaccurate or missing crucial information. At the same time, the company wants to use the online transaction data to detect online payment fraud that harms its business.

Create a data pipeline that can be used for analysis and reporting, to determine whether the online transaction data is of good quality and can be used to detect fraud in online transactions.

- Create an automated pipeline that handles batch and stream data processing from various data sources into data warehouses and data marts.

- Create a visualization dashboard to obtain meaningful insights from the data, enabling informed business decisions.
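One way to make "inaccurate or missing crucial information" measurable is a small quality report over the raw rows. The sketch below is a minimal, hypothetical example and not the project's actual checks: it assumes each transaction is a dict with the columns `type`, `amount`, `nameOrig` and `nameDest` (assumed names, suggested by the Dim Type, Dim Origin and Dim Dest tables later in this README), and it counts missing values per column and negative amounts.

```python
from dataclasses import dataclass, field

# Hypothetical column names; the real dataset schema may differ.
REQUIRED_FIELDS = ("type", "amount", "nameOrig", "nameDest")


@dataclass
class QualityReport:
    total: int = 0
    missing: dict = field(default_factory=dict)  # column -> number of missing values
    negative_amount: int = 0


def check_quality(rows):
    """Count missing required values and negative amounts in a list of dict rows."""
    report = QualityReport(missing={col: 0 for col in REQUIRED_FIELDS})
    for row in rows:
        report.total += 1
        for col in REQUIRED_FIELDS:
            value = row.get(col)
            if value is None or value == "":
                report.missing[col] += 1
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            report.negative_amount += 1
    return report


if __name__ == "__main__":
    sample = [
        {"type": "TRANSFER", "amount": 181.0, "nameOrig": "C1", "nameDest": "C2"},
        {"type": "CASH_OUT", "amount": -5.0, "nameOrig": "", "nameDest": "C3"},
    ]
    print(check_quality(sample))
```

In the actual pipeline, checks like these would more naturally live in the Spark or dbt transformation step; the plain Python version only shows the idea.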

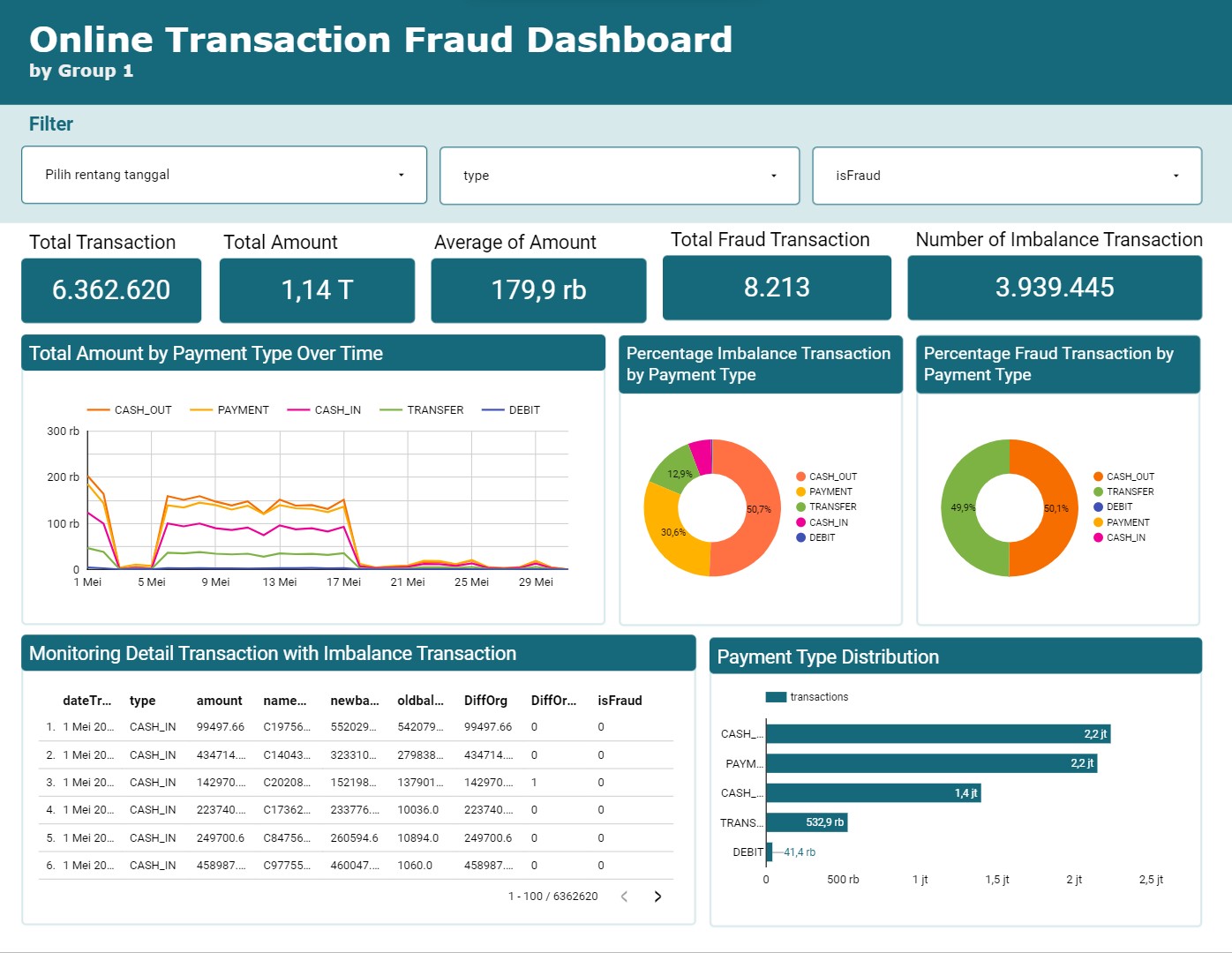

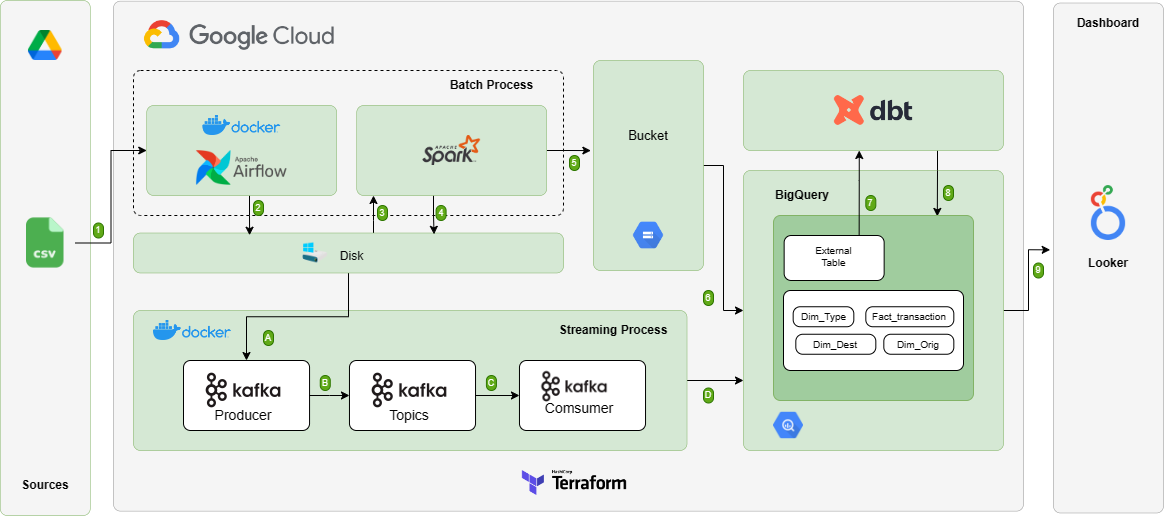

Image 1. Pipeline Architecture


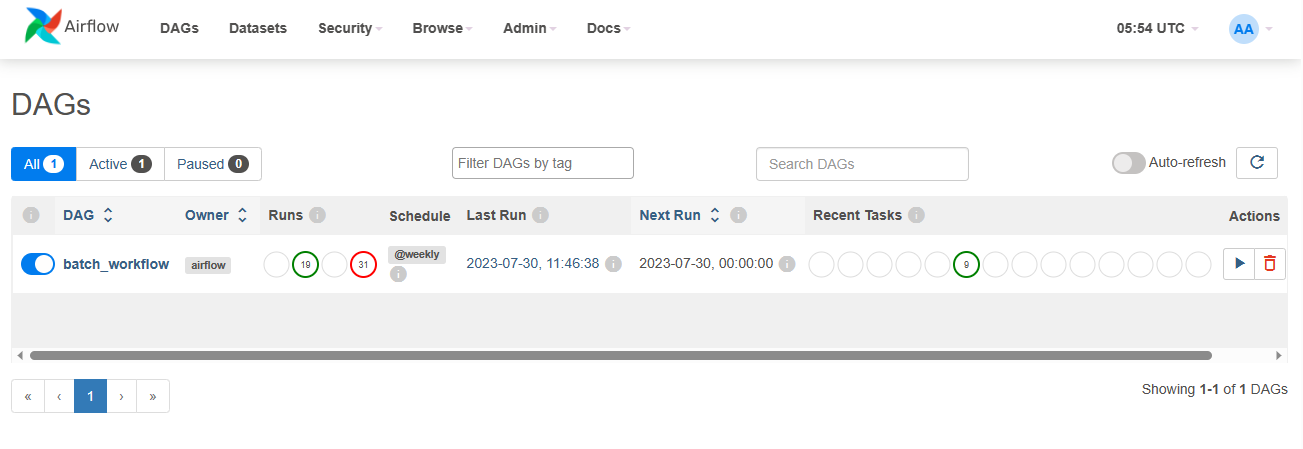

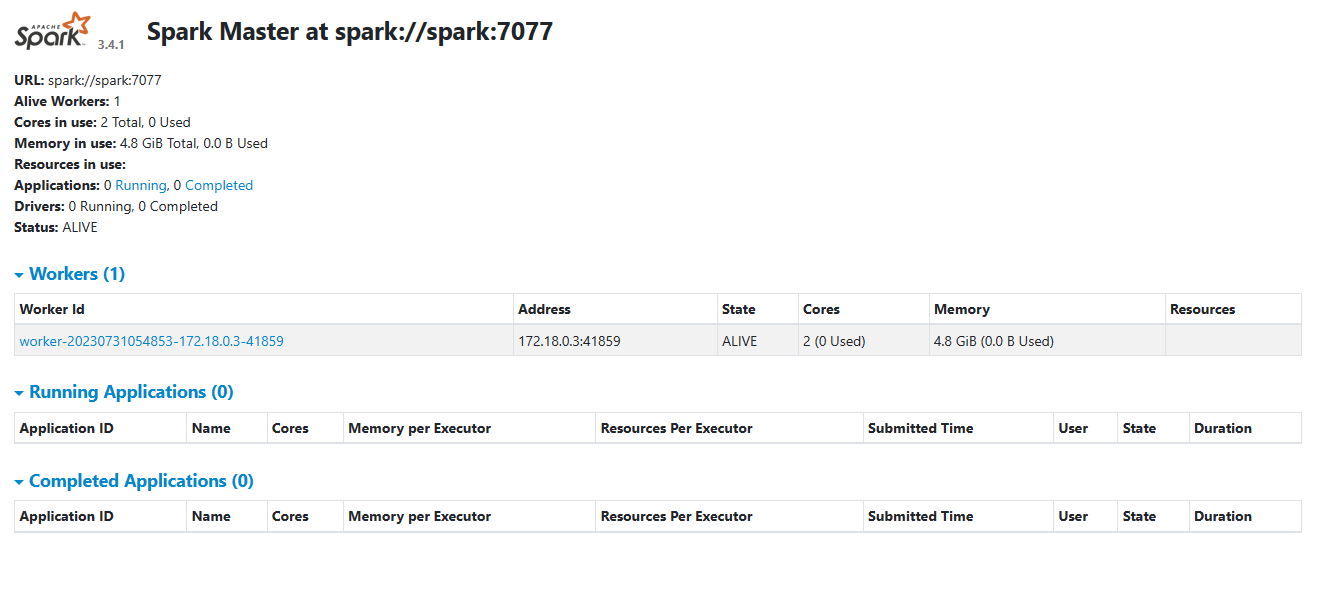

- Orchestration: Airflow

- Transformation: Spark, dbt

- Streaming: Kafka

- Container: Docker

- Storage: Google Cloud Storage

- Warehouse: BigQuery

- Data Visualization: Looker

final-project-fraud-transaction-pipeline/
|-- dags
| |-- batch_dag.py
| |-- download.sh
|-- datasets
|-- dbt
|-- kafka
|-- logs
|-- service-account
| |-- service-account.json
|-- spark
|-- terraform

- dags, this folder contains the Airflow DAG that runs the batch processing fraud transaction pipeline.

- datasets, this folder stores the downloaded datasets.

- dbt, this folder contains the dbt transformations.

- kafka, this folder runs the stream processing fraud transaction pipeline.

- service-account, this folder stores the JSON key file of the service account.

- spark, this folder contains the Spark transformations.

- terraform, this folder contains the Terraform configuration that provisions the GCP resources (GCS bucket and BigQuery datasets).

Docker Engine & Docker Compose: https://docs.docker.com/engine/install/

This project uses Google Cloud Platform (GCP), with Google Cloud Storage for data storage and Google BigQuery as the data warehouse.

- Create a GCP Account: To use GCP services, you need a GCP account. If you don't have one, sign up at https://cloud.google.com/free to create a free account.

- Set Up a Project: Create a new GCP project in the GCP Console: https://console.cloud.google.com/projectcreate

- Enable Billing: Make sure your GCP project has billing enabled, as some services like BigQuery may not work without billing enabled.

- Create a Service Account: In the GCP Console, go to "IAM & Admin" > "Service Accounts" and create a new service account. Download the service account key as a JSON file and save it securely on your local machine.

- Grant Permissions: Assign the service account the permissions it needs to access BigQuery datasets and Google Cloud Storage.

git clone https://github.com/graceyudhaaa/final-project-fraud-transaction-pipeline.git && cd final-project-fraud-transaction-pipeline

Create a folder named service-account. Create a GCP project, then create a service account with the Editor role. Download the JSON credential, rename it to service-account.json, and store it in the service-account folder.
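Before starting the containers, it can help to confirm that the key file is in place and looks like a GCP service account key. The following is a minimal sketch, not part of the repository: it relies only on fields that standard GCP service account JSON keys contain (`type`, `project_id`, `private_key`, `client_email`) and assumes it is run from the repository root, so the path `service-account/service-account.json` resolves.

```python
import json
from pathlib import Path

# Fields present in a standard GCP service account JSON key.
REQUIRED_KEYS = ("type", "project_id", "private_key", "client_email")


def validate_service_account(path):
    """Return the project ID if the file looks like a GCP service account key."""
    data = json.loads(Path(path).read_text())
    missing = [key for key in REQUIRED_KEYS if not data.get(key)]
    if missing:
        raise ValueError(f"service account key is missing fields: {missing}")
    if data["type"] != "service_account":
        raise ValueError(f"unexpected key type: {data['type']!r}")
    return data["project_id"]


if __name__ == "__main__":
    # Assumes the script is run from the repository root.
    key_path = Path("service-account/service-account.json")
    if key_path.exists():
        print("project:", validate_service_account(key_path))
    else:
        print(f"{key_path} not found")
```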

- Install Terraform CLI

- Change directory to terraform by executing

cd terraform

- Initialize Terraform (set up the environment and install the Google provider)

terraform init

- Create the new infrastructure by applying the Terraform plan

terraform apply

- Check your GCP project for the newly created resources (GCS bucket and BigQuery datasets)

Alternatively, you can create the resources manually:

- Create a GCS bucket named final-project-lake and set the region to asia-southeast2

- Create two datasets in BigQuery named onlinetransaction_wh and onlinetransaction_stream

Move the service account key that was downloaded earlier into ./final-project-fraud-transaction-pipeline/service-account and rename it to service-account.json.

Go to the project directory and start the containers:

cd final-project-fraud-transaction-pipeline

docker-compose up

Then open localhost:8080 and localhost:8081 in your browser.

- Change directory to kafka

cd kafka

- Start the containers

docker-compose up

- Install the Python dependencies

pip install -r requirements.txt

- Copy the env.example file and rename it to .env

- Fill in the required information for the sender and receiver email
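To check that the `.env` file is filled in before starting the producer and consumer, a small loader like the one below could be used. This is a hypothetical stdlib sketch: the project may load the file differently (for example with a library such as python-dotenv), and the variable names `SENDER_EMAIL`, `SENDER_PASSWORD` and `RECEIVER_EMAIL` are assumptions; use the names defined in env.example.

```python
from pathlib import Path

# Hypothetical variable names; check env.example for the names the project uses.
REQUIRED = ("SENDER_EMAIL", "SENDER_PASSWORD", "RECEIVER_EMAIL")


def load_env(path=".env"):
    """Parse simple KEY=VALUE lines from a .env file into a dict.

    Blank lines, comment lines starting with '#', and lines without '=' are skipped;
    surrounding single or double quotes around values are stripped.
    """
    env = {}
    for raw in Path(path).read_text().splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def missing_settings(env):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED if not env.get(key)]


if __name__ == "__main__":
    if Path(".env").exists():
        print("missing settings:", missing_settings(load_env(".env")) or "none")
    else:
        print(".env not found; copy env.example to .env first")
```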

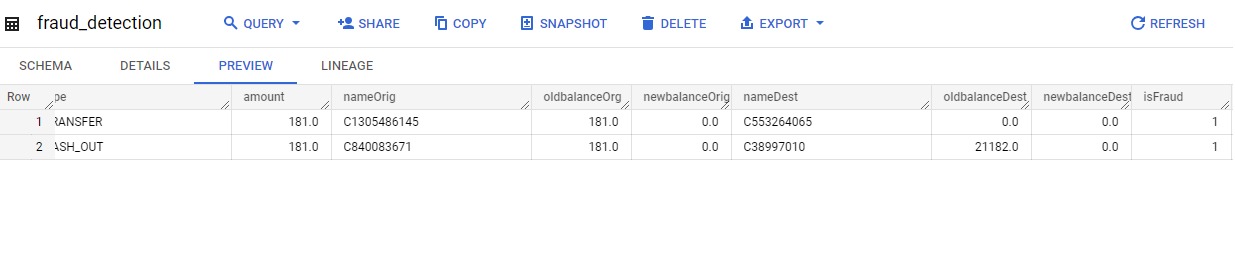

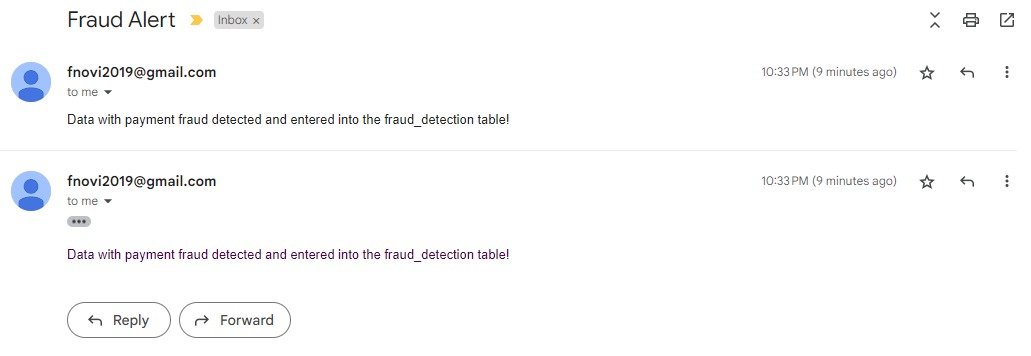

Run the producer and the consumer:

python producer.py

python consumer.py

Data for all transactions will be loaded into the record table in BigQuery. If any transaction is detected as fraud, it is also recorded in the detected_fraud table, and an automatic fraud notification email is sent.
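The consumer's routing behaviour described above (every transaction goes to the record table, fraud also goes to detected_fraud and triggers an email) can be sketched as follows. This is a hypothetical illustration, not the code in consumer.py: it assumes each message carries a fraud label under a key called `isFraud` (an assumed name), it returns table names instead of writing to BigQuery, and the SMTP host, port and credentials passed to `send_email` depend on your mail provider.

```python
import smtplib
from email.message import EmailMessage

# Hypothetical field name for the fraud label; the project's real key may differ.
FRAUD_FLAG = "isFraud"


def route(transaction):
    """Return the names of the tables a transaction should be written to."""
    tables = ["record"]  # every transaction is stored in the record table
    if transaction.get(FRAUD_FLAG) in (1, True, "1"):
        tables.append("detected_fraud")  # flagged transactions are also stored here
    return tables


def build_fraud_email(transaction, sender, receiver):
    """Build (but do not send) a fraud notification email for one transaction."""
    msg = EmailMessage()
    msg["Subject"] = "Fraud detected in online transaction"
    msg["From"] = sender
    msg["To"] = receiver
    details = "\n".join(f"{key}: {value}" for key, value in transaction.items())
    msg.set_content(f"A transaction was flagged as fraud:\n\n{details}")
    return msg


def send_email(msg, host, port, user, password):
    """Send msg over SMTP with implicit TLS; host and port depend on your mail provider."""
    with smtplib.SMTP_SSL(host, port) as server:
        server.login(user, password)
        server.send_message(msg)


if __name__ == "__main__":
    tx = {"type": "TRANSFER", "amount": 181.0, FRAUD_FLAG: 1}
    print(route(tx))
    print(build_fraud_email(tx, "sender@example.com", "receiver@example.com"))
```

Building the message separately from sending it keeps the notification content testable without a mail server.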

Image 4. Email Notification from Data that Detected Fraud


If the schema registry container exits with the following message:

INFO io.confluent.admin.utils.ClusterStatus - Expected 1 brokers but found only 0. Trying to query Kafka for metadata again

On Windows, try running the following command from a command prompt with administrator privileges (note that iisreset restarts Internet Information Services rather than resetting the firewall itself):

iisreset
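The message above means the schema registry could not find a running Kafka broker. A quick first check is whether the broker port accepts TCP connections at all. The sketch below assumes the broker is exposed on localhost:9092 (Kafka's conventional default, an assumption here); check kafka/docker-compose for the port this project actually maps.

```python
import socket


def broker_reachable(host="localhost", port=9092, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timeout, or unresolvable host
        return False


if __name__ == "__main__":
    # 9092 is an assumed port; adjust it to the mapping in kafka/docker-compose.
    print("broker reachable:", broker_reachable())
```

A successful connection only shows that something is listening on the port; the broker may still be starting up, so the schema registry can need a restart once the broker is ready.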

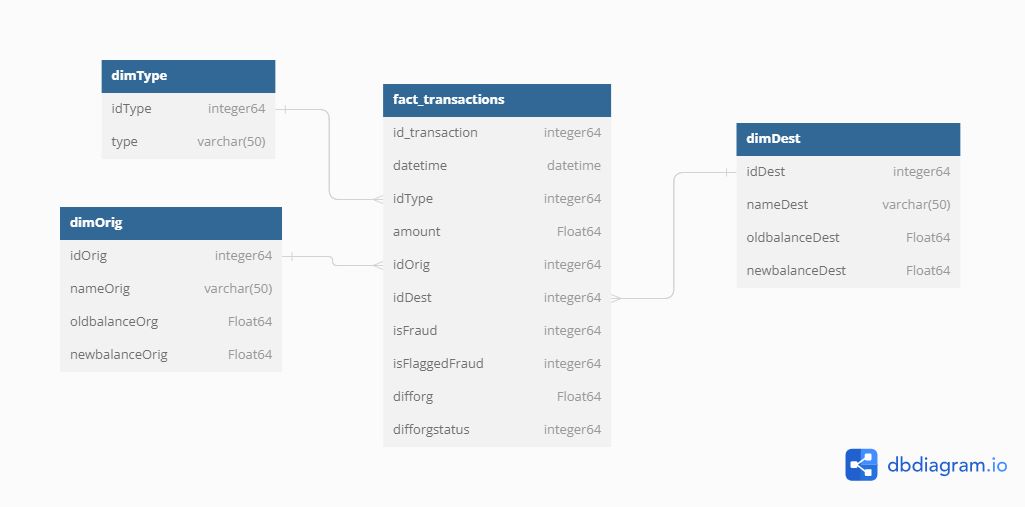

In this project, we use a star schema to define the data warehouse. The warehouse contains the following tables:

a. Dim Type

b. Dim Origin

c. Dim Dest

d. Fact Transaction

Here is the data warehouse schema that we developed.

Image 5. Data Warehouse Schema
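To illustrate how a star schema splits each transaction into dimension keys plus a fact row, here is a small in-memory sketch. In the project this modelling is done with Spark and dbt in BigQuery; the Python version below is only an illustration, and the source column names (`type`, `nameOrig`, `nameDest`, `amount`, `isFraud`) and surrogate-key scheme are assumptions, not the actual warehouse definition.

```python
from dataclasses import dataclass


@dataclass
class StarSchema:
    dim_type: dict          # transaction type -> surrogate key
    dim_origin: dict        # origin account -> surrogate key
    dim_dest: dict          # destination account -> surrogate key
    fact_transaction: list  # fact rows referencing the dimension keys


def _key(dim, value):
    """Return the surrogate key for value, assigning the next integer if it is new."""
    if value not in dim:
        dim[value] = len(dim) + 1
    return dim[value]


def build_star_schema(transactions):
    """Split flat transaction dicts (hypothetical column names) into dims and a fact table."""
    schema = StarSchema({}, {}, {}, [])
    for tx in transactions:
        schema.fact_transaction.append({
            "type_id": _key(schema.dim_type, tx["type"]),
            "origin_id": _key(schema.dim_origin, tx["nameOrig"]),
            "dest_id": _key(schema.dim_dest, tx["nameDest"]),
            "amount": tx["amount"],
            "is_fraud": tx.get("isFraud", 0),
        })
    return schema


if __name__ == "__main__":
    rows = [
        {"type": "TRANSFER", "nameOrig": "C1", "nameDest": "C2", "amount": 10.0, "isFraud": 1},
        {"type": "TRANSFER", "nameOrig": "C3", "nameDest": "C2", "amount": 5.0},
    ]
    print(build_star_schema(rows))
```

Repeated values (the same type or destination account) map to a single dimension row, which is what keeps the fact table narrow.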


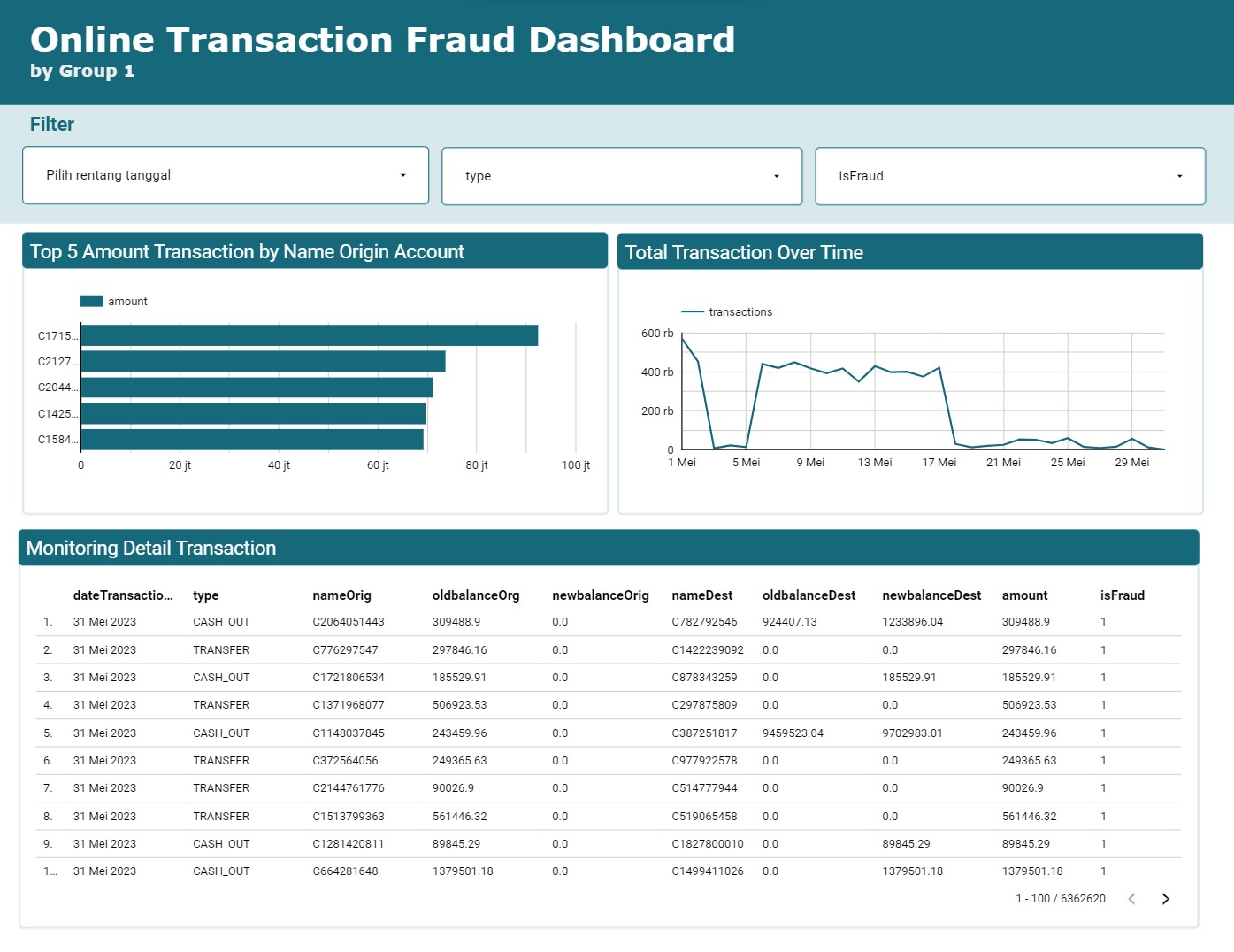

The outcome of this data pipeline project is a dashboard that gives users insight into fraudulent transactions.

Our dashboard is available at the following link: Online Transaction Fraud Dashboard