Automatic control of a UR3e 6-joint robot arm based on detected human hand gestures.

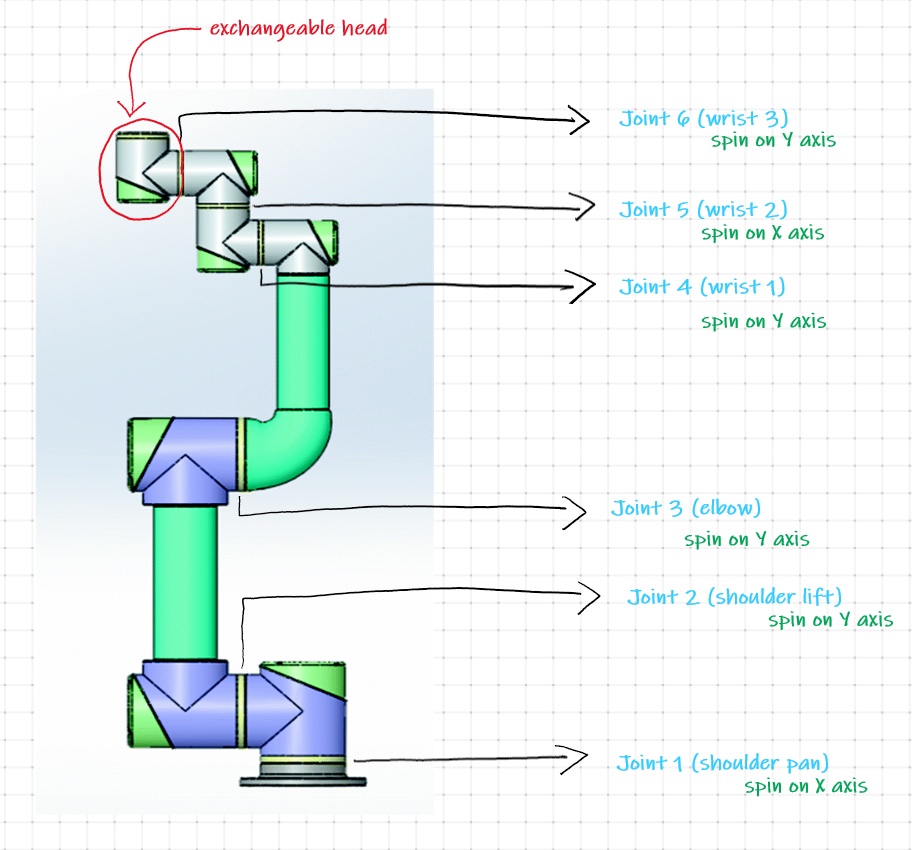

What is a UR3e 6-joint robot?

"The UR3e collaborative robot is a smaller collaborative table-top robot, perfect for light assembly tasks and automated workbench scenarios. The compact table-top cobot weighs only 24.3 lbs (11 kg), but has a payload of 6.6 lbs (3 kg), ±360-degree rotation on all wrist joints, and infinite rotation on the end joint."


Our Solution

- Create a custom Hand Gesture System based on human hand gestures, which are recognized by a trained neural network.

- Based on the detected gesture, instructions are sent to the robot in order to control it.

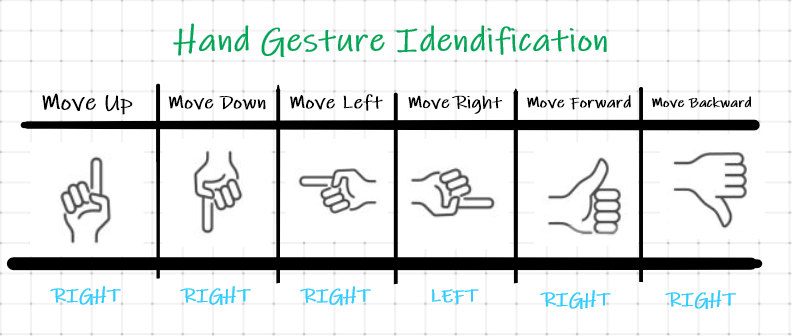

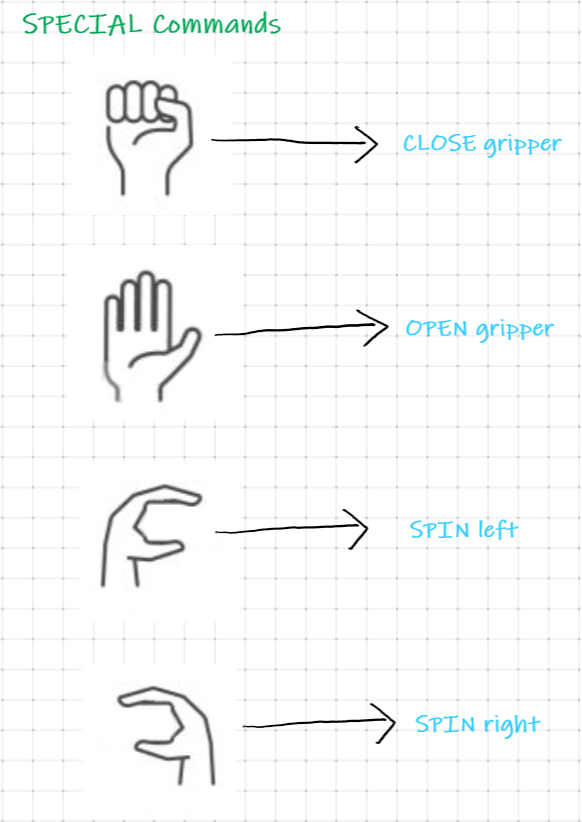

Gesture System

- Hand Gesture Movement System

- Hand Gesture **Base Rotation & Gripper Control** System

How it works

- First, the human interacting with the robot must specify, via a gesture from the `Hand Gesture Identification` table, the direction in which the TCP will move.

- Once the gesture has been detected, instructions are sent to the robot using the `ur_library.py` library, and the robot moves 1 cm at a time in the given direction.

- To stop the movement, simply stop indicating the gesture.

- To rotate the base in a different direction, use the `SPIN left/right` gesture from the `SPECIAL Commands` table.

- To `CLOSE/OPEN` the gripper, use the gestures presented in the `SPECIAL Commands` table.
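The gesture-to-movement rule described above can be sketched as a small lookup. This is only an illustration: the gesture labels (`LEFT`, `UP`, ...) and the axis assignment are hypothetical, since the actual labels live in the `Hand Gesture Identification` table, and `gesture_to_step` is not a function from this repository.

```python
from typing import Optional, Tuple

STEP_M = 0.01  # 1 cm per detected gesture, expressed in metres

# Hypothetical gesture labels and axis assignment (assumption, not from the repo).
GESTURE_TO_AXIS = {
    "LEFT": (-1, 0, 0),
    "RIGHT": (1, 0, 0),
    "FORWARD": (0, 1, 0),
    "BACKWARD": (0, -1, 0),
    "UP": (0, 0, 1),
    "DOWN": (0, 0, -1),
}


def gesture_to_step(
    gesture: Optional[str], step: float = STEP_M
) -> Optional[Tuple[float, float, float]]:
    """Return the TCP displacement for a movement gesture.

    Returns None when no gesture is shown or the label is not a movement
    gesture, which corresponds to "stop indicating the gesture to stop".
    """
    if gesture is None:
        return None
    axis = GESTURE_TO_AXIS.get(gesture)
    if axis is None:
        return None
    return tuple(component * step for component in axis)
```

Calling `gesture_to_step` once per processed frame gives the "1 cm at a time while the gesture is held" behaviour: as soon as the detector stops reporting a movement gesture, the function returns `None` and no further step is sent.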

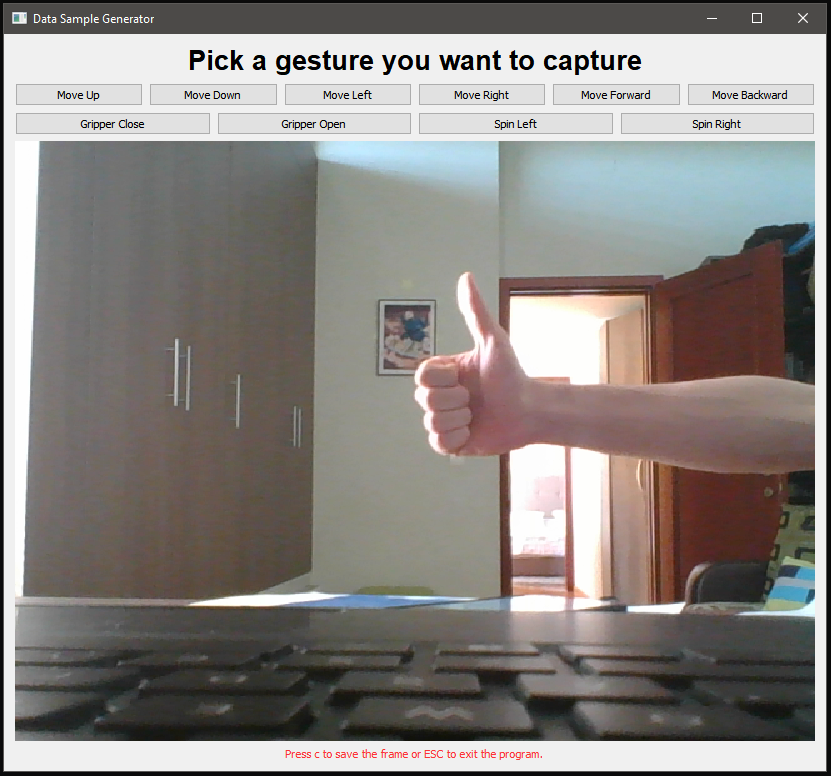

Component1: generate data samples (`generate_samples.py`)

Component2: train neural network (colab)

- https://colab.research.google.com/drive/1gl4ZZTF66uqQOuVOxGaAYMsDkpUI2mAB#scrollTo=7SFT3mUiW4rK

- Usage: follow the steps as presented in the colab, then download the created model.

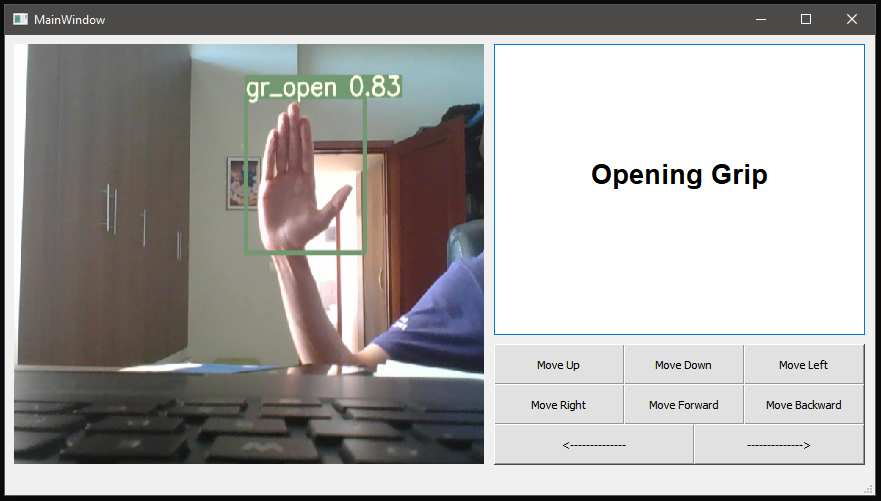

Component3: object detection (`detect.py`)

- Usage: used within Component5

- Scope: implement a continuous detection loop on the given frame and return the results.
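A continuous detection loop of this kind might look roughly like the sketch below. It does not reproduce `detect.py`: the function name `detection_loop`, the `(label, confidence)` result format, and the `min_confidence` threshold are all assumptions made for illustration. The detector is passed in as a callable so the loop can be tried without a camera or a trained model.

```python
from typing import Any, Callable, Iterable, Iterator, List, Optional, Tuple

Detection = Tuple[str, float]  # (gesture label, confidence); assumed format


def detection_loop(
    frames: Iterable[Any],
    detect_fn: Callable[[Any], List[Detection]],
    min_confidence: float = 0.5,
) -> Iterator[Optional[str]]:
    """Yield the most confident gesture label for each frame.

    Yields None for frames where nothing passes the confidence threshold,
    so the caller can treat that as "no gesture shown".
    """
    for frame in frames:
        detections = [d for d in detect_fn(frame) if d[1] >= min_confidence]
        if not detections:
            yield None
            continue
        label, _ = max(detections, key=lambda d: d[1])
        yield label


# Example with a stand-in detector (a dict lookup instead of a real model).
fake_results = {
    "frame1": [("UP", 0.9), ("DOWN", 0.6)],
    "frame2": [("UP", 0.2)],
}
for gesture in detection_loop(["frame1", "frame2"], fake_results.get):
    print(gesture)
```

In the real application, `frames` would be the camera stream and `detect_fn` a wrapper around the trained YOLOv5 model.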

Component4: ur3e library (`ur_library.py`)
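For orientation, a thin wrapper over python-urx could be sketched as follows. This is not the content of `ur_library.py`: the helper names `move_tcp_step` and `spin_base`, the acceleration and velocity defaults, and the IP address in the usage comment are assumptions. The helpers only rely on the `translate`, `getj`, and `movej` methods of a `urx.Robot` object, so any object exposing those methods works.

```python
import math


def move_tcp_step(robot, displacement, acc=0.1, vel=0.05):
    """Translate the TCP by `displacement` (dx, dy, dz in metres).

    `robot` is expected to be a urx.Robot instance (or a compatible object).
    """
    dx, dy, dz = displacement
    robot.translate((dx, dy, dz), acc=acc, vel=vel)


def spin_base(robot, degrees, acc=0.1, vel=0.05):
    """Rotate only the base joint (joint 0) by `degrees`, keeping the others."""
    joints = list(robot.getj())          # current joint angles in radians
    joints[0] += math.radians(degrees)   # positive or negative spin
    robot.movej(joints, acc=acc, vel=vel)


# Usage with a real robot (placeholder IP address, adjust to your setup):
#   import urx
#   robot = urx.Robot("192.168.0.10")
#   try:
#       move_tcp_step(robot, (0.0, 0.0, 0.01))  # 1 cm up
#       spin_base(robot, 10)                    # small base rotation
#   finally:
#       robot.close()
```

Keeping the robot object as a parameter makes it possible to exercise the movement logic with a stand-in object before connecting to the actual UR3e.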

Component5: interactive GUI (`app_controller.py`)

Component6: dataset (LINK)

- python-urx: v0.11.02 https://github.com/Mofeywalker/python-urx

- yolov5: https://github.com/ultralytics/yolov5

pip install PyQt5 seaborn pandas matplotlib pyyaml tqdm torchvision image requests torch urx opencv-python PySide2