This repository is the official implementation of DynaVSR, which was accepted at WACV 2021.

Links: Paper (arXiv) | Paper (CVF Open Access)

# Directory structure

```
project
│   README.md
└───dataset - make a symbolic link to your data here
└───pretrained_models - put the downloaded pretrained models here
└───codes
│   └───data
│   │   common.py
│   │   data_sampler.py
│   │   old_kernel_generator.py
│   │   random_kernel_generator.py
│   └───baseline - for finetuning the VSR network
│   └───estimator - for training MFDN, SFDN
│   └───meta_learner - for training the MAML network
│   └───data_scripts - miscellaneous scripts (same as EDVR)
│   └───metrics - metric calculation (same as EDVR)
│   └───models - model collections
│   └───options
│   └───test - YAML files for testing the networks
│   └───train - YAML files for training the networks
│   │   options.py
│   └───scripts
│   └───utils
│   calc_psnr_ssim.py - calculates PSNR and SSIM for image sets
│   degradation_gen.py - generates the preset kernel
│   make_downscaled_images.py - makes true SLR images
│   make_slr_images.py - makes SLR images generated by MFDN, SFDN
│   train.py - trains the VSR network
│   train_dynavsr.py - trains DynaVSR
│   train_mfdn.py - trains the MFDN, SFDN networks
│   test_maml.py - tests DynaVSR
│   test_Vid4_REDS4_with_GT(_DUF, _TOF).py - tests the baseline VSR network
│   run_downscaling.sh - script for generating LR, SLR images
│   run_visual.sh - script for testing DynaVSR
│   ...
```
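`calc_psnr_ssim.py` computes PSNR and SSIM over image sets. As a rough illustration of the PSNR part only, here is a minimal NumPy sketch. It assumes 8-bit images compared on all channels with no border cropping or Y-channel conversion; the repository's script may differ in those details. The names `psnr` and `mean_psnr` are hypothetical.

```python
import numpy as np


def psnr(img1: np.ndarray, img2: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio between two same-shaped images.

    Assumes pixel values in [0, max_val]; no border cropping or
    color-space conversion is applied (a simplifying assumption).
    """
    if img1.shape != img2.shape:
        raise ValueError("images must have the same shape")
    # Compute the mean squared error in float64 to avoid uint8 overflow.
    mse = np.mean((img1.astype(np.float64) - img2.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


def mean_psnr(pairs) -> float:
    """Average PSNR over an iterable of (restored, ground_truth) pairs."""
    return float(np.mean([psnr(a, b) for a, b in pairs]))
```

Averaging per-image PSNR (rather than pooling the MSE over the whole set) is the usual convention for reporting video SR results, but check the repository script before comparing numbers.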

# Dependencies

The current version has been tested on:

- Ubuntu 18.04

- Python==3.7.7

- numpy==1.17

- PyTorch==1.3.1, torchvision==0.4.2, cudatoolkit==10.0

- tensorboard==1.14.0

- Others: pyyaml, opencv, scikit-image, pandas, imageio, tqdm
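To compare a local environment with the tested versions listed above, a small script can read the installed versions and flag mismatches. This is a hypothetical helper (`find_mismatches` and `installed_versions` are not part of the repository). It checks only a subset of the list (Python, numpy, PyTorch, torchvision) and treats a version as matching when it equals the tested version or extends it as a dotted prefix, so `numpy==1.17` accepts any 1.17.x release.

```python
import sys

# Subset of the versions listed in this README as tested.
TESTED = {
    "python": "3.7.7",
    "numpy": "1.17",
    "torch": "1.3.1",
    "torchvision": "0.4.2",
}


def find_mismatches(installed, tested=TESTED):
    """Return {name: (installed, tested)} for entries that do not match.

    A local version suffix such as "+cu100" is ignored. Packages missing
    from `installed` are reported with installed=None.
    """
    mismatches = {}
    for name, want in tested.items():
        have = installed.get(name)
        base = have.split("+")[0] if have is not None else None
        if base is None or not (base == want or base.startswith(want + ".")):
            mismatches[name] = (have, want)
    return mismatches


def installed_versions():
    """Collect versions from the running interpreter and importable packages."""
    versions = {"python": "%d.%d.%d" % tuple(sys.version_info[:3])}
    for name in ("numpy", "torch", "torchvision"):
        try:
            module = __import__(name)
        except ImportError:
            continue  # reported as missing by find_mismatches
        versions[name] = module.__version__
    return versions


if __name__ == "__main__":
    for name, (have, want) in find_mismatches(installed_versions()).items():
        print(f"{name}: installed {have}, tested {want}")
```

A mismatch is not necessarily a problem; it only marks a combination the authors did not report testing.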

# Easy installation (using Anaconda environment)

```bash
conda env create -f environment.yml
conda activate dynavsr
```

# Datasets

- Vimeo90K: Training / Vid4: Validation

- REDS: Training, Validation

  - Download the `train_sharp` data.

- After downloading the datasets, run `run_downscaling.sh` to generate the LR and super-low-resolution (SLR) images:

```bash
sh codes/run_downscaling.sh
```
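`run_downscaling.sh` generates the LR and SLR frames with the repository's own kernel scripts (`degradation_gen.py`, `random_kernel_generator.py`), whose exact settings are not described here. To illustrate the general idea only, the sketch below applies one common synthetic degradation, an isotropic Gaussian blur followed by strided subsampling, twice: HR to LR, then LR to a further-downscaled SLR version. The kernel size, `sigma`, and scale factor of 4 are illustrative assumptions, not the values used in the paper, and the function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_1d(size: int = 13, sigma: float = 1.6) -> np.ndarray:
    """Normalized 1-D Gaussian; its outer product with itself is the 2-D kernel."""
    ax = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def blur(img: np.ndarray, k1d: np.ndarray) -> np.ndarray:
    """Separable Gaussian blur of an H x W (x C) image with reflect padding."""
    pad = len(k1d) // 2
    out = img.astype(np.float64)
    for axis in (0, 1):  # filter along rows, then along columns
        n = out.shape[axis]
        widths = [(0, 0)] * out.ndim
        widths[axis] = (pad, pad)
        padded = np.pad(out, widths, mode="reflect")
        # Weighted sum of shifted copies = 1-D correlation along `axis`.
        out = sum(
            w * np.take(padded, np.arange(i, i + n), axis=axis)
            for i, w in enumerate(k1d)
        )
    return out


def degrade(img: np.ndarray, scale: int = 4, sigma: float = 1.6) -> np.ndarray:
    """Blur, then keep every `scale`-th pixel (illustrative degradation only)."""
    return blur(img, gaussian_kernel_1d(sigma=sigma))[::scale, ::scale]


# Usage: a random 64 x 64 RGB "HR" frame.
hr = np.random.default_rng(0).uniform(0, 255, size=(64, 64, 3))
lr = degrade(hr)   # 16 x 16 x 3
slr = degrade(lr)  # 4 x 4 x 3
```

Applying the same kernel for both steps mirrors the idea that the SLR frames should follow the same degradation as the LR frames; real pipelines often also vary the kernel per video.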

- Make a symbolic link to the datasets in the `dataset` folder.

# Pretrained models

- Download the pretrained models from [Google Drive] and put them in the `pretrained_models` folder.

- To run EDVR, first install Deformable Convolution (DCN). We use the DCN implementation from mmdetection, which must be compiled first:

```bash
cd ./codes/models/archs/dcn
python setup.py develop
```

# Training

There are two ways to train the DynaVSR network:

- Distributed training (when using multiple GPUs):

```bash
cd ./codes
python -m torch.distributed.launch --nproc_per_node=8 --master_port=4321 train_dynavsr.py -opt options/train/[Path to YML file] --launcher pytorch --exp_name [Experiment Name]
```

- Single-GPU training:

```bash
cd ./codes
python train_dynavsr.py -opt options/train/[Path to YML file] --exp_name [Experiment Name]
```
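If you launch runs from Python (for example, to sweep several YAML files), the two training commands can be assembled programmatically. This hypothetical helper only reproduces the flags shown in this README; it uses `sys.executable` in place of `python` so the current environment's interpreter is used, and the arguments in the usage line are placeholders.

```python
import subprocess
import sys
from typing import List


def train_command(opt: str, exp_name: str, num_gpus: int = 1,
                  master_port: int = 4321) -> List[str]:
    """Build the train_dynavsr.py command line (to be run from ./codes)."""
    if num_gpus > 1:
        # Distributed training through torch.distributed.launch.
        return [
            sys.executable, "-m", "torch.distributed.launch",
            f"--nproc_per_node={num_gpus}", f"--master_port={master_port}",
            "train_dynavsr.py", "-opt", opt,
            "--launcher", "pytorch", "--exp_name", exp_name,
        ]
    # Single-GPU training.
    return [sys.executable, "train_dynavsr.py", "-opt", opt,
            "--exp_name", exp_name]


def run_training(opt: str, exp_name: str, num_gpus: int = 1) -> None:
    """Launch training inside ./codes and raise CalledProcessError on failure."""
    subprocess.run(train_command(opt, exp_name, num_gpus), cwd="./codes",
                   check=True)
```

For example, `run_training("options/train/<your_config>.yml", "my_experiment", num_gpus=8)` corresponds to the distributed command with 8 processes.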


# Testing

Only single-GPU testing is supported.

- To use your own test set, change the `dataroot` in the `EDVR_Demo.yml` file to the folder containing your images.

```bash
cd ./codes
python test_dynavsr.py -opt options/test/[Path to YML file]
```
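Pointing `EDVR_Demo.yml` at your own images can also be scripted. The sketch below loads an option file with PyYAML, replaces the value of every key whose name starts with `dataroot` (an assumption about how the option file names that field; check your copy of `EDVR_Demo.yml`), and writes the result to a new file. `set_dataroot` and `rewrite_yaml` are hypothetical helpers, not part of the repository, and comments in the original YAML are not preserved.

```python
from typing import Any


def set_dataroot(options: Any, new_root: str) -> int:
    """Recursively set every 'dataroot*' key in a nested dict/list.

    Returns the number of keys that were changed.
    """
    changed = 0
    if isinstance(options, dict):
        for key, value in options.items():
            if isinstance(key, str) and key.startswith("dataroot"):
                options[key] = new_root  # overwriting a value is safe mid-iteration
                changed += 1
            else:
                changed += set_dataroot(value, new_root)
    elif isinstance(options, list):
        for item in options:
            changed += set_dataroot(item, new_root)
    return changed


def rewrite_yaml(src: str, dst: str, new_root: str) -> int:
    """Copy option file `src` to `dst` with its dataroot entries replaced."""
    import yaml  # PyYAML, listed in the dependencies

    with open(src) as f:
        options = yaml.safe_load(f)
    changed = set_dataroot(options, new_root)
    with open(dst, "w") as f:
        yaml.safe_dump(options, f, default_flow_style=False, sort_keys=False)
    return changed
```

For example, `rewrite_yaml("path/to/EDVR_Demo.yml", "path/to/EDVR_Demo_custom.yml", "/path/to/your/images")` writes a new option file that can be passed to `test_dynavsr.py` with `-opt`; a return value of 0 means no `dataroot` key was found.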


Alternatively, use `run_visual.sh` (uncomment the line you want to execute):

```bash
sh ./codes/run_visual.sh
```

# Citation

If you find this work useful, please cite our paper:

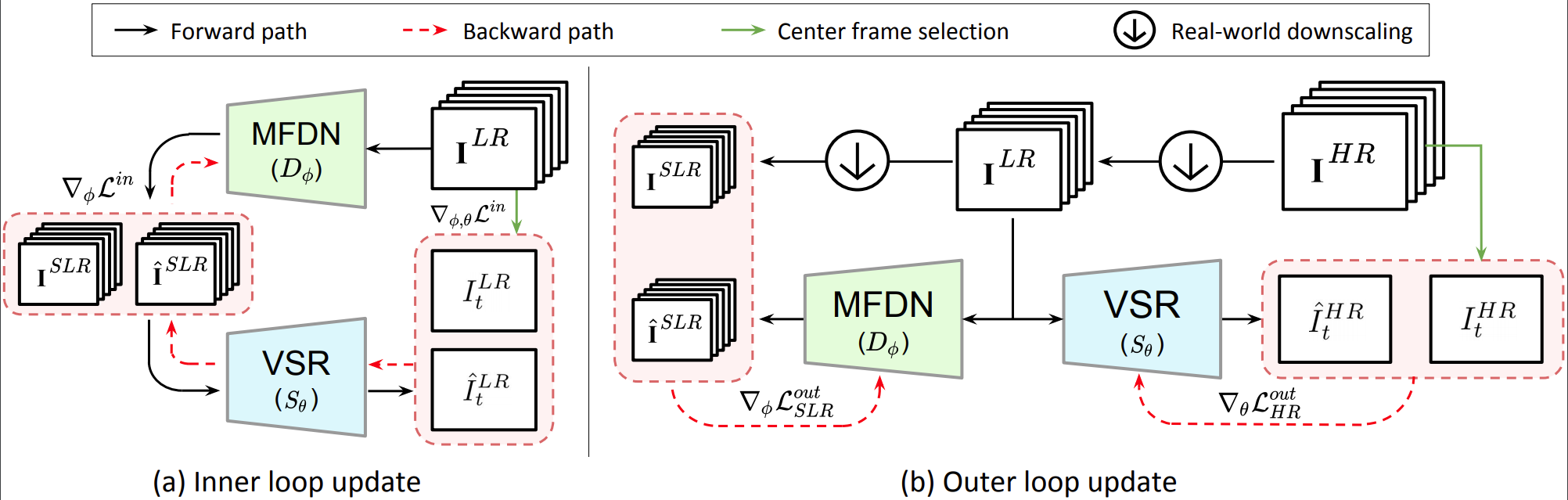

```bibtex
@inproceedings{lee2021dynavsr,
  title={DynaVSR: Dynamic Adaptive Blind Video Super-Resolution},
  author={Lee, Suyoung and Choi, Myungsub and Lee, Kyoung Mu},
  booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
  pages={2093--2102},
  year={2021}
}
```

# Updates

- [2020-12-09] First code cleanup; published pretrained models

- [2020-12-29] Added a testing script for arbitrary input data

- [2021-01-05] Added a script for testing 4x-scale EDVR models

- [2021-01-06] Modified the scripts for generating LR and SLR frames

# Acknowledgement

The code is built based on