Introduction to Azure Networking

Part 1: Spoke-to-Spoke communication over NVA

Part 2: NVA Scalability and HA

This document contains a lab guide that helps you deploy a basic environment in Azure to test some of Azure's networking functionality. Before starting with this lab, make sure that you fulfill all the prerequisites:

- A valid Azure subscription. If you don't have one, you can create your free Azure account (https://azure.microsoft.com/free/) today.

- If you are using Windows 10/11, you can install the Windows Subsystem for Linux (How to install Linux on Windows with WSL).

- Azure CLI 2.0, follow these instructions to install: https://docs.microsoft.com/cli/azure/install-azure-cli

The labs cover:

- Introduction to Azure networking

- Deployment of multi-vnet Hub and Spoke design

- Traffic filtering in Azure with firewalls

- Microsegmentation using firewalls

- Scaling out NVAs with load balancing and SNAT

- Advanced probes for Azure Load Balancers

- Linux custom routing

This lab has been modified to improve the user experience. Testing with Virtual Network Gateways has been moved to the end, since the gateway deployment alone can take up to 45 minutes. The activities in this lab have been divided into 3 sections:

- Section 1: Hub and Spoke networking (around 60 minutes)

- Section 2: NVA scalability with Azure Load Balancer (around 90 minutes)

- Section 3: using VPN gateway for spoke-to-spoke connectivity and site-to-site access (around 60 minutes, not including the time required to provision the gateways)

Throughout this lab some variables will be used that might (and probably should) look different in your environment. These are the variables you need to decide on before starting the lab. Notice that the VM names are prefixed by a (not so) random number, since these names will be used to create DNS entries as well, and DNS names need to be unique.

| Description | Value used in this lab guide |
|---|---|
| Azure resource group | vnetTest |
| Username for provisioned VMs and NVAs | lab-user |
| Password for provisioned VMs and NVAs | Microsoft123! |
| Azure region | westeurope |

As a tip, if you want to do the VPN lab, it might be beneficial to run the commands in Lab 9, Step 1 while you are doing the previous labs, so that you don't need to wait 45 minutes (more or less the time it takes to provision VPN gateways) when you get to Lab 9.

Microsoft Azure has established itself as one of the leading cloud providers, and part of Azure's offering is Infrastructure as a Service (IaaS), that is, provisioning raw data center infrastructure constructs (virtual machines, networks, storage, etc.) on top of which any application can be installed.

An important part of this infrastructure is the network, and Microsoft Azure offers multiple network technologies that can help to achieve the applications' business objectives: from VPN gateways that offer secure network access to load balancers that enable application (and network, as we will see in this lab) scalability.

Some organizations have decided to complement the Azure network offering with Network Virtual Appliances (NVAs) from traditional network vendors. This lab will focus on the integration of these NVAs, and we will take as an example an open source firewall implemented with iptables running on an Ubuntu VM with 2 network interfaces. This will highlight some of the challenges of integrating this sort of VM, and how to solve them.

At the end of this guide you will find a collection of useful links, but if you don’t know where to start, here is the home page for the documentation for Microsoft Azure Networking: https://docs.microsoft.com/azure/#pivot=services&panel=network.

The second link you should look at is this document, where Hub and Spoke topologies are discussed: https://docs.microsoft.com/azure/architecture/reference-architectures/hybrid-networking/hub-spoke.

If you find any issue when running through this lab or any error in this guide, please open a GitHub issue in this repository, and we will try to fix it. Enjoy!

Step 1. Log into your system.

Step 2. If you don't have a valid Azure subscription, you can create a free Azure subscription at https://azure.microsoft.com/free. If you have received a voucher code for Azure, go to https://www.microsoftazurepass.com/Home/HowTo for instructions on how to redeem it.

Step 3. Open a terminal window and log into Azure. Here you have different options:

-

If you are using the Azure CLI on Windows, you can press the Windows key in your keyboard, then type

cmdand hit the Enter key. You might want to maximize the command Window so that it fills your desktop. -

If you are using the Azure CLI on the Linux subsystem for Windows, open your Linux console

-

If you are using Linux or Mac, you probably do not need me to tell me how to open a Terminal window

-

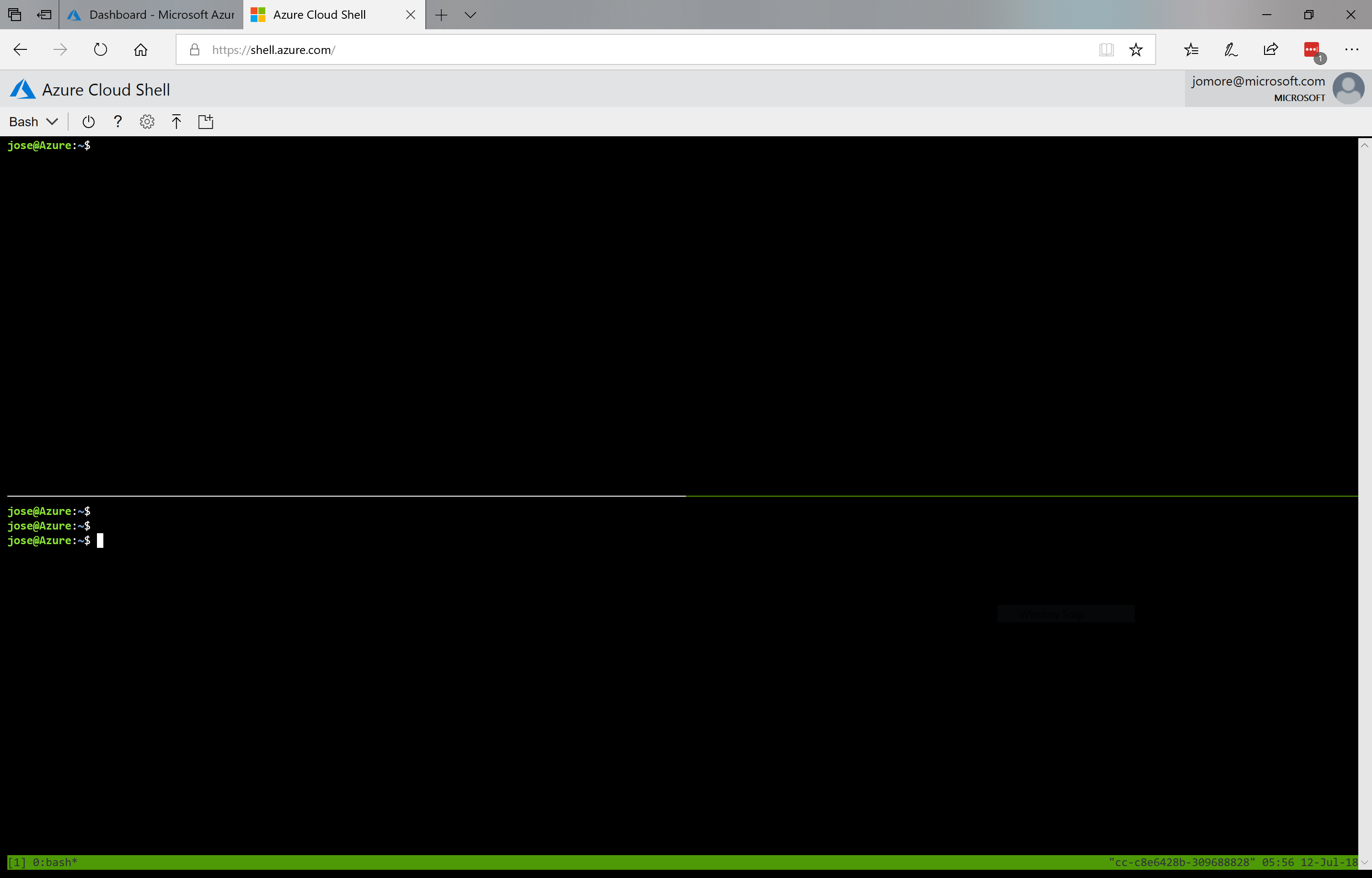

Alternatively you can use the Azure shell, no matter on which OS you are working. Open the URL https://shell.azure.com on a Web browser, and after authenticating with your Azure credentials you will get to an Azure Cloud Shell. In this lab we will use the Azure CLI (and not Powershell), so make sure you select the Bash shell. You can optionally use

tmux, as this figure shows:

Figure 1. Cloud shell with two tmux panels

If you are not using Cloud Shell, you will have to log into Azure. You can copy the following command from this guide with Ctrl-C, and paste it into your terminal window:

az login

After logging into Azure, you should be able to retrieve details of the current subscription:

az account show

If you have multiple subscriptions and the wrong one is selected, you can select the subscription where you want to deploy the lab with the command az account set --subscription <your subscription GUID>.

Step 4. Create a new resource group, where we will place all our objects (so that you can easily delete everything after you are done). The last command (az configure) also sets the default resource group to the newly created one, so that you do not need to specify it in every command. Note that your regions should support Availability Zones, otherwise the ARM template will not deploy successfully.

# Set some variables (bash)

rg=vnetTest

location=eastus2

location2ary=westus

adminPassword='Microsoft123!'

template_uri=https://raw.githubusercontent.com/erjosito/azure-networking-lab/master/arm/NetworkingLab_master.json

For Windows PowerShell, this is how you would declare variables:

# Set some variables (PowerShell)

$rg = "vnetTest"

$location = "eastus2"

$location2ary = "westus"

$adminPassword = "Microsoft123!"

$template_uri = "https://raw.githubusercontent.com/erjosito/azure-networking-lab/master/arm/NetworkingLab_master.json"

Now you can create the resource group:

az group create --name $rg --location $location

az configure --defaults group=$rg
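Every command in this lab relies on the shell variables defined above, and an unset variable (for example after opening a new terminal window) tends to produce confusing errors. The following is a minimal sketch of a pre-flight check, assuming a bash or POSIX-compatible shell; the function name require_vars is hypothetical and not part of the lab repository.

```bash
# Pre-flight check: report lab variables that are unset or empty.
# require_vars is a hypothetical helper, not part of the lab repository.
require_vars() {
  local name value missing=""
  for name in "$@"; do
    # Indirect lookup of the variable called $name (eval keeps it POSIX-compatible)
    eval "value=\${$name-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "Missing lab variables:$missing" >&2
    return 1
  fi
  echo "All lab variables are set"
}

# Example, after running the variable assignments from Step 4:
# require_vars rg location location2ary adminPassword template_uri
```

This check only applies to the bash variables; the PowerShell variant would need its own equivalent.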

Step 5. Deploy the master template that will create our initial network configuration:

az deployment group create --name netLabDeployment --template-uri $template_uri --resource-group $rg --parameters "{\"adminPassword\":{\"value\":\"$adminPassword\"}, \"location2ary\":{\"value\": \"$location2ary\"}, \"location2aryVnets\":{\"value\": [3]}}"

Note: the previous command will deploy 5 vnets, one of them (vnet 3) in an alternate location. The goal of deploying this single vnet in a different location is to include global vnet peering in this lab. Should you not have access to locations where global vnet peering is available (such as West Europe and West US 2 used in the examples), you can deploy the template without the parameters location2ary and location2aryVnets, which will deploy all vnets into the same location as the resource group.
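The hand-escaped JSON in the --parameters argument above is easy to break when you edit it. As a minimal sketch, assuming bash and a password without double quotes or backslashes (this helper does no JSON escaping), you could build the same parameters with printf. The function build_lab_params is hypothetical; the parameter names adminPassword, location2ary and location2aryVnets are the ones used in the command above.

```bash
# Build the --parameters JSON for the lab template with printf instead of \" escapes.
# build_lab_params is a hypothetical helper.
# Assumption: the password contains no double quotes or backslashes (no JSON escaping here).
build_lab_params() {
  # $1 = admin password, $2 = secondary region, $3 = vnet number(s) for the secondary region
  printf '{"adminPassword":{"value":"%s"},"location2ary":{"value":"%s"},"location2aryVnets":{"value":[%s]}}\n' \
    "$1" "$2" "$3"
}

# Example:
# az deployment group create --name netLabDeployment --template-uri "$template_uri" --resource-group "$rg" \
#   --parameters "$(build_lab_params "$adminPassword" "$location2ary" 3)"
```

Because the password is passed as a printf argument rather than embedded in the format string, characters such as % or ! in it are copied literally.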

Step 6. Since the previous command will take a while (around 15 minutes), open another command window (see Step 3 for detailed instructions) to monitor the deployment progress. Note that you might have to log in in this second window too:

az deployment group list -o table

The output of that command should be something like this:

Name Timestamp State

----------------- -------------------------------- ---------

myVnet5gwPip 2017-06-29T19:15:28.227920+00:00 Succeeded

myVnet4gwPip 2017-06-29T19:15:31.617920+00:00 Succeeded

myVnet5-vm1-nic 2017-06-29T19:15:38.468886+00:00 Succeeded

myVnet1VpnGw 2017-06-29T19:15:38.565418+00:00 Succeeded

myVnet5VpnGw 2017-06-29T19:15:39.056567+00:00 Succeeded

myVnet3-vm1-nic 2017-06-29T19:15:39.269138+00:00 Succeeded

myVnet4-vm1-nic 2017-06-29T19:15:39.509990+00:00 Succeeded

myVnet2VpnGw 2017-06-29T19:15:40.576390+00:00 Succeeded

myVnet2-vm1-nic 2017-06-29T19:15:41.003741+00:00 Succeeded

myVnet1-vm1-nic 2017-06-29T19:15:41.143406+00:00 Succeeded

myVnet4VpnGw 2017-06-29T19:15:42.608290+00:00 Succeeded

myVnet3VpnGw 2017-06-29T19:15:44.467581+00:00 Succeeded

myVnet3-vm 2017-06-29T19:20:21.195625+00:00 Succeeded

myVnet4-vm 2017-06-29T19:20:21.856738+00:00 Succeeded

myVnet5-vm 2017-06-29T19:20:38.720152+00:00 Succeeded

myVnet-template-3 2017-06-29T19:20:42.269630+00:00 Succeeded

myVnet-template-4 2017-06-29T19:20:42.581238+00:00 Succeeded

myVnet1-vm 2017-06-29T19:20:42.663423+00:00 Succeeded

myVnet-template-5 2017-06-29T19:20:55.790429+00:00 Succeeded

myVnet-template-1 2017-06-29T19:20:59.148149+00:00 Succeeded

myVnet2-vm 2017-06-29T19:21:11.406220+00:00 Succeeded

myVnet-template-2 2017-06-29T19:21:29.787517+00:00 Succeeded

vnets 2017-06-29T19:21:32.490251+00:00 Succeeded

vnet5Gw 2017-06-29T19:21:52.630513+00:00 Succeeded

vnet4Gw 2017-06-29T19:21:53.090784+00:00 Succeeded

linuxnva-1-nic0 2017-06-29T19:21:56.417810+00:00 Succeeded

UDRs 2017-06-29T19:21:57.487004+00:00 Succeeded

linuxnva-2-nic0 2017-06-29T19:21:58.322935+00:00 Succeeded

myVnet1-vm2-nic 2017-06-29T19:21:58.354766+00:00 Succeeded

linuxnva-2-nic1 2017-06-29T19:21:59.328610+00:00 Succeeded

linuxnva-1-nic1 2017-06-29T19:21:59.490821+00:00 Succeeded

nva-slb-int 2017-06-29T19:22:03.146781+00:00 Succeeded

hub2spoke2 2017-06-29T19:22:09.140223+00:00 Succeeded

hub2spoke3 2017-06-29T19:22:10.229240+00:00 Succeeded

hub2spoke1 2017-06-29T19:22:12.980700+00:00 Succeeded

AzureLB 2017-06-29T19:22:15.587843+00:00 Succeeded

nva-slb-ext 2017-06-29T19:22:18.507575+00:00 Succeeded

vnet1subnet1vm2 2017-06-29T19:26:53.109076+00:00 Succeeded

nva 2017-06-29T19:29:24.679832+00:00 Succeeded

netLabDeployment 2017-06-29T19:29:26.491546+00:00 Succeeded

Note: You might see other resource names when using your template, since newer lab versions might have different object names
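Instead of re-running az deployment group list by hand, you could poll the provisioning state of the top-level deployment until it finishes. This is a sketch, assuming bash; wait_for_state is a hypothetical helper that runs any command printing a single state word, and the az deployment group show invocation in the comment is one way to obtain that word for the netLabDeployment deployment.

```bash
# Poll a command until it prints the target state, fails, or runs out of attempts.
# wait_for_state is a hypothetical helper; progress messages go to stderr.
wait_for_state() {
  # $1 = target state, $2 = max attempts, $3 = seconds between attempts, rest = command
  local target="$1" tries="$2" interval="$3" state i=1
  shift 3
  while [ "$i" -le "$tries" ]; do
    state=$("$@" 2>/dev/null)
    echo "Attempt $i: ${state:-<no output>}" >&2
    [ "$state" = "$target" ] && return 0
    # A Failed deployment will not recover on its own, so stop early
    [ "$state" = "Failed" ] && return 1
    i=$((i + 1))
    [ "$i" -le "$tries" ] && sleep "$interval"
  done
  return 1
}

# Example (needs a logged-in Azure CLI), checking every 30 seconds for up to 30 minutes:
# wait_for_state Succeeded 60 30 \
#   az deployment group show -g "$rg" -n netLabDeployment --query properties.provisioningState -o tsv
```

Passing the command as separate arguments (rather than as a string) avoids an extra layer of quoting and lets you reuse the helper for other resources.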

Step 1. You don’t need to wait until all objects in the template have been successfully deployed (although it would be good, to make sure that everything is there). In your second terminal window, start exploring the objects created by the ARM template: vnets, subnets, VMs, interfaces, public IP addresses, etc. Save the output of these commands (copying and pasting to a text file for example).

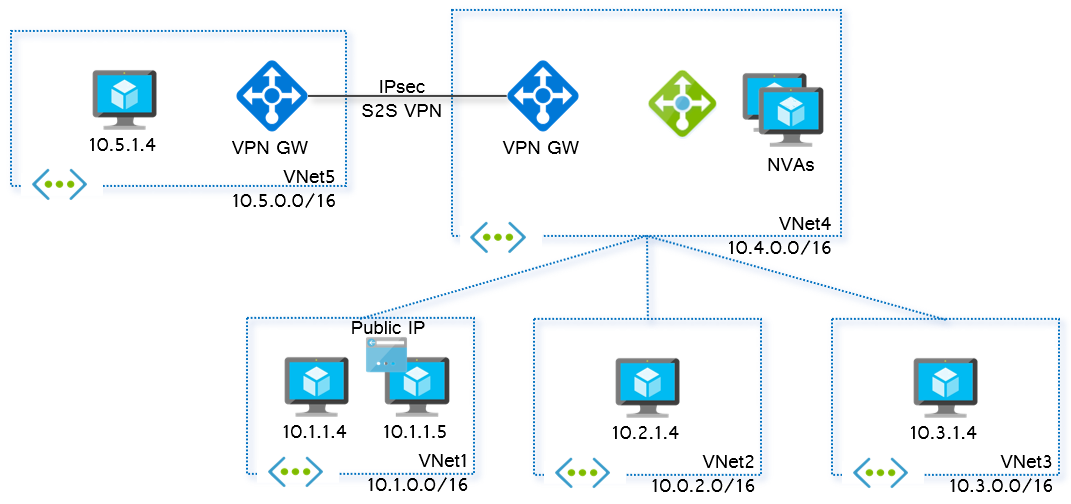

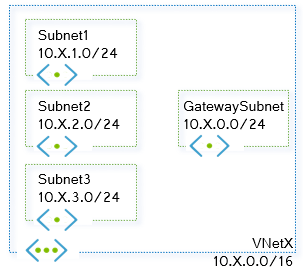

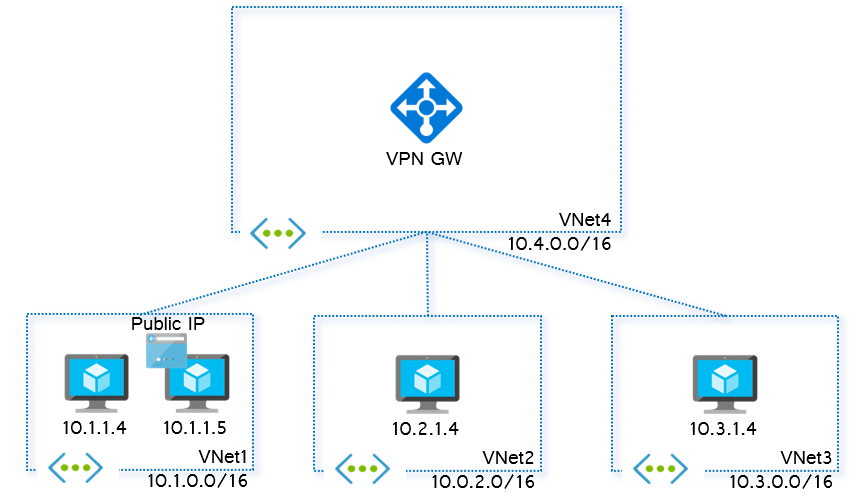

You can see some diagrams of the deployed environment here, so that you can better interpret the command outputs.

Note that the output of these commands might be different if the template deployment from Lab 0 has not completed yet.

Figure 2. Overall vnet diagram

Figure 3. Subnet design of every vnet

az network vnet list -g $rg -o table

Location Name ProvisioningState ResourceGroup ResourceGuid

---------- ------- ------------------- --------------- -------------

westeurope myVnet1 Succeeded vnetTest 1d20ba9a...

westeurope myVnet2 Succeeded vnetTest 43ca80d0...

westus2 myVnet3 Succeeded vnetTest 4837a481...

westeurope myVnet4 Succeeded vnetTest 72a82a72...

westeurope myVnet5 Succeeded vnetTest 96e5f9c5...

Note: Some columns of the output above have been removed for clarity.

az network vnet subnet list -g $rg --vnet-name myVnet1 -o table

AddressPrefix Name ProvisioningState ResourceGroup

--------------- -------------- ------------------- ---------------

10.1.0.0/24 GatewaySubnet Succeeded vnetTest

10.1.1.0/24 myVnet1Subnet1 Succeeded vnetTest

10.1.2.0/24 myVnet1Subnet2 Succeeded vnetTest

10.1.3.0/24 myVnet1Subnet3 Succeeded vnetTest

az vm list -g $rg -o table

Name ResourceGroup Location

----------- --------------- ----------

linuxnva-1 vnetTest westeurope

linuxnva-2 vnetTest westeurope

myVnet1-vm1 vnetTest westeurope

myVnet1-vm2 vnetTest westeurope

myVnet2-vm1 vnetTest westeurope

myVnet3-vm1 vnetTest westeurope

myVnet4-vm1 vnetTest westeurope

myVnet5-vm1 vnetTest westeurope

az network nic list -g $rg -o table

EnableIpForwarding Location MacAddress Name

-------------------- ---------- ----------------- -------

True westeurope 00-0D-3A-28-F8-F9 linuxnva-1-nic0

True westeurope 00-0D-3A-28-F0-3A linuxnva-1-nic1

True westeurope 00-0D-3A-28-24-73 linuxnva-2-nic0

True westeurope 00-0D-3A-28-2A-28 linuxnva-2-nic1

westeurope 00-0D-3A-2A-48-AF myVnet1-vm1-nic

westeurope 00-0D-3A-28-2C-8C myVnet1-vm2-nic

westeurope 00-0D-3A-2A-4A-DE myVnet2-vm1-nic

westus2 00-0D-3A-2A-46-DE myVnet3-vm1-nic

westeurope 00-0D-3A-2A-4F-EA myVnet4-vm1-nic

westeurope 00-0D-3A-2A-47-BC myVnet5-vm1-nic

Note: Some columns of the output above have been removed for clarity.

az network public-ip list -g $rg --query '[].[name,ipAddress]' -o table

Column1 Column2

------------------- ---------------

linuxnva-slbPip-ext 5.6.7.8

myVnet1-vm2-pip 1.2.3.4

vnet4gwPip

vnet5gwPip

Note: You might have noticed the --query option in the command above. The reason is that the standard command to list public IP addresses does not show the IP addresses themselves, interestingly enough. With the --query option you can force the Azure CLI to show the information you are interested in. Furthermore, the public IP addresses in the table are obviously not the ones you will see in your environment.

As you can see, we have a single public IP address allocated to a VM, myVnet1-vm2. We will use this VM as the jump host for the lab.
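If you prefer not to copy the jump host address by hand, the following sketch extracts it from the tab-separated output of the public IP query above (the same --query, with -o tsv instead of -o table). The helper ip_for_name is hypothetical; it prints the second column of the first row whose first column matches the given name.

```bash
# Read "name<TAB>ipAddress" rows on stdin and print the IP of the named public IP.
# ip_for_name is a hypothetical helper.
ip_for_name() {
  # $1 = public IP resource name to look for
  awk -F '\t' -v name="$1" '$1 == name { print $2; exit }'
}

# Example (needs a logged-in Azure CLI):
# jump_ip=$(az network public-ip list -g "$rg" --query '[].[name,ipAddress]' -o tsv | ip_for_name myVnet1-vm2-pip)
# ssh lab-user@"$jump_ip"
```

Matching on the resource name avoids accidentally picking linuxnva-slbPip-ext, which is listed in the same output.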

Step 2. Using the public IP address from the previous step, open an SSH terminal (PuTTY on Windows, an additional terminal on Mac/Linux, a new tmux panel in the Cloud Shell, etc.) and connect to the jump host. If you did not modify the ARM templates used to provision the environment, the user is lab-user and the password is Microsoft123!

ssh lab-user@1.2.3.4

The authenticity of host '1.2.3.4 (1.2.3.4)' can't be established.

ECDSA key fingerprint is SHA256:FghxuVL+BuKux27Homrsm3nYjb7o/gE/SfFoiRYl5Y4.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '1.2.3.4' (ECDSA) to the list of known hosts.

lab-user@1.2.3.4's password:

[... output omitted ...]

lab-user@myVnet1-vm2:~$

Note: do not forget to use the actual public IP address of your environment instead of the sample value 1.2.3.4. Make sure to use the IP address corresponding to myVnet1-vm2-pip, not the one assigned to linuxnva-slbPip-ext.

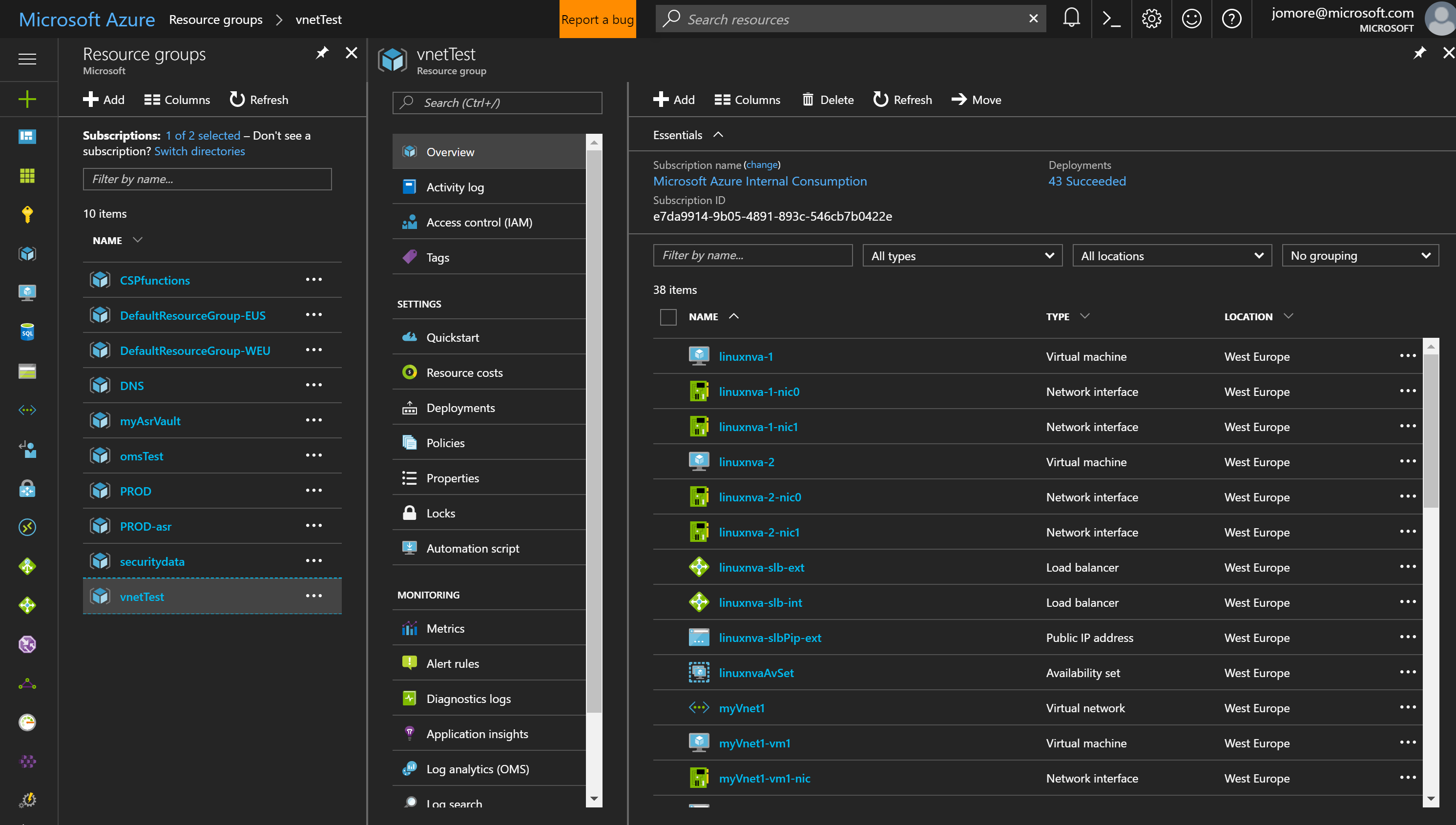

Step 3. Connect to the Azure portal (http://portal.azure.com) and locate the resource group that we have just created (called 'vnetTest', if you did not change it). Verify the objects that have been created and explore their properties and states.

Figure 5: Azure portal with the resource group created for this lab

Note: you might want to open two additional command prompt windows (or tmux panels) and launch the two commands from Lab 8, Step 1. Each of those commands (they can run in parallel) will take around 45 minutes, so you can leave them running while you proceed with Labs 2 through 7. If you are not planning to run Labs 8-9, you can safely ignore this paragraph.

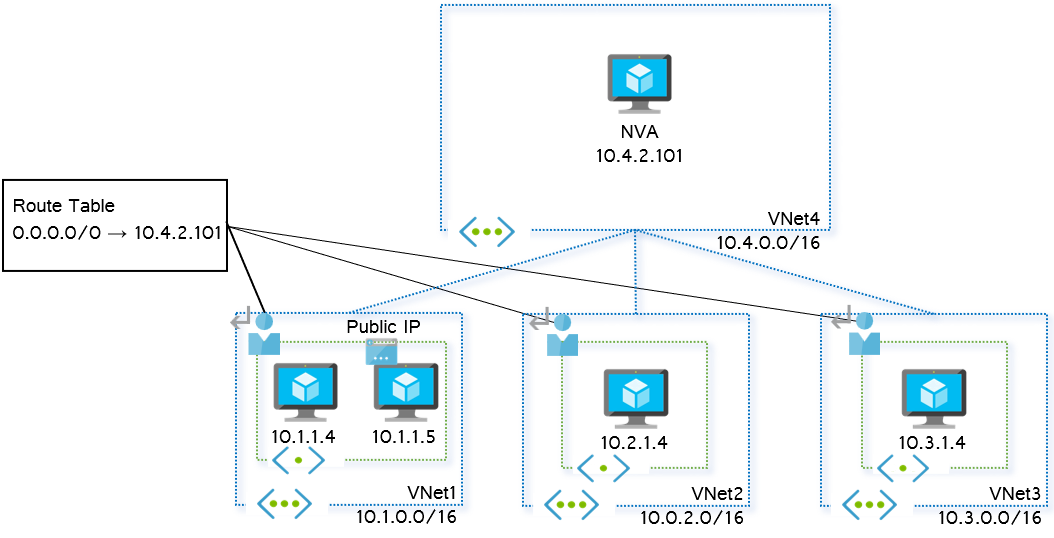

In some situations you want some kind of security between the different VNets. Although this security can be partially provided by Network Security Groups, certain organizations might require more advanced filtering functionality, such as the one that firewalls provide. In this lab we will insert a Network Virtual Appliance in the communication flow. Typically this Network Virtual Appliance would be a next-generation firewall from vendors such as Barracuda, Check Point, Cisco or Palo Alto, to name a few, but in this lab we will use a Linux machine with 2 interfaces and traffic forwarding enabled. Although the VM has two interfaces, for this exercise the firewall will be inserted as a 'firewall on a stick', that is, a single interface will suffice.

Figure 6. Spoke-to-spoke traffic going through an NVA

Step 1. In the Ubuntu VMs acting as firewalls, iptables has been configured by means of a Custom Script Extension. This extension downloads a script from a public repository (the GitHub repository for this lab) and runs it on the VM at provisioning time. Verify that the extension has been successfully provisioned on the NVAs with this command (look at the ProvisioningState column):

az vm extension list -g $rg --vm-name linuxnva-1 -o table

AutoUpgradeMinorVersion Location Name ProvisioningState

------------------------- ---------- ------------------- -----------------

True westeurope installcustomscript Succeeded

Step 2. From your jump host SSH session, connect to myVnet1-vm1 using the credentials that you specified when deploying the template. This verifies that you have connectivity between the two VMs in vnet1.

lab-user@myVnet1-vm2:~$ ssh 10.1.1.4

The authenticity of host '10.1.1.4 (10.1.1.4)' can't be established.

ECDSA key fingerprint is SHA256:y4T92R4Qd968bf1ElHUazOvXLidj0RmgDOb4wxfpe7s.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.1.1.4' (ECDSA) to the list of known hosts.

lab-user@10.1.1.4's password:

[...Output omitted...]

lab-user@myVnet1-vm1:~$

The username and password were specified at creation time (in that long command that invoked the ARM template). If you did not change the parameters, the username is 'lab-user' and the password 'Microsoft123!' (without the quotes).

Step 3. Try to connect over SSH to the private IP address of the VM in vnet2. We can use the private IP address because we are now inside the vnet.

lab-user@myVnet1-vm1:~$ ssh 10.2.1.4

ssh: connect to host 10.2.1.4 port 22: Connection timed out

lab-user@myVnet1-vm1:~$

Note: you do not need to wait for the "ssh 10.2.1.4" command to time out if you do not want to (press Ctrl-C to cancel it). Besides, if you are wondering why we are not testing with a simple ping, the reason is that the NVAs are preconfigured to drop ICMP traffic, as we will see in later labs.
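Since ICMP is dropped by the NVAs, a quick TCP probe is a handy alternative to waiting for ssh to time out. The following is a sketch, assuming bash (for its /dev/tcp redirection) and the coreutils timeout command, both normally present on Ubuntu; tcp_check is a hypothetical helper name. At this point of the lab you would expect the probe to 10.2.1.4 port 22 to report it as not reachable.

```bash
# Quick TCP reachability probe (ICMP is dropped by the NVAs, so ping is not useful).
# tcp_check is a hypothetical helper; it relies on bash's /dev/tcp and coreutils timeout.
tcp_check() {
  # $1 = host, $2 = port, $3 = timeout in seconds (defaults to 5)
  if timeout "${3:-5}" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null; then
    echo "$1:$2 reachable"
  else
    echo "$1:$2 not reachable (closed, filtered or timed out)"
    return 1
  fi
}

# Example, from myVnet1-vm1:
# tcp_check 10.2.1.4 22
```

A dropped packet shows up as a timeout, while a closed port on a reachable host fails immediately, so the elapsed time gives you an additional hint.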

Step 4. Back in the command prompt window where you are running the Azure CLI, verify that the involved subnets (myVnet1Subnet1 and myVnet2Subnet1) do not have any route table attached:

az network vnet subnet show -g $rg --vnet-name myVnet1 -n myVnet1Subnet1 --query routeTable

Note: You should get no output from the previous command.

Step 5. Create a custom route table named "vnet1-subnet1", and another one called "vnet2-subnet1":

az network route-table create -n vnet1-subnet1 -g $rg

az network route-table create --name vnet2-subnet1 -g $rg

Step 6. Verify that the route tables have been successfully created:

az network route-table list -g $rg -o table

Location Name ProvisioningState ResourceGroup

---------- ------------- ------------------- ---------------

westeurope vnet1-subnet1 Succeeded vnetTest

westeurope vnet2-subnet1 Succeeded vnetTest

Step 7. Now attach the custom route tables to both subnets involved in this example (myVnet1Subnet1 and myVnet2Subnet1):

az network vnet subnet update -g $rg -n myVnet1Subnet1 --vnet-name myVnet1 --route-table vnet1-subnet1

az network vnet subnet update -g $rg -n myVnet2Subnet1 --vnet-name myVnet2 --route-table vnet2-subnet1

Step 8. Now you can check that the subnets are associated with the right route tables:

az network vnet subnet show -g $rg --vnet-name myVnet1 -n myVnet1Subnet1 --query routeTable

{

"disableBgpRoutePropagation": null,

"etag": null,

"id": "/subscriptions/.../resourceGroups/vnetTest/providers/Microsoft.Network/routeTables/vnet1-subnet1",

"location": null,

"name": null,

"provisioningState": null,

"resourceGroup": "vnetTest",

"routes": null,

"subnets": null,

"tags": null,

"type": null

}

az network vnet subnet show -g $rg --vnet-name myVnet2 -n myVnet2Subnet1 --query routeTable

{

"disableBgpRoutePropagation": null,

"etag": null,

"id": "/subscriptions/.../resourceGroups/vnetTest/providers/Microsoft.Network/routeTables/vnet2-subnet1",

"location": null,

"name": null,

"provisioningState": null,

"resourceGroup": "vnetTest",

"routes": null,

"subnets": null,

"tags": null,

"type": null

}

Step 9. We will now inspect the default routing information for our virtual machines. In Azure, routing is programmed in the Network Interface Card (NIC) of a VM, so when inspecting routes you need to send commands to the NIC. For example, for the VM in VNet1, the name of its NIC is myVnet1-vm1-nic, and you can inspect the routes in the NIC with this command:

az network nic show-effective-route-table -g $rg -n myVnet1-vm1-nic -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- --------------- -------------

Default Active 10.1.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Active 0.0.0.0/0 Internet

Default Active 10.0.0.0/8 None

Default Active 127.0.0.0/8 None

Default Active 100.64.0.0/10 None

Default Active 172.16.0.0/12 None

Default Active 25.48.0.0/12 None

Default Active 25.4.0.0/14 None

Default Active 198.18.0.0/15 None

Default Active 157.59.0.0/16 None

Default Active 192.168.0.0/16 None

Default Active 25.33.0.0/16 None

Default Active 40.109.0.0/16 None

Default Active 104.147.0.0/16 None

Default Active 104.146.0.0/17 None

Default Active 40.108.0.0/17 None

Default Active 23.103.0.0/18 None

Default Active 25.41.0.0/20 None

Default Active 20.35.252.0/22 None

It is important to take a moment to learn where these routes are coming from:

- The first route, with a next hop type of VnetLocal, is the system route that tells a virtual machine how to reach other virtual machines in the same VNet. Note that the route is for the whole VNet, and not restricted to a subnet: as a consequence, every virtual machine in a VNet will be able to reach other virtual machines in the same VNet, regardless of the subnet.

- The second route, with a next hop type of VNetPeering, is the system route inserted when peering two VNets, and tells the virtual machine how to reach endpoints in the peered VNet.

- The third route, with a next hop type of Internet, tells the virtual machine how to reach the Internet. Unlike other clouds such as AWS, Azure virtual machines have outbound access to the Internet by default, without the need for Internet Gateways.

- Finally, there are a bunch of routes with the next hop type of None (with the effect of dropping traffic). The purpose of these routes is to make sure that only legitimate traffic is sent to the Internet. For example, any traffic sent to RFC 1918 private IP addresses or to other Microsoft-owned public IP addresses used internally should not be sent to the public Internet, and hence it is dropped.

Routing in Azure follows the longest prefix match (LPM) principle. For example, for traffic sent to the local VNet, since 10.1.0.0/16 (next hop VnetLocal) is more specific than 10.0.0.0/8 (next hop None), the traffic will be forwarded, and not dropped.

Since there is no specific route for VNet2 (10.2.0.0/16) in the route table, when the VM in VNet1 tries to send traffic to VNet2, this traffic will hit the route for 10.0.0.0/8 with next hop None, and it will be dropped.
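To make the longest prefix match rule concrete, here is a small sketch that picks the winning route for a destination address from rows shaped like the effective route tables above (Source, State, Address Prefix, Next Hop Type, optional Next Hop IP). It is an illustration in bash arithmetic, not how Azure implements routing: the helpers ip_to_int and lpm_next_hop are hypothetical, and rows whose state is not Active (including header and separator lines) are ignored.

```bash
# Longest prefix match over "Source State Prefix NextHopType [NextHopIp]" rows on stdin.
# ip_to_int and lpm_next_hop are hypothetical helpers for illustration only.

ip_to_int() {
  # Convert a dotted-quad IPv4 address to a 32-bit integer
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

lpm_next_hop() {
  # $1 = destination IPv4 address; prints "NextHopType [NextHopIp]" of the best match
  local dest best_len best source state prefix hop_type hop_ip net len mask
  dest=$(ip_to_int "$1")
  best_len=-1
  best=""
  while read -r source state prefix hop_type hop_ip; do
    # Skip headers, separators and Invalid (overridden) routes
    [ "$state" = "Active" ] || continue
    net=$(ip_to_int "${prefix%/*}")
    len=${prefix#*/}
    # Netmask with the top $len bits set (len=0 yields 0, which matches everything)
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    if [ $(( dest & mask )) -eq $(( net & mask )) ] && [ "$len" -gt "$best_len" ]; then
      best_len=$len
      best="$hop_type${hop_ip:+ $hop_ip}"
    fi
  done
  echo "$best"
}

# Example (needs a logged-in Azure CLI):
# az network nic show-effective-route-table -g "$rg" -n myVnet1-vm1-nic -o table | lpm_next_hop 10.2.1.4
```

Fed with the VNet1 table above, the destination 10.2.1.4 matches both 0.0.0.0/0 and 10.0.0.0/8, and the longer /8 prefix with next hop None wins, which is consistent with the SSH timeout seen in Step 3.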

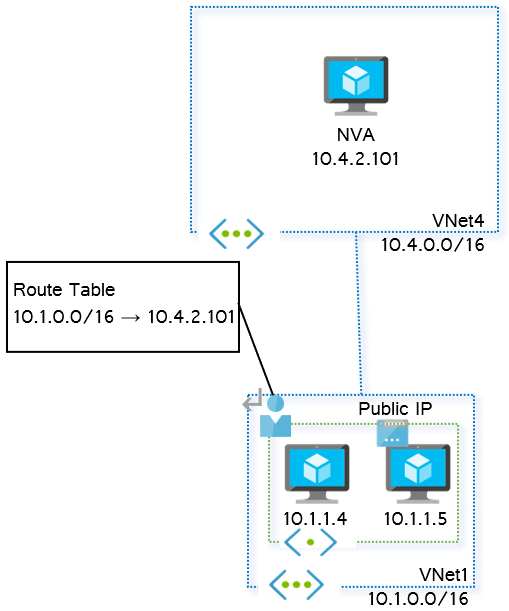

Step 10. Now we can tell Azure to send traffic from subnet1 in VNet1 to other private IP addresses over the hub vnet. You need a forwarding device in the hub VNet as next hop. The route table will send all traffic to the 10.x.x.x range (10.0.0.0/8) to the IP address of the Network Virtual Appliance (NVA) deployed in VNet4 (10.4.2.101):

az network route-table route create --address-prefix 10.0.0.0/8 --next-hop-ip-address 10.4.2.101 --next-hop-type VirtualAppliance --route-table-name vnet1-subnet1 -g $rg -n rfc1918-1

Let's look now at the effective routes for vm1's NIC:

az network nic show-effective-route-table -g $rg -n myVnet1-vm1-nic -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ---------------- -------------

Default Active 10.1.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Active 0.0.0.0/0 Internet

Default Active 127.0.0.0/8 None

Default Active 100.64.0.0/10 None

Default Active 172.16.0.0/12 None

Default Active 25.48.0.0/12 None

Default Active 25.4.0.0/14 None

Default Active 198.18.0.0/15 None

Default Active 157.59.0.0/16 None

Default Active 192.168.0.0/16 None

Default Active 25.33.0.0/16 None

Default Active 40.109.0.0/16 None

Default Active 104.147.0.0/16 None

Default Active 104.146.0.0/17 None

Default Active 40.108.0.0/17 None

Default Active 23.103.0.0/18 None

Default Active 25.41.0.0/20 None

Default Active 20.35.252.0/22 None

User Active 10.0.0.0/8 VirtualAppliance 10.4.2.101

- There is now a User route (also known as User-Defined Route or UDR), as opposed to the previous system routes (marked by the source Default). The system route for 10.0.0.0/8 with next hop None no longer appears, since the user route with the same prefix replaces it.

- This new user route has VirtualAppliance as next hop type, and the IP address that we defined in the previous command as next hop IP.

- We are not sending all the traffic (0.0.0.0/0) to the NVA, but only traffic addressed to internal networks (covered by the summary 10.0.0.0/8). The reason is that if we used 0.0.0.0/0, we would break the SSH connection to the virtual machine in VNet1 that is currently reached over the public Internet (the jump host).

Step 11. At this point, traffic between vnet1 and vnet2 will still not work, because our virtual machine in VNet2 doesn't know how to send the return traffic back (there is no route for 10.1.0.0/16 in its route table):

az network nic show-effective-route-table -g $rg -n myVnet2-vm1-nic -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- --------------- -------------

Default Active 10.2.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Active 0.0.0.0/0 Internet

Default Active 10.0.0.0/8 None

Default Active 127.0.0.0/8 None

Default Active 100.64.0.0/10 None

Default Active 172.16.0.0/12 None

Default Active 25.48.0.0/12 None

Default Active 25.4.0.0/14 None

Default Active 198.18.0.0/15 None

Default Active 157.59.0.0/16 None

Default Active 192.168.0.0/16 None

Default Active 25.33.0.0/16 None

Default Active 40.109.0.0/16 None

Default Active 104.147.0.0/16 None

Default Active 104.146.0.0/17 None

Default Active 40.108.0.0/17 None

Default Active 23.103.0.0/18 None

Default Active 25.41.0.0/20 None

Default Active 20.35.252.0/22 None

Let's now create a route in the route table for VNet2, and instruct the virtual machines in the subnet of VNet2 to send all their traffic to the Network Virtual Appliance in the hub VNet (VNet4):

az network route-table route create --address-prefix 0.0.0.0/0 --next-hop-ip-address 10.4.2.101 --next-hop-type VirtualAppliance -g $rg --route-table-name vnet2-subnet1 -n default

Note that, unlike in VNet1, in this case we are sending all the traffic (0.0.0.0/0) to the NVA, and not only traffic addressed to internal networks (10.0.0.0/8). This does not break any SSH session, because the virtual machine in VNet2 has no public IP address and is only reached over private IP addresses.

Step 12. We can verify what the route tables look like now, and how they have been programmed into the NICs associated with the subnets:

az network route-table route list -g $rg --route-table-name vnet1-subnet1 -o table

AddressPrefix HasBgpOverride Name NextHopIpAddress NextHopType ProvisioningState ResourceGroup

--------------- ---------------- --------- ------------------ ---------------- ------------------- ---------------

10.0.0.0/8 False rfc1918-1 10.4.2.101 VirtualAppliance Succeeded vnetTest

az network route-table route list -g $rg --route-table-name vnet2-subnet1 -o table

AddressPrefix HasBgpOverride Name NextHopIpAddress NextHopType ProvisioningState ResourceGroup

--------------- ---------------- ------- ------------------ ---------------- ------------------- ---------------

0.0.0.0/0 False default 10.4.2.101 VirtualAppliance Succeeded vnetTest

az network nic show-effective-route-table -n myVnet1-vm1-nic -g $rg -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ---------------- -------------

Default Active 10.1.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Active 0.0.0.0/0 Internet

Default Active 127.0.0.0/8 None

Default Active 100.64.0.0/10 None

Default Active 172.16.0.0/12 None

Default Active 25.48.0.0/12 None

Default Active 25.4.0.0/14 None

Default Active 198.18.0.0/15 None

Default Active 157.59.0.0/16 None

Default Active 192.168.0.0/16 None

Default Active 25.33.0.0/16 None

Default Active 40.109.0.0/16 None

Default Active 104.147.0.0/16 None

Default Active 104.146.0.0/17 None

Default Active 40.108.0.0/17 None

Default Active 23.103.0.0/18 None

Default Active 25.41.0.0/20 None

Default Active 20.35.252.0/22 None

User Active 10.0.0.0/8 VirtualAppliance 10.4.2.101

az network nic show-effective-route-table -n myVnet2-vm1-nic -g $rg -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ---------------- -------------

Default Active 10.2.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Invalid 0.0.0.0/0 Internet

User Active 0.0.0.0/0 VirtualAppliance 10.4.2.101

- When a user route and a system route have the same address prefix, the user route takes precedence. Consequently, in VNet2's subnet the system route for 0.0.0.0/0 with next hop Internet is now Invalid.

- You can also see that all the routes with next hop None have disappeared. The reason is that the traffic no longer goes to the Internet, so there is no reason for Azure to drop destination addresses that wouldn't be legitimate on the Internet.

Note: the previous command takes a few seconds to run, since it accesses the routing programmed into the NIC. If you cannot find the User route (at the bottom of the output), please wait a few seconds and issue the command again; it sometimes takes some time to program the NICs in Azure.
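Rather than reissuing the command by hand until the NIC is programmed, you could loop until the expected user route shows up. This sketch assumes the -o table layout shown above (Source, State, Address Prefix, Next Hop Type, Next Hop IP); has_active_user_route is a hypothetical helper built on awk.

```bash
# Succeed only if stdin (effective routes, -o table layout) contains an Active User
# route with the given prefix and next hop IP. has_active_user_route is hypothetical.
has_active_user_route() {
  # $1 = address prefix, $2 = next hop IP
  awk -v p="$1" -v ip="$2" '
    $1 == "User" && $2 == "Active" && $3 == p && $5 == ip { found = 1 }
    END { exit !found }'
}

# Example (needs a logged-in Azure CLI), retrying every 10 seconds:
# until az network nic show-effective-route-table -g "$rg" -n myVnet2-vm1-nic -o table |
#   has_active_user_route 0.0.0.0/0 10.4.2.101; do sleep 10; done
```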

Step 13. Now both virtual machines should be able to reach each other over SSH (it is normal if you are asked to confirm the identity of the VM). Note that ping between VNets does not work because, as we will see later, the firewall drops ICMP traffic:

lab-user@myVnet1-vm1:~$ ssh 10.2.1.4

...output omitted

lab-user@myVnet2-vm1:~$

Step 14. Does this work over global VNet peering? This is what we are going to test in this step. As you may have noticed already, VNet3 is in a different location than the rest of the VNets: West US 2 in the sample outputs of this guide (the actual region depends on the location2ary variable you set in Step 4).

az network vnet list --query '[].[name,location]' -o tsv

myVnet1 westeurope

myVnet2 westeurope

myVnet4 westeurope

myVnet5 westeurope

myVnet3 westus2

VNet peering is still configured, and it should be in a Connected state:

az network vnet peering list -o table --vnet-name myVnet4

AllowForwardedTraffic AllowGatewayTransit AllowVirtualNetworkAccess Name PeeringState ProvisioningState ResourceGroup UseRemoteGateways

----------------------- --------------------- --------------------------- ------------- -------------- ------------------- --------------- -------------------

False False True LinkTomyVnet3 Connected Succeeded vnetTest False

False False True LinkTomyVnet2 Connected Succeeded vnetTest False

False False True LinkTomyVnet1 Connected Succeeded vnetTest False

az network vnet peering list -o table --vnet-name myVnet3

AllowForwardedTraffic AllowGatewayTransit AllowVirtualNetworkAccess Name PeeringState ProvisioningState ResourceGroup UseRemoteGateways

----------------------- --------------------- --------------------------- ------------- -------------- ------------------- --------------- -------------------

True False True LinkTomyVnet4 Connected Succeeded vnetTest False

So we can just configure the routes between Vnet3 and the rest of the spokes, exactly as explained above for VNets in the same region. The following commands create a new route table for subnet1 in Vnet3 (note that it needs to be in the same region as the VNet), and route traffic to the rest of the spokes (Vnets 1 and 2) over the NVA:

# Routing for VNet3

az network route-table create --name vnet3-subnet1 -l $location2ary -g $rg

az network vnet subnet update -n myVnet3Subnet1 --vnet-name myVnet3 --route-table vnet3-subnet1 -g $rg

az network route-table route create --address-prefix 0.0.0.0/0 --next-hop-ip-address 10.4.2.101 --next-hop-type VirtualAppliance --route-table-name vnet3-subnet1 -n default -g $rg

If the previous commands worked, you should now see the new route in the route table, as well as in the effective routes of the NIC associated to the VM in Vnet3:

az network route-table route list -g $rg --route-table-name vnet3-subnet1 -o table

AddressPrefix HasBgpOverride Name NextHopIpAddress NextHopType ProvisioningState ResourceGroup

--------------- ---------------- ------- ------------------ ---------------- ------------------- ---------------

0.0.0.0/0 False default 10.4.2.101 VirtualAppliance Succeeded vnetTest

az network nic show-effective-route-table -n myVnet3-vm1-nic -g $rg -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ----------------- -------------

Default Active 10.3.0.0/16 VnetLocal

Default Invalid 0.0.0.0/0 Internet

User Active 0.0.0.0/0 VirtualAppliance 10.4.2.101

Default Active 10.4.0.0/16 VNetGlobalPeering

And now you should be able to connect from the jump host (VM2 in Vnet1) to the VM in Vnet3:

lab-user@myVnet1-vm2:~$ ssh 10.3.1.4

...Output omitted...

lab-user@10.3.1.4's password:

...Output omitted...

lab-user@myVnet3-vm1:~$

With spoke VNets peered to a central hub VNet, you need to steer the spoke traffic to an NVA in the hub (or to a VPN/ER Gateway, as we will see in a later lab) via User-Defined Routes (UDRs).

UDRs can be used to steer traffic between subnets through a firewall. The UDRs should point to the IP address of a firewall interface in a different subnet. This firewall can even be in a peered VNet, as demonstrated in this lab, where the firewall was located in the hub VNet.

You can verify the routes installed in the route table, as well as the routes programmed in the NICs of your VMs. Looking for discrepancies between the route table and the programmed routes can be extremely useful when troubleshooting routing problems.

You can use these concepts both in locally peered VNets (in the same region) and in globally peered VNets (in different regions). Note that this is the case because we are routing to an IP address associated to a VM (our first NVA in this example). As later labs will show, global peering will still work when routing to an IP address associated to a standard Load Balancer.

Some organizations wish to filter not only traffic between specific network segments, but traffic inside of a subnet as well, in order to reduce the probability of successful attacks spreading inside of an organization. This is what some in the industry know as 'microsegmentation'.

Figure 7. Intra-subnet NVA-based filtering, also known as “microsegmentation”

Step 1. To test the topology above, we will leverage our jump host, which happens to be the second VM in myVnet1Subnet1 (myVnet1-vm2). We need to instruct all VMs in this subnet to send local traffic to the NVAs as well. First, let us verify that both VMs can reach each other. Exit the sessions to myVnet2-vm1 and myVnet1-vm1 to come back to myVnet1-vm2, and verify that you can ping its neighbor VM at 10.1.1.4:

lab-user@myVnet2-vm1:~$ exit

logout

Connection to 10.2.1.4 closed.

lab-user@myVnet1-vm1:~$ exit

logout

Connection to 10.1.1.4 closed.

lab-user@myVnet1-vm2:~$ ping 10.1.1.4

PING 10.1.1.4 (10.1.1.4) 56(84) bytes of data.

64 bytes from 10.1.1.4: icmp_seq=1 ttl=64 time=0.612 ms

64 bytes from 10.1.1.4: icmp_seq=2 ttl=64 time=3.62 ms

64 bytes from 10.1.1.4: icmp_seq=3 ttl=64 time=2.71 ms

64 bytes from 10.1.1.4: icmp_seq=4 ttl=64 time=0.748 ms

^C

--- 10.1.1.4 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3002ms

rtt min/avg/max/mdev = 0.612/1.924/3.628/1.287 ms

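lab-user@myVnet1-vm2:~$

Why does ping work, even though the route table of this subnet sends 10.0.0.0/8 to the NVA, which drops ICMP? If we look at the effective routes in either of the two VMs, the route selected for any destination inside of the same subnet is the one for 10.1.0.0/16 with next hop VnetLocal, because it is more specific (it has a longer prefix) than the 10.0.0.0/8 user route: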

az network nic show-effective-route-table -n myVnet1-vm1-nic -g $rg -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ---------------- -------------

Default Active 10.1.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Active 0.0.0.0/0 Internet

Default Active 127.0.0.0/8 None

...Output omitted...

Default Active 20.35.252.0/22 None

User Active 10.0.0.0/8 VirtualAppliance 10.4.2.101

Step 2. We want to override that system route so that traffic flowing between the two VMs, even if they are in the same subnet, is sent to the firewall in the hub VNet. This can be easily done by adding a User-Defined Route for the subnet prefix (10.1.1.0/24) to the corresponding route table: it is more specific than the 10.1.0.0/16 system route, so it wins the longest prefix match (and for identical prefixes, as we saw in the previous lab, user routes take precedence over system routes). Go back to your Azure CLI command prompt, and type this command:

az network route-table route create --address-prefix 10.1.1.0/24 --next-hop-ip-address 10.4.2.101 --next-hop-type VirtualAppliance --route-table-name vnet1-subnet1 -n vnet1-subnet1 -g $rg

{

"addressPrefix": "10.1.1.0/24",

"etag": "W/\"...\"",

"id": "/subscriptions/.../resourceGroups/vnetTest/providers/Microsoft.Network/routeTables/vnet1-subnet1/routes/vnet1-subnet1",

"name": "vnet1-subnet1",

"nextHopIpAddress": "10.4.2.101",

"nextHopType": "VirtualAppliance",

"provisioningState": "Succeeded",

"resourceGroup": "vnetTest"

}

Step 3. If you go back to the terminal with the SSH connection to the jump host and restart the ping, you will notice that after some seconds (the time it takes Azure to program the new route in the NICs of the VMs) ping stops working, because traffic now goes through the firewalls, which are configured to drop ICMP packets.

lab-user@myVnet1-vm2:~$ ping 10.1.1.4

PING 10.1.1.4 (10.1.1.4) 56(84) bytes of data.

64 bytes from 10.1.1.4: icmp_seq=1 ttl=64 time=2.22 ms

64 bytes from 10.1.1.4: icmp_seq=2 ttl=64 time=0.847 ms

...

64 bytes from 10.1.1.4: icmp_seq=30 ttl=64 time=0.762 ms

64 bytes from 10.1.1.4: icmp_seq=31 ttl=64 time=0.689 ms

64 bytes from 10.1.1.4: icmp_seq=32 ttl=64 time=3.00 ms

^C

--- 10.1.1.4 ping statistics ---

98 packets transmitted, 32 received, 67% packet loss, time 97132ms

rtt min/avg/max/mdev = 0.620/1.160/4.284/0.766 ms

lab-user@myVnet1-vm2:~$

Step 4. To verify that routing is still correct, you can now try SSH instead of ping. The fact that SSH works but ping does not demonstrates that the traffic now goes through the NVA (configured to allow SSH, but to drop ICMP packets).

lab-user@myVnet1-vm2:~$ ssh 10.1.1.4

...Output omitted...

lab-user@myVnet1-vm1:~$

UDRs can be used not only to steer traffic between subnets through a firewall, but also to steer traffic between hosts inside of a single subnet through a firewall. This is because Azure routing is not performed at the subnet level, as in traditional networks, but at the NIC level, which enables a very high degree of granularity.

As a side remark, for these microsegmentation designs to work, the firewall needs to be in a separate subnet from the VMs themselves; otherwise the UDR will cause a routing loop.

NOTE: The mechanism to send inter-subnet traffic through the NVA would be exactly the same. The only difference is that, if you want intra-subnet traffic to be routed directly, you would have to add another route to the route table, with the subnet prefix and next hop type VnetLocal. This exercise is left to the reader (provisioning an additional VM in one of the other networks would be required for testing).
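The route selection behind Steps 1 and 2 can be summarized as follows: the longest matching prefix wins, and the route source (user, BGP, system) only breaks ties between identical prefixes. The Python sketch below models that rule under simplifying assumptions (it ignores the route state and special next hops). The Route class, select_route() and the SOURCE_RANK table are hypothetical illustrations, not an Azure API.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

# Tie-breaker for identical prefixes: user-defined routes first, then BGP routes
# learned through a virtual network gateway, then system (Default) routes.
SOURCE_RANK = {"User": 0, "VirtualNetworkGateway": 1, "Default": 2}


@dataclass
class Route:
    prefix: str
    next_hop_type: str
    source: str = "Default"
    next_hop_ip: Optional[str] = None


def select_route(routes: List[Route], destination: str) -> Optional[Route]:
    """Pick the route a NIC would use for `destination` (simplified model)."""
    dst = ipaddress.ip_address(destination)
    matches = [r for r in routes if dst in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    # Longest prefix first; the source rank only matters for equal prefixes.
    return min(matches, key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen,
                                       SOURCE_RANK[r.source]))


# Effective routes of myVnet1-vm1 before Step 2 (abridged)
vnet1_routes = [
    Route("10.1.0.0/16", "VnetLocal"),
    Route("10.4.0.0/16", "VNetPeering"),
    Route("0.0.0.0/0", "Internet"),
    Route("10.0.0.0/8", "VirtualAppliance", "User", "10.4.2.101"),
]
print(select_route(vnet1_routes, "10.1.1.4").next_hop_type)  # VnetLocal
print(select_route(vnet1_routes, "10.2.1.4").next_hop_type)  # VirtualAppliance

# Step 2 adds a more specific user route for the subnet itself
vnet1_routes.append(Route("10.1.1.0/24", "VirtualAppliance", "User", "10.4.2.101"))
print(select_route(vnet1_routes, "10.1.1.4").next_hop_type)  # VirtualAppliance
```

The same model covers the case described in the NOTE: a user route for the subnet prefix with next hop type VnetLocal, next to a 10.1.0.0/16 route pointing to the NVA, would win for intra-subnet destinations and keep that traffic direct.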

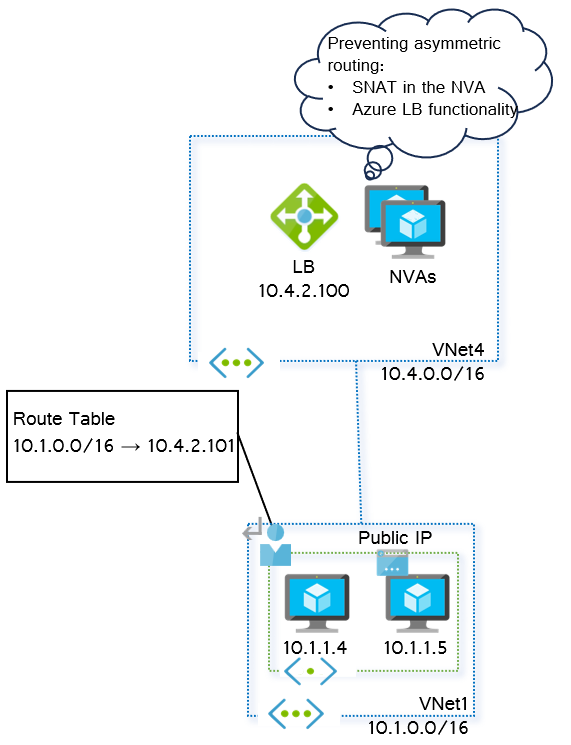

If all traffic is going through a single Network Virtual Appliance (NVA), chances are that it will not scale, and if that NVA suffers a problem, all of the traffic stops flowing. While you might solve the scalability problem by resizing the VM where the NVA runs, you should have at least two NVA instances for high availability. Besides, scaling out provides a more linear way of achieving additional performance, potentially even increasing and decreasing the number of NVAs automatically via scale sets.

In this lab we will use two NVAs and will send the traffic over both of them by means of an Azure Load Balancer. Since return traffic must flow through the same NVA (firewalling is a stateful operation and asymmetric routing would break it), the firewalls will source-NAT traffic to their individual addresses.

Figure 8. Load balancer for NVA scale out

Note that no clustering function is required in the firewalls, each firewall is completely unaware of the others.

A different model that we are not going to explore in this lab is based on automatic modification of UDRs (User-Defined Routes). The concept is simple: if you have the setup from Lab 3, you have UDRs pointing to an NVA. If that NVA goes down, an automatic mechanism could change the UDRs so that they point to a second NVA. Since the Azure Load Balancer started supporting the HA Ports feature, which we will explore later in this lab, most NVA vendors have moved away from the UDR-based HA model, which is why we will not explore it here. Note, however, that with recent innovations in Azure SDN the time it takes to change routes and/or move IP addresses across virtual machines has been considerably reduced.

Step 1. First, we will create a NAT gateway for outbound communication from the NVAs. This is the preferred method for egress connectivity to the public Internet, since it prevents SNAT port exhaustion (see Use SNAT for outbound connections):

az network public-ip create -g $rg -n natgw-pip --sku standard --allocation-method static -l $location

az network nat gateway create -n mynatgw -g $rg -l $location --public-ip-addresses natgw-pip

az network vnet subnet update -n myVnet4Subnet2 --vnet-name myVnet4 --nat-gateway mynatgw -g $rg

az network vnet subnet update -n myVnet4Subnet3 --vnet-name myVnet4 --nat-gateway mynatgw -g $rg

If you check your egress IP address from any of the NVAs, it should be the NAT gateway's public IP:

az network public-ip show -n natgw-pip -g $rg --query ipAddress -o tsv

20.1.207.13

lab-user@linuxnva-2:~$ curl ifconfig.me

20.1.207.13

Step 2. Go to your terminal window to verify that both an internal and an external load balancer have been deployed (each of our NVAs has two NICs). In this lab we will only use the internal load balancer:

az network lb list -g $rg -o table

Location Name ProvisioningState ResourceGroup

---------- ------- ------------------- ---------------

westeurope linuxnva-slb-ext Succeeded vnetTest

westeurope linuxnva-slb-int Succeeded vnetTest

Step 3. We can inspect the backends configured in the load balancer. For that, we first need the name of the backend pool of the load balancer:

az network lb show -n linuxnva-slb-int -g $rg --query 'backendAddressPools[].name' -o tsv

linuxnva-slbBackend-int

az network lb address-pool address list --pool-name linuxnva-slbBackend-int --lb-name linuxnva-slb-int -g $rg -o table

(The previous command should return no output.)

Step 4. Now that we know the backend pool where we want to add our NVAs, we can use this command to do so. Intuitively you might think that this process is performed at the LB level, but it is actually a NIC operation. In other words, you do not add the NIC to the LB backend pool, but the backend pool to the NIC:

az network nic ip-config address-pool add --ip-config-name linuxnva-1-nic0-ipConfig --nic-name linuxnva-1-nic0 --address-pool linuxnva-slbBackend-int --lb-name linuxnva-slb-int -g $rg

And a similar command for our second Linux-based NVA:

az network nic ip-config address-pool add --ip-config-name linuxnva-2-nic0-ipConfig --nic-name linuxnva-2-nic0 --address-pool linuxnva-slbBackend-int --lb-name linuxnva-slb-int -g $rg

Finally, we can add the NVAs to the backend pool of the public load balancer too:

az network nic ip-config address-pool add --ip-config-name linuxnva-1-nic0-ipConfig --nic-name linuxnva-1-nic0 --address-pool linuxnva-slbBackend-ext --lb-name linuxnva-slb-ext -g $rg

az network nic ip-config address-pool add --ip-config-name linuxnva-2-nic0-ipConfig --nic-name linuxnva-2-nic0 --address-pool linuxnva-slbBackend-ext --lb-name linuxnva-slb-ext -g $rg

Note: the previous commands will take some minutes to run.

You can verify with these commands that the pools of the internal and public load balancers have been successfully added to both NICs:

az network lb address-pool address list --pool-name linuxnva-slbBackend-int --lb-name linuxnva-slb-int -g $rg -o table

Name

------------------------------------------------

vnetTest_linuxnva-1-nic0linuxnva-1-nic0-ipConfig

vnetTest_linuxnva-2-nic0linuxnva-2-nic0-ipConfig

az network lb address-pool address list --pool-name linuxnva-slbBackend-ext --lb-name linuxnva-slb-ext -g $rg -o table

Name

------------------------------------------------

vnetTest_linuxnva-1-nic0linuxnva-1-nic0-ipConfig

vnetTest_linuxnva-2-nic0linuxnva-2-nic0-ipConfig

Step 5. Let us verify the rules configured in the load balancer. As you can see, there is a load balancing rule for ALL TCP/UDP ports (Protocol is All, and the frontend and backend ports are 0), so it will forward all TCP/UDP traffic to the backends:

az network lb rule list --lb-name linuxnva-slb-int -g $rg -o table

BackendPort FrontendPort LoadDistribution Name Protocol

------------- -------------- ------------------ ----------- --------

0 0 Default HARule All

Note: this type of rule for all ports (HA Ports) is only supported by Standard Load Balancers. To verify which SKU the load balancers have, you can issue this command:

az network lb list -g $rg --query '[].[name,sku.name]' -o table

Column1 Column2

---------------- ---------

linuxnva-slb-ext Standard

linuxnva-slb-int Standard

Step 6. Verify with the following command the frontend IP address that the load balancer has been preconfigured with (by the ARM template in the very first lab):

az network lb frontend-ip list --lb-name linuxnva-slb-int -g $rg -o table

Name PrivateIpAddress PrivateIpAllocationMethod

---------------- ------------------ -------------------------

myFrontendConfig 10.4.2.100 Static

Note: some columns have been removed from the previous output for simplicity.

Step 7. Verify that the NVAs are answering the load balancer's health check probes by looking at the load balancer metrics. You can access this information from two places:

- Go to the Metrics blade of the Load Balancer, and select the metric "Health Probe Status". Optionally, you can reduce the time span down to 30 minutes, and apply splitting per backend IP address.

- Go to the Insights blade of the Load Balancer, select "View detailed metrics", and in the "Frontend and backend availability" you will find the chart "Health Probe Status by Backend IP Address".

For example, the following figure shows a case where initially only one of the NVAs was responding to the health check, and after fixing the issue the probe status went up to 100%:

Figure 9. Load balancer health metrics

If you find that the NVAs in your lab environment are not answering the health check probes of the load balancer, it could be because the second interface of the NVA has a higher priority (lower metric). You can fix this by issuing these commands, which assign the default metric (100) to eth0 and a worse metric (200) to eth1:

sudo ifmetric eth0 100

sudo ifmetric eth1 200

Step 8. We must change the next hop of the UDRs that are required for the communication, pointing them at the virtual IP address of the load balancer (10.4.2.100). Remember that we configured these routes to point to 10.4.2.101, the IP address of one of the firewalls. We will start with the route for microsegmentation, in order to test the connection depicted in the picture above:

az network route-table route update -g $rg --route-table-name vnet1-subnet1 -n vnet1-subnet1 --next-hop-ip-address 10.4.2.100

At this point, communication between the VMs should be possible, flowing through the NVA.

If you go back to the SSH session to myVnet1-vm2, you can verify that ping to the neighbor VM in the same subnet still does not work (meaning that traffic is being successfully dropped by the firewall), but SSH does:

lab-user@myVnet1-vm2:~$ ping 10.1.1.4

PING 10.1.1.4 (10.1.1.4) 56(84) bytes of data.

^C

--- 10.1.1.4 ping statistics ---

4 packets transmitted, 0 received, 100% packet loss, time 1006ms

lab-user@myVnet1-vm2:~$ ssh 10.1.1.4

lab-user@10.1.1.4's password:

Welcome to Ubuntu 16.04.1 LTS (GNU/Linux 4.4.0-47-generic x86_64)

lab-user@myVnet1-vm1:~$

Step 9. Observe the source IP address that the destination machine sees: it is not the jump host (10.1.1.5), but one of the NVAs. This is due to the source NAT performed by the firewalls, which makes sure that return traffic from myVnet1-vm1 goes through the same NVA as well:

lab-user@myVnet1-vm1:~$ who

lab-user pts/0 2017-03-23 23:41 (10.4.2.101)

Note: if you see multiple SSH sessions, you might want to kill them so that you only have one. You can get the process IDs of the SSH sessions with the command ps -ef | grep ssh, and you can kill a specific process ID with the command kill -9 process_id_to_be_killed. Obviously, if you happen to kill the session over which you are currently connected, you will have to reconnect.

Step 10. This is expected, since our firewalls are configured to source-NAT the connections leaving through that interface. Now open another SSH window/panel, and connect from the jump host to the NVA whose IP address you saw in the output of the who command (10.4.2.101 in this example). Remember that the username is still 'lab-user' and the password 'Microsoft123!' (without the quotes). After connecting to the firewall, you can display the NAT configuration with the following command:

lab-user@myVnet1-vm2:~$ ssh 10.4.2.101

lab-user@10.4.2.101's password:

...Output omitted...

lab-user@linuxnva-1:~$

lab-user@linuxnva-1:~$ sudo iptables -L -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- anywhere anywhere

MASQUERADE all -- anywhere anywhere

Note: the Linux machines that we use as firewalls in this lab use the Linux package "iptables" to work as a firewall. An iptables tutorial is out of the scope of this lab guide. Suffice it to say that the keyword MASQUERADE means to replace the source IP address of packets with the address of the outgoing interface; in other words, source NAT. There are two MASQUERADE entries, one for each interface of the NVA. You can see which interface each entry refers to with the command sudo iptables -vL -t nat.
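To make the effect of MASQUERADE more concrete, here is a toy Python model of what the NVA does to a forwarded SSH connection. It assumes that the source port is kept unchanged, which Linux usually does when the port is free. The class and method names and the client port 51000 are hypothetical, and real Linux connection tracking is far more involved. Note how myVnet1-vm1 replies to the NVA's own IP (10.4.2.101). In this lab, that address is reached through the 10.4.0.0/16 peering route, which is more specific than the 10.0.0.0/8 UDR, so the reply returns to the same NVA instead of going through the load balancer.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# (src_ip, src_port, dst_ip, dst_port, protocol)
Flow = Tuple[str, int, str, int, str]


@dataclass
class MasqueradingNva:
    """Toy model of an NVA that source-NATs (masquerades) forwarded flows."""
    iface_ip: str
    # translated flow (as seen by the destination) -> original flow
    conntrack: Dict[Flow, Flow] = field(default_factory=dict)

    def forward(self, flow: Flow) -> Flow:
        """Rewrite the source IP to the NVA's own address and remember the flow."""
        _src_ip, src_port, dst_ip, dst_port, proto = flow
        translated = (self.iface_ip, src_port, dst_ip, dst_port, proto)
        self.conntrack[translated] = flow
        return translated

    def reply(self, reply_flow: Flow) -> Flow:
        """Undo the translation for a reply packet sent back to the NVA."""
        src_ip, src_port, dst_ip, dst_port, proto = reply_flow
        original = self.conntrack[(dst_ip, dst_port, src_ip, src_port, proto)]
        orig_src_ip, orig_src_port, _, _, _ = original
        return (src_ip, src_port, orig_src_ip, orig_src_port, proto)


nva1 = MasqueradingNva("10.4.2.101")
# SSH from the jump host (10.1.1.5) to myVnet1-vm1 (10.1.1.4)
to_vm1 = nva1.forward(("10.1.1.5", 51000, "10.1.1.4", 22, "tcp"))
print(to_vm1)  # ('10.4.2.101', 51000, '10.1.1.4', 22, 'tcp')
# myVnet1-vm1 answers to the address it saw, i.e. the NVA
print(nva1.reply(("10.1.1.4", 22, "10.4.2.101", 51000, "tcp")))
# ('10.1.1.4', 22, '10.1.1.5', 51000, 'tcp')
```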

Step 11. We will simulate a failure of the NVA that the connection is going through (in this case 10.4.2.101, linuxnva-1). First of all, verify that both ports 1138 (used by the internal load balancer of this lab scenario for the health check probes) and 1139 (which could be used by the external load balancer for the health check probes) are open:

lab-user@linuxnva-1:~$ nc -zv -w 1 127.0.0.1 1138-1139

Connection to 127.0.0.1 1138 port [tcp/*] succeeded!

Connection to 127.0.0.1 1139 port [tcp/*] succeeded!

Note: in this example we use the Linux command nc (short for netcat) to open TCP connections to those two ports.

The process answering TCP requests on those ports is netcat (shown as 'nc'), as you can see with netstat (part of the net-tools package, which you may need to install first):

lab-user@linuxnva-1:~$ sudo apt update && sudo apt install -y net-tools

...Output omitted...

lab-user@linuxnva-1:~$ sudo netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program

tcp 0 0 0.0.0.0:1138 0.0.0.0:* LISTEN 1783/nc

tcp 0 0 0.0.0.0:1139 0.0.0.0:* LISTEN 1782/nc

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1587/sshd

tcp6 0 0 :::80 :::* LISTEN 11730/apache2

tcp6 0 0 :::22 :::* LISTEN 1587/sshd

Verify that the internal load balancer is actually using a TCP probe on port 1138:

az network lb probe list --lb-name linuxnva-slb-int -g $rg -o table

IntervalInSeconds Name NumberOfProbes Port Protocol ProvisioningState ResourceGroup

------------------- ------- ---------------- ------ ---------- ------------------- ---------------

15 myProbe 2 1138 Tcp Succeeded vnetTest

Both NVA instances should be actively responding to the health check probes. If you go to the Azure portal, you can verify in the Load Balancer's metrics that 100% of the backends are healthy, as described in Step 7.
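The TCP probe configured here (myProbe on port 1138) considers a backend healthy when a TCP handshake with that port completes, which is also what the nc -zv -w 1 test in Step 11 checks. A minimal Python equivalent of that check could look like this. It assumes you run it on the NVA itself, and tcp_probe() is a hypothetical helper, not an Azure tool.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP handshake with host:port completes within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


if __name__ == "__main__":
    # Same check as `nc -zv -w 1 127.0.0.1 1138-1139`
    for port in (1138, 1139):
        state = "open" if tcp_probe("127.0.0.1", port) else "closed"
        print(f"127.0.0.1:{port} {state}")
```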

Step 12. Now we want to take the firewall out of the load balancer rotation. There are many ways to do that, but in this lab we will do it with Network Security Groups (NSGs), so that we don't need to shut down interfaces or virtual machines, since we control the NSG from outside of the VM.
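Why does an NSG rule take the NVA out of rotation? NSG rules are evaluated in ascending priority order, and the first matching rule wins. The load balancer probes (sourced from the AzureLoadBalancer service tag) are normally allowed by the default rule AllowAzureLoadBalancerInBound at priority 65001. A custom Deny rule with a lower priority number, such as the deny_all_in rule with priority 100 used in this step, therefore blocks them. The Python sketch below models only this ordering; matching on ports, protocols and destinations is deliberately omitted. NsgRule and evaluate_inbound are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NsgRule:
    name: str
    priority: int   # lower number = evaluated first
    access: str     # "Allow" or "Deny"
    source: str     # "*" or a service tag such as "AzureLoadBalancer"


# Default inbound rules present in every NSG
DEFAULT_INBOUND = [
    NsgRule("AllowVnetInBound", 65000, "Allow", "VirtualNetwork"),
    NsgRule("AllowAzureLoadBalancerInBound", 65001, "Allow", "AzureLoadBalancer"),
    NsgRule("DenyAllInBound", 65500, "Deny", "*"),
]


def evaluate_inbound(rules: List[NsgRule], source_tag: str) -> Optional[NsgRule]:
    """Return the first rule (by ascending priority) whose source matches."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.source in ("*", source_tag):
            return rule
    return None


probe_source = "AzureLoadBalancer"
print(evaluate_inbound(DEFAULT_INBOUND, probe_source).name)
# AllowAzureLoadBalancerInBound
with_deny = DEFAULT_INBOUND + [NsgRule("deny_all_in", 100, "Deny", "*")]
print(evaluate_inbound(with_deny, probe_source).name)
# deny_all_in
```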

If you go back to your Azure CLI terminal, you can see that there are some NSGs defined in the resource group:

az network nsg list -g $rg -o table

Location Name ProvisioningState ResourceGroup ResourceGuid

---------- ------------------- ------------------- --------------- ------------------------------------

westeurope linuxnva-1-nic0-nsg Succeeded vnetTest e506ae9b-156d-4dd0-977b-2678691031d4

westeurope linuxnva-2-nic0-nsg Succeeded vnetTest 14217c8a-c19e-4151-a79c-a6ca623b9ef2

If you examine the NSG for NVA1 (linuxnva-1-nic0-nsg), you will see that it only contains the default rules. The following command does not display default rules unless you add the --include-default flag, so its output should be empty (the NSG for NVA2 should be identical):

az network nsg rule list --nsg-name linuxnva-1-nic0-nsg -g $rg -o table

Now we can add a new rule that will prevent ALL traffic from entering the VM, including the Load Balancer probes. As a consequence, all traffic will go through the other NVA:

az network nsg rule create --nsg-name linuxnva-1-nic0-nsg -g $rg -n deny_all_in --priority 100 --access Deny --direction Inbound --protocol "*" --source-address-prefixes "*" --source-port-ranges "*" --destination-address-prefixes "*" --destination-port-ranges "*"

Now the rules in your NSG should look like this:

az network nsg rule list --nsg-name linuxnva-1-nic0-nsg -g $rg -o table

Name RscGroup Prio SourcePort SourceAddress SourceASG Access Prot Direction DestPort DestAddres DestASG

----------- -------- ---- ---------- ------------- --------- ------ ---- --------- -------- ---------- -------

deny_all_in vnetTest 100 * * None Deny * Inbound * * None

If you now initiate another SSH connection to myVnet1-vm1 from the jump host, you will see that you are always going through the other NVA (in this example, nva-2). Note that it takes some time (determined by the probe interval and the number of probes; for the probe in this lab, 2 × 15 seconds) until the load balancer decides to take the NVA out of rotation.

lab-user@myVnet1-vm2:~$ ssh 10.1.1.4

lab-user@10.1.1.4's password:

...

lab-user@myVnet1-vm1:~$

lab-user@myVnet1-vm1:~$ who

lab-user pts/0 2017-06-29 21:21 (10.4.2.101)

lab-user pts/1 2017-06-29 21:39 (10.4.2.102)

lab-user@myVnet1-vm1:~$

Note: you might still see the previous connection going through 10.4.2.101, as in the previous example.
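The time it takes to take the NVA out of rotation (probe interval × number of probes) also depends on when the failure happens relative to the probe schedule. The following Python sketch is a simplified model and not Azure's actual implementation. It assumes that the load balancer takes a backend out of rotation after `threshold` consecutive failed probes sent every `interval` seconds. With the values of myProbe (15 seconds, 2 probes), the backend is removed between 15 and just under 30 seconds after the failure.

```python
import math


def time_marked_down(fail_start: float, interval: float = 15.0,
                     threshold: int = 2, first_probe: float = 0.0) -> float:
    """Time at which a backend is taken out of rotation (simplified model).

    Probes are sent at first_probe, first_probe + interval, ...; the backend
    is marked down when `threshold` consecutive probes have failed.
    """
    # Index of the first probe sent at or after the failure starts
    k = max(0, math.ceil((fail_start - first_probe) / interval))
    first_failed = first_probe + k * interval
    return first_failed + (threshold - 1) * interval


for fail_at in (0.0, 0.1, 7.5):
    delay = time_marked_down(fail_at) - fail_at
    print(f"failure at t={fail_at}s -> out of rotation {delay:.1f}s later")
```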

Step 13. Let us confirm that the load balancer now only has one healthy NVA, following the process described in https://docs.microsoft.com/azure/load-balancer/load-balancer-standard-diagnostics. In the Azure Portal, navigate to the internal load balancer in the resource group vnetTest, and under Metrics (in preview at the time of this writing) select Health Probe Status. You should see something like the figure below, where only half of the probes are successful.

Figure 10. Load balancer health metrics showing one NVA down (10.4.2.101)

Note: the oscillations around 50% are caused by the skew between the probe intervals of the two NVAs: in some monitoring intervals there are more probes for nva-1, in others more probes for nva-2. Use the filtering mechanism of the graph with Backend IP Address as the filtering dimension (as in the figure above) to verify that 0% of the probes to nva-1 are successful, but 100% of the probes to nva-2 are.

Step 14. To be completely sure of our setup, let us bring the second firewall out of rotation too:

az network nsg rule create --nsg-name linuxnva-2-nic0-nsg -g $rg -n deny_all_in --priority 100 --access Deny --direction Inbound --protocol "*" --source-address-prefixes "*" --source-port-ranges "*" --destination-address-prefixes "*" --destination-port-ranges "*"

...Output omitted...

az network nsg rule list --nsg-name linuxnva-2-nic0-nsg -g $rg -o table

Name RscGroup Prio SourcePort SourceAddress SourceASG Access Prot Direction DestPort DestAddres DestASG

----------- -------- ---- ---------- ------------- --------- ------ ---- --------- -------- ---------- -------

deny_all_in vnetTest 100 * * None Deny * Inbound * * None

If you had any SSH sessions open from the jump host to any other VM, they are now broken and will have to time out. In that case, you may want to start another SSH connection to your jump host. If you try to SSH to vm1 (or to anything else going through the firewalls), it should fail (note that it takes a few seconds to program the NSGs into the NICs and for the load balancer probes to fail, so wait about 30 seconds before trying the following command).

lab-user@myVnet1-vm2:~$ ssh 10.1.1.4

ssh: connect to host 10.1.1.4 port 22: Connection timed out

Step 15. To repair our lab, we just need to remove the NSG rules, so that the Azure Load Balancer discovers the firewalls again:

az network nsg rule delete -n deny_all_in --nsg-name linuxnva-1-nic0-nsg -g $rg

az network nsg rule delete -n deny_all_in --nsg-name linuxnva-2-nic0-nsg -g $rg

After some seconds, SSH should be working just fine once more. You can verify that the probe health is back to 100% in the Azure Portal.

Step 16. We still need to change the rest of the routes, which are currently hard-wired to a single NVA, so that they leverage the Azure load balancer:

az network route-table route update --route-table-name vnet1-subnet1 -g $rg -n rfc1918-1 --next-hop-ip-address 10.4.2.100 --next-hop-type VirtualAppliance

az network route-table route update --route-table-name vnet2-subnet1 -g $rg -n default --next-hop-ip-address 10.4.2.100 --next-hop-type VirtualAppliance

az network route-table route update --route-table-name vnet3-subnet1 -g $rg -n default --next-hop-ip-address 10.4.2.100 --next-hop-type VirtualAppliance

After a couple of seconds, check the effective route table of the NIC belonging to the VM in Vnet3:

az network nic show-effective-route-table -n myVnet3-vm1-nic -g $rg -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ----------------- -------------

Default Active 10.3.0.0/16 VnetLocal

Default Invalid 0.0.0.0/0 Internet

User Active 0.0.0.0/0 VirtualAppliance 10.4.2.100

Default Active 10.4.0.0/16 VNetGlobalPeering

And connectivity will still work:

lab-user@myVnet1-vm2:~$ ssh 10.3.1.4

...Output omitted...

lab-user@10.3.1.4's password:

Welcome to Ubuntu 16.04.5 LTS (GNU/Linux 4.15.0-1021-azure x86_64)

...Output omitted...

lab-user@myVnet3-vm1:~$

NVAs can be load balanced with the help of an Azure Load Balancer. UDRs configured in each subnet will essentially point not to the IP address of an NVA, but to a virtual IP address configured in the LB.

The HA Ports feature of the Azure Standard Load Balancer allows configuring Layer 3 load balancing rules, that is, rules that forward all TCP/UDP ports to the NVAs. This is today the way most modern HA designs work, superseding designs based on automatic UDR modification.

Another problem that needs to be solved is return traffic. With stateful network devices such as firewalls, you need to prevent asymmetric routing. In other words, source-to-destination traffic needs to go through the same firewall as destination-to-source traffic (for any given TCP or UDP flow). This can be achieved by source-NATting the traffic at the NVAs, so that the destination always sends the return traffic back to the NVA that translated it.

Lastly, we have verified that this construct works for local and global peerings, when using the standard Azure Load Balancer (as opposed to the basic SKU).

As we saw in the previous lab, the NVAs were source-NATting (or masquerading, in iptables parlance) traffic so that return traffic would always go through the same firewall that inspected the first packet. However, in some situations source NAT is not desirable, so this lab will have a look at a variation of the previous setup without source NAT.
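In Step 1, the unconditional MASQUERADE rule on eth0 is replaced with one that skips destinations in 10.0.0.0/8 (iptables options -o eth0 ! -d 10.0.0.0/8 -j MASQUERADE). As a result, internal flows keep their original source address, while traffic to other destinations leaving through eth0 is still translated. The following Python sketch models how the POSTROUTING rules shown in this lab are matched: the first matching rule wins, and otherwise no NAT is applied. The rule dictionaries and is_masqueraded() are hypothetical, and they only cover the -o and ! -d options used here.

```python
import ipaddress
from typing import Dict, List, Optional

# Each rule mirrors one iptables POSTROUTING entry with target MASQUERADE:
#   "out":     value of -o (outgoing interface)
#   "not_dst": value of ! -d (destination excluded from the rule), optional
Rule = Dict[str, Optional[str]]

BEFORE: List[Rule] = [{"out": "eth0"}, {"out": "eth1"}]
AFTER: List[Rule] = [{"out": "eth1"}, {"out": "eth0", "not_dst": "10.0.0.0/8"}]


def is_masqueraded(rules: List[Rule], out_iface: str, dst_ip: str) -> bool:
    """Return True if the first matching rule would source-NAT the packet."""
    dst = ipaddress.ip_address(dst_ip)
    for rule in rules:
        if rule.get("out") != out_iface:
            continue
        excluded = rule.get("not_dst")
        if excluded and dst in ipaddress.ip_network(excluded):
            continue
        return True  # MASQUERADE is a terminating target
    return False     # chain policy ACCEPT: packet leaves untranslated


for label, rules in (("before", BEFORE), ("after", AFTER)):
    print(label,
          is_masqueraded(rules, "eth0", "10.1.1.4"),      # internal VM
          is_masqueraded(rules, "eth0", "203.0.113.10"))  # documentation address
```

The loop prints True True for the original rules and False True after the change: only traffic to internal destinations stops being source-NATted.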

Step 1. If you go to the terminal window with your jump host, you can connect to the NVAs and disable source NAT (masquerade) for traffic to internal destinations (10.0.0.0/8). Note that there are two masquerade entries, one for each interface in the NVA; we will only modify the one for eth0. The following example shows the process in nva-1; please repeat it in nva-2 (10.4.2.102).

lab-user@myVnet1-vm2:~$ ssh 10.4.2.101

lab-user@10.4.2.101's password:

...Output omitted...

lab-user@linuxnva-1:~$

lab-user@linuxnva-1:~$ sudo iptables -vL -t nat

Chain PREROUTING (policy ACCEPT 7655 packets, 547K bytes)

pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 204 packets, 10628 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 8524 packets, 523K bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

10517 731K MASQUERADE all -- any eth0 anywhere anywhere

5419 325K MASQUERADE all -- any eth1 anywhere anywhere

lab-user@linuxnva-1:~$

lab-user@linuxnva-1:~$ sudo iptables -t nat -D POSTROUTING -o eth0 -j MASQUERADE

lab-user@linuxnva-1:~$ sudo iptables -t nat -A POSTROUTING -o eth0 ! -d 10.0.0.0/255.0.0.0 -j MASQUERADE

lab-user@linuxnva-1:~$

lab-user@linuxnva-1:~$ sudo iptables -vL -t nat

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 3 packets, 180 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 3 packets, 180 bytes)

pkts bytes target prot opt in out source destination

5419 325K MASQUERADE all -- any eth1 anywhere anywhere

405 325K MASQUERADE all -- any eth0 anywhere !10.0.0.0/8

Step 2. Now you can go back to the jump host, connect to vm1, and verify that the source IP address has not been source-NATted:

lab-user@myVnet1-vm2:~$ ssh 10.1.1.4

lab-user@10.1.1.4's password:

...Output omitted...

lab-user@myVnet1-vm1:~$

lab-user@myVnet1-vm1:~$ who

lab-user pts/0 2018-07-12 11:56 (10.1.1.5)

Step 3. Why is this working? Because the Azure LB distributes traffic symmetrically: if traffic from VM1 to VM2 is load balanced to NVA1, return traffic from VM2 to VM1 will be load balanced to NVA1 as well. This is the case for the default load balancing algorithm, which uses the protocol, the source/destination IPs and the source/destination ports, and is often called 5-tuple hash.
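Azure does not publish its hash function, so the following Python sketch only illustrates the property described above. If the flow key is built from the two endpoints in a canonical order, both directions of a connection hash to the same NVA. The SHA-256-based hash, the function names and the client port are assumptions made for illustration. pick_backend_2tuple() mirrors the idea behind the SourceIP (source IP affinity) distribution mode, which hashes only the source and destination IP addresses.

```python
import hashlib
from typing import Sequence


def _bucket(key: str, backends: Sequence[str]) -> str:
    """Map a key deterministically to one of the backends."""
    digest = hashlib.sha256(key.encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def pick_backend_5tuple(backends: Sequence[str], proto: str, src_ip: str,
                        src_port: int, dst_ip: str, dst_port: int) -> str:
    """Symmetric 5-tuple hash: sort the endpoints so A->B and B->A match."""
    a, b = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    return _bucket(f"{proto}|{a[0]}|{a[1]}|{b[0]}|{b[1]}", backends)


def pick_backend_2tuple(backends: Sequence[str], src_ip: str, dst_ip: str) -> str:
    """Symmetric 2-tuple hash (source IP and destination IP only)."""
    a, b = sorted([src_ip, dst_ip])
    return _bucket(f"{a}|{b}", backends)


nvas = ["10.4.2.101", "10.4.2.102"]
forward = pick_backend_5tuple(nvas, "tcp", "10.1.1.5", 51000, "10.1.1.4", 22)
reverse = pick_backend_5tuple(nvas, "tcp", "10.1.1.4", 22, "10.1.1.5", 51000)
print(forward, reverse, forward == reverse)
```

Sorting the endpoints is only one way to obtain a symmetric hash. What matters for the NVAs is the property itself: the return packet of a flow is sent to the same backend as the original packet, so no source NAT is needed to keep the firewall state consistent.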

There are other load balancing algorithms, as you can see in https://docs.microsoft.com/azure/load-balancer/load-balancer-distribution-mode, such as source IP affinity. Despite its name, this mode is based not only on the source IP address, but on the destination IP address too. You can change the load balancing algorithm like this:

az network lb rule show -g $rg --lb-name linuxnva-slb-int -n HARule --query loadDistribution -o tsv

Default

az network lb rule update -g $rg --lb-name linuxnva-slb-int -n HARule --load-distribution SourceIP -o none

az network lb rule show -g $rg --lb-name linuxnva-slb-int -n HARule --query loadDistribution -o tsv

SourceIP

You can bring the load balancing algorithm back to the default:

az network lb rule update -g $rg --lb-name linuxnva-slb-int -n HARule --load-distribution Default -o none

Using an Azure LB for the return traffic instead of SNAT is a viable option as well, since the hash-based load balancing algorithms are symmetric: both directions of a given source/destination combination are sent to the same NVA.
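To make the symmetry argument more concrete, here is a small bash sketch of a symmetric flow hash. It is purely illustrative. The function names are hypothetical, and `cksum` only stands in for whatever hash the Azure LB really uses, which this lab does not document. Symmetry is achieved by sorting the two endpoints before hashing, so A→B and B→A produce the same key. The client port 40000 is an assumed example value.

```bash
#!/usr/bin/env bash
# Hypothetical illustration of symmetric hashing; this is NOT Azure's real algorithm.

# Hash a canonical key into one of $2 backends (for example, 2 NVAs).
hash_to_bucket() {
  local key=$1 buckets=$2 crc
  crc=$(printf '%s' "$key" | cksum | cut -d' ' -f1)
  echo $(( crc % buckets ))
}

# 5-tuple mode (similar to "Default"): protocol plus both IP:port endpoints.
five_tuple_bucket() {
  local proto=$1 a="$2:$3" b="$4:$5" buckets=$6 t
  # Put the endpoints in a fixed order so both directions yield the same key
  if [[ "$a" > "$b" ]]; then t=$a; a=$b; b=$t; fi
  hash_to_bucket "$proto|$a|$b" "$buckets"
}

# 2-tuple mode (similar to "SourceIP"): only source and destination IP addresses.
two_tuple_bucket() {
  local a=$1 b=$2 buckets=$3 t
  if [[ "$a" > "$b" ]]; then t=$a; a=$b; b=$t; fi
  hash_to_bucket "$a|$b" "$buckets"
}

# Forward and return packets of an SSH session between 10.1.1.5 and 10.2.1.4
fwd=$(five_tuple_bucket tcp 10.1.1.5 40000 10.2.1.4 22 2)
ret=$(five_tuple_bucket tcp 10.2.1.4 22 10.1.1.5 40000 2)
echo "forward -> NVA$(( fwd + 1 )), return -> NVA$(( ret + 1 ))"   # same NVA twice
```

A hash that does not order the endpoints would still spread load, but return traffic could land on a different NVA. This is exactly the asymmetry that SNAT was used to avoid earlier.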

Note that this design is the standard way to deploy active/active clusters of NVAs.

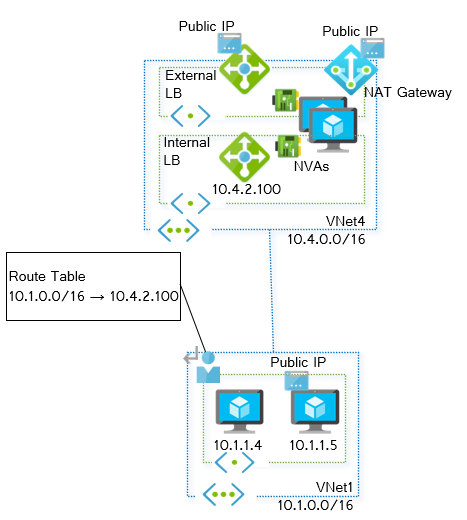

What if we want to send all traffic leaving the VNet towards the public Internet through the NVAs? We need to make sure that Internet traffic to/from all VMs flows through the NVAs via User-Defined Routes, that NVAs source-NAT the outgoing traffic with their public IP address, and that NVAs have Internet connectivity.

You might be asking yourself whether this last point is relevant, since after all, all VMs in Azure have connectivity to the Internet by default. However, when associating a standard internal LB to the NVAs we actually removed this connectivity, as documented in https://docs.microsoft.com/azure/load-balancer/load-balancer-outbound-connections#defaultsnat. You have three alternatives to restore Internet connectivity:

- Configure a public IP address for every NVA: although possible, this is often undesirable, since it would expose our NVAs to the Internet. However, this design makes it possible to use different NICs in the NVA for internal and external connectivity. See more about this scenario here.

- Attach a public LB to the NVAs with outbound NAT rules. This scenario is described here, and it is what we will use in our lab.

- Deploy a NAT gateway to the NVAs' subnets (this is what we did earlier in this lab).

This figure demonstrates the concept that we will implement:

Step 1. For this test we will use VNet2-VM1. We will insert a default route to send Internet traffic through the NVAs. First, connect from the jump host to VNet2-VM1 (10.2.1.4) and verify that you don't have Internet connectivity. You can simply send a curl request to the web service ifconfig.me, which returns your public IP address as seen by the web server:

lab-user@myVnet1-vm2:~$ ssh 10.2.1.4

lab-user@10.2.1.4's password:

Welcome to Ubuntu 16.04.1 LTS (GNU/Linux 4.4.0-47-generic x86_64)

...

lab-user@myVnet2-vm1:~$ curl ifconfig.me

curl: (28) Failed to connect to ifconfig.me port 80 after 129605 ms: Connection timed out

Step 2. Let's verify that we have a valid effective route in the NIC for VNet2-VM1 coming from the routing table configured in previous steps, with the internal LB's IP address as next hop (10.4.2.100):

az network nic show-effective-route-table -n myVnet2-vm1-nic -g $rg -o table

Source State Address Prefix Next Hop Type Next Hop IP

-------- ------- ---------------- ---------------- -------------

Default Active 10.2.0.0/16 VnetLocal

Default Active 10.4.0.0/16 VNetPeering

Default Invalid 0.0.0.0/0 Internet

User Active 0.0.0.0/0 VirtualAppliance 10.4.2.100

At this point, the routing table for Vnet2-Subnet1 should look like this:

az network route-table route list --route-table-name vnet2-subnet1 -g $rg -o table

AddressPrefix HasBgpOverride Name NextHopIpAddress NextHopType ProvisioningState ResourceGroup

--------------- ---------------- ------- ------------------ ---------------- ------------------- ---------------

0.0.0.0/0 False default 10.4.2.100 VirtualAppliance Succeeded vnetTest

As the effective route table in Step 2 shows, our custom route to the NVA has overridden the system route to the Internet, which is now marked as Invalid.
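Why is the system route marked as Invalid? Azure route selection picks the longest matching prefix. When several routes share the same prefix, a user-defined route wins over a BGP route, which in turn wins over a system route. The bash sketch below is a simplified model of that logic, not an Azure tool. The function names are hypothetical, the route list is copied from the effective route table in Step 2, and 203.0.113.10 is an assumed example Internet address.

```bash
#!/usr/bin/env bash
# Simplified model of Azure route selection: longest prefix match first,
# then User > BGP > Default (system) for identical prefixes. Illustration only.

# Convert a dotted IPv4 address into an integer
ip_to_int() {
  local IFS=. o1 o2 o3 o4
  read -r o1 o2 o3 o4 <<< "$1"
  echo $(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
}

# Succeeds if IP address $1 falls inside CIDR prefix $2
in_prefix() {
  local len=${2#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "${2%/*}") & mask) ))
}

# Lower rank wins when two matching prefixes have the same length
source_rank() {
  case $1 in
    User) echo 0 ;;
    BGP)  echo 1 ;;
    *)    echo 2 ;;   # Default (system) routes
  esac
}

# Usage: select_route DEST_IP "Source Prefix NextHopType" ...
select_route() {
  local dst=$1 r src prefix hop len rank best="" best_len=-1 best_rank=9
  shift
  for r in "$@"; do
    read -r src prefix hop <<< "$r"
    in_prefix "$dst" "$prefix" || continue
    len=${prefix#*/}
    rank=$(source_rank "$src")
    if (( len > best_len || (len == best_len && rank < best_rank) )); then
      best=$r best_len=$len best_rank=$rank
    fi
  done
  echo "$best"
}

routes=(
  "Default 10.2.0.0/16 VnetLocal"
  "Default 10.4.0.0/16 VNetPeering"
  "Default 0.0.0.0/0 Internet"
  "User 0.0.0.0/0 VirtualAppliance"
)
select_route 203.0.113.10 "${routes[@]}"   # → User 0.0.0.0/0 VirtualAppliance
select_route 10.4.2.100 "${routes[@]}"     # → Default 10.4.0.0/16 VNetPeering
```

The model ignores some special cases of the real platform (for example, the preference Azure gives to certain VNet-related system routes over BGP routes). It only aims to show why the 0.0.0.0/0 user route shadows the system Internet route.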

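Step 3. If you look at the default rules of the NSG attached to the nic0 interface of the NVAs, you will see that no inbound traffic addressed to the Internet is allowed. AllowVnetInBound only matches traffic destined to the VirtualNetwork service tag, and everything else hits DenyAllInBound: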

az network nsg rule list --nsg-name linuxnva-1-nic0-nsg -g $rg -o table --include-default

Name Priority SrcPortRanges SrcAddressPrefixes Access Protocol Direction DstPortRanges DestinationAddressPrefixes

----------------------------- -------- ------------- ------------------ ------ -------- --------- ------------- --------------------------

AllowVnetInBound 65000 * VirtualNetwork Allow * Inbound * VirtualNetwork

AllowAzureLoadBalancerInBound 65001 * AzureLoadBalancer Allow * Inbound * *

DenyAllInBound 65500 * * Deny * Inbound * *

AllowVnetOutBound 65000 * VirtualNetwork Allow * Outbound * VirtualNetwork

AllowInternetOutBound 65001 * * Allow * Outbound * Internet

DenyAllOutBound 65500 * * Deny * Outbound * *

Note: the previous output has been formatted for readability.
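NSG rules are evaluated in ascending priority order, and processing stops at the first match. The bash sketch below is a deliberately simplified model of that behavior. It compares only service tags and ignores ports and protocols, and the function and variable names are hypothetical. It shows why a packet from a spoke VM to an Internet address, arriving at the NVA's nic0, ends up in DenyAllInBound with the default rules. It also shows why a custom rule with priority 110 is evaluated before that deny rule.

```bash
#!/usr/bin/env bash
# Simplified NSG evaluation: each rule is "priority name access src dst",
# where src/dst are service tags or "*" (any). Ports and protocols are ignored.

# Succeeds if rule tag $1 matches packet tag $2 ("*" matches anything)
tag_matches() { [[ $1 == "*" || $1 == "$2" ]]; }

# Usage: evaluate_nsg SRC_TAG DST_TAG RULE...
evaluate_nsg() {
  local src=$1 dst=$2 prio name access rsrc rdst
  shift 2
  # Lowest priority number first; the first matching rule decides
  while read -r prio name access rsrc rdst; do
    if tag_matches "$rsrc" "$src" && tag_matches "$rdst" "$dst"; then
      echo "$name $access"
      return 0
    fi
  done < <(printf '%s\n' "$@" | sort -n -k1,1)
  echo "NoMatch Deny"   # cannot happen while DenyAllInBound is present
}

default_inbound=(
  "65000 AllowVnetInBound Allow VirtualNetwork VirtualNetwork"
  "65001 AllowAzureLoadBalancerInBound Allow AzureLoadBalancer *"
  "65500 DenyAllInBound Deny * *"
)

evaluate_nsg VirtualNetwork Internet "${default_inbound[@]}"
# → DenyAllInBound Deny

evaluate_nsg VirtualNetwork Internet "${default_inbound[@]}" \
  "110 allow_vnet_internet Allow VirtualNetwork *"
# → allow_vnet_internet Allow
```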

We therefore need to add a new rule to permit incoming traffic which is sourced by the VNet address space (including the peered VNets) and addressed to the Internet:

az network nsg rule create --nsg-name linuxnva-1-nic0-nsg -g $rg -n allow_vnet_internet --priority 110 --access Allow --direction Inbound --protocol "Tcp" --source-address-prefix "VirtualNetwork" --source-port-ranges "*" --destination-address-prefixes "*" --destination-port-ranges "80" "443"

az network nsg rule create --nsg-name linuxnva-2-nic0-nsg -g $rg -n allow_vnet_internet --priority 110 --access Allow --direction Inbound --protocol "Tcp" --source-address-prefix "VirtualNetwork" --source-port-ranges "*" --destination-address-prefixes "*" --destination-port-ranges "80" "443"

az network nsg rule list --nsg-name linuxnva-1-nic0-nsg -g $rg -o table --include-default

Name Priority SrcPortRanges SrcAddressPrefixes Access Protocol Direction DstPortRanges DestinationAddressPrefixes

----------------------------- -------- ------------- ------------------ ------ -------- --------- ------------- --------------------------

allow_vnet_internet 110 * VirtualNetwork Allow Tcp Inbound 80-80 *

AllowVnetInBound 65000 * VirtualNetwork Allow * Inbound * VirtualNetwork

AllowAzureLoadBalancerInBound 65001 * AzureLoadBalancer Allow * Inbound * *

DenyAllInBound 65500 * * Deny * Inbound * *

AllowVnetOutBound 65000 * VirtualNetwork Allow * Outbound * VirtualNetwork

AllowInternetOutBound 65001 * * Allow * Outbound * Internet

DenyAllOutBound 65500 * * Deny * Outbound * *

Step 4. Internet access should now work from VNet2-VM1, and it should be sourced from the public IP address of the NAT gateway we created in earlier steps of the lab and associated with both the internal and external subnets of the NVAs.

az network public-ip show -n natgw-pip -g $rg --query ipAddress -o tsv

5.6.7.8

lab-user@myVnet2-vm1:~$ curl ifconfig.me

5.6.7.8

However, we want Internet traffic to go through the external interface of the NVAs, so we shouldn't need the NAT gateway associated to the internal subnet:

az network vnet subnet update -n myVnet4Subnet2 --vnet-name myVnet4 --nat-gateway null -g $rg

So how do we force Internet traffic to go through the external NIC? In order to prioritize the default route going out of the external interface, we can decrease its metric in both NVAs:

lab-user@linuxnva-1:~$ sudo ifmetric eth1 10

lab-user@linuxnva-2:~$ sudo ifmetric eth1 10

However, by doing this we are forcing not only the 0.0.0.0/0 route to point to the external interface, but also the return traffic for the Load Balancer probes (which originate from the well-known IP address 168.63.129.16). As a consequence, the internal Load Balancer breaks, because the NVAs answer the health probes on the wrong interface. To fix this, we can introduce policy-based routing in the NVAs, so that each NVA answers the health probes on the interface where it received them. Run these commands on each NVA instance:

# Get IP addresses from the external and internal interfaces

ipaddint=`ip a | grep 10.4.2 | awk '{print $2}' | awk -F '/' '{print $1}'` # either 10.4.2.101 or .102

ipaddext=`ip a | grep 10.4.3 | awk '{print $2}' | awk -F '/' '{print $1}'` # either 10.4.3.101 or .102

echo "The IP addresses of this NVA are $ipaddint and $ipaddext"

# Create a custom routing table for internal LB probes

echo "Creating custom route table for return traffic from our internal IP address $ipaddint to the Azure LB IP address 168.63.129.16..."

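grep -q '^201 slbint' /etc/iproute2/rt_tables || sudo sed -i '$a201 slbint' /etc/iproute2/rt_tables # add the entry only once; 'sudo echo ... >> file' would fail because the redirection runs without root privileges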

sudo ip rule add from $ipaddint to 168.63.129.16 lookup slbint # Note that this depends on the nva number!

sudo ip route add 168.63.129.16 via 10.4.2.1 dev eth0 table slbint

# Create a custom routing table for external LB probes

echo "Creating custom route table for return traffic from our external IP address $ipaddext to the Azure LB IP address 168.63.129.16..."

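grep -q '^202 slbext' /etc/iproute2/rt_tables || sudo sed -i '$a202 slbext' /etc/iproute2/rt_tables # add the entry only once; 'sudo echo ... >> file' would fail because the redirection runs without root privileges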

sudo ip rule add from $ipaddext to 168.63.129.16 lookup slbext

sudo ip route add 168.63.129.16 via 10.4.3.1 dev eth1 table slbext

# Show commands

echo "Rules created:"

ip rule list

echo "Routes in external table:"

ip route show table slbext

echo "Routes in internal table:"

ip route show table slbint

Step 5. And we are finally done! If you run our curl command again from VNet2-VM1:

lab-user@myVnet2-vm1:~$ curl ifconfig.co

5.6.7.8

Note: Observe that the public IP address that VNet2-VM1 gets back from the ifconfig.co service is the public IP address assigned to the external Load Balancer. You can get the public IP addresses in your resource group with this command:

az network public-ip list -g $rg --query "[].[name,ipAddress]" -o tsv

linuxnva-slbPip-ext 5.6.7.8

myVnet1-vm2-pip 1.2.3.4

vnet4gwPip

vnet5gwPip

Note: in the previous output you would see your own IP addresses, which will obviously differ from the ones shown in the example above.

If you would like to use the NAT gateway instead of the outbound rule in the Azure LB, it is enough to remove the outbound rule:

az network lb outbound-rule delete -n myrule --lb-name linuxnva-slb-ext -g $rg

If you need to recreate the outbound rule later, you can do it like this:

az network lb outbound-rule create -n myrule --lb-name linuxnva-slb-ext -g $rg --address-pool linuxnva-slbBackend-ext --frontend-ip-configs myFrontendConfig --outbound-ports 30000 --idle-timeout 5 --protocol All
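The `--outbound-ports` value is the number of SNAT ports preallocated to each backend instance. It therefore also limits how many NVAs a single frontend IP address can serve. The arithmetic below assumes the figures from the Azure Load Balancer outbound rules documentation: each frontend public IP address provides 64,000 SNAT ports, and the per-instance allocation must be a multiple of 8. Please double-check them against the current documentation. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Assumption (Azure outbound rules docs): 64,000 SNAT ports per frontend
# public IP, and per-instance port allocations must be multiples of 8.
PORTS_PER_IP=64000

# Usage: max_backend_instances NUM_FRONTEND_IPS PORTS_PER_INSTANCE
max_backend_instances() {
  local ips=$1 per_instance=$2
  if (( per_instance <= 0 || per_instance % 8 != 0 )); then
    echo "error: ports per instance must be a positive multiple of 8" >&2
    return 1
  fi
  # Integer division: instances beyond this number would get no ports
  echo $(( ips * PORTS_PER_IP / per_instance ))
}

# The lab's outbound rule: one frontend IP configuration, --outbound-ports 30000
max_backend_instances 1 30000   # → 2 (enough for linuxnva-1 and linuxnva-2)
```

Under these assumptions, a third NVA would not fit with 30000 ports per instance. You would need to lower `--outbound-ports` or add a second frontend IP configuration.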

Essentially, the mechanism for redirecting traffic from Azure VMs to the public Internet through an NVA is very similar to the scenarios we have seen previously in this lab. You need to configure UDRs pointing to the NVA (or to an internal load balancer that sends traffic to the NVA).

Source NAT at the firewall/NVA will guarantee that the return traffic (destination-to-source) is sent to the same NVA that processed the initial packets (source-to-destination).

Your NSGs should allow traffic to get into the firewall and to get out from the firewall to the Internet.

Lastly, the NVAs need either a NAT gateway, an outbound NAT rule in a public LB, or public IP addresses, since otherwise they have no Internet access while they are associated with the internal load balancer. A public IP address would have to be Standard (not Basic) SKU to coexist with the internal load balancer, which must be Standard as well to support the HA ports feature. In this lab we showed both the outbound NAT rule in an external Load Balancer and the NAT gateway; the latter is the preferred approach, since it is more scalable.

Standard TCP probes only verify that the interface being probed answers TCP connections. But what if it is the other interface that has an issue? What good is it if VMs send all traffic to a Network Virtual Appliance with a perfectly working internal interface (eth0 in our lab), but eth1 is down, and therefore that NVA has no Internet access whatsoever?