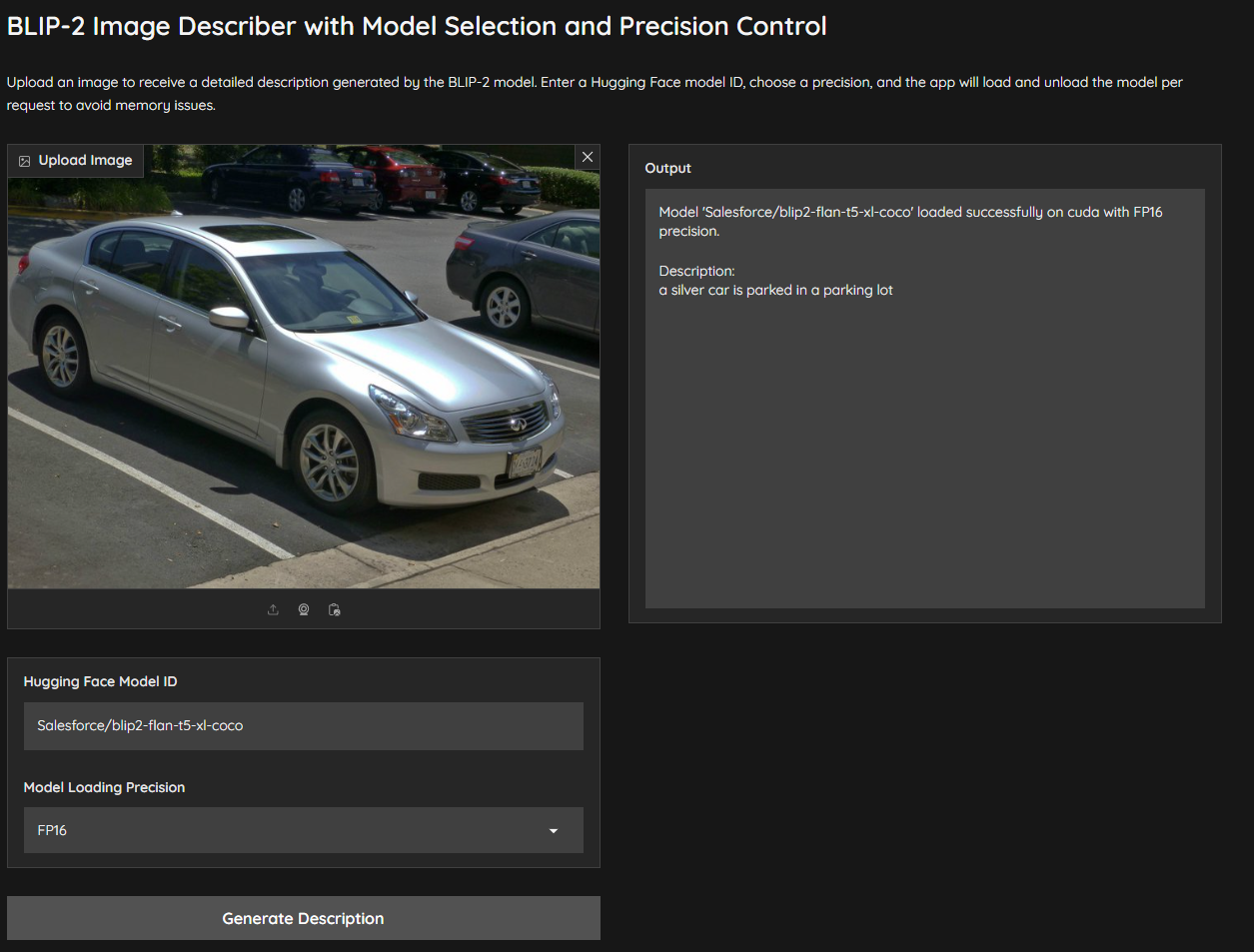

This Gradio application uses BLIP-2 with Flan-T5 to generate detailed descriptions of uploaded images. Users can choose a Hugging Face model ID, select a precision (FP16, INT8, BF16, or FP32), and load models on demand to match the available hardware.

- Choose a Hugging Face model ID for flexible model selection.

- Select precision modes (FP16, INT8, BF16, FP32) to optimize performance based on hardware.

- Generate detailed, long-form descriptions using beam search and repetition controls.
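As a rough illustration of the options above, the sketch below shows one way a precision label and the generation settings could be turned into keyword arguments for the `transformers` library. It is not taken from `app.py`: the helper names `precision_kwargs` and `generation_kwargs` are hypothetical, and the numeric values (beam count, repetition penalty, token limit) are illustrative assumptions rather than the app's actual defaults.

```python
from typing import Any, Dict

# Hypothetical mapping from a UI precision label to a torch dtype name.
# Names are stored as strings so this module imports without torch installed.
PRECISION_DTYPES = {"FP16": "float16", "BF16": "bfloat16", "FP32": "float32"}


def precision_kwargs(precision: str) -> Dict[str, Any]:
    """Build keyword arguments for `from_pretrained` from a precision label."""
    label = precision.upper()
    if label != "INT8" and label not in PRECISION_DTYPES:
        raise ValueError(f"Unsupported precision: {precision!r}")

    if label == "INT8":
        # Assumption: 8-bit loading goes through bitsandbytes, which
        # generally needs a CUDA GPU and the bitsandbytes package.
        from transformers import BitsAndBytesConfig

        return {
            "quantization_config": BitsAndBytesConfig(load_in_8bit=True),
            "device_map": "auto",
        }

    import torch  # imported lazily, only when a float dtype is requested

    return {"torch_dtype": getattr(torch, PRECISION_DTYPES[label])}


def generation_kwargs(max_new_tokens: int = 200, num_beams: int = 5) -> Dict[str, Any]:
    """Build keyword arguments for `model.generate` (illustrative values)."""
    return {
        "max_new_tokens": max_new_tokens,  # upper bound on description length
        "num_beams": num_beams,            # beam search width
        "repetition_penalty": 1.5,         # discourage repeated tokens
        "no_repeat_ngram_size": 3,         # block repeated 3-grams
        "early_stopping": True,            # stop once all beams have finished
    }
```

With these helpers, a model could be loaded with something like `Blip2ForConditionalGeneration.from_pretrained(model_id, **precision_kwargs("FP16"))` and a description produced with `model.generate(**inputs, **generation_kwargs())`, where `model_id` and `inputs` come from the user's selection and a `Blip2Processor`.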

- Clone the repository.

- Install dependencies: `pip install -r requirements.txt`

- Run the application: `python app.py`

- Access: open the app in your browser at http://localhost:7862.

This project is licensed under the MIT License.