This is the official repository of our paper titled HyperE2VID: Improving Event-Based Video Reconstruction via Hypernetworks by Burak Ercan, Onur Eker, Canberk Sağlam, Aykut Erdem, and Erkut Erdem.

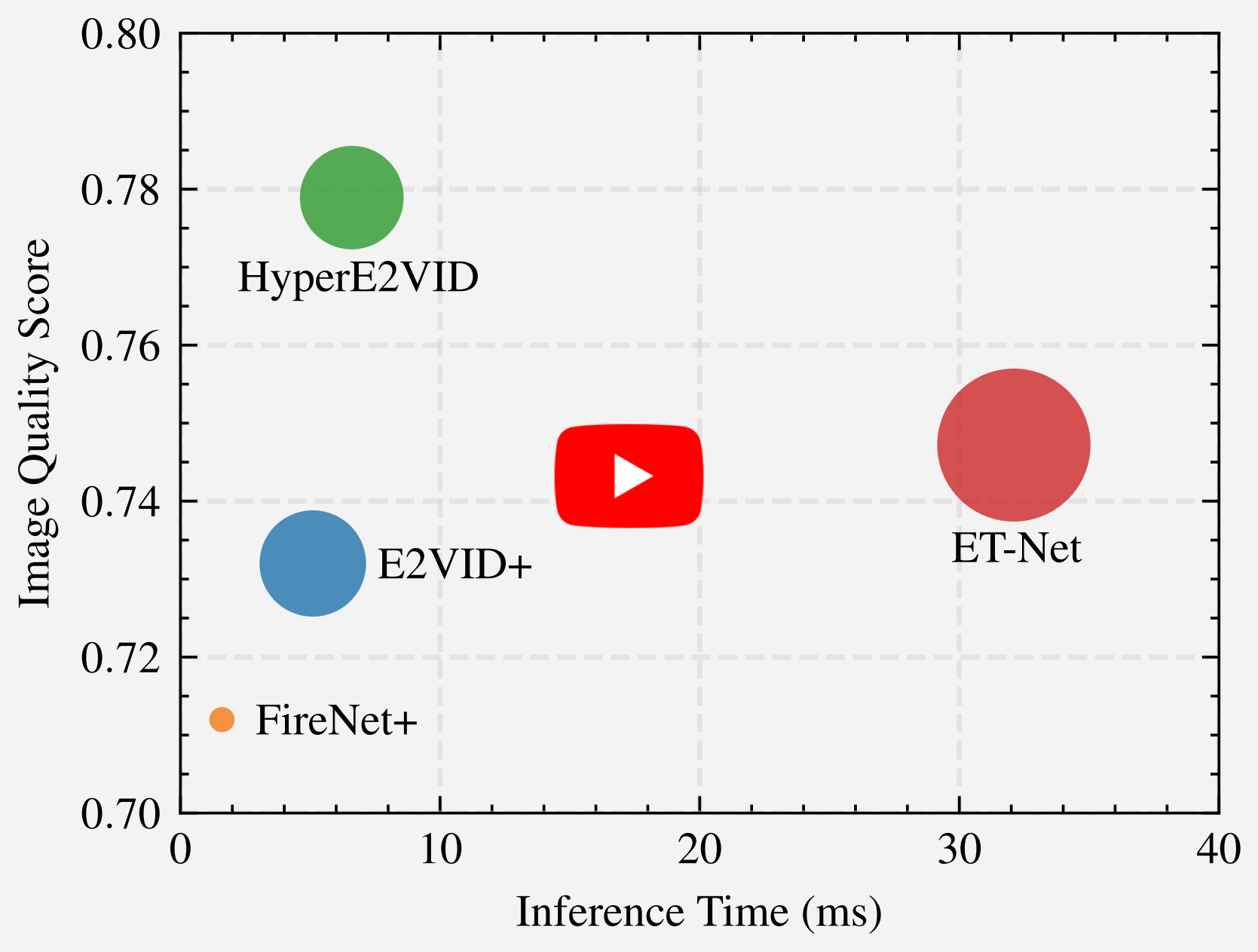

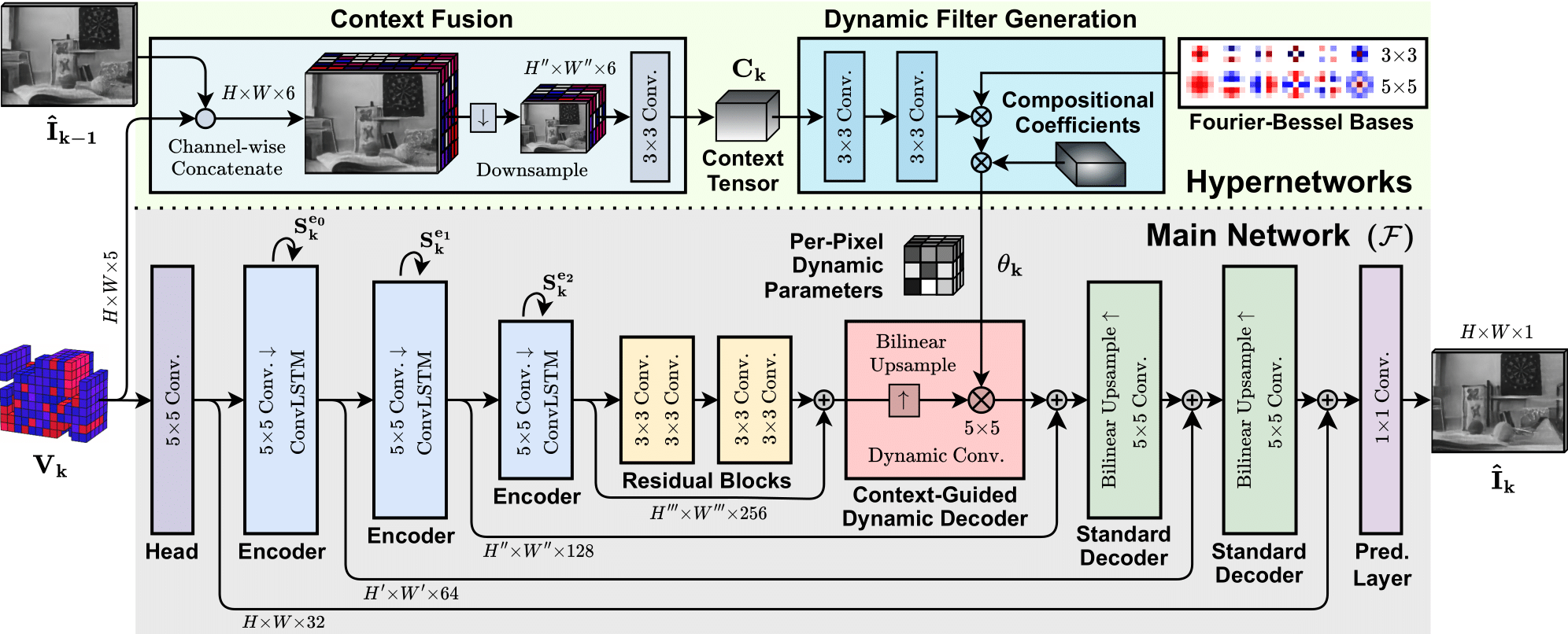

In this work, we present HyperE2VID, a dynamic neural network architecture for event-based video reconstruction. Our approach extends existing static architectures by using hypernetworks and dynamic convolutions to generate per-pixel adaptive filters, guided by a context fusion module that combines information from event voxel grids and previously reconstructed intensity images. We show that this dynamic architecture can generate higher-quality videos than previous state-of-the-art methods, while also reducing memory consumption and inference time.

- For more details, please see our paper.

- For qualitative results, please see our project website.

- For more results and experimental analyses of HyperE2VID, please see the interactive result analysis tool of EVREAL.

- The model code is published under the model folder in this repository.

- The pretrained model of HyperE2VID can be found here.

- For evaluation and analysis of the HyperE2VID model, please use the code in the EVREAL repository.

- Training code will be published soon.
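To make the idea of per-pixel adaptive filtering more concrete, here is a minimal NumPy sketch. It is not the HyperE2VID implementation (that lives in the model folder) and it makes several loudly labeled assumptions: the event voxel grid uses the common scheme of splitting each event's polarity between its two nearest temporal bins; the context fusion module is replaced by plain channel concatenation of the voxel grid and the previous image; and the "hypernetwork" is a hypothetical 1x1 linear layer followed by a softmax over the k x k filter taps. The function names `events_to_voxel_grid`, `toy_hypernetwork`, and `per_pixel_dynamic_conv` are illustrative only.

```python
import numpy as np

def events_to_voxel_grid(events, num_bins, height, width):
    """Accumulate events given as rows (t, x, y, p) into a (num_bins, H, W) grid.
    Each event's polarity is split linearly between its two nearest time bins
    (an assumed, commonly used voxelization, not necessarily the repo's)."""
    voxel = np.zeros((num_bins, height, width), dtype=np.float64)
    if len(events) == 0:
        return voxel
    t, p = events[:, 0], events[:, 3]
    x, y = events[:, 1].astype(int), events[:, 2].astype(int)
    span = max(t.max() - t.min(), 1e-9)
    t_norm = (num_bins - 1) * (t - t.min()) / span  # timestamps mapped to [0, B-1]
    left = np.floor(t_norm).astype(int)
    w_right = t_norm - left
    np.add.at(voxel, (left, y, x), p * (1.0 - w_right))  # left-bin share
    valid = left + 1 < num_bins                          # right-bin share, if in range
    np.add.at(voxel, (left[valid] + 1, y[valid], x[valid]), p[valid] * w_right[valid])
    return voxel

def toy_hypernetwork(context, weight):
    """Hypothetical hypernetwork: map per-pixel context (Cc, H, W) to per-pixel
    filters (k*k, H, W) with a 1x1 linear layer, then softmax over the taps."""
    logits = np.einsum("oc,chw->ohw", weight, context)  # weight: (k*k, Cc)
    logits = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=0, keepdims=True)

def per_pixel_dynamic_conv(features, kernels, k):
    """Apply a different k x k filter at every pixel (shared across channels).
    features: (C, H, W); kernels: (k*k, H, W). Zero padding keeps the size."""
    _, H, W = features.shape
    pad = k // 2
    padded = np.pad(features, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros_like(features)
    idx = 0
    for dy in range(k):
        for dx in range(k):
            # Weight the shifted input by this tap's per-pixel coefficient.
            out += kernels[idx][None] * padded[:, dy:dy + H, dx:dx + W]
            idx += 1
    return out

# Usage on toy data: 3 events, 5 time bins, an 8x8 sensor, 3x3 dynamic filters.
rng = np.random.default_rng(0)
H, W, B, k = 8, 8, 5, 3
events = np.array([[0.0, 1, 2, 1], [0.5, 3, 4, -1], [1.0, 7, 7, 1]])
voxel = events_to_voxel_grid(events, B, H, W)
prev_image = rng.random((1, H, W))
context = np.concatenate([voxel, prev_image], axis=0)  # stand-in for context fusion
weight = rng.standard_normal((k * k, context.shape[0]))
kernels = toy_hypernetwork(context, weight)
features = rng.standard_normal((16, H, W))
out = per_pixel_dynamic_conv(features, kernels, k)
```

The key difference from a static convolution is that `kernels` varies with pixel position and is computed from the input context at inference time, rather than being a single learned weight tensor shared across the whole image.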

If you use code in this repo in an academic context, please cite the following:

@article{ercan2023hypere2vid,
  title={{HyperE2VID}: Improving Event-Based Video Reconstruction via Hypernetworks},
  author={Ercan, Burak and Eker, Onur and Saglam, Canberk and Erdem, Aykut and Erdem, Erkut},
  journal={arXiv preprint arXiv:2305.06382},
  year={2023}
}

- This work was supported in part by the KUIS AI Center Research Award, the TUBITAK-1001 Program Award No. 121E454, and the BAGEP 2021 Award of the Science Academy to A. Erdem.

- This code borrows from or is inspired by the following open-source repositories: