Raptor simplifies deploying data science work from a notebook to production: it compiles your Python research code and takes care of engineering concerns such as scalability and reliability on Kubernetes. Focus on the data science; RaptorML will take care of the engineering overhead.


Raptor enables data scientists and ML engineers to build and deploy operational models and ML-driven functionality, without learning backend engineering.

With Raptor, you can export your Python research code as standard production artifacts and deploy them to Kubernetes. Once deployed, Raptor optimizes data processing and feature calculation for production, deploys models to Sagemaker or Docker containers, connects to your production data sources, and handles scaling, high availability, caching, monitoring, and all other backend concerns.

Before Raptor, data scientists had to work closely with software engineers to translate their models into production-ready code, connect to data sources, transform their data with Flink/Spark or even Java, create APIs, dockerize the model, handle scaling and high availability, and more.

With Raptor, data scientists can focus on their research and model development, then export their work to production. Raptor takes care of the rest, including connecting to data sources, transforming the data, and deploying and connecting the model.

- Easy to use: Raptor is user-friendly, and you can get started within 5 minutes.

- Eliminates training/serving skew: use the same code for training and production.

- Real-time/on-demand: Raptor optimizes feature calculations and predictions to be performed at the time of request.

- Seamless caching and storage: Raptor uses an integrated caching system and stores your historical data for training, so you don't need a separate data storage system such as a feature store.

- Turns data science work into production artifacts: Raptor implements Kubernetes best practices such as scaling, health checks, auto-recovery, monitoring, logging, and more.

- Integrates with your R&D team: Raptor extends existing DevOps tools and infrastructure and allows you to connect your ML research to the rest of your organization's R&D ecosystem, utilizing tools such as CI/CD and monitoring.
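The on-demand and caching points above can be illustrated with a small, self-contained sketch. It is not Raptor's implementation: `FreshnessCache` and its policy are hypothetical, and reading `max_age`/`max_stale` as "serve from cache while younger than `max_age`, serve but flag a refresh while younger than `max_stale`, otherwise recompute at request time" is only one plausible interpretation of the `@freshness(max_age=..., max_stale=...)` decorator in the LabSDK examples.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Optional, Tuple


@dataclass
class FreshnessCache:
    """Hypothetical on-demand feature cache (NOT Raptor's actual implementation)."""
    compute: Callable[[Hashable], float]  # computes the feature value for a key
    max_age: float                        # seconds a cached value counts as fresh
    max_stale: float                      # seconds a cached value may still be served
    clock: Callable[[], float] = time.monotonic

    def __post_init__(self) -> None:
        # key -> (value, time at which it was computed)
        self._store: Dict[Hashable, Tuple[float, float]] = {}
        # keys served stale that a background job could refresh (assumption)
        self.pending_refresh: set = set()

    def get(self, key: Hashable) -> float:
        now = self.clock()
        cached: Optional[Tuple[float, float]] = self._store.get(key)
        if cached is not None:
            value, computed_at = cached
            age = now - computed_at
            if age < self.max_age:
                return value                   # fresh: serve from cache
            if age < self.max_stale:
                self.pending_refresh.add(key)  # stale but usable: flag for refresh
                return value
        # Missing or too old: compute on demand, at request time.
        value = self.compute(key)
        self._store[key] = (value, now)
        self.pending_refresh.discard(key)
        return value
```

Injecting `clock` keeps the policy testable without sleeping; for example, a fake clock advanced past `max_stale` forces a synchronous recomputation on the next `get`.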

Working with Raptor starts in the research phase, in your notebook or IDE. Raptor lets you write your ML work in a way that can be translated for production.

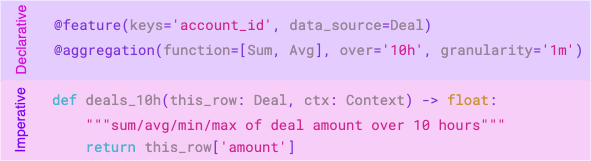

Models and features in Raptor are composed of a declarative part (via Python decorators) and function code. This way, Raptor can take on the heavy-lifting engineering concerns (such as aggregations or caching) by implementing the declarative part, and optimize the implementation for production.
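The split between a declarative part and function code is a general Python pattern, sketched below in plain Python. `declare_feature`, `FeatureSpec`, and `REGISTRY` are hypothetical stand-ins, not the LabSDK's API: the decorator only records metadata, and the same function body runs unchanged over a batch of historical rows and over a single live event, which is how one definition can serve both training and production.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class FeatureSpec:
    """Declarative part: metadata an engine could use to plan execution."""
    name: str
    keys: str
    fn: Callable[[Row], Any]


# Hypothetical registry of declared features (illustration only).
REGISTRY: Dict[str, FeatureSpec] = {}


def declare_feature(keys: str) -> Callable[[Callable[[Row], Any]], Callable[[Row], Any]]:
    """Hypothetical decorator: records metadata and returns the function unchanged."""
    def register(fn: Callable[[Row], Any]) -> Callable[[Row], Any]:
        REGISTRY[fn.__name__] = FeatureSpec(name=fn.__name__, keys=keys, fn=fn)
        return fn
    return register


@declare_feature(keys="customer_id")
def amount(row: Row) -> float:
    # Function code: plain per-row logic, free of engineering concerns.
    return float(row["amount"])


def compute_offline(name: str, rows: List[Row]) -> List[Any]:
    """Training path: apply the feature to historical rows in a batch."""
    fn = REGISTRY[name].fn
    return [fn(row) for row in rows]


def compute_online(name: str, row: Row) -> Any:
    """Serving path: apply the very same function to a single incoming event."""
    return REGISTRY[name].fn(row)
```

Because both paths call the identical function object, there is no second implementation that could drift from the first.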

When you are satisfied with your research results, "export" these definitions and deploy them to Kubernetes using standard tools. Once deployed, Raptor Core (the server-side part) extends Kubernetes with the ability to implement them: it takes care of the engineering concerns by managing and controlling Kubernetes-native resources, such as deployments, to connect your production data sources and run your business logic at scale.

You can read more about Raptor's architecture in the docs.

Raptor's LabSDK is the quickest and most popular way to develop RaptorML-compatible features.

The LabSDK allows you to write Raptor-compatible features in Python and "convert" them to Kubernetes resources. This way, for most use cases, you can iterate and experiment with your data directly in your notebook.

Raptor installation is not required for training purposes. You only need to install Raptor when deploying to production (or staging).

Learn more about production installation in the docs.

Prerequisites:

- Kubernetes cluster (you can use Kind to install Raptor locally)
- `kubectl` installed and configured for your cluster
- Redis server (> 2.8.9)
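Before installing, you may want to confirm the Redis prerequisite. The helper below is an illustration and not part of Raptor: it reads `redis_version` from the server section of the `INFO` command through the `redis-py` client (`pip install redis`) and reads "> 2.8.9" literally, as strictly newer than 2.8.9. The default host and port are placeholders for your own server.

```python
import itertools
from typing import Tuple

# Requirement from the list above: Redis > 2.8.9 (read as strictly newer).
MIN_EXCLUSIVE: Tuple[int, int, int] = (2, 8, 9)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '7.2.4' into (7, 2, 4); keeps only the leading digits of each part."""
    parts = []
    for piece in text.split("."):
        digits = "".join(itertools.takewhile(str.isdigit, piece))
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def meets_requirement(version: str) -> bool:
    """True if the version is strictly newer than 2.8.9 (tuple comparison)."""
    return parse_version(version) > MIN_EXCLUSIVE


def check_server(host: str = "localhost", port: int = 6379) -> bool:
    """Query a running Redis server; needs the redis-py package."""
    import redis  # imported lazily so the parsing helpers work without it

    info = redis.Redis(host=host, port=port).info("server")
    return meets_requirement(str(info["redis_version"]))
```

Calling `check_server()` against your Redis instance returns `True` when the server satisfies the requirement; the pure helpers can be used on a version string you already have.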

The easiest way to install Raptor is to use the OperatorHub Installation method.

A minimal "hello world" feature:

```python
from labsdk.raptor import *


@feature(keys='name')
@freshness(target='-1', invalid_after='-1')
def emails_deals(_, ctx: Context) -> str:
    return f"hello world {ctx.keys['name']}!"
```

A more complete example, from a data source through features to a trained model:

```python
import pandas as pd
from typing_extensions import TypedDict

from labsdk.raptor import *


# Define the data source
@data_source(
    training_data=pd.read_csv(
        'https://gist.githubusercontent.com/AlmogBaku/8be77c2236836177b8e54fa8217411f2/raw/hello_world_transactions.csv'),
    keys=['customer_id'],
    production_config=StreamingConfig()
)
class BankTransaction(TypedDict):
    customer_id: int
    amount: float
    timestamp: str


# Define features
@feature(keys='customer_id', data_source=BankTransaction)
@aggregation(function=AggregationFunction.Sum, over='10h', granularity='1h')
def total_spend(this_row: BankTransaction, ctx: Context) -> float:
    """total spend by a customer in the last 10 hours"""
    return this_row['amount']


@feature(keys='customer_id', data_source=BankTransaction)
@freshness(max_age='5h', max_stale='1d')
def amount(this_row: BankTransaction, ctx: Context) -> float:
    """the amount of the customer's latest transaction"""
    return this_row['amount']


# Train the model
@model(
    keys=['customer_id'],
    input_features=['total_spend+sum'],
    input_labels=[amount],
    model_framework='sklearn',
    model_server='sagemaker-ack',
)
@freshness(max_age='1h', max_stale='100h')
def amount_prediction(ctx: TrainingContext):
    from sklearn.linear_model import LinearRegression

    df = ctx.features_and_labels()
    trainer = LinearRegression()
    trainer.fit(df[ctx.input_features], df[ctx.input_labels])
    return trainer


# Export the definitions as production artifacts
amount_prediction.export()
```

Then, you can deploy the generated resources to Kubernetes using `kubectl`, or ask the DevOps team to integrate the generated Makefile into the existing CI/CD pipeline.
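To build intuition for `@aggregation(function=AggregationFunction.Sum, over='10h', granularity='1h')` on `total_spend`, here is a pure-Python approximation. The semantics are an assumption, not Raptor's engine: each customer's transactions are pre-summed into 1-hour buckets, and the feature value at time `at` is the sum of the buckets in the last 10 hours, counting the current (partial) bucket. Timestamps are epoch seconds, and `windowed_sum` is a hypothetical name.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

HOUR = 3600


def windowed_sum(
    events: Iterable[Tuple[int, float, float]],  # (customer_id, amount, epoch seconds)
    customer_id: int,
    at: float,
    over: int = 10 * HOUR,
    granularity: int = HOUR,
) -> float:
    """Sum of a customer's amounts over the `over` window ending at `at`,
    computed from `granularity`-sized buckets (assumed semantics)."""
    buckets: Dict[int, float] = defaultdict(float)
    for cid, amount, ts in events:
        if cid == customer_id and ts <= at:
            buckets[int(ts // granularity)] += amount  # pre-aggregate per bucket
    current = int(at // granularity)      # bucket containing `at`
    n_buckets = over // granularity       # 10 one-hour buckets
    oldest = current - n_buckets + 1
    return sum(v for b, v in buckets.items() if oldest <= b <= current)
```

Storing one partial sum per hour instead of every transaction keeps the state small, at the cost of a window edge that moves in 1-hour steps, so the covered span is only approximately 10 hours.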

Roadmap:

- S3 historical storage plugins
  - S3 storing
  - S3 data fetching (Spark)
- Deploy models to model servers
  - Sagemaker ACK
  - Seldon
  - Kubeflow
  - KFServing
  - Standalone
- Large-scale training
- Support more data sources
  - Kafka
  - GCP Pub/Sub
  - REST
  - Snowflake
  - BigQuery
  - gRPC
  - Redis
  - Postgres
  - GraphQL

See the open issues for a full list of proposed features (and known issues).

Contributions make the open-source community a fantastic place to learn, inspire, and create. Any contributions you make are greatly appreciated (not only code, but also documentation, blog posts, or feedback) 😍.

If you have a suggestion, please fork the repo and create a pull request. You can also simply open an issue and choose "Feature Request" to give us feedback.

Don't forget to give the project a star! ⭐️

For more information about contributing code to the project, read the CONTRIBUTING.md file.

Distributed under the Apache 2.0 License. See the LICENSE file for more information.