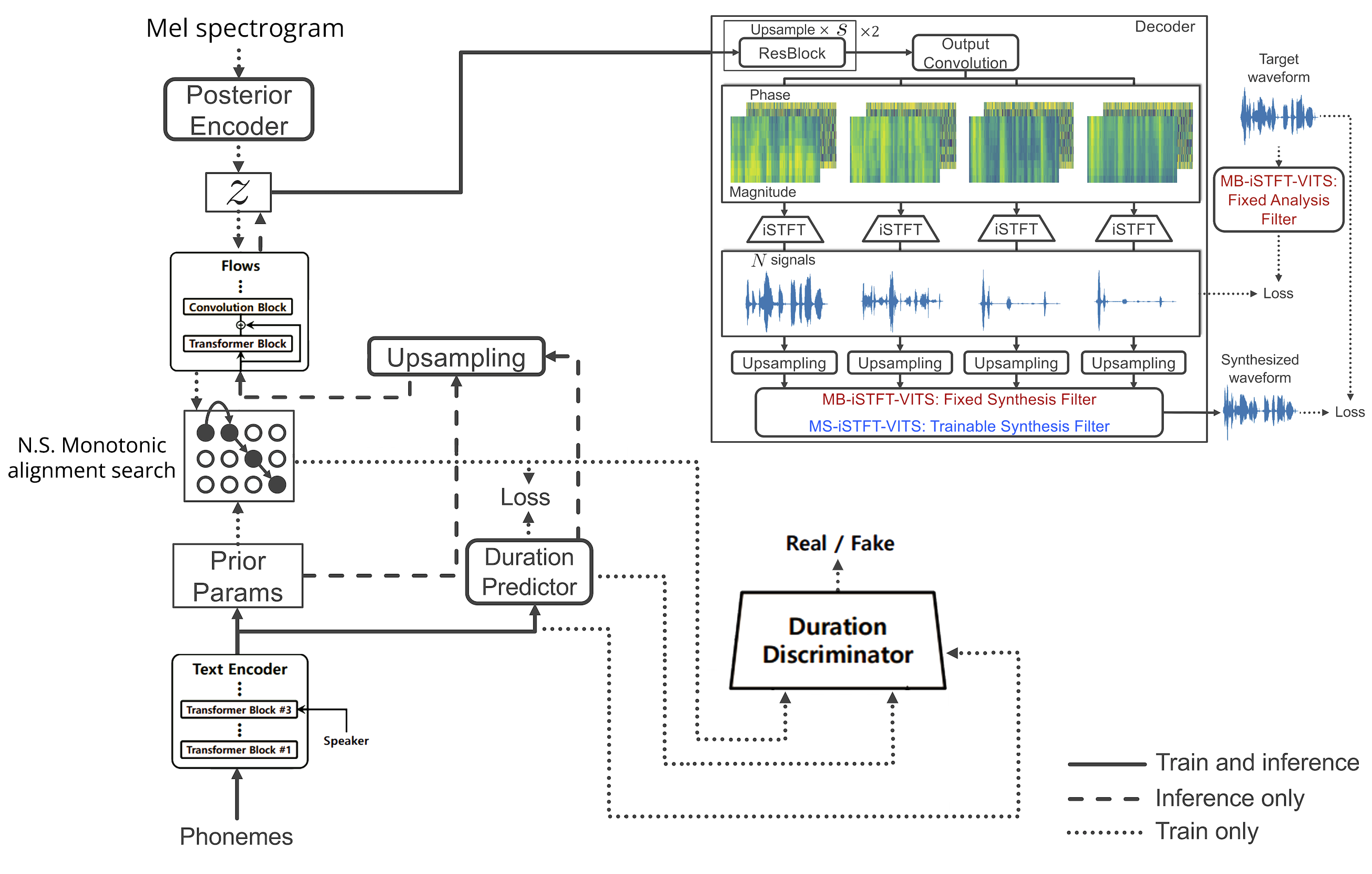

A... vits2_pytorch and MB-iSTFT-VITS hybrid... Gods, an abomination! Who created this atrocity?

This is an experimental build, so performance is not guaranteed.

According to shigabeev's experiment, it can now dare to claim SOTA performance (at least for Russian).

- Python >= 3.8

- CUDA

- PyTorch version 1.13.1 (+cu117)

- Clone this repository

- Install the Python requirements. Please refer to requirements.txt

  1. You may need to install espeak first: `apt-get install espeak`

- Prepare datasets

- ex) Download and extract the LJ Speech dataset, then rename or create a link to the dataset folder:

ln -s /path/to/LJSpeech-1.1/wavs DUMMY1

- Build Monotonic Alignment Search and run preprocessing if you use your own datasets.

# Cython-version Monotonic Alignment Search

cd monotonic_align

mkdir monotonic_align

python setup.py build_ext --inplace

Setting json file in configs

| Model | How to set up json file in configs | Sample of json file configuration |
|---|---|---|
| iSTFT-VITS2 | `"istft_vits": true, "upsample_rates": [8,8],` | istft_vits2_base.json |
| MB-iSTFT-VITS2 | `"subbands": 4, "mb_istft_vits": true, "upsample_rates": [4,4],` | mb_istft_vits2_base.json |
| MS-iSTFT-VITS2 | `"subbands": 4, "ms_istft_vits": true, "upsample_rates": [4,4],` | ms_istft_vits2_base.json |
| Mini-iSTFT-VITS2 | `"istft_vits": true, "upsample_rates": [8,8], "hidden_channels": 96, "n_layers": 3,` | mini_istft_vits2_base.json |
| Mini-MB-iSTFT-VITS2 | `"subbands": 4, "mb_istft_vits": true, "upsample_rates": [4,4], "hidden_channels": 96, "n_layers": 3, "upsample_initial_channel": 256,` | mini_mb_istft_vits2_base.json |
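The per-model settings in the table above can also be written down as plain data and merged into a config by script. The following is only a rough sketch, not part of this repository: `VARIANT_OVERRIDES` and `apply_variant` are hypothetical names, the key/value pairs are copied from the table, and the starting dictionary in the usage lines is made up for illustration. Where exactly these keys sit inside the json file is not stated here, so check the sample config for your model before relying on it.

```python
import copy
import json

# Settings copied verbatim from the table above (hypothetical helper, not repo code).
VARIANT_OVERRIDES = {
    "iSTFT-VITS2": {"istft_vits": True, "upsample_rates": [8, 8]},
    "MB-iSTFT-VITS2": {"subbands": 4, "mb_istft_vits": True, "upsample_rates": [4, 4]},
    "MS-iSTFT-VITS2": {"subbands": 4, "ms_istft_vits": True, "upsample_rates": [4, 4]},
    "Mini-iSTFT-VITS2": {
        "istft_vits": True,
        "upsample_rates": [8, 8],
        "hidden_channels": 96,
        "n_layers": 3,
    },
    "Mini-MB-iSTFT-VITS2": {
        "subbands": 4,
        "mb_istft_vits": True,
        "upsample_rates": [4, 4],
        "hidden_channels": 96,
        "n_layers": 3,
        "upsample_initial_channel": 256,
    },
}


def apply_variant(section, variant):
    """Return a copy of `section` with the table's settings for `variant` applied."""
    if variant not in VARIANT_OVERRIDES:
        raise KeyError(f"unknown variant: {variant!r}")
    updated = copy.deepcopy(section)  # leave the caller's dict untouched
    updated.update(copy.deepcopy(VARIANT_OVERRIDES[variant]))
    return updated


if __name__ == "__main__":
    # Illustrative starting values only; they are not taken from this repository.
    example_section = {"hidden_channels": 192, "n_layers": 6}
    print(json.dumps(apply_variant(example_section, "Mini-MB-iSTFT-VITS2"), indent=2))
```

The helper only adds or overwrites the keys listed for the chosen model. It does not switch off a flag such as `istft_vits` that may already be set in the starting dictionary, so it is safest to start from the matching sample file.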

python train.py -c configs/mini_mb_istft_vits2_base.json -m models/test
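If you launch several runs from a script, the command above can be assembled programmatically. This is a minimal sketch under stated assumptions: `build_train_command` is a hypothetical helper (not part of this repository), and it uses only the `-c` and `-m` arguments shown in the command above.

```python
import shlex
import sys
from pathlib import Path


def build_train_command(config_path, model_dir, python=sys.executable):
    """Assemble the train.py invocation shown above as an argument list."""
    if Path(config_path).suffix != ".json":
        raise ValueError(f"expected a .json config, got: {config_path}")
    return [python, "train.py", "-c", str(config_path), "-m", str(model_dir)]


if __name__ == "__main__":
    cmd = build_train_command("configs/mini_mb_istft_vits2_base.json", "models/test")
    # Print a copy-paste friendly form; pass `cmd` to subprocess.run(...) to launch it.
    print(shlex.join(cmd))
```

Keeping the command as a list rather than one string avoids shell-quoting problems when paths contain spaces.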