DiFlow-TTS: Discrete Flow Matching with Factorized Speech Tokens for Low-Latency Zero-Shot Text-to-Speech

- [Coming soon] Release evaluation code.

- [Coming soon] Release training instructions.

- [2025.08] Release inference code.

- [2025.08] Repository created.

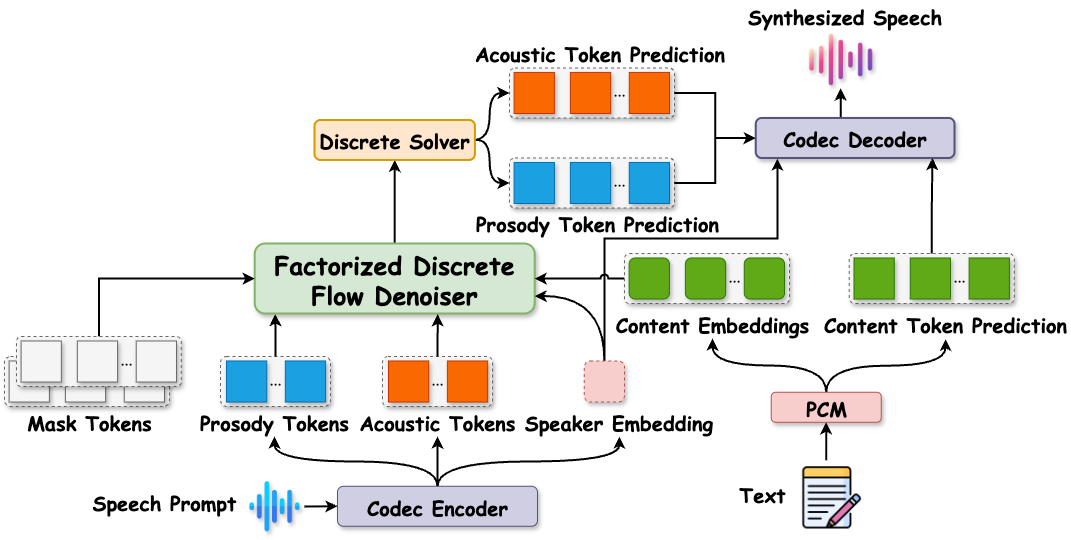

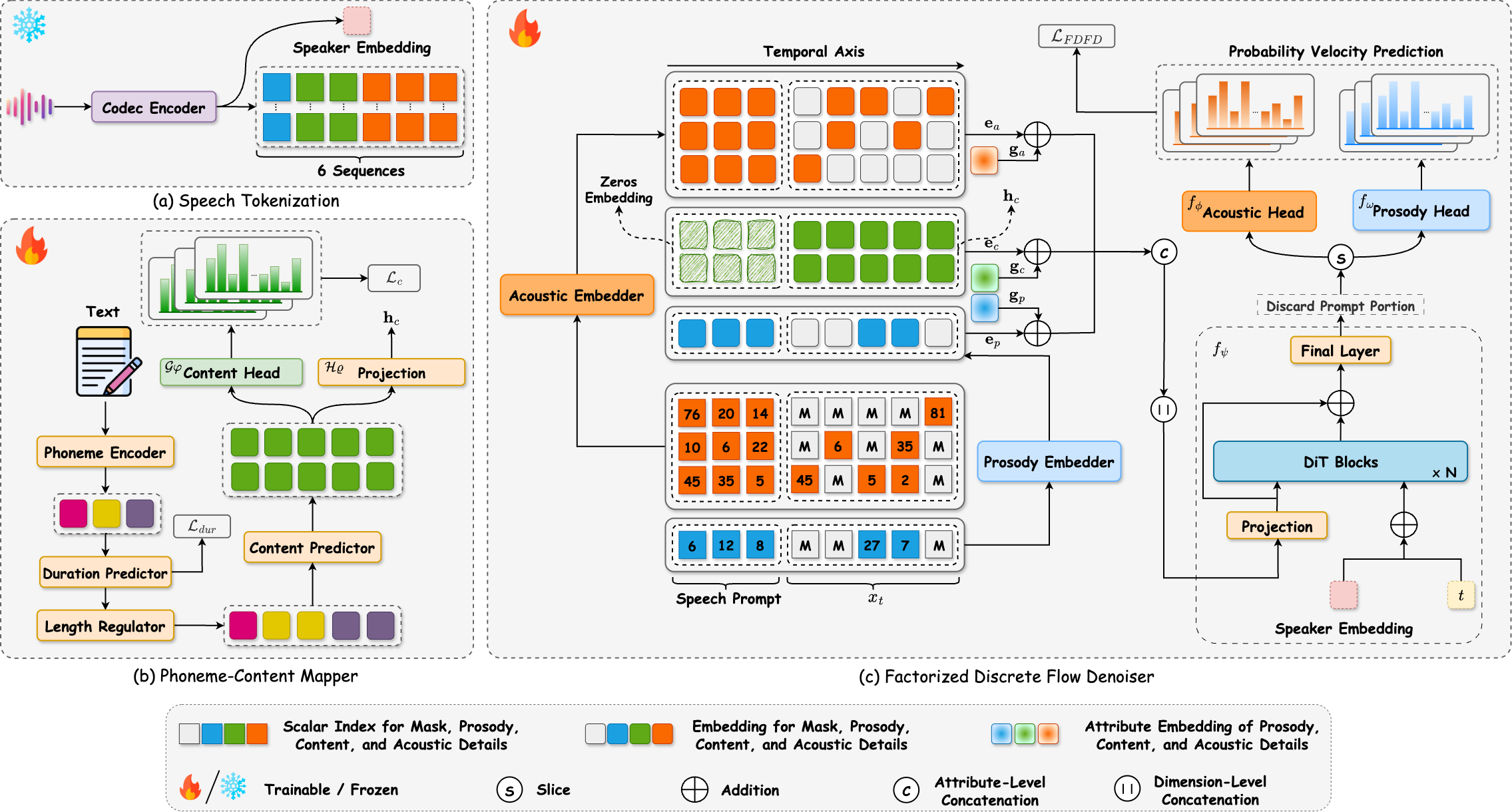

DiFlow-TTS is a novel zero-shot text-to-speech system that leverages purely discrete flow matching with factorized speech token modeling.

Install the required dependencies using Conda:

conda env create -f environment.yaml

conda activate diflow

- Download the pretrained FACodec model from HuggingFace, and place the checkpoint files in the following structure:

      root/
      └── models/
          └── facodec/
              └── checkpoints/
                  ├── ns3_facodec_encoder.bin
                  └── ns3_facodec_decoder.bin

- Download the DiFlow-TTS model checkpoint from Link, and place it as follows:

      root/
      └── ckpts/
          └── diflow-tts.ckpt
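Before running inference, it can help to confirm that the checkpoints sit where the layouts above expect them. The following is a minimal sketch, not part of the repository: `check_checkpoints` is a hypothetical helper name, and it assumes the repository root is passed as the first argument (defaulting to the current directory).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with DiFlow-TTS): report which of the
# expected checkpoint files exist under a given repository root.
check_checkpoints() {
  local root="${1:-.}"   # repository root; defaults to the current directory
  local missing=0
  local rel
  for rel in \
    "models/facodec/checkpoints/ns3_facodec_encoder.bin" \
    "models/facodec/checkpoints/ns3_facodec_decoder.bin" \
    "ckpts/diflow-tts.ckpt"; do
    if [ -f "$root/$rel" ]; then
      echo "ok       $rel"
    else
      echo "missing  $rel"
      missing=1
    fi
  done
  # Non-zero exit status if any file is missing.
  return "$missing"
}
```

After sourcing the snippet, calling check_checkpoints with the path to the repository root prints one status line per file and returns a non-zero status if any file is absent.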

To synthesize a sample with DiFlow-TTS, follow these steps:

- Open the script scripts/synth_one_sample.sh.

- Edit the following lines:

  - Line 3: Set the path to the DiFlow-TTS checkpoint.

  - Line 4: Set your input text.

  - Line 5: Set the path to your reference speech prompt.

- Run the script with:

      CUDA_VISIBLE_DEVICES=0 bash scripts/synth_one_sample.sh

Make sure the model checkpoint and audio prompt are correctly formatted and accessible at the specified paths.
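The path checks mentioned above can be automated with a small wrapper. This is only a sketch under stated assumptions: `run_synthesis` is a hypothetical function, it must be called from the repository root, and the checkpoint and prompt paths passed to it are assumed to be the same ones you entered on lines 3 and 5 of the script (the wrapper does not edit the script itself).

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of the repository): verify inputs, then launch
# the synthesis script on the chosen GPU.
# Usage: run_synthesis <checkpoint_path> <prompt_audio_path> [gpu_index]
run_synthesis() {
  local ckpt="$1"
  local prompt="$2"
  local gpu="${3:-0}"   # GPU index for CUDA_VISIBLE_DEVICES; defaults to 0

  if [ ! -r "$ckpt" ]; then
    echo "checkpoint not readable: $ckpt" >&2
    return 1
  fi
  if [ ! -r "$prompt" ]; then
    echo "speech prompt not readable: $prompt" >&2
    return 1
  fi
  if [ ! -f scripts/synth_one_sample.sh ]; then
    echo "scripts/synth_one_sample.sh not found; run from the repository root" >&2
    return 1
  fi

  # The script still reads its own settings from lines 3-5; the checks above
  # only confirm that the files you configured there actually exist.
  CUDA_VISIBLE_DEVICES="$gpu" bash scripts/synth_one_sample.sh
}
```

Failing early on a missing file gives a clearer message than an error raised partway through model loading.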

Coming soon