Code used for TransGAN: Two Pure Transformers Can Make One Strong GAN, and That Can Scale Up.

- Gradient checkpointing using torch.utils.checkpoint

- 16-bit precision training

- Distributed Training (Faster!)

- IS/FID Evaluation

- Gradient Accumulation

- Stronger Data Augmentation

- Self-Modulation
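
As a rough illustration of two of the features listed above, the sketch below combines gradient checkpointing via `torch.utils.checkpoint` with gradient accumulation. It is not the repository's training code: `TinyBlock`, `TinyNet`, `train_steps`, the MSE loss, and all sizes are hypothetical stand-ins chosen so the example runs on CPU. Mixed precision and distributed training are left out.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


class TinyBlock(nn.Module):
    """Hypothetical stand-in for a transformer block (not the repo's model)."""

    def __init__(self, dim=32):
        super().__init__()
        self.net = nn.Sequential(nn.LayerNorm(dim), nn.Linear(dim, dim), nn.GELU())

    def forward(self, x):
        return x + self.net(x)


class TinyNet(nn.Module):
    """Stack of TinyBlocks, optionally wrapped in gradient checkpointing."""

    def __init__(self, dim=32, depth=4, use_checkpoint=True):
        super().__init__()
        self.blocks = nn.ModuleList([TinyBlock(dim) for _ in range(depth)])
        self.head = nn.Linear(dim, 1)
        self.use_checkpoint = use_checkpoint

    def forward(self, x):
        for block in self.blocks:
            if self.use_checkpoint and self.training:
                # Do not store the block's intermediate activations;
                # they are recomputed during the backward pass.
                x = checkpoint(block, x, use_reentrant=False)
            else:
                x = block(x)
        return self.head(x)


def train_steps(model, batches, accum_steps=2, lr=1e-3):
    """Run one pass over `batches`, stepping the optimizer every `accum_steps` batches."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    model.train()
    optimizer.zero_grad()
    updates = 0
    for i, (x, y) in enumerate(batches, start=1):
        loss = nn.functional.mse_loss(model(x), y)
        # Scale so the accumulated gradient matches the mean over the larger batch.
        (loss / accum_steps).backward()
        if i % accum_steps == 0:
            optimizer.step()
            optimizer.zero_grad()
            updates += 1
    return updates


if __name__ == "__main__":
    torch.manual_seed(0)
    data = [(torch.randn(8, 32), torch.randn(8, 1)) for _ in range(4)]
    print("optimizer updates:", train_steps(TinyNet(), data, accum_steps=2))
```

Checkpointing trades extra compute for lower activation memory, while accumulation emulates a larger batch without holding it in memory at once, so the two are often used together when memory is the bottleneck.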

python exp/cifar_train.py

First download the CIFAR checkpoint and place it at ./cifar_checkpoint. Then run the following script.

python exp/cifar_test.py
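
If it helps, the evaluation step can be wrapped in a small guard that fails early when the checkpoint is missing. This is only a sketch: `checkpoint_ready` and `run_cifar_test` are hypothetical helper names, not part of the repository. Because the instructions do not say whether `./cifar_checkpoint` is a file or a directory, the check accepts either.

```python
import subprocess
import sys
from pathlib import Path


def checkpoint_ready(ckpt_path="./cifar_checkpoint"):
    """Return True if ckpt_path is an existing file or a non-empty directory."""
    path = Path(ckpt_path)
    if path.is_dir():
        return any(path.iterdir())
    return path.is_file()


def run_cifar_test(ckpt_path="./cifar_checkpoint", script="exp/cifar_test.py"):
    """Run the test script with the current interpreter once the checkpoint is in place."""
    if not checkpoint_ready(ckpt_path):
        raise FileNotFoundError(
            f"No checkpoint found at {ckpt_path}; download it before running {script}."
        )
    # check=True raises CalledProcessError if the script exits with a non-zero status.
    return subprocess.run([sys.executable, script], check=True)
```

Calling `run_cifar_test()` from the repository root is then equivalent to the command above, with a clearer error when the checkpoint has not been downloaded yet.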

The README is still to be updated.

The codebase builds on AutoGAN and pytorch-image-models.

If you find this repo helpful, please cite:

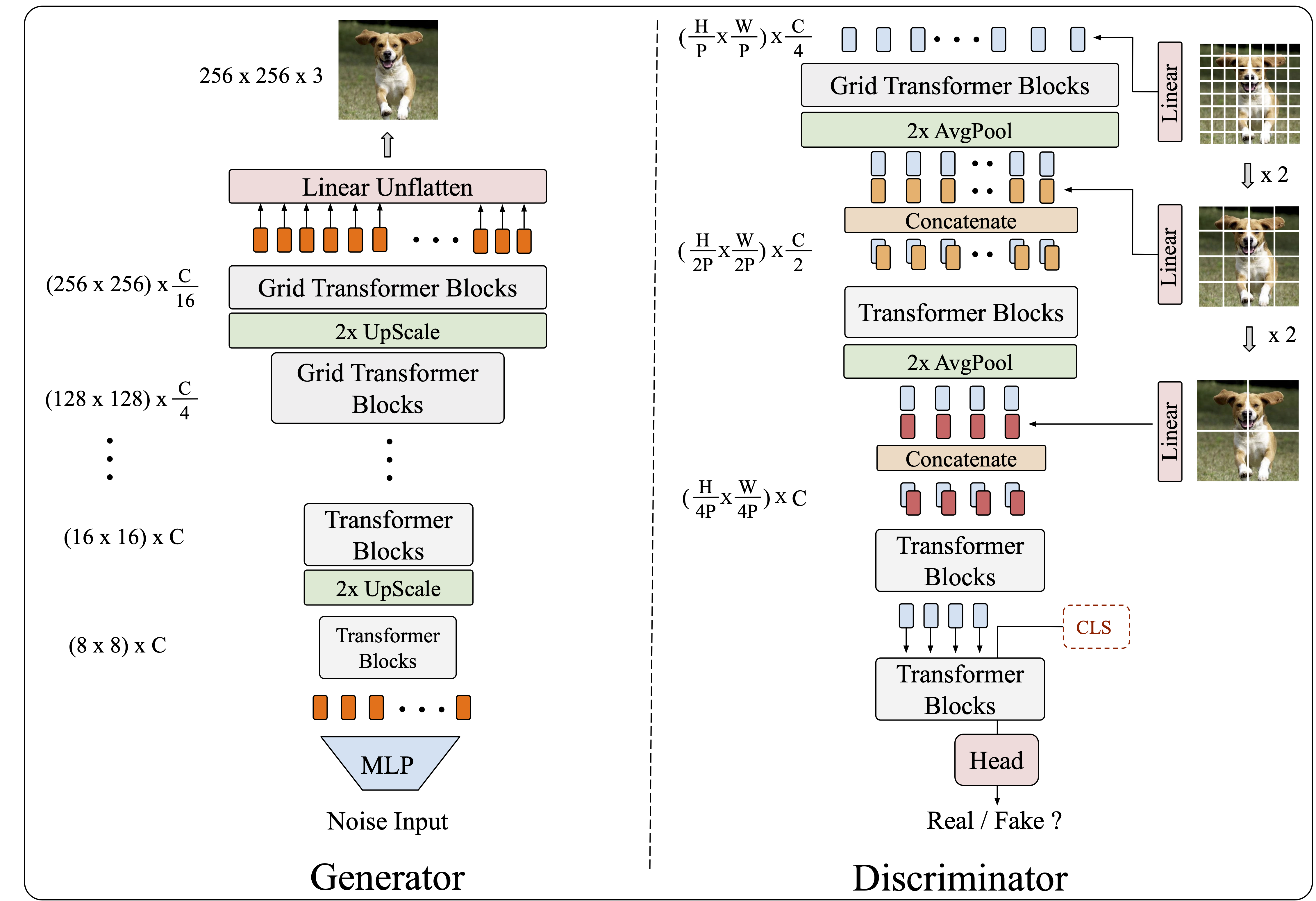

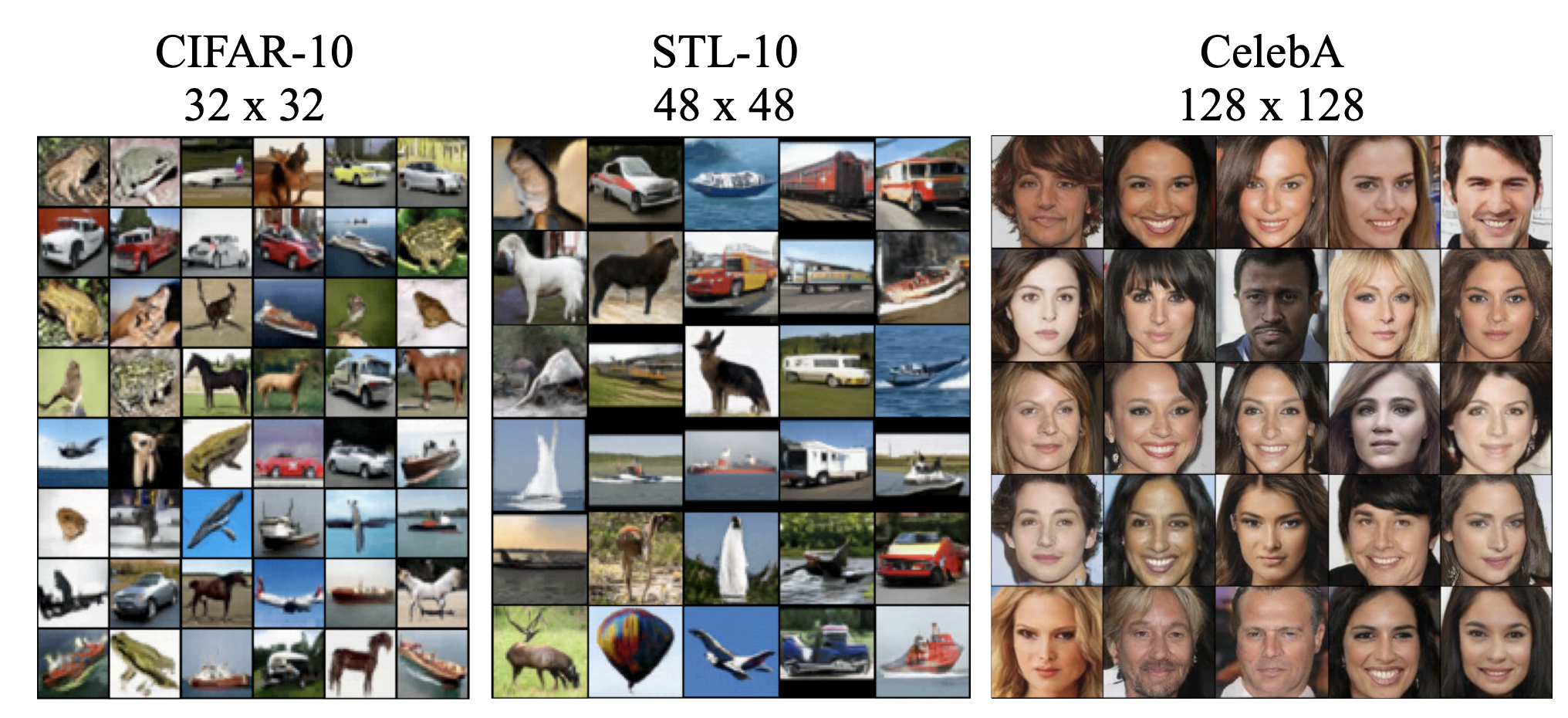

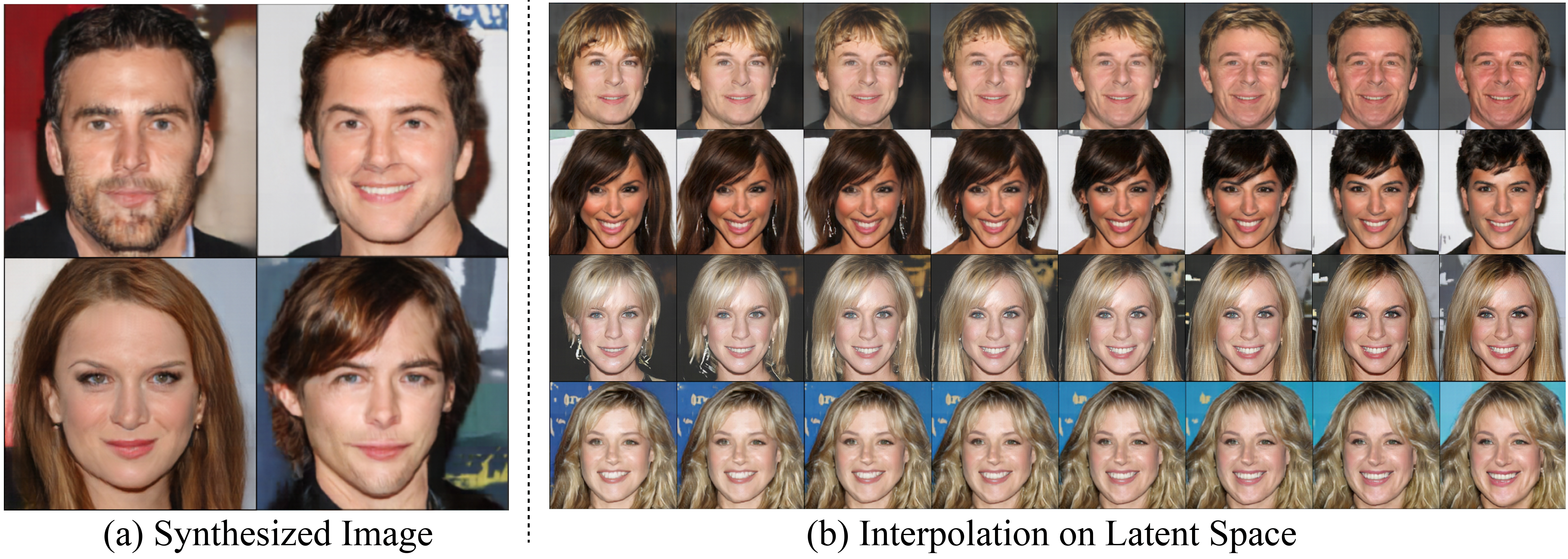

@article{jiang2021transgan,
  title={Transgan: Two pure transformers can make one strong gan, and that can scale up},
  author={Jiang, Yifan and Chang, Shiyu and Wang, Zhangyang},
  journal={Advances in Neural Information Processing Systems},
  volume={34},
  year={2021}
}