[ECCV 2024 Oral] Rethinking Data Augmentation for Robust LiDAR Semantic Segmentation in Adverse Weather

Junsung Park, Kyungmin Kim, Hyunjung Shim

CVML Lab. KAIST AI.

[Project Page]

Official implementation of "Rethinking Data Augmentation for Robust LiDAR Semantic Segmentation in Adverse Weather", accepted to ECCV 2024.

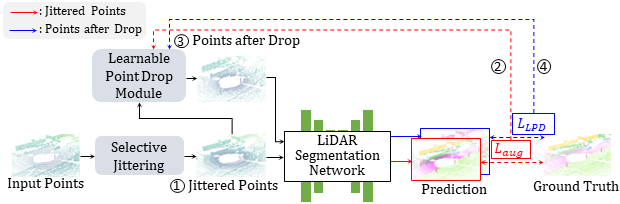

Existing LiDAR semantic segmentation methods often struggle in adverse weather conditions. Previous work has addressed this by simulating adverse weather or applying general-purpose data augmentation, but it lacks a detailed analysis of how adverse weather actually degrades performance. We identify the key factors responsible: geometric perturbation caused by refraction, and point drop caused by energy absorption and occlusion. Based on these findings, we propose two new data augmentation techniques: Selective Jittering (SJ), which mimics geometric perturbation, and Learnable Point Drop (LPD), which approximates point drop patterns using a Deep Q-Network (DQN). These techniques strengthen the model by exposing it to the identified vulnerabilities during training, without requiring precise weather simulation.

Fig. The overall training process of our methods.
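The two augmentations can be illustrated with a minimal NumPy sketch. This is not the repository's implementation: it assumes that SJ perturbs only the coordinates of a randomly selected subset of points (here, points inside a randomly sampled range band) and that the LPD agent chooses among a small, hypothetical discrete set of drop actions (an azimuth sector plus a drop ratio) with an epsilon-greedy policy over Q-values. The names `selective_jitter`, `apply_drop_action`, `epsilon_greedy`, `ACTIONS`, and all numeric defaults are illustrative assumptions; training the Q-network itself (reward, replay memory, target network) is omitted.

```python
import numpy as np

def selective_jitter(points, rng, band_width=10.0, max_range=50.0, sigma=0.05):
    """Add Gaussian noise to the xyz of points inside a random range band.

    ASSUMPTION: selecting points by a range band is illustrative only.
    points: (N, 4) array of x, y, z, intensity. Intensity is left untouched.
    """
    out = points.copy()
    ranges = np.linalg.norm(out[:, :3], axis=1)
    lo = rng.uniform(0.0, max_range - band_width)
    mask = (ranges >= lo) & (ranges < lo + band_width)
    out[mask, :3] += rng.normal(0.0, sigma, size=(int(mask.sum()), 3))
    return out

# Hypothetical discrete action space: (azimuth sector out of 8, drop ratio).
ACTIONS = [(s, r) for s in range(8) for r in (0.25, 0.5, 0.75)]

def apply_drop_action(points, action, rng, num_sectors=8):
    """Randomly drop a fraction of the points that fall in one azimuth sector."""
    sector, ratio = action
    azimuth = np.arctan2(points[:, 1], points[:, 0])  # in [-pi, pi]
    sector_ids = ((azimuth + np.pi) / (2 * np.pi) * num_sectors).astype(int)
    sector_ids = np.clip(sector_ids, 0, num_sectors - 1)
    drop = (sector_ids == sector) & (rng.random(len(points)) < ratio)
    return points[~drop]

def epsilon_greedy(q_values, epsilon, rng):
    """Pick a random action with probability epsilon, else the argmax-Q action."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

rng = np.random.default_rng(0)
scan = rng.uniform(-40, 40, size=(1000, 4)).astype(np.float32)
jittered = selective_jitter(scan, rng)
q_values = rng.normal(size=len(ACTIONS))  # stand-in for a Q-network's output
action = ACTIONS[epsilon_greedy(q_values, epsilon=0.1, rng=rng)]
dropped = apply_drop_action(jittered, action, rng)
```

Keeping the action space discrete is what makes a DQN-style agent applicable: the Q-network only has to score a fixed list of drop patterns rather than output a per-point mask.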

- [2024.09] - Our paper has been selected for an ORAL PRESENTATION at ECCV 2024!

- [2024.08] - Our project page is now open! Check it out here!

- [2024.08] - The official implementation is released! Our paper is also available on arXiv; click here to check it out.

```shell
conda create -n lidar_weather python=3.8 -y && conda activate lidar_weather
conda install pytorch==1.10.0 torchvision==0.11.0 cudatoolkit=11.3 -c pytorch -y
pip install -U openmim && mim install mmengine && mim install 'mmcv>=2.0.0rc4, <2.1.0' && mim install 'mmdet>=3.0.0, <3.2.0'
git clone https://github.com/engineerJPark/LiDARWeather.git
cd LiDARWeather && pip install -v -e .
pip install cumm-cu113 && pip install spconv-cu113
sudo apt-get install libsparsehash-dev
export PATH=/usr/local/cuda/bin:$PATH && pip install --upgrade git+https://github.com/mit-han-lab/torchsparse.git@v1.4.0
pip install nuscenes-devkit
pip install wandb
```

Please refer to INSTALL.md for the installation details.
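After installation, a quick import check can catch a missing dependency before a long training run. The helper below is a hypothetical sketch, not part of the repository: it only reports whether each package can be found, without importing it, and the import names (for example `mmdet3d` for the editable install of this repository, `nuscenes` for `nuscenes-devkit`) are assumptions about what the packages above expose.

```python
import importlib.util

# ASSUMPTION: import names corresponding to the packages installed above.
REQUIRED_MODULES = [
    "torch", "torchvision", "mmengine", "mmcv", "mmdet", "mmdet3d",
    "spconv", "torchsparse", "nuscenes", "wandb",
]

def check_environment(modules=REQUIRED_MODULES):
    """Return {module_name: bool} telling whether each top-level module is findable."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}

if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:12s} {'OK' if ok else 'MISSING'}")
```

`find_spec` locates a module without executing it, so the check is fast; it does not catch version mismatches (for example an `mmcv` outside the pinned range), which still surface only at import time.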

Please refer to DATA_PREPARE.md for details on preparing the SemanticKITTI, SynLiDAR, SemanticSTF, and SemanticKITTI-C datasets.
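For reference, SemanticKITTI stores each scan as a flat binary file of float32 `(x, y, z, remission)` records and each label file as one uint32 per point, with the semantic class in the lower 16 bits and the instance id in the upper 16 bits. The reader below is a minimal sketch of that format; the function name `load_semantickitti_scan` is hypothetical, and the remapping from raw label ids to training classes that the dataset configs perform is not shown.

```python
import numpy as np

def load_semantickitti_scan(bin_path, label_path=None):
    """Load one SemanticKITTI scan and, optionally, its per-point labels.

    Returns (points, semantic, instance); the last two are None when no
    label file is given.
    """
    points = np.fromfile(bin_path, dtype=np.float32).reshape(-1, 4)
    if label_path is None:
        return points, None, None
    raw = np.fromfile(label_path, dtype=np.uint32)
    if raw.shape[0] != points.shape[0]:
        raise ValueError("scan and label files have different point counts")
    semantic = raw & 0xFFFF  # lower 16 bits: semantic class id
    instance = raw >> 16     # upper 16 bits: instance id
    return points, semantic, instance
```

Within the dataset root, a scan and its labels live at paths such as `sequences/00/velodyne/000000.bin` and `sequences/00/labels/000000.label`.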

```shell
# Train MinkUNet and CENet with SJ+LPD on SemanticKITTI (the trailing argument is the number of GPUs)
./tools/dist_train.sh configs/lidarweather_minkunet/sj+lpd+minkunet_semantickitti.py 4
./tools/dist_train.sh projects/CENet/lidarweather_cenet/sj+lpd+cenet_semantickitti.py 4
```

```shell
# Evaluate the trained checkpoints
python tools/test.py configs/lidarweather_minkunet/sj+lpd+minkunet_semantickitti.py work_dirs/sj+lpd+minkunet_semantickitti/epoch_15.pth
python tools/test.py projects/CENet/lidarweather_cenet/sj+lpd+cenet_semantickitti.py work_dirs/sj+lpd+cenet_semantickitti/epoch_50.pth
```

Please refer to GET_STARTED.md for more details.

| Methods | D-fog | L-fog | Rain | Snow | mIoU |
|---|---|---|---|---|---|
| Oracle | 51.9 | 54.6 | 57.9 | 53.7 | 54.7 |
| Baseline | 30.7 | 30.1 | 29.7 | 25.3 | 31.4 |
| LaserMix | 23.2 | 15.5 | 9.3 | 7.8 | 14.7 |
| PolarMix | 21.3 | 14.9 | 16.5 | 9.3 | 15.3 |
| PointDR* | 37.3 | 33.5 | 35.5 | 26.9 | 33.9 |
| Baseline+SJ+LPD | 36.0 | 37.5 | 37.6 | 33.1 | 39.5 |
| Increments to baseline | +5.3 | +7.4 | +7.9 | +7.8 | +8.1 |

| Methods | D-fog | L-fog | Rain | Snow | mIoU |
|---|---|---|---|---|---|
| Oracle | 51.9 | 54.6 | 57.9 | 53.7 | 54.7 |
| Baseline | 15.24 | 15.97 | 16.83 | 12.76 | 15.45 |
| LaserMix | 15.32 | 17.95 | 18.55 | 13.8 | 16.85 |
| PolarMix | 16.47 | 18.69 | 19.63 | 15.98 | 18.09 |
| PointDR* | 19.09 | 20.28 | 25.29 | 18.98 | 19.78 |
| Baseline+SJ+LPD | 19.08 | 20.65 | 21.97 | 17.27 | 20.08 |
| Increments to baseline | +3.8 | +4.7 | +5.1 | +4.5 | +4.6 |
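The last column of these tables is mIoU (mean intersection-over-union). For class $c$, $\mathrm{IoU}_c = \mathrm{TP}_c / (\mathrm{TP}_c + \mathrm{FP}_c + \mathrm{FN}_c)$, and mIoU averages $\mathrm{IoU}_c$ over classes. The sketch below computes it from a confusion matrix; it is a generic illustration, not the evaluation code behind `tools/test.py`, and it assumes that ignored points are already filtered out and that classes absent from both ground truth and prediction are skipped.

```python
import numpy as np

def confusion_matrix(gt, pred, num_classes):
    """Rows index ground-truth classes, columns index predicted classes."""
    idx = gt * num_classes + pred
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def mean_iou(conf):
    """Per-class IoU = TP / (TP + FP + FN); mIoU averages over classes that
    appear in the ground truth or the prediction."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c, but not c
    fn = conf.sum(axis=1) - tp  # truly c, but predicted otherwise
    denom = tp + fp + fn
    valid = denom > 0
    iou = np.zeros_like(tp)
    iou[valid] = tp[valid] / denom[valid]
    return iou, iou[valid].mean()

gt = np.array([0, 0, 1, 1, 2, 2])
pred = np.array([0, 1, 1, 1, 2, 0])
iou, miou = mean_iou(confusion_matrix(gt, pred, num_classes=3))
# iou ≈ [0.333, 0.667, 0.5], miou = 0.5
```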

| Method | SemanticSTF | SemanticKITTI-C |
|---|---|---|
| CENet | 14.2 | 49.3 |
| CENet+Ours | 22.0 (+7.8) | 53.2 (+3.9) |
| SPVCNN | 28.1 | 52.5 |
| SPVCNN+Ours | 38.4 (+10.3) | 52.9 (+0.4) |
| Minkowski | 31.4 | 53.0 |
| Minkowski+Ours | 39.5 (+8.1) | 58.6 (+5.6) |

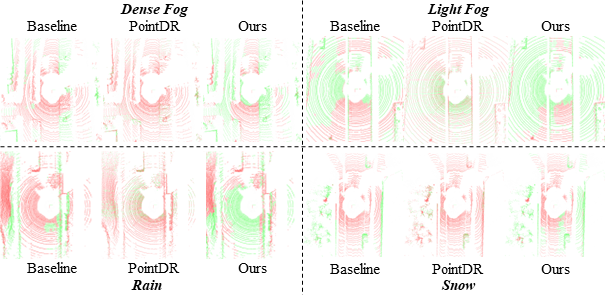

Fig. Qualitative results of our methods.

This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Our codebase builds heavily on MMDetection3D and the PyTorch DQN tutorial. MMDetection3D is an open-source, PyTorch-based toolbox that aims to be the next-generation platform for general 3D perception. It is part of the OpenMMLab project developed by MMLab.

If you find this work helpful, please consider citing our paper:

```bibtex
@article{park2024rethinking,
  title={Rethinking Data Augmentation for Robust LiDAR Semantic Segmentation in Adverse Weather},
  author={Park, Junsung and Kim, Kyungmin and Shim, Hyunjung},
  journal={arXiv preprint arXiv:2407.02286},
  year={2024}
}
```

This citation will be updated after the proceedings are published.