🤗 Model • 📚 Data • 📃 Paper • 🌐 Demo

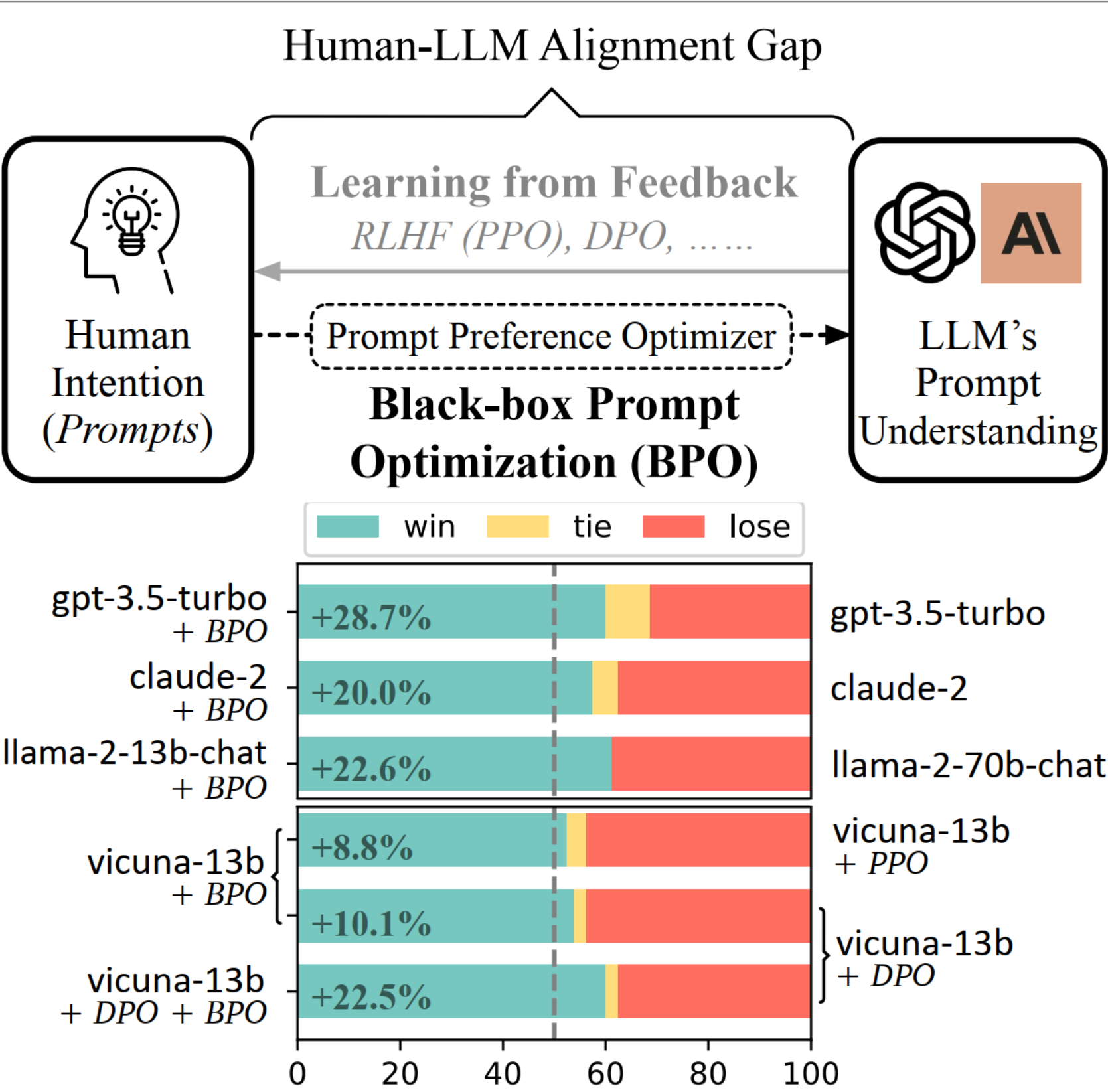

(Upper) Black-box Prompt Optimization (BPO) offers a conceptually new perspective for bridging the gap between humans and LLMs. (Lower) In pairwise evaluation on Vicuna Eval, BPO further aligns gpt-3.5-turbo and claude-2 without any training. It also outperforms both PPO and DPO, and its improvements are orthogonal to theirs.
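The core idea in the caption is that the target LLM stays frozen and only its input changes: a prompt optimizer rewrites the user's prompt, and the rewritten prompt goes to the unmodified black-box model. The snippet below is a minimal sketch of that two-stage flow, not code from this repository. `answer_with_bpo`, `PromptOptimizer` and `BlackBoxLLM` are hypothetical names, and the fallback to the original prompt is an assumption added for illustration.

```python
from typing import Callable

# Hypothetical signatures: both stages are treated as opaque text-to-text functions.
PromptOptimizer = Callable[[str], str]
BlackBoxLLM = Callable[[str], str]


def answer_with_bpo(user_prompt: str, optimizer: PromptOptimizer, llm: BlackBoxLLM) -> str:
    """Rewrite the user prompt, then query the unmodified LLM with the rewrite.

    The LLM's weights are never touched; only its input changes.
    """
    optimized = optimizer(user_prompt).strip()
    # Assumption (not from the BPO repo): fall back to the original prompt
    # if the optimizer returns nothing usable.
    prompt_to_send = optimized if optimized else user_prompt
    return llm(prompt_to_send)
```

In practice `optimizer` would wrap the BPO model (loaded as in the inference code in this README) and `llm` would wrap an API client for a model such as gpt-3.5-turbo or claude-2; both wrappers are left out to keep the sketch self-contained.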

We have released our model and data on HuggingFace.

We have built a demo for BPO on HuggingFace.

The prompt preference optimization model can be downloaded from HuggingFace.
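Around the call to `model.generate`, the inference code performs two plain string operations: it wraps the user prompt in the `[INST] ... [/INST]` template, and it keeps only the text generated after `[/INST]` as the optimized prompt. The sketch below isolates those two steps as pure functions so they can be checked without loading the model. The function names are hypothetical; the template string is copied from the inference code.

```python
# Template copied verbatim from the BPO inference code.
PROMPT_TEMPLATE = (
    "[INST] You are an expert prompt engineer. Please help me improve this prompt "
    "to get a more helpful and harmless response:\n{} [/INST]"
)
END_TAG = "[/INST]"


def build_bpo_input(user_prompt: str) -> str:
    """Wrap a raw user prompt in the optimizer's instruction template."""
    return PROMPT_TEMPLATE.format(user_prompt)


def extract_optimized_prompt(decoded_text: str) -> str:
    """Return the text after the first [/INST] tag, i.e. the optimized prompt."""
    if END_TAG not in decoded_text:
        raise ValueError("decoded text does not contain the [/INST] tag")
    # maxsplit=1 keeps everything after the first tag, even if the
    # generated text happens to contain the tag again.
    return decoded_text.split(END_TAG, 1)[1].strip()
```

If the user prompt itself might contain `[/INST]`, a sturdier option is to decode only the newly generated tokens, e.g. `tokenizer.decode(output[0][model_inputs["input_ids"].shape[1]:], skip_special_tokens=True)`, which avoids string matching altogether.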

Inference code (please refer to `src/infer_example.py` for more instructions on how to optimize your prompts):

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_path = 'THUDM/BPO'

prompt_template = "[INST] You are an expert prompt engineer. Please help me improve this prompt to get a more helpful and harmless response:\n{} [/INST]"

device = 'cuda:0'
model = AutoModelForCausalLM.from_pretrained(model_path).half().eval().to(device)
# for 8-bit loading (requires the bitsandbytes package)
# model = AutoModelForCausalLM.from_pretrained(model_path, device_map=device, load_in_8bit=True)
tokenizer = AutoTokenizer.from_pretrained(model_path)

text = 'Tell me about Harry Potter'
prompt = prompt_template.format(text)
model_inputs = tokenizer(prompt, return_tensors="pt").to(device)
output = model.generate(**model_inputs, max_new_tokens=1024, do_sample=True, top_p=0.9, temperature=0.6, num_beams=1)
# keep only the text generated after the [/INST] tag, i.e. the optimized prompt
resp = tokenizer.decode(output[0], skip_special_tokens=True).split('[/INST]')[1].strip()

print(resp)
```

The BPO dataset can be found on HuggingFace.

The alpaca_reproduce directory contains the BPO-reproduced Alpaca dataset. The data format is:

```
{
    "instruction": {instruction},
    "input": {input},
    "output": {output},
    "optimized_prompt": {optimized_prompt},
    "res": {res}
}
```

- {instruction}, {input}, and {output} are elements from the original dataset.

- {optimized_prompt} is the BPO-optimized instruction.

- {res} is the response from text-davinci-003 given the {optimized_prompt}.
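A small loader can catch malformed records before they are used downstream. The sketch below assumes each file in `alpaca_reproduce` is a single JSON array of objects with the five fields listed above; if the files are actually JSON Lines, the parsing step would need to read one object per line instead. `BPOAlpacaRecord` and `parse_records` are hypothetical names, not part of the repository.

```python
import json
from dataclasses import dataclass
from typing import List

REQUIRED_FIELDS = ("instruction", "input", "output", "optimized_prompt", "res")


@dataclass
class BPOAlpacaRecord:
    instruction: str       # original Alpaca instruction
    input: str             # original Alpaca input (may be empty)
    output: str            # original Alpaca output
    optimized_prompt: str  # BPO-optimized instruction
    res: str               # text-davinci-003 response to optimized_prompt


def parse_records(raw_json: str) -> List[BPOAlpacaRecord]:
    """Parse a JSON array of records, failing loudly on missing fields.

    Assumption: the file holds one JSON array, not JSON Lines.
    """
    data = json.loads(raw_json)
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of records")
    records = []
    for i, item in enumerate(data):
        missing = [k for k in REQUIRED_FIELDS if k not in item]
        if missing:
            raise KeyError(f"record {i} is missing fields: {missing}")
        records.append(BPOAlpacaRecord(**{k: item[k] for k in REQUIRED_FIELDS}))
    return records
```

Usage would look like `parse_records(open(path, encoding="utf-8").read())`, where `path` points at one of the dataset files.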

The testset directory contains all the test datasets we used, including:

- 200 prompts sampled from the BPO dataset

- 200 examples from the Dolly dataset

- 252 human evaluation instructions from Self-Instruct

- 80 user-oriented prompts from the Vicuna Eval dataset
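Results on these test sets are reported as pairwise comparisons, where a judge decides for each prompt whether one response wins, ties, or loses against another. The sketch below shows one straightforward way to turn such per-prompt judgments into win/tie/lose rates. It is an illustration under the assumption that judgments are already normalized to the labels `"win"`, `"tie"` and `"lose"`; it is not the repository's evaluation code, and `pairwise_rates` is a hypothetical name.

```python
from collections import Counter
from typing import Dict, Iterable

LABELS = ("win", "tie", "lose")


def pairwise_rates(judgments: Iterable[str]) -> Dict[str, float]:
    """Convert per-prompt pairwise judgments into win/tie/lose fractions."""
    counts = Counter(judgments)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments given")
    # Counter returns 0 for labels that never occur.
    return {label: counts[label] / total for label in LABELS}
```

For example, toy judgments over 80 prompts (40 wins, 20 ties, 20 losses; made-up numbers, not results from the paper) give rates of 0.5, 0.25 and 0.25.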

In all scripts, we have added #TODO comments to mark the places that must be modified before running. Please update these parts before executing each file.
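Before running anything, it can help to list every marker in one pass. The helper below is a hypothetical convenience, not part of the repository: it walks a directory tree and reports each line in a `.py` file that matches `#TODO`, allowing optional whitespace (`# TODO`) in case the spelling varies. Only Python files are scanned, which is an assumption about where the markers live.

```python
import re
from pathlib import Path
from typing import List, Tuple

# Matches "#TODO" and "# TODO"; the exact spelling used in the repo is assumed.
TODO_PATTERN = re.compile(r"#\s*TODO")


def find_todos(root: str) -> List[Tuple[str, int, str]]:
    """Return (file, line number, line text) for every TODO marker under root."""
    hits = []
    for path in sorted(Path(root).rglob("*.py")):
        with path.open(encoding="utf-8", errors="replace") as fh:
            for lineno, line in enumerate(fh, start=1):
                if TODO_PATTERN.search(line):
                    hits.append((str(path), lineno, line.strip()))
    return hits
```

Calling `find_todos("src")` from the repository root and printing each tuple as `file:line: text` gives a checklist of edits to make before running the scripts.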

```bash
pip install -r requirements.txt
```

To construct the data yourself, run the following commands:

```bash
cd src/data_construction
# use pairwise feedback data to generate optimized prompts
python chatgpt_infer.py
# process the generated optimized prompts
python process_optimized_prompts.py
```

If you want to train your own prompt preference optimizer, run the following commands:

```bash
cd src/training
# pre-process fine-tuning data
python ../data_construction/process_en.py
python data_utils.py
# fine-tuning
python train.py
# inference
python infer_finetuning.py
```

- Datasets

- Inference Code

- Evaluation Code

- RLHF Code

- Fine-tuning code: llm_finetuning

- PPO code: DeepSpeed-Chat

- DPO code: LLaMA-Factory

- Evaluation Prompts: llm_judge and alpaca_eval

```bibtex
@article{cheng2023black,
  title={Black-Box Prompt Optimization: Aligning Large Language Models without Model Training},
  author={Cheng, Jiale and Liu, Xiao and Zheng, Kehan and Ke, Pei and Wang, Hongning and Dong, Yuxiao and Tang, Jie and Huang, Minlie},
  journal={arXiv preprint arXiv:2311.04155},
  year={2023}
}
```