TODO: Study the video first (installation).

A workspace to experiment with Apache Spark, Livy, and Airflow in a containerized Docker environment.

We'll be using the below tools and versions:

| Tool | Version | Notes |
|---|---|---|
| Java 8 SDK | openjdk version "1.8.0_282" | Installed locally to run the Spark driver (see brew installation options). |
| Scala | scala-sdk-2.11.12 | Running on JetBrains IntelliJ. |
| Python | 3.6 | Installed using conda 4.9.2. |
| PySpark | 2.4.6 | Installed inside a conda virtual environment. |
| Livy | 0.7.0-incubating | See release history here. |
| Airflow | 1.10.14 | For compatibility with apache-airflow-backport-providers-apache-livy. |
| Docker | 20.10.5 | See Mac installation instructions. |
| Bitnami Spark Docker Images | docker.io/bitnami/spark:2 | I use the Spark 2.4.6 images for compatibility with Apache Livy, which supports Spark 2.2.x to 2.4.x. |
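The Livy row above is the main version constraint in this setup. As a minimal sketch, the supported range can be checked in plain Python before wiring anything together. The helper name `livy_supports_spark` is hypothetical and is not part of Livy or PySpark; it only encodes the 2.2.x to 2.4.x range stated in the table.

```python
def livy_supports_spark(spark_version: str) -> bool:
    """Return True if spark_version (e.g. "2.4.6") is a 2.2.x to 2.4.x release.

    Hypothetical helper: the range comes from the Livy 0.7.0 compatibility
    note in the table above, not from any Livy API.
    """
    # Only the major and minor components matter for this check
    major, minor = (int(part) for part in spark_version.split(".")[:2])
    return major == 2 and 2 <= minor <= 4
```

With PySpark installed, you could pass `pyspark.__version__` to it: the 2.4.6 release listed above returns `True`, while any 3.x version returns `False`.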

The only Python packages you'll need are pyspark and requests.

You can use the provided requirements.txt file to create a virtual environment using the tool of your choice.

For example, with conda, you can create a virtual environment with:

conda create --no-default-packages -n spark-livy-on-airflow-workspace python=3.6Then activate using:

conda activate spark-livy-on-airflow-workspaceThen install the requirements with:

pip install -r requirements.txtAlternatively, you can use the provided environment.yml file and run:

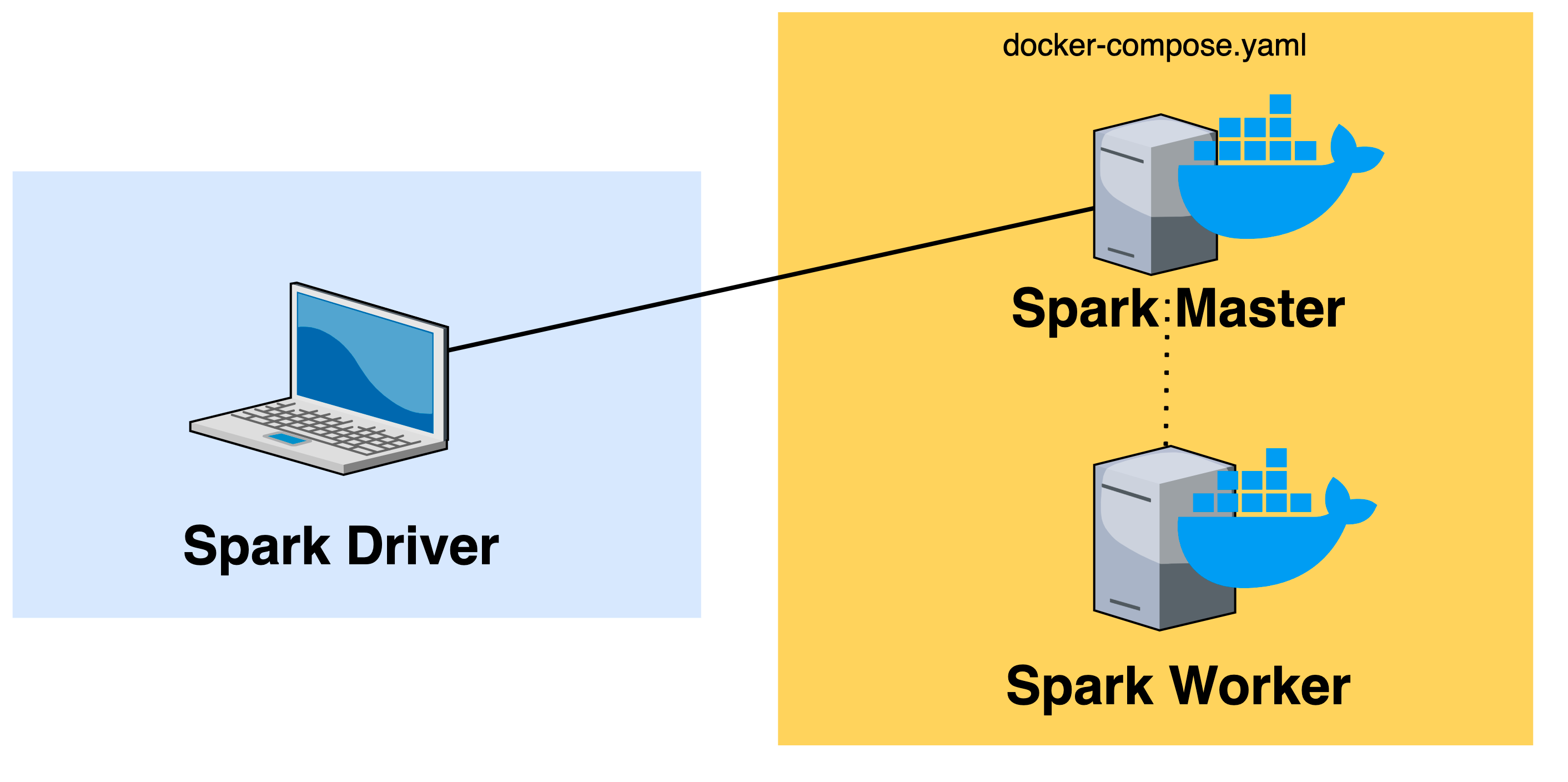

conda env create -n spark-livy-on-airflow-workspace -f environment.yamlThis first part will focus on executing a simple PySpark job on a

Dockerized Spark cluster based on the default docker-compose.yaml

provided in the bitnami-docker-spark repo.

Below is a rough outline of what this setup will look like.

To get started, we'll need to set two environment variables:

- SPARK_MASTER_URL: the endpoint that our Spark worker and driver use to connect to the master. Assuming you're using the docker-compose.yaml in this repo, set it to spark://spark-master:7077, which combines the name of the master's Docker container (spark-master) with the default port for the Spark master (7077).
- SPARK_DRIVER_HOST: the IP address of the machine where you're running your Spark driver (in our case, your personal workstation). See this discussion and this sample configuration for more details.
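If you'd rather catch a misconfiguration up front than find out at job time, the two variables can be validated before building the Spark configuration. The sketch below is one way to do that, assuming the docker-compose defaults described above. `spark_settings_from_env` is a hypothetical helper; unlike a plain `os.environ.get`, it refuses to run without `SPARK_DRIVER_HOST`, since no default value can stand in for an address the workers must reach.

```python
import os
from typing import List, Mapping, Optional, Tuple
from urllib.parse import urlparse

# Default from this repo's docker-compose.yaml: container name + default master port
DEFAULT_MASTER_URL = "spark://spark-master:7077"


def spark_settings_from_env(env: Optional[Mapping[str, str]] = None) -> List[Tuple[str, str]]:
    """Build (key, value) pairs for SparkConf.setAll from SPARK_MASTER_URL and SPARK_DRIVER_HOST.

    Hypothetical helper: raises ValueError instead of silently using a bad default.
    """
    env = os.environ if env is None else env

    master_url = env.get("SPARK_MASTER_URL", DEFAULT_MASTER_URL)
    parsed = urlparse(master_url)
    # A standalone master URL has the form spark://<host>:<port>
    if parsed.scheme != "spark" or not parsed.hostname or parsed.port is None:
        raise ValueError("Expected spark://<host>:<port>, got {!r}".format(master_url))

    driver_host = env.get("SPARK_DRIVER_HOST")
    if not driver_host:
        raise ValueError("SPARK_DRIVER_HOST must be an address the Spark workers can reach")

    return [
        ("spark.master", master_url),
        ("spark.driver.host", driver_host),
        ("spark.submit.deployMode", "client"),
        ("spark.driver.bindAddress", "0.0.0.0"),
    ]
```

Because it returns a list of (key, value) pairs, the result can be handed straight to `SparkConf().setAll(...)`.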

Both of these settings will be passed to the Spark configuration object:

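    import os
    from pyspark import SparkConf

    conf = SparkConf()
    conf.setAll(
        [
            ("spark.master", os.environ.get("SPARK_MASTER_URL", "spark://spark-master:7077")),
            # Must be an address the Spark workers can reach; there is no sensible default
            ("spark.driver.host", os.environ["SPARK_DRIVER_HOST"]),
            # The driver runs on your workstation, outside the cluster
            ("spark.submit.deployMode", "client"),
            # Listen on all interfaces so the containers can connect back
            ("spark.driver.bindAddress", "0.0.0.0"),
        ]
    )

To spin up the containers, run:

    docker-compose up -d

Same as the above, only in Scala: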

    import org.apache.spark.SparkConf
    import scala.util.Properties

    val conf = new SparkConf()
    conf.set("spark.master", Properties.envOrElse("SPARK_MASTER_URL", "spark://spark-master:7077"))
    // Must be an address the Spark workers can reach; there is no sensible default
    conf.set("spark.driver.host", sys.env("SPARK_DRIVER_HOST"))
    conf.set("spark.submit.deployMode", "client")
    conf.set("spark.driver.bindAddress", "0.0.0.0")

From the Apache Livy website:

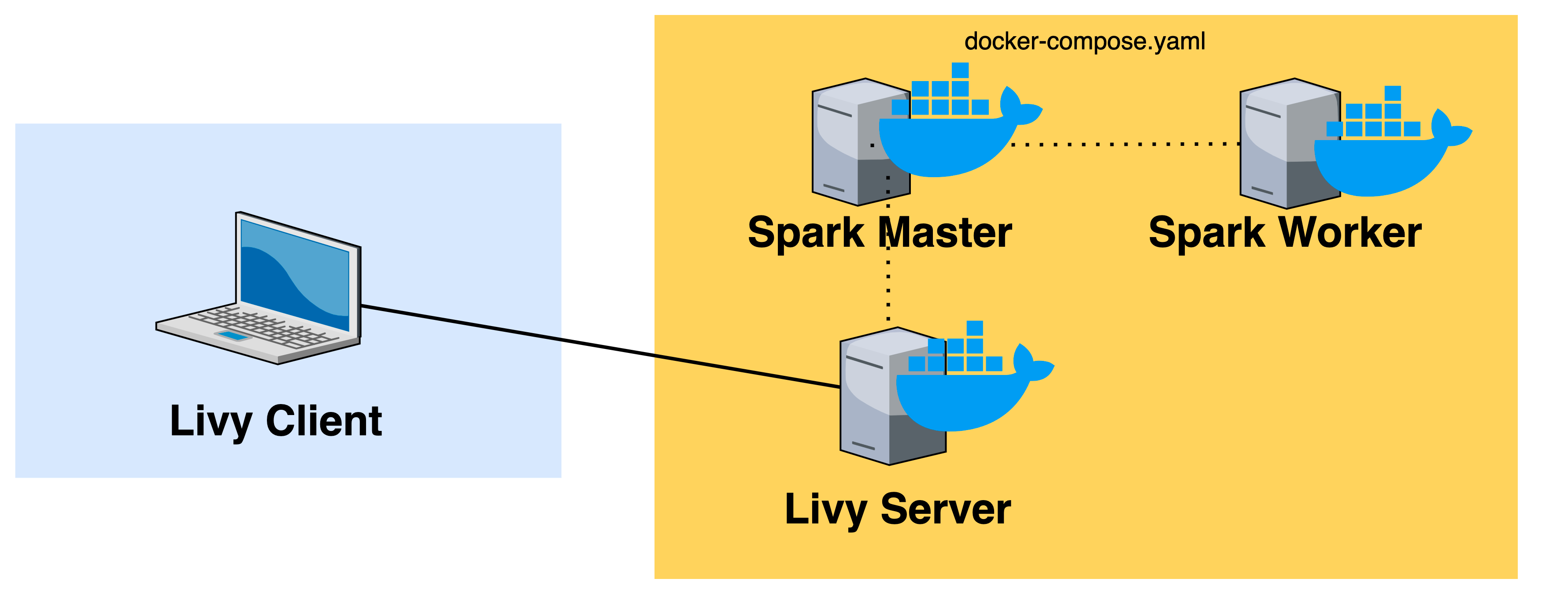

Apache Livy is a service that enables easy interaction with a Spark cluster over a REST interface. It enables easy submission of Spark jobs or snippets of Spark code, synchronous or asynchronous result retrieval, as well as Spark Context management, all via a simple REST interface or an RPC client library.

We'll now extend the Bitnami Docker image to include Apache Livy in our container architecture to achieve the below.

See this repo's Dockerfile for implementation details.

After starting the containers with docker-compose up -d, you can test your connection by creating a session:

    curl -X POST -d '{"kind": "pyspark"}' \
        -H "Content-Type: application/json" \
        localhost:8998/sessions

Then execute a sample command in the new session (the URL below assumes it was assigned ID 0):

    curl -X POST -d '{
        "kind": "pyspark",
        "code": "for i in range(1,10): print(i)"
    }' \
        -H "Content-Type: application/json" \
        localhost:8998/sessions/0/statements

See Livy session API examples.
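The same two calls can be scripted. Below is a rough Python sketch of the session workflow, assuming Livy is reachable at `localhost:8998` as in the curl examples. It uses `urllib` from the standard library rather than `requests` so it has no dependencies, and the function names are hypothetical. The endpoints (`POST /sessions`, `GET /sessions/{id}`, `POST /sessions/{id}/statements`, `GET /sessions/{id}/statements/{statementId}`, `DELETE /sessions/{id}`) are the ones described in the Livy REST API documentation. Polling is needed because a new session starts out in the `starting` state and a statement is only finished once it reaches `available`.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict, Optional, Set

LIVY_URL = "http://localhost:8998"  # assumes the port mapping used in the curl examples


def livy_request(method: str, path: str, payload: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Send a JSON request to Livy and decode the JSON response."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        LIVY_URL + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def wait_until(
    fetch: Callable[[], Dict[str, Any]],
    done_states: Set[str],
    timeout: float = 120.0,
    interval: float = 2.0,
) -> Dict[str, Any]:
    """Call fetch() until its "state" is in done_states; raise TimeoutError if time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        body = fetch()
        if body["state"] in done_states:
            return body
        if time.monotonic() >= deadline:
            raise TimeoutError("still {!r} after {}s".format(body["state"], timeout))
        time.sleep(interval)


def run_pyspark_snippet(code: str) -> Optional[Dict[str, Any]]:
    """Create a pyspark session, run one statement, delete the session, and return the output."""
    session_id = livy_request("POST", "/sessions", {"kind": "pyspark"})["id"]
    try:
        # Wait for the Spark context to come up (or for the session to fail)
        session = wait_until(
            lambda: livy_request("GET", "/sessions/{}".format(session_id)),
            {"idle", "error", "dead", "killed"},
        )
        if session["state"] != "idle":
            raise RuntimeError("session {} ended up {!r}".format(session_id, session["state"]))

        statements = "/sessions/{}/statements".format(session_id)
        statement_id = livy_request("POST", statements, {"code": code})["id"]
        statement = wait_until(
            lambda: livy_request("GET", "{}/{}".format(statements, statement_id)),
            {"available", "error", "cancelled"},
        )
        return statement.get("output")
    finally:
        # Free the session's resources on the cluster
        livy_request("DELETE", "/sessions/{}".format(session_id))
```

For example, `run_pyspark_snippet("for i in range(1,10): print(i)")` should return a dict whose `data` entry holds the printed numbers under `text/plain`, mirroring the JSON returned by the curl call.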

See Livy batch API documentation.
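For non-interactive jobs, the batch API submits a whole application instead of individual statements. A minimal sketch, assuming the script is already at a path the Livy server can read inside its container: the path and job name below are hypothetical, and Livy may need `livy.file.local-dir-whitelist` configured before it accepts local files. The function names are also hypothetical; the request fields (`file`, `args`, `conf`, `name`) and the `POST /batches` and `GET /batches/{id}/state` endpoints come from the Livy batch API.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional

LIVY_URL = "http://localhost:8998"  # assumes the port mapping used in the curl examples


def build_batch_payload(
    file: str,
    args: Optional[List[str]] = None,
    conf: Optional[Dict[str, str]] = None,
    name: Optional[str] = None,
) -> Dict[str, Any]:
    """Assemble a POST /batches body; only "file" is required."""
    payload: Dict[str, Any] = {"file": file}
    if args:
        payload["args"] = list(args)
    if conf:
        payload["conf"] = dict(conf)
    if name:
        payload["name"] = name
    return payload


def submit_batch(payload: Dict[str, Any]) -> Dict[str, Any]:
    """POST the payload to /batches and return Livy's JSON description of the batch."""
    request = urllib.request.Request(
        LIVY_URL + "/batches",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def batch_state(batch_id: int) -> str:
    """Return the current state of a batch via GET /batches/{id}/state."""
    with urllib.request.urlopen("{}/batches/{}/state".format(LIVY_URL, batch_id)) as response:
        return json.loads(response.read().decode("utf-8"))["state"]


# Hypothetical example: a PySpark script copied into the Livy container
example = build_batch_payload("/opt/spark-apps/pi.py", args=["10"], name="pi-example")
```

A batch is finished once its state is `success`, `dead`, or `killed`, so `batch_state` can be polled the same way as a session.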

#TODO

#TODO