Train fast Speech-to-Text networks in different languages. An overview of the approach can be found in the paper Scribosermo: Fast Speech-to-Text models for German and other Languages.

Note: This repository is focused on training STT-networks, but you can find a short and experimental inference example here.

Requirements are:

- A computer with a modern GPU and a working nvidia+docker setup
- Basic knowledge of Python and deep learning
- A lot of training data in your required language (preferably >100h for fine-tuning and >1000h for new languages)

File structure will look as follows:

    my_speech2text_folder
        checkpoints
        corcua               <- Library for datasets
        data_original
        data_prepared
        Scribosermo          <- This repository
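The data and checkpoint folders from the layout above could be created with a short script. This is only a sketch: the helper name `create_workspace` is hypothetical, and the two repositories (`corcua` and `Scribosermo`) are assumed to be cloned into the same root folder separately.

```python
from pathlib import Path


def create_workspace(root: Path) -> list:
    """Create the empty working folders next to the two cloned repositories."""
    # Folder names are taken from the layout above; the function itself is
    # an illustrative helper and not part of this repository.
    folders = [root / name for name in ("checkpoints", "data_original", "data_prepared")]
    for folder in folders:
        folder.mkdir(parents=True, exist_ok=True)
    return folders


if __name__ == "__main__":
    for path in create_workspace(Path("my_speech2text_folder")):
        print("created", path)
```

Calling the helper twice is harmless, because existing folders are left untouched.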

Clone corcua:

    git clone https://gitlab.com/Jaco-Assistant/corcua.git

Build and run docker container:

    docker build -f Scribosermo/Containerfile -t scribosermo ./Scribosermo/
    ./Scribosermo/run_container.sh

Follow readme in preprocessing directory for preparing the voice data.

Follow readme in langmodel directory for generating the language model.

Follow readme in training directory for training your network.

For easier inference follow the exporting readme in extras/exporting directory.

You can find more details about the currently used datasets here.

| Language | DE | EN | ES | FR | IT | PL | Noise |
|---|---|---|---|---|---|---|---|
| Duration (hours) | 2370 | 982 | 817 | 1028 | 360 | 169 | 152 |
| Datasets | 37 | 1 | 8 | 7 | 5 | 3 | 3 |

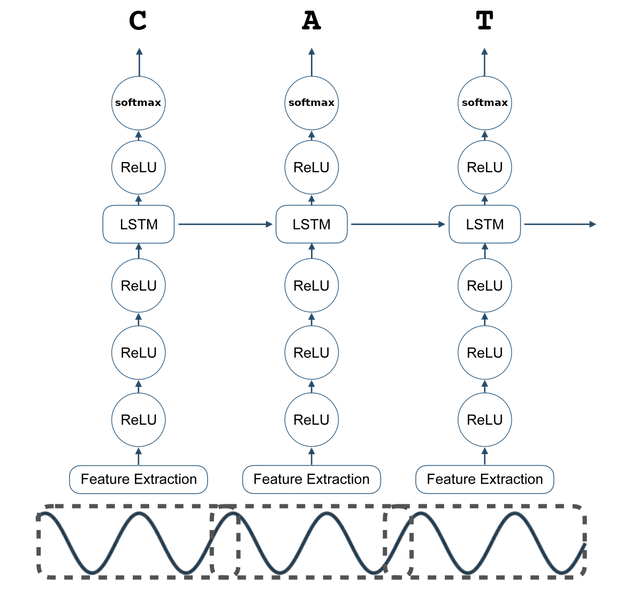

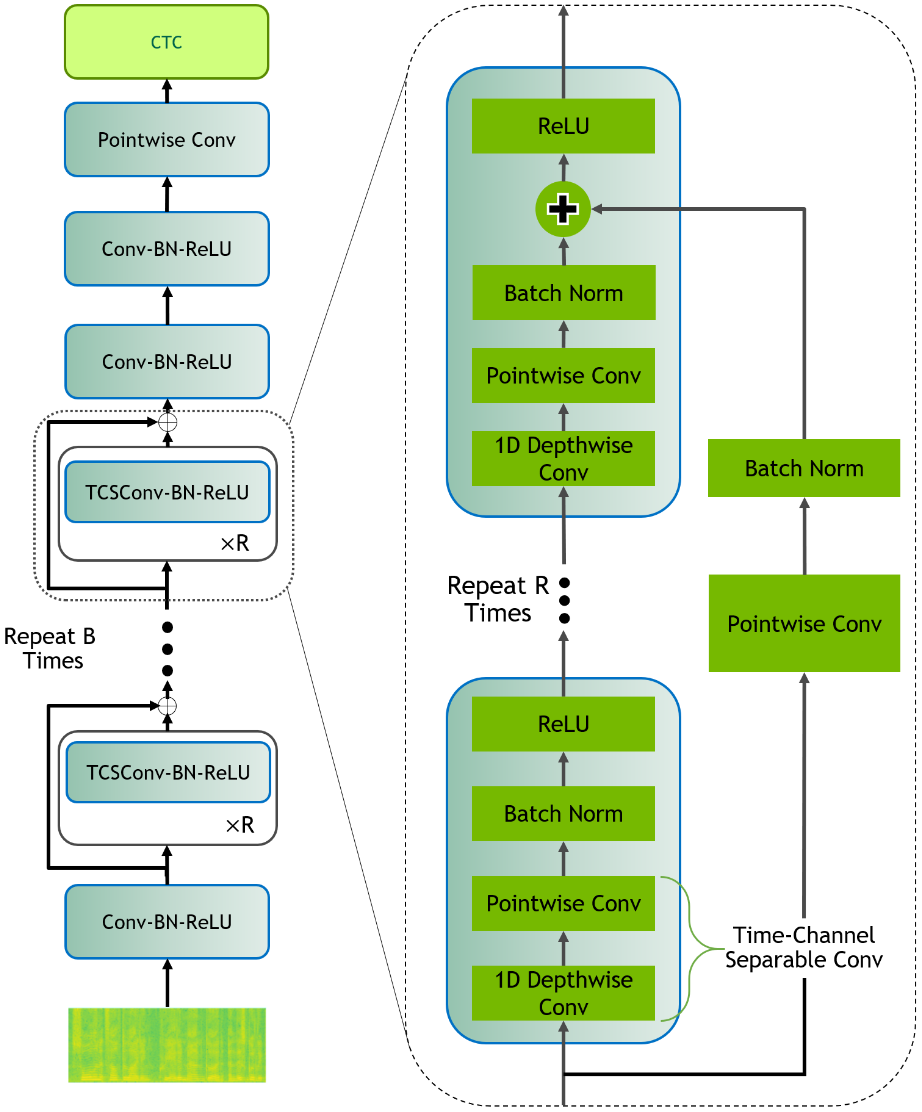

Implemented networks: DeepSpeech1, DeepSpeech2, QuartzNet, Jasper, ContextNet(simplified), Conformer(simplified), CitriNet

Notes on the networks:

- Not every network is fully tested, but each could be trained with one single audio file.

- Some networks might differ slightly from their paper implementations.

Supported networks with their trainable parameter count (using English alphabet):

| Network | DeepSpeech1 | DeepSpeech2 | QuartzNet | Jasper | ContextNetSimple | SimpleConformer | CitriNet |
|---|---|---|---|---|---|---|---|
| Config | | | 5x5 / 15x5 / +LSTM | | 0.8 | 16x240x4 | 256 / 344 / +LSTM |
| Params | 48.7M | 120M | 6.7M / 18.9M / 21.5M | 323M | 21.6M | 21.7M | 10.9M / 19.3M / 21.6M |

By default, the checkpoints are provided under the same licence as this repository, but many datasets have extra conditions (for example non-commercial use only) which also have to be applied. The QuartzNet models are double-licensed with Nvidia's NGC, because they use Nvidia's pretrained weights. Please check the conditions yourself for the models you want to use.

Mozilla's DeepSpeech:

You can find the old models later on this page, in the old experiments section.

The models below are no longer compatible with the DeepSpeech client!

German:

- Quartznet15x5, CV only (WER: 7.5%): Link

- Quartznet15x5, D37CV (WER: 6.6%): Link

- Scorer: TCV, D37CV, PocoLg

English:

- Quartznet5x5 (WER: 4.5%): Link

- Quartznet15x5 (WER: 3.7%): Link

- ContextNetSimple (WER: 4.9%): Link

- Scorer: Link (to DeepSpeech)

Spanish:

- Quartznet15x5, CV only (WER: 10.5%): Link

- Quartznet15x5, D8CV (WER: 10.0%): Link

- Scorer: KenSm, PocoLg

French:

- Quartznet15x5, CV only (WER: 12.1%): Link

- Quartznet15x5, D7CV (WER: 11.0%): Link

- Scorer: KenSm, PocoLg

Italian:

Please cite Scribosermo if you found it helpful for your research or business.

    @article{
      scribosermo,
      title={Scribosermo: Fast Speech-to-Text models for German and other Languages},
      author={Bermuth, Daniel and Poeppel, Alexander and Reif, Wolfgang},
      journal={arXiv preprint arXiv:2110.07982},
      year={2021}
    }

You can contribute to this project in multiple ways:

- Help to solve the open issues
- Implement new networks or augmentation options
- Train new models or improve the existing ones (requires a GPU and a lot of time, or multiple GPUs and some time)
- Experiment with the language models
- Add a new language:
  - Extend the data/ directory with the alphabet and langdicts files
  - Add speech datasets
  - Find text corpora for the language model

See readme in tests directory for testing instructions.

| Language | Network | Additional Infos | Performance Results |

|---|---|---|---|

| EN | Quartznet5x5 | Results from Nvidia-Nemo, using LS-dev-clean as test dataset | WER greedy: 0.0537 |

| EN | Quartznet5x5 | Converted model from Nvidia-Nemo, using LS-dev-clean as test dataset | Loss: 9.7666 CER greedy: 0.0268 CER with lm: 0.0202 WER greedy: 0.0809 WER with lm: 0.0506 |

| EN | Quartznet5x5 | Pretrained model from Nvidia-Nemo, one extra epoch on LibriSpeech to reduce the different spectrogram problem | Loss: 7.3253 CER greedy: 0.0202 CER with lm: 0.0163 WER greedy: 0.0654 WER with lm: 0.0446 |

| EN | Quartznet5x5 | above, using LS-dev-clean as test dataset (for better comparison with results from Nemo) | Loss: 6.9973 CER greedy: 0.0203 CER with lm: 0.0159 WER greedy: 0.0648 WER with lm: 0.0419 |

| EN | Quartznet15x5 | Results from Nvidia-Nemo, using LS-dev-clean as test dataset | WER greedy: 0.0379 |

| EN | Quartznet15x5 | Converted model from Nvidia-Nemo, using LS-dev-clean as test dataset | Loss: 5.8044 CER greedy: 0.0160 CER with lm: 0.0130 WER greedy: 0.0515 WER with lm: 0.0355 |

| EN | Quartznet15x5 | Pretrained model from Nvidia-Nemo, four extra epochs on LibriSpeech to reduce the different spectrogram problem | Loss: 5.3074 CER greedy: 0.0141 CER with lm: 0.0128 WER greedy: 0.0456 WER with lm: 0.0374 |

| EN | Quartznet15x5 | above, using LS-dev-clean as test dataset (for better comparison with results from Nemo) | Loss: 5.1035 CER greedy: 0.0132 CER with lm: 0.0108 WER greedy: 0.0435 WER with lm: 0.0308 |
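The WER and CER columns in these tables are word and character error rates. As a rough illustration of how such values are usually computed, the sketch below uses a plain Levenshtein distance per utterance. The function names are hypothetical and this is not necessarily the exact evaluation code of this repository, which may aggregate edits over the whole test set instead of averaging per sentence.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (insert/delete/substitute cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edits divided by the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: character-level edits divided by the reference length."""
    return edit_distance(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    print(wer("the cat sat on the mat", "the cat sit on mat"))
    print(cer("hallo welt", "hallo wald"))
```

Both functions assume a non-empty reference; empty references would need special handling.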

Next trainings were all done with above pretrained Quartznet15x5 network.

| Language | Datasets | Additional Infos | Performance Results |

|---|---|---|---|

| DE | Tuda | Learning rate 0.0001; Training time on 2x1080Ti was about 16h | Loss: 61.3615 CER greedy: 0.1481 CER with lm: 0.0914 WER greedy: 0.5502 WER with lm: 0.2381 |

| DE | Tuda | Learning rate 0.001 | Loss: 59.3143 CER greedy: 0.1329 CER with lm: 0.0917 WER greedy: 0.4956 WER with lm: 0.2448 |

| DE | CommonVoice | Training time on 2x1080Ti was about 70h; Reusing scorer from DeepSpeech-Polyglot trainings | Loss: 11.6188 CER greedy: 0.0528 CER with lm: 0.0319 WER greedy: 0.1853 WER with lm: 0.0774 |

| DE | CommonVoice | Above network; tested on Tuda dataset | Loss: 25.5442 CER greedy: 0.0473 CER with lm: 0.0340 WER greedy: 0.1865 WER with lm: 0.1199 |

| DE | MLS | Learning rate 0.0001; Test on CommonVoice | Loss: 38.5387 CER greedy: 0.1967 CER with lm: 0.1616 WER greedy: 0.5894 WER with lm: 0.2584 |

| DE | MLS + CommonVoice | Above network, continuing training with CommonVoice; Learning rate 0.001; Test on CommonVoice | Loss: 12.3243 CER greedy: 0.0574 CER with lm: 0.0314 WER greedy: 0.2122 WER with lm: 0.0788 |

| DE | D37 | Continued training from CV-checkpoint with 0.077 WER; Learning rate 0.001; Test on CommonVoice | Loss: 10.4031 CER greedy: 0.0491 CER with lm: 0.0369 WER greedy: 0.1710 WER with lm: 0.0824 |

| DE | D37 | Above network; Test on Tuda | Loss: 16.6407 CER greedy: 0.0355 CER with lm: 0.0309 WER greedy: 0.1530 WER with lm: 0.1113 |

| DE | D37 + CommonVoice | Fine-tuned above network on CommonVoice again; Learning rate 0.001; Test on CommonVoice | Loss: 9.9733 CER greedy: 0.0456 CER with lm: 0.0323 WER greedy: 0.1601 WER with lm: 0.0760 |

| DE | D37 + CommonVoice | Above network; Scorer built with all new training transcriptions; Beam size 1024; Test on CommonVoice | CER with lm: 0.0279 WER with lm: 0.0718 |

| DE | D37 + CommonVoice | Like above; Test on Tuda | Loss: 17.3551 CER greedy: 0.0346 CER with lm: 0.0262 WER greedy: 0.1432 WER with lm: 0.1070 |

| ES | CommonVoice | Frozen with 4 epochs, then full training | Eval-Loss: 29.0722 Test-Loss: 31.2095 CER greedy: 0.1568 CER with lm: 0.1461 WER greedy: 0.5289 WER with lm: 0.3446 |

| ES | CommonVoice | Additional Dropout layers after each block and end convolutions; Continuing above frozen checkpoint | Eval-Loss: 30.5518 Test-Loss: 32.7240 CER greedy: 0.1643 CER with lm: 0.1519 WER greedy: 0.5523 WER with lm: 0.3538 |

| FR | CommonVoice | Frozen with 4 epochs, then full training | Eval-Loss: 26.9454 Test-Loss: 30.6238 CER greedy: 0.1585 CER with lm: 0.1821 WER greedy: 0.4570 WER with lm: 0.4220 |

| ES | CommonVoice | Updated augmentations; Continuing above frozen checkpoint | Eval-Loss: 28.0187 |

| ES | CommonVoice | Like above, but lower augmentation strength | Eval-Loss: 26.5313 |

| ES | CommonVoice | Like above, but higher augmentation strength | Eval-Loss: 34.6475 |

| ES | CommonVoice | Only spectrogram cut/mask augmentations | Eval-Loss: 27.2635 |

| ES | CommonVoice | Random speed and pitch augmentation, only spectrogram cutout | Eval-Loss: 25.9359 |

| ES | CommonVoice | Improved transfer-learning with alphabet extension | Eval-Loss: 13.0415 Test-Loss: 14.8321 CER greedy: 0.0742 CER with lm: 0.0579 WER greedy: 0.2568 WER with lm: 0.1410 |

| ES | CommonVoice | Like above, with esrp-delta=0.1 and lr=0.002; Training for 29 epochs | Eval-Loss: 10.3032 Test-Loss: 12.1623 CER greedy: 0.0533 CER with lm: 0.0460 WER greedy: 0.1713 WER with lm: 0.1149 |

| ES | CommonVoice | Above model; Extended scorer with Europarl+News dataset | CER with lm: 0.0439 WER with lm: 0.1074 |

| ES | CommonVoice | Like above; Beam size 1024 instead of 256 | CER with lm: 0.0422 WER with lm: 0.1053 |

| ES | D8 | Continued training from above CV-checkpoint; Learning rate reduced to 0.0002; Test on CommonVoice | Eval-Loss: 9.3886 Test-Loss: 11.1205 CER greedy: 0.0529 CER with lm: 0.0456 WER greedy: 0.1690 WER with lm: 0.1075 |

| ES | D8 + CommonVoice | Fine-tuned above network on CommonVoice again; Learning rate 0.0002; Test on CommonVoice | Eval-Loss: 9.6201 Test-Loss: 11.3245 CER greedy: 0.0507 CER with lm: 0.0421 WER greedy: 0.1632 WER with lm: 0.1025 |

| ES | D8 + CommonVoice | Like above; Beam size 1024 instead of 256 | CER with lm: 0.0404 WER with lm: 0.1003 |

| FR | CommonVoice | Similar to Spanish CV training above; Training for 26 epochs | Eval-Loss: 10.4081 Test-Loss: 13.6226 CER greedy: 0.0642 CER with lm: 0.0544 WER greedy: 0.1907 WER with lm: 0.1248 |

| FR | CommonVoice | Like above; Beam size 1024 instead of 256 | CER with lm: 0.0511 WER with lm: 0.1209 |

| FR | D7 | Continued training from above CV-checkpoint | Eval-Loss: 9.8695 Test-Loss: 12.7798 CER greedy: 0.0604 CER with lm: 0.0528 WER greedy: 0.1790 WER with lm: 0.1208 |

| FR | D7 + CommonVoice | Fine-tuned above network on CommonVoice again | Eval-Loss: 9.8874 Test-Loss: 12.9053 CER greedy: 0.0613 CER with lm: 0.0536 WER greedy: 0.1811 WER with lm: 0.1208 |

| FR | D7 + CommonVoice | Like above; Beam size 1024 instead of 256 | CER with lm: 0.0501 WER with lm: 0.1167 |

| DE | CommonVoice | Updated augmentations/pipeline to above Spanish training; Reduced esrp-delta 1.1 -> 0.1 compared to last DE run | Eval-Loss: 10.3421 Test-Loss: 11.4755 CER greedy: 0.0512 CER with lm: 0.0330 WER greedy: 0.1749 WER with lm: 0.0790 |

| DE | D37 | Continued training from above CV-checkpoint; Learning rate reduced by factor 10; Test on CommonVoice | Eval-Loss: 9.6293 Test-Loss: 10.7855 CER greedy: 0.0503 CER with lm: 0.0347 WER greedy: 0.1705 WER with lm: 0.0793 |

| DE | D37 + CommonVoice | Fine-tuned above network on CommonVoice again; Test on CommonVoice | Eval-Loss: 9.3287 Test-Loss: 10.4325 CER greedy: 0.0468 CER with lm: 0.0309 WER greedy: 0.1599 WER with lm: 0.0741 |

Running some experiments with different language models:

| Language | Datasets | Additional Infos | Performance Results |

|---|---|---|---|

| DE | D37 + CommonVoice | Use PocoLM instead of KenLM (similar LM size); Checkpoint from D37+CV training with WER=0.0718; Test on CommonVoice | CER with lm: 0.0285 WER with lm: 0.0701 |

| DE | D37 + CommonVoice | Like above; Test on Tuda | CER with lm: 0.0265 WER with lm: 0.1037 |

| DE | D37 + CommonVoice | Use unpruned language model (1.5GB instead of 250MB); Rest similar to above; Test on CommonVoice | CER with lm: 0.0276 WER with lm: 0.0673 |

| DE | D37 + CommonVoice | Like above; Test on Tuda | CER with lm: 0.0261 WER with lm: 0.1026 |

| DE | D37 + CommonVoice | Use pruned language model with similar size to English model (850MB); Rest similar to above; Test on CommonVoice | CER with lm: 0.0277 WER with lm: 0.0672 |

| DE | D37 + CommonVoice | Like above; Test on Tuda | CER with lm: 0.0260 WER with lm: 0.1024 |

| DE | D37 + CommonVoice | Checkpoint from D37+CV training with WER=0.0741; with large (850MB) scorer; Test on CommonVoice | CER with lm: 0.0299 WER with lm: 0.0712 |

| DE | D37 + CommonVoice | Like above; Test on Tuda; Small and full scorers were behind above model with both testsets, too | CER with lm: 0.0280 WER with lm: 0.1066 |

| DE | CommonVoice | Test above checkpoint from CV training with WER=0.0774 with PocoLM large | Test-Loss: 11.6184 CER greedy: 0.0528 CER with lm: 0.0312 WER greedy: 0.1853 WER with lm: 0.0748 |

| ES | D8 + CommonVoice | Use PocoLM instead of KenLM (similar LM size); Checkpoint from D8+CV training with WER=0.1003; Test on CommonVoice | CER with lm: 0.0407 WER with lm: 0.1011 |

| ES | D8 + CommonVoice | Like above; Large scorer (790MB) | CER with lm: 0.0402 WER with lm: 0.1002 |

| ES | D8 + CommonVoice | Like above; Full scorer (1.2GB) | CER with lm: 0.0403 WER with lm: 0.1000 |
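To put the language model differences into perspective, it can help to look at relative rather than absolute WER changes. The small helper below is only an illustration (the function name is made up); the numbers are the German CommonVoice results from the table above.

```python
def relative_change(baseline: float, new: float) -> float:
    """Relative change of a metric compared to a baseline (negative means lower)."""
    return (new - baseline) / baseline


if __name__ == "__main__":
    kenlm_wer = 0.0718      # D37+CV checkpoint with the KenLM scorer
    pocolm_wer = 0.0701     # same checkpoint, PocoLM with similar size
    unpruned_wer = 0.0673   # same checkpoint, unpruned language model
    print(f"PocoLM:   {relative_change(kenlm_wer, pocolm_wer):+.1%}")
    print(f"Unpruned: {relative_change(kenlm_wer, unpruned_wer):+.1%}")
```

With these values the switch to PocoLM corresponds to a relative WER reduction of roughly 2%, and the unpruned model to roughly 6%.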

Experimenting with new architectures on LibriSpeech dataset:

| Network | Additional Infos | Performance Results |

|---|---|---|

| ContextNetSimple (0.8) | Run with multiple full restarts, ~3:30h/epoch; increased LR from 0.001 to 0.01 since iteration 5; set LR to 0.02 in It8a and 0.005 in It8b | Eval-Loss-1: 64.2793 Eval-Loss-2: 24.5743 Eval-Loss-3: 19.4896 Eval-Loss-4: 18.4973 Eval-Loss-5: 9.3007 Eval-Loss-6: 8.1340 Eval-Loss-7: 7.5170 Eval-Loss-8a: 7.6870 Eval-Loss-8b: 7.2683 |

| ContextNetSimple (0.8) | Test above checkpoint after iteration 8b | Test-Loss: 7.7407 CER greedy: 0.0237 CER with lm: 0.0179 WER greedy: 0.0767 WER with lm: 0.0492 |

| SimpleConformer (16x240x4) | Completed training after 28 epochs (~3:20h/epoch), without any augmentations | Eval-Loss: 70.6178 |

| Citrinet (344) | Completed training after 6 epochs (~4h/epoch), didn't learn anything | Eval-Loss: 289.7605 |

| QuartzNet (15x5) | Continued old checkpoint | Eval-Loss: 5.0922 Test-Loss: 5.3353 CER greedy: 0.0139 CER with lm: 0.0124 WER greedy: 0.0457 WER with lm: 0.0368 |

| QuartzNet (15x5+LSTM) | Frozen+Full training onto old checkpoint | Eval-Loss: 4.9105 Test-Loss: 5.3112 CER greedy: 0.0143 CER with lm: 0.0125 WER greedy: 0.0477 WER with lm: 0.0370 |
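The "greedy" results in the tables are obtained without a language model. Assuming the networks emit per-frame character probabilities trained with a CTC loss, greedy decoding can be sketched as follows: take the most likely symbol per frame, merge repeated symbols, then drop the blank symbol. The function name and the convention that the blank is the last index are assumptions made for this example.

```python
def ctc_greedy_decode(frame_probs, alphabet, blank_index=None):
    """Collapse the per-frame argmax path of a CTC output into a string."""
    if blank_index is None:
        # Assumption for this sketch: blank is the index right after the alphabet.
        blank_index = len(alphabet)

    # Most likely symbol index for every time frame.
    best_path = [max(range(len(frame)), key=frame.__getitem__) for frame in frame_probs]

    decoded = []
    previous = None
    for index in best_path:
        # Keep a symbol only if it differs from the previous frame and is not blank.
        if index != previous and index != blank_index:
            decoded.append(alphabet[index])
        previous = index
    return "".join(decoded)


if __name__ == "__main__":
    probs = [
        [0.90, 0.05, 0.05],  # a
        [0.80, 0.10, 0.10],  # a (repeat, merged)
        [0.10, 0.10, 0.80],  # blank
        [0.70, 0.20, 0.10],  # a (new symbol after blank)
        [0.10, 0.80, 0.10],  # b
        [0.20, 0.70, 0.10],  # b (repeat, merged)
    ]
    print(ctc_greedy_decode(probs, ["a", "b"]))
```

The "with lm" results replace this step with a beam search that additionally scores candidates with the language model.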

Tests with reduced dataset size and with multiple restarts:

| Language | Datasets | Additional Infos | Performance Results |

|---|---|---|---|

| DE | CV short (314h) | Test with PocoLM large; about 18h on 2xNvidia-V100; Iteration 1 | Eval-Loss: 12.5308 Test-Loss: 13.9343 CER greedy: 0.0654 CER with lm: 0.0347 WER greedy: 0.2391 WER with lm: 0.0834 |

| DE | CV short (314h) | Iteration 2; about 22h | Eval-Loss: 11.3072 Test-Loss: 12.6970 CER greedy: 0.0556 CER with lm: 0.0315 WER greedy: 0.1986 WER with lm: 0.0776 |

| DE | CV short (314h) | Iteration 3; about 13h | Eval-Loss: 11.2485 Test-Loss: 12.5631 CER greedy: 0.0532 CER with lm: 0.0309 WER greedy: 0.1885 WER with lm: 0.0766 |

| DE | CV short (314h) | Test Iteration 3 on Tuda | Test-Loss: 27.5804 CER greedy: 0.0478 CER with lm: 0.0326 WER greedy: 0.1913 WER with lm: 0.1166 |

| DE | D37 + CommonVoice | Additional training iteration on CV using checkpoint from D37+CV training with WER=0.0718 | Eval-Loss: 8.7156 Test-Loss: 9.8192 CER greedy: 0.0443 CER with lm: 0.0268 WER greedy: 0.1544 WER with lm: 0.0664 |

| DE | D37 + CommonVoice | Above, test on Tuda | Test-Loss: 19.2681 CER greedy: 0.0358 CER with lm: 0.0270 WER greedy: 0.1454 WER with lm: 0.1023 |

| ES | CV short (203h) | A fifth iteration with lr=0.01 did not converge; about 19h on 2xNvidia-V100 for first iteration | Eval-Loss-1: 10.8212 Eval-Loss-2: 10.7791 Eval-Loss-3: 10.7649 Eval-Loss-4: 10.7918 |

| ES | CV short (203h) | Above, test of first iteration | Test-Loss: 12.5954 CER greedy: 0.0591 CER with lm: 0.0443 WER greedy: 0.1959 WER with lm: 0.1105 |

| ES | CV short (203h) | Above, test of third iteration | Test-Loss: 12.6006 CER greedy: 0.0572 CER with lm: 0.0436 WER greedy: 0.1884 WER with lm: 0.1093 |

| ES | D8 + CommonVoice | Additional training iterations on CV using checkpoint from D8+CV training with WER=0.1003; test of first iteration with PocoLM large; a second iteration with lr=0.01 did not converge | Eval-Loss-1: 9.5202 Eval-Loss-2: 9.6056 Test-Loss: 11.2326 CER greedy: 0.0501 CER with lm: 0.0398 WER greedy: 0.1606 WER with lm: 0.0995 |

| ES | CV short (203h) | Two step frozen training, about 13h+18h | Eval-Loss-1: 61.5673 Eval-Loss-2: 10.9956 Test-Loss: 12.7028 CER greedy: 0.0604 CER with lm: 0.0451 WER greedy: 0.2015 WER with lm: 0.1111 |

| ES | CV short (203h) | Single step with last layer reinitialization, about 18h | Eval-Loss: 11.6488 Test-Loss: 13.4355 CER greedy: 0.0643 CER with lm: 0.0478 WER greedy: 0.2163 WER with lm: 0.1166 |

| FR | CV short (364h) | The fourth iteration with lr=0.01; about 25h on 2xNvidia-V100 for first iteration; test of third iteration | Eval-Loss-1: 12.6529 Eval-Loss-2: 11.7833 Eval-Loss-3: 11.7141 Eval-Loss-4: 12.6193 |

| FR | CV short (364h) | Above, test of third iteration | Test-Loss: 14.8373 CER greedy: 0.0711 CER with lm: 0.0530 WER greedy: 0.2142 WER with lm: 0.1248 |

| FR | D7 + CommonVoice | Additional training iterations on CV using checkpoint from D7+CV training with WER=0.1167; test of first iteration with PocoLM large; a second iteration with lr=0.01 did not converge | Eval-Loss-1: 9.5452 Eval-Loss-2: 9.5860 Test-Loss: 12.5477 CER greedy: 0.0590 CER with lm: 0.0466 WER greedy: 0.1747 WER with lm: 0.1104 |

| IT | CommonVoice | Transfer from English | Eval-Loss: 12.7120 Test-Loss: 14.4017 CER greedy: 0.0710 CER with lm: 0.0465 WER greedy: 0.2766 WER with lm: 0.1378 |

| IT | CommonVoice | Transfer from Spanish with alphabet shrinking | Eval-Loss: 10.7151 Test-Loss: 12.3298 CER greedy: 0.0585 CER with lm: 0.0408 WER greedy: 0.2208 WER with lm: 0.1216 |

| IT | D5 + CommonVoice | Continuing above from Spanish | Eval-Loss: 9.3055 Test-Loss: 10.8521 CER greedy: 0.0543 CER with lm: 0.0403 WER greedy: 0.2000 WER with lm: 0.1170 |

| IT | D5 + CommonVoice | Fine-tuned above checkpoint on CommonVoice again (lr=0.0001) | Eval-Loss: 9.3318 Test-Loss: 10.8453 CER greedy: 0.0533 CER with lm: 0.0395 WER greedy: 0.1967 WER with lm: 0.1153 |
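Several runs above mention transfer learning with alphabet extension or alphabet shrinking. One plausible way to do this, shown here only as a hedged sketch and not as the implementation used in this repository, is to rebuild the output layer so that rows of characters shared by both alphabets are copied, while rows for new characters get a small random initialization. The function name, the list-of-lists weight layout and the initialization range are all assumptions; a blank symbol can simply be treated as one more entry in both alphabets.

```python
import random


def remap_output_rows(weights, source_alphabet, target_alphabet, init_scale=0.01, seed=0):
    """Build output-layer rows for a new alphabet from a pretrained layer.

    weights[i] is assumed to be the weight row of source_alphabet[i].
    """
    rng = random.Random(seed)
    row_of = {char: weights[i] for i, char in enumerate(source_alphabet)}
    width = len(weights[0])

    remapped = []
    for char in target_alphabet:
        if char in row_of:
            # Shared character: reuse the pretrained row.
            remapped.append(list(row_of[char]))
        else:
            # New character: start from small random values.
            remapped.append([rng.uniform(-init_scale, init_scale) for _ in range(width)])
    return remapped


if __name__ == "__main__":
    pretrained = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    new_rows = remap_output_rows(pretrained, ["a", "b", "c"], ["a", "c", "ñ"])
    print(new_rows)
```

In the usage example, "b" is dropped (shrinking) and "ñ" is added (extension), while the rows for "a" and "c" are kept.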

The following experiments were run with an old version of this repository, at that time named DeepSpeech-Polyglot, using the DeepSpeech1 network from Mozilla-DeepSpeech.

While they are outdated, some of them might still provide helpful information for training the new networks.

Old checkpoints and scorers:

- German (D17S5 training and some older checkpoints, WER: 0.128, Train: ~1582h, Test: ~41h): Link

- Spanish (CCLMTV training, WER: 0.165, Train: ~660h, Test: ~25h): Link

- French (CCLMTV training, WER: 0.195, Train: ~787h, Test: ~25h): Link

- Italian (CLMV training, WER: 0.248, Train: ~257h, Test: ~21h): Link

- Polish (CLM training, WER: 0.034, Train: ~157h, Test: ~6h): Link

First experiments:

(Default dropout is 0.4, learning rate 0.0005):

| Dataset | Additional Infos | Performance Results |
|---|---|---|
| Voxforge | | WER: 0.676611 CER: 0.403916 loss: 82.185226 |
| Voxforge | with augmentation | WER: 0.624573 CER: 0.348618 loss: 74.403786 |
| Voxforge | without "äöü" | WER: 0.646702 CER: 0.364471 loss: 82.567413 |
| Voxforge | cleaned data, without "äöü" | WER: 0.634828 CER: 0.353037 loss: 81.905258 |
| Voxforge | above checkpoint, tested on not cleaned data | WER: 0.634556 CER: 0.352879 loss: 81.849220 |
| Voxforge | checkpoint from english deepspeech, without "äöü" | WER: 0.394064 CER: 0.190184 loss: 49.066357 |
| Voxforge | checkpoint from english deepspeech, with augmentation, without "äöü", dropout 0.25, learning rate 0.0001 | WER: 0.338685 CER: 0.150972 loss: 42.031754 |
| Voxforge | reduce learning rate on plateau, with noise and standard augmentation, checkpoint from english deepspeech, cleaned data, without "äöü", dropout 0.25, learning rate 0.0001, batch size 48 | WER: 0.320507 CER: 0.131948 loss: 39.923031 |
| Voxforge | above with learning rate 0.00001 | WER: 0.350903 CER: 0.147837 loss: 43.451263 |
| Voxforge | above with learning rate 0.001 | WER: 0.518670 CER: 0.252510 loss: 62.927200 |
| Tuda + Voxforge | without "äöü", checkpoint from english deepspeech, cleaned train and dev data | WER: 0.740130 CER: 0.462036 loss: 156.115921 |
| Tuda + Voxforge | first Tuda then Voxforge, without "äöü", cleaned train and dev data, dropout 0.25, learning rate 0.0001 | WER: 0.653841 CER: 0.384577 loss: 159.509476 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | checkpoint from english deepspeech, with augmentation, without "äöü", cleaned data, dropout 0.25, learning rate 0.0001 | WER: 0.306061 CER: 0.151266 loss: 33.218510 |

Some results with some older code version:

(Default values: batch size 12, dropout 0.25, learning rate 0.0001, without "äöü", cleaned data, checkpoint from english deepspeech, early stopping, reduce learning rate on plateau, evaluation with scorer and top-500k words)

| Dataset | Additional Infos | Losses | Training epochs of best model | Performance Results |
|---|---|---|---|---|
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | test only with Tuda + CommonVoice others completely for training, language model with training transcriptions, with augmentation | Test: 29.363405 Validation: 23.509546 | 55 | WER: 0.190189 CER: 0.091737 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | above checkpoint tested with 3-gram language model | Test: 29.363405 | | WER: 0.199709 CER: 0.095318 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | above checkpoint tested on Tuda only | Test: 87.074394 | | WER: 0.378379 CER: 0.167380 |
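The default values above mention a scorer restricted to the top-500k words. A vocabulary of this kind can be selected by counting word frequencies in the text corpus and keeping the most frequent entries. The sketch below is a generic illustration with hypothetical names; it is not the scorer generation script of DeepSpeech or of this repository, and the optional `min_count` threshold is an added variant.

```python
from collections import Counter


def select_vocabulary(sentences, top_n=None, min_count=1):
    """Return words sorted by frequency, limited to top_n and/or a minimum count."""
    counts = Counter(word for sentence in sentences for word in sentence.lower().split())
    # most_common() sorts by descending count.
    ranked = [word for word, count in counts.most_common() if count >= min_count]
    return ranked[:top_n] if top_n is not None else ranked


if __name__ == "__main__":
    corpus = ["das ist ein test", "das ist gut", "das haus"]
    print(select_vocabulary(corpus, top_n=2))
    print(select_vocabulary(corpus, min_count=2))
```

For a real corpus, `top_n=500000` would correspond to the top-500k setting.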

Some results with some older code version:

(Default values: batch size 36, dropout 0.25, learning rate 0.0001, without "äöü", cleaned data, checkpoint from english deepspeech, early stopping, reduce learning rate on plateau, evaluation with scorer and top-500k words, data augmentation)

| Dataset | Additional Infos | Losses | Training epochs of best model | Performance Results |
|---|---|---|---|---|
| Voxforge | training from scratch | Test: 79.124008 Validation: 81.982976 | 29 | WER: 0.603879 CER: 0.298139 |
| Voxforge | | Test: 44.312195 Validation: 47.915317 | 21 | WER: 0.343973 CER: 0.140119 |
| Voxforge | without reduce learning rate on plateau | Test: 46.160049 Validation: 48.926518 | 13 | WER: 0.367125 CER: 0.163931 |
| Voxforge | dropped last layer | Test: 49.844028 Validation: 52.722362 | 21 | WER: 0.389327 CER: 0.170563 |
| Voxforge | 5 cycled training | Test: 42.973358 | | WER: 0.353841 CER: 0.158554 |
| Tuda | training from scratch, correct train/dev/test splitting | Test: 149.653427 Validation: 137.645307 | 9 | WER: 0.606629 CER: 0.296630 |
| Tuda | correct train/dev/test splitting | Test: 103.179092 Validation: 132.243965 | 3 | WER: 0.436074 CER: 0.208135 |
| Tuda | dropped last layer, correct train/dev/test splitting | Test: 107.047821 Validation: 101.219325 | 6 | WER: 0.431361 CER: 0.195361 |
| Tuda | dropped last two layers, correct train/dev/test splitting | Test: 110.523621 Validation: 103.844562 | 5 | WER: 0.442421 CER: 0.204504 |
| Tuda | checkpoint from Voxforge with WER 0.344, correct train/dev/test splitting | Test: 100.846367 Validation: 95.410456 | 3 | WER: 0.416950 CER: 0.198177 |
| Tuda | 10 cycled training, checkpoint from Voxforge with WER 0.344, correct train/dev/test splitting | Test: 98.007607 | | WER: 0.410520 CER: 0.194091 |
| Tuda | random dataset splitting, checkpoint from Voxforge with WER 0.344. Important Note: These results are not meaningful, because same transcriptions can occur in train and test set, only recorded with different microphones | Test: 23.322618 Validation: 23.094230 | 27 | WER: 0.090285 CER: 0.036212 |
| CommonVoice | checkpoint from Tuda with WER 0.417 | Test: 24.688297 Validation: 17.460029 | 35 | WER: 0.217124 CER: 0.085427 |
| CommonVoice | above tested with reduced testset where transcripts occurring in trainset were removed | Test: 33.376812 | | WER: 0.211668 CER: 0.079157 |
| CommonVoice + GoogleWavenet | above tested with GoogleWavenet | Test: 17.653290 | | WER: 0.035807 CER: 0.007342 |
| CommonVoice | checkpoint from Voxforge with WER 0.344 | Test: 23.460932 Validation: 16.641201 | 35 | WER: 0.215584 CER: 0.084932 |
| CommonVoice | dropped last layer | Test: 24.480028 Validation: 17.505738 | 36 | WER: 0.220435 CER: 0.086921 |
| Tuda + GoogleWavenet | added GoogleWavenet to train data, dev/test from Tuda, checkpoint from Voxforge with WER 0.344 | Test: 95.555939 Validation: 90.392490 | 3 | WER: 0.390291 CER: 0.178549 |
| Tuda + GoogleWavenet | GoogleWavenet as train data, dev/test from Tuda | Test: 346.486420 Validation: 326.615474 | 0 | WER: 0.865683 CER: 0.517528 |
| Tuda + GoogleWavenet | GoogleWavenet as train/dev data, test from Tuda | Test: 477.049591 Validation: 3.320163 | 23 | WER: 0.923973 CER: 0.601015 |
| Tuda + GoogleWavenet | above checkpoint tested with GoogleWavenet | Test: 3.406022 | | WER: 0.012919 CER: 0.001724 |
| Tuda + GoogleWavenet | checkpoint from english deepspeech tested with Tuda | Test: 402.102661 | | WER: 0.985554 CER: 0.752787 |
| Voxforge + GoogleWavenet | added all of GoogleWavenet to train data, dev/test from Voxforge | Test: 45.643063 Validation: 49.620488 | 28 | WER: 0.349552 CER: 0.143108 |
| CommonVoice + GoogleWavenet | added all of GoogleWavenet to train data, dev/test from CommonVoice | Test: 25.029057 Validation: 17.511973 | 35 | WER: 0.214689 CER: 0.084206 |
| CommonVoice + GoogleWavenet | above tested with reduced testset | Test: 34.191067 | | WER: 0.213164 CER: 0.079121 |
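The runs above use early stopping together with "reduce learning rate on plateau". The general idea can be sketched as a small state machine over the validation loss: when the loss has not improved by at least a minimum delta for a number of epochs, the learning rate is reduced, and after a longer period without improvement the training stops. The class name, parameter names and default values below are illustrative assumptions and do not mirror the actual training flags.

```python
class PlateauScheduler:
    """Track validation loss, reduce the learning rate on plateaus, signal early stop."""

    def __init__(self, learning_rate, factor=0.1, lr_patience=3, stop_patience=7, min_delta=0.1):
        self.learning_rate = learning_rate
        self.factor = factor                # multiplier applied on each plateau
        self.lr_patience = lr_patience      # epochs without improvement before reducing
        self.stop_patience = stop_patience  # epochs without improvement before stopping
        self.min_delta = min_delta          # required improvement of the loss
        self.best_loss = float("inf")
        self.epochs_without_improvement = 0

    def update(self, eval_loss) -> bool:
        """Record one epoch; return True when training should stop."""
        if eval_loss < self.best_loss - self.min_delta:
            self.best_loss = eval_loss
            self.epochs_without_improvement = 0
            return False

        self.epochs_without_improvement += 1
        if self.epochs_without_improvement % self.lr_patience == 0:
            self.learning_rate *= self.factor
        return self.epochs_without_improvement >= self.stop_patience


if __name__ == "__main__":
    scheduler = PlateauScheduler(0.001, lr_patience=2, stop_patience=3)
    for epoch, loss in enumerate([50.0, 45.0, 44.95, 44.99, 44.93]):
        stop = scheduler.update(loss)
        print(epoch, loss, scheduler.learning_rate, stop)
```

A larger `min_delta` makes the scheduler treat small improvements as a plateau, which shortens training at the risk of stopping too early.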

Updated to DeepSpeech v0.7.3 and new english checkpoint:

(Default values: See flags.txt in releases, scorer with kaldi-tuda sentences only)

(Testing with noise and speech overlay is done with older noiseaugmaster branch, which implemented this functionality)

| Dataset | Additional Infos | Losses | Training epochs of best model | Performance Results |
|---|---|---|---|---|
| Voxforge | | Test: 32.844025 Validation: 36.912005 | 14 | WER: 0.240091 CER: 0.087971 |
| Voxforge | without freq_and_time_masking augmentation | Test: 33.698494 Validation: 38.071722 | 10 | WER: 0.244600 CER: 0.094577 |
| Voxforge | using new audio augmentation options | Test: 29.280865 Validation: 33.294815 | 21 | WER: 0.220538 CER: 0.079463 |
| Voxforge | updated augmentations again | Test: 28.846869 Validation: 32.680268 | 16 | WER: 0.225360 CER: 0.083504 |
| Voxforge | test above with older noiseaugmaster branch | Test: 28.831675 | | WER: 0.238961 CER: 0.081555 |
| Voxforge | test with speech overlay | Test: 89.661995 | | WER: 0.570903 CER: 0.301745 |
| Voxforge | test with noise overlay | Test: 53.461609 | | WER: 0.438126 CER: 0.213890 |
| Voxforge | test with speech and noise overlay | Test: 79.736122 | | WER: 0.581259 CER: 0.310365 |
| Voxforge | second test with speech and noise to check random influence | Test: 81.241333 | | WER: 0.595410 CER: 0.319077 |
| Voxforge | add speech overlay augmentation | Test: 28.843914 Validation: 32.341234 | 27 | WER: 0.222024 CER: 0.083036 |
| Voxforge | change snr=50:20 | Test: 28.502413 Validation: 32.236247 | 28 | WER: 0.226005 CER: 0.085475 |
| Voxforge | test above with older noiseaugmaster branch | Test: 28.488537 | | WER: 0.239530 CER: 0.083855 |
| Voxforge | test with speech overlay | Test: 47.783081 | | WER: 0.383612 CER: 0.175735 |
| Voxforge | test with noise overlay | Test: 51.682060 | | WER: 0.428566 CER: 0.209789 |
| Voxforge | test with speech and noise overlay | Test: 60.275940 | | WER: 0.487709 CER: 0.255167 |
| Voxforge | add noise overlay augmentation | Test: 27.940659 Validation: 31.988175 | 28 | WER: 0.219143 CER: 0.076050 |
| Voxforge | change snr=50:20 | Test: 26.588453 Validation: 31.151855 | 34 | WER: 0.206141 CER: 0.072018 |
| Voxforge | change to snr=18:9~6 | Test: 26.311581 Validation: 30.531299 | 30 | WER: 0.211865 CER: 0.074281 |
| Voxforge | test above with older noiseaugmaster branch | Test: 26.300938 | | WER: 0.227466 CER: 0.073827 |
| Voxforge | test with speech overlay | Test: 76.401451 | | WER: 0.499962 CER: 0.254203 |
| Voxforge | test with noise overlay | Test: 44.011471 | | WER: 0.376783 CER: 0.165329 |
| Voxforge | test with speech and noise overlay | Test: 65.408264 | | WER: 0.496168 CER: 0.246516 |
| Voxforge | speech and noise overlay | Test: 27.101889 Validation: 31.407527 | 44 | WER: 0.220243 CER: 0.082179 |
| Voxforge | test above with older noiseaugmaster branch | Test: 27.087360 | | WER: 0.232094 CER: 0.080319 |
| Voxforge | test with speech overlay | Test: 46.012951 | | WER: 0.362291 CER: 0.164134 |
| Voxforge | test with noise overlay | Test: 44.035809 | | WER: 0.377276 CER: 0.171528 |
| Voxforge | test with speech and noise overlay | Test: 53.832214 | | WER: 0.441768 CER: 0.218798 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | test with Voxforge + Tuda + CommonVoice others completely for training, with noise and speech overlay | Test: 22.055849 Validation: 17.613633 | 46 | WER: 0.208809 CER: 0.087215 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | above tested on Voxforge devdata | Test: 16.395443 | | WER: 0.163827 CER: 0.056596 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | optimized scorer alpha and beta on Voxforge devdata | Test: 16.395443 | | WER: 0.162842 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | test with Voxforge + Tuda + CommonVoice, optimized scorer alpha and beta | Test: 22.055849 | | WER: 0.206960 CER: 0.086306 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | scorer (kaldi-tuda) with train transcriptions, optimized scorer alpha and beta | Test: 22.055849 | | WER: 0.134221 CER: 0.064267 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | scorer only out of train transcriptions, optimized scorer alpha and beta | Test: 22.055849 | | WER: 0.142880 CER: 0.064958 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | scorer (kaldi-tuda + europarl + news) with train transcriptions, optimized scorer alpha and beta | Test: 22.055849 | | WER: 0.135759 CER: 0.064773 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | above scorer with 1m instead of 500k top words, optimized scorer alpha and beta | Test: 22.055849 | | WER: 0.136650 CER: 0.066470 |
| Tuda + Voxforge + SWC + Mailabs + CommonVoice | test with Tuda only | Test: 54.977085 | | WER: 0.250665 CER: 0.103428 |
| Voxforge FR | speech and noise overlay | Test: 5.341695 Validation: 12.736551 | 49 | WER: 0.175954 CER: 0.045416 |
| CommonVoice + Css10 + Mailabs + Tatoeba + Voxforge FR | test with Voxforge + CommonVoice others completely for training, with speech and noise overlay | Test: 20.404339 Validation: 21.920289 | 62 | WER: 0.302113 CER: 0.121300 |
| CommonVoice + Css10 + Mailabs + Tatoeba + Voxforge ES | test with Voxforge + CommonVoice others completely for training, with speech and noise overlay | Test: 14.521997 Validation: 22.408368 | 51 | WER: 0.154061 CER: 0.055357 |
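Several rows above use optimized scorer alpha and beta values. In DeepSpeech-style decoding, alpha weights the language model probability and beta acts as a word insertion bonus. The sketch below shows how these two values influence the choice between candidate transcriptions; the function names, the candidate dictionaries and the numbers are made up for illustration, and the real decoder applies this scoring inside a beam search rather than on finished candidates.

```python
def combined_score(acoustic_logp, lm_logp, word_count, alpha, beta):
    """Acoustic log-probability plus weighted LM log-probability plus word bonus."""
    return acoustic_logp + alpha * lm_logp + beta * word_count


def best_candidate(candidates, alpha, beta):
    """Pick the candidate text with the highest combined score."""
    def score(candidate):
        words = len(candidate["text"].split())
        return combined_score(candidate["acoustic_logp"], candidate["lm_logp"], words, alpha, beta)

    return max(candidates, key=score)["text"]


if __name__ == "__main__":
    # Hypothetical candidates: the second one fits the audio slightly better,
    # but is far less likely according to the language model.
    candidates = [
        {"text": "ich habe hunger", "acoustic_logp": -4.2, "lm_logp": -6.0},
        {"text": "ich habe hunge er", "acoustic_logp": -4.0, "lm_logp": -12.0},
    ]
    print(best_candidate(candidates, alpha=0.0, beta=0.0))
    print(best_candidate(candidates, alpha=0.9, beta=0.0))
```

Optimizing alpha and beta then means searching for the pair that gives the lowest WER on a development set, which is why the optimized rows report slightly different results for the same test loss.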

Using new CommonVoice v5 releases:

(Default values: See flags.txt in released checkpoints; using correct instead of random splits of CommonVoice; Old german scorer alpha+beta for all)

| Language | Dataset | Additional Infos | Losses | Training epochs of best model | Performance Results |
|---|---|---|---|---|---|
| DE | CommonVoice + CssTen + LinguaLibre + Mailabs + SWC + Tatoeba + Tuda + Voxforge + ZamiaSpeech | test with CommonVoice + Tuda + Voxforge, others completely for training; with speech and noise overlay; top-488538 scorer (words occurring at least five times) | Test: 29.286192 Validation: 26.864552 | 30 | WER: 0.182088 CER: 0.081321 |
| DE | CommonVoice + CssTen + LinguaLibre + Mailabs + SWC + Tatoeba + Tuda + Voxforge + ZamiaSpeech | like above, but using each file 10x with different augmentations | Test: 25.694464 Validation: 23.128045 | 16 | WER: 0.166629 CER: 0.071999 |
| DE | CommonVoice + CssTen + LinguaLibre + Mailabs + SWC + Tatoeba + Tuda + Voxforge + ZamiaSpeech | above checkpoint, tested on Tuda only | Test: 57.932476 | | WER: 0.260319 CER: 0.109301 |
| DE | CommonVoice + CssTen + LinguaLibre + Mailabs + SWC + Tatoeba + Tuda + Voxforge + ZamiaSpeech | optimized scorer alpha+beta | Test: 25.694464 | | WER: 0.166330 CER: 0.070268 |
| ES | CommonVoice + CssTen + LinguaLibre + Mailabs + Tatoeba + Voxforge | test with CommonVoice, others completely for training; with speech and noise overlay; top-303450 scorer (words occurring at least twice) | Test: 25.443010 Validation: 22.686161 | 42 | WER: 0.193316 CER: 0.093000 |
| ES | CommonVoice + CssTen + LinguaLibre + Mailabs + Tatoeba + Voxforge | optimized scorer alpha+beta | Test: 25.443010 | | WER: 0.187535 CER: 0.083490 |
| FR | CommonVoice + CssTen + LinguaLibre + Mailabs + Tatoeba + Voxforge | test with CommonVoice, others completely for training; with speech and noise overlay; top-316458 scorer (words occurring at least twice) | Test: 29.761099 Validation: 24.691544 | 52 | WER: 0.231981 CER: 0.116503 |
| FR | CommonVoice + CssTen + LinguaLibre + Mailabs + Tatoeba + Voxforge | optimized scorer alpha+beta | Test: 29.761099 | | WER: 0.228851 CER: 0.109247 |
| IT | CommonVoice + LinguaLibre + Mailabs + Voxforge | test with CommonVoice, others completely for training; with speech and noise overlay; top-51216 scorer out of train transcriptions (words occurring at least twice) | Test: 25.536196 Validation: 23.048596 | 46 | WER: 0.249197 CER: 0.093717 |
| IT | CommonVoice + LinguaLibre + Mailabs + Voxforge | optimized scorer alpha+beta | Test: 25.536196 | | WER: 0.247785 CER: 0.096247 |

| PL | CommonVoice + LinguaLibre + Mailabs | test with CommonVoice, others completely for training; with speech and noise overlay; top-39175 scorer out of train transcriptions (words occurring at least twice) | Test: 14.902746 Validation: 15.508280 |

53 | WER: 0.040128 CER: 0.022947 |

| PL | CommonVoice + LinguaLibre + Mailabs | optimized scorer alpha+beta | Test: 14.902746 | WER: 0.034115 CER: 0.020230 |
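
Several rows above describe the scorer vocabulary as "top-N" words that occur at least a given number of times. The following is a minimal sketch of that kind of filtering, not the script used in the langmodel directory: `build_vocab`, its parameters, and the lowercasing and whitespace tokenization are assumptions made for illustration.

```python
from collections import Counter


def build_vocab(sentences, min_count=2, top_k=None):
    """Return words sorted by frequency, keeping only those seen at least min_count times.

    Lowercasing and whitespace splitting are assumptions of this sketch.
    """
    counts = Counter(word for s in sentences for word in s.lower().split())
    # most_common() yields (word, count) pairs in descending count order
    frequent = [word for word, count in counts.most_common() if count >= min_count]
    if top_k is not None:
        frequent = frequent[:top_k]
    return frequent


sentences = ["a b a", "b c a"]
print(build_vocab(sentences, min_count=2))           # ['a', 'b']
print(build_vocab(sentences, min_count=2, top_k=1))  # ['a']
```

With a minimum count of two, the vocabulary size follows from the corpus rather than being chosen up front, which would explain uneven sizes such as 303450 or 39175.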

| Dataset | Additional Infos | Losses | Training epochs of best model | Total training duration | WER |
|---|---|---|---|---|---|
| Voxforge | updated rlrop; frozen transfer-learning; no augmentation; es_min_delta=0.9 | Test: 37.707958 Validation: 41.832220 | 12 + 3 | 42 min | |
| Voxforge | like above; without frozen transfer-learning; | Test: 36.630890 Validation: 41.208125 | 7 | 28 min | |
| Voxforge | dropped last layer | Test: 42.516270 Validation: 47.105518 | 8 | 28 min | |
| Voxforge | dropped last layer; with frozen transfer-learning in two steps | Test: 36.600590 Validation: 40.640134 | 14 + 8 | 42 min | |
| Voxforge | updated rlrop; with augmentation; es_min_delta=0.9 | Test: 35.540062 Validation: 39.974685 | 6 | 46 min | |
| Voxforge | updated rlrop; with old augmentations; es_min_delta=0.1 | Test: 30.655203 Validation: 33.655750 | 9 | 48 min | |
| TerraX + Voxforge + YKollektiv | Voxforge only for dev+test but not in train; rest like above | Test: 32.936977 Validation: 36.828410 | 19 | 4:53 h | |
| Voxforge | layer normalization; updated rlrop; with old augmentations; es_min_delta=0.1 | Test: 57.330410 Validation: 61.025009 | 45 | 2:37 h | |
| Voxforge | dropout=0.3; updated rlrop; with old augmentations; es_min_delta=0.1 | Test: 30.353968 Validation: 33.144178 | 20 | 1:15 h | |
| Voxforge | es_min_delta=0.05; updated rlrop; with old augmentations | Test: 29.884317 Validation: 32.944382 | 12 | 54 min | |
| Voxforge | fixed updated rlrop; es_min_delta=0.05; with old augmentations | Test: 28.903509 Validation: 32.322064 | 34 | 1:40 h | |
| Voxforge | from scratch; no augmentations; fixed updated rlrop; es_min_delta=0.05 | Test: 74.347054 Validation: 79.838900 | 28 | 1:26 h | 0.38 |
| Voxforge | wav2letter; stopped by hand after one/two overnight runs; from scratch; no augmentations; single gpu; | | 18/37 | 16/33 h | 0.61/0.61 |
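
The `es_min_delta` and `rlrop` settings in this table interact with how long each run trains. The sketch below shows one common way such settings behave, reading `rlrop` as "reduce learning rate on plateau" (an interpretation, not confirmed by this section). The class name, the patience values, the reduction factor, and the choice to use the same minimum delta for both mechanisms are hypothetical.

```python
class PlateauMonitor:
    """Counts epochs without a validation-loss improvement larger than min_delta."""

    def __init__(self, patience, min_delta):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.stale_epochs = 0

    def step(self, val_loss):
        """Record one epoch; return True once patience is exhausted."""
        if self.best - val_loss > self.min_delta:
            self.best = val_loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


def simulate(val_losses, lr=0.001, es_min_delta=0.1,
             es_patience=3, lr_patience=2, lr_factor=0.5):
    """Replay validation losses; return (epoch where training stops, final lr).

    All default values here are hypothetical and only illustrate the mechanism.
    """
    early_stop = PlateauMonitor(es_patience, es_min_delta)
    reduce_lr = PlateauMonitor(lr_patience, es_min_delta)
    for epoch, loss in enumerate(val_losses, start=1):
        if reduce_lr.step(loss):
            lr *= lr_factor             # plateau detected: lower the learning rate
            reduce_lr.stale_epochs = 0  # give the new rate a fresh patience window
        if early_stop.step(loss):
            return epoch, lr            # no sufficient improvement for es_patience epochs
    return len(val_losses), lr


print(simulate([40.0, 35.0, 34.95, 34.93, 34.92, 34.91]))
```

Under these assumptions, a larger `es_min_delta` treats small gains as no improvement and stops earlier, which fits the shorter runs listed for `es_min_delta=0.9` compared with `0.05`.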

| Language | Datasets | Additional Infos | Training epochs of best model<br>Total training duration | Losses<br>Performance Results |
|---|---|---|---|---|
| DE | BasFormtask + BasSprecherinnen + CommonVoice + CssTen + Gothic + LinguaLibre + Kurzgesagt + Mailabs + MussteWissen + PulsReportage + SWC + Tatoeba + TerraX + Tuda + Voxforge + YKollektiv + ZamiaSpeech + 5x CV-SingleWords (D17S5) | test with Voxforge + Tuda + CommonVoice others completely for training; files 10x with different augmentations; noise overlay; fixed updated rlrop; optimized german scorer; updated dataset cleaning algorithm -> include more short files; added the CV-SingleWords dataset five times because the last checkpoint had problems detecting short speech commands -> a bit more focus on training shorter words | 24<br>7d8h (7x V100-GPU) | Test: 25.082140 Validation: 23.345149<br>WER: 0.161870 CER: 0.068542 |
| DE | D17S5 | test above on CommonVoice only | | Test: 18.922359<br>WER: 0.127766 CER: 0.056331 |
| DE | D17S5 | test above on Tuda only, using all test files and full dataset cleaning | | Test: 54.675545<br>WER: 0.245862 CER: 0.101032 |
| DE | D17S5 | test above on Tuda only, using official split (excluding Realtek recordings), only text replacements | | Test: 39.755287<br>WER: 0.186023 CER: 0.064182 |
| FR | CommonVoice + CssTen + LinguaLibre + Mailabs + Tatoeba + Voxforge | test with CommonVoice, others completely for training; two step frozen transfer learning; augmentations only in second step; files 10x with different augmentations; noise overlay; fixed updated rlrop; optimized scorer; updated dataset cleaning algorithm -> include more short files | 14 + 34<br>5h + 5d13h (7x V100-GPU) | Test: 24.771702 Validation: 20.062641<br>WER: 0.194813 CER: 0.092049 |
| ES | CommonVoice + CssTen + LinguaLibre + Mailabs + Tatoeba + Voxforge | like above | 15 + 27<br>5h + 3d1h (7x V100-GPU) | Test: 21.235971 Validation: 18.722595<br>WER: 0.165126 CER: 0.075567 |