This repository contains code for semantic segmentation using PyTorch. It includes tools for training, testing, and inference, as well as model serving with FastAPI + Streamlit for interactive visualization.

Dataset source: https://www.kaggle.com/datasets/kumaresanmanickavelu/lyft-udacity-challenge

Download the dataset and unzip it into the `data/` folder.

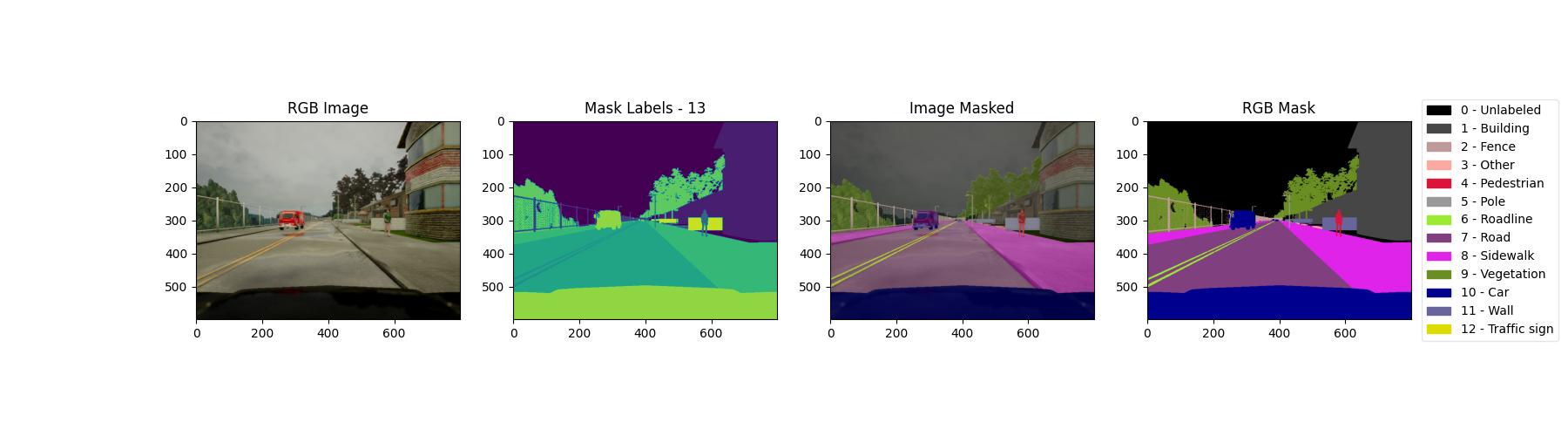

This dataset provides images and labeled semantic segmentation masks captured with the CARLA self-driving car simulator. The data was generated as part of the Lyft Udacity Challenge and can be used to train ML algorithms to segment cars, roads, etc. in an image (13 classes).
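A minimal sketch of turning a label mask into per-pixel class IDs. It assumes the Kaggle layout, where camera images sit in `CameraRGB` folders and masks with the same file names sit in `CameraSeg` folders, and it assumes the CARLA convention of storing each pixel's class ID in the red channel; verify both on a few files. The helper names are hypothetical and not part of this repository.

```python
import numpy as np

NUM_CLASSES = 13  # the dataset description lists 13 classes


def mask_to_class_ids(mask_rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB mask to an H x W array of class IDs.

    Assumption: the class ID is stored in the red channel (index 0 in RGB order).
    If the mask was read with OpenCV (BGR order), use channel 2 instead.
    """
    if mask_rgb.ndim != 3 or mask_rgb.shape[2] < 3:
        raise ValueError("expected an H x W x 3 array")
    class_ids = mask_rgb[..., 0].astype(np.int64)
    if class_ids.max() >= NUM_CLASSES:
        raise ValueError("found a class ID outside [0, NUM_CLASSES)")
    return class_ids


def class_pixel_counts(class_ids: np.ndarray) -> np.ndarray:
    """Number of pixels per class (length NUM_CLASSES), handy for spotting class imbalance."""
    return np.bincount(class_ids.ravel(), minlength=NUM_CLASSES)
```

Counting pixels per class over the training set is a quick way to decide whether class weighting is worth trying.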

Follow these steps to set up your environment and install the necessary packages:

- Clone this repository:

  ```bash
  git clone https://github.com/ederev/rb-segmentation.git
  cd rb-segmentation
  ```

- Create and activate a virtual environment:

  ```bash
  python3 -m venv .venv
  source .venv/bin/activate
  ```

  or create it via conda from the provided environment file:

  ```bash
  conda env create -f environment.yml
  conda activate .venv
  ```

- Install dependencies:

  ```bash
  pip install -r requirements.txt
  ```
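After installation, a quick way to confirm that the key packages are importable is sketched below. The module list is an assumption (align it with `requirements.txt`); note that import names can differ from pip package names, e.g. `segmentation-models-pytorch` is imported as `segmentation_models_pytorch`.

```python
import importlib.util
from typing import Iterable, List

# Assumed module list; adjust it to match requirements.txt.
REQUIRED_MODULES = [
    "torch",
    "numpy",
    "segmentation_models_pytorch",
    "onnx",
    "fastapi",
    "streamlit",
]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in the active environment."""
    # find_spec locates a module without importing it and returns None if it is absent
    return [name for name in names if importlib.util.find_spec(name) is None]
```

For example, `print(missing_modules(REQUIRED_MODULES) or "all required modules found")` run inside the activated environment.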

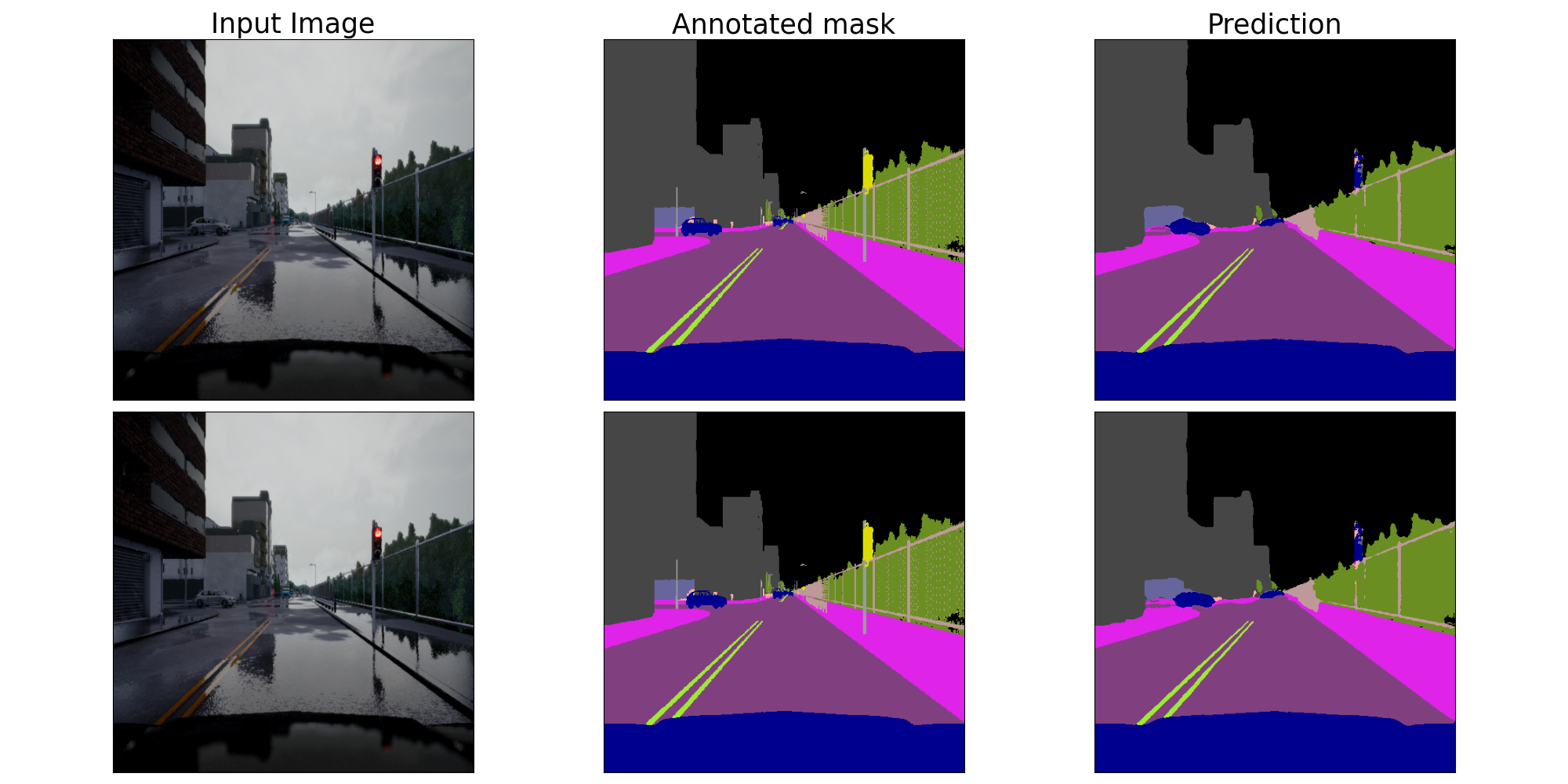

To train a semantic segmentation model, run the following command:

```bash
python -m segmentation.train --config=configs/unet.yaml --project_path=outputs
```

where

- `--config` is the path to the experiment config in `configs/`.

  Note: available models are `UNetCustom` (a U-Net implemented from scratch) and `SMP` (any architecture + encoder supported by Segmentation Models PyTorch).

- `--project_path` is the path for storing project artifacts (models, logs, checkpoints, visualizations, metrics, etc.).
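A minimal sketch of how the two flags above could be parsed and how a parsed config might select between the two model families. The `model.name` config key and the `check_model_name` helper are hypothetical, and loading the YAML file itself is omitted; the actual `segmentation.train` script may be organized differently.

```python
import argparse

# Model families named in this README; how the real config selects them is an assumption.
SUPPORTED_MODELS = {"UNetCustom", "SMP"}


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Train a semantic segmentation model.")
    parser.add_argument("--config", required=True,
                        help="path to an experiment config in configs/")
    parser.add_argument("--project_path", required=True,
                        help="where to store models, logs, checkpoints, visualizations, metrics")
    return parser


def check_model_name(config: dict) -> str:
    """Validate the (hypothetical) 'model.name' entry of an already-parsed config dict."""
    name = config.get("model", {}).get("name")
    if name not in SUPPORTED_MODELS:
        raise ValueError(f"unknown model {name!r}; expected one of {sorted(SUPPORTED_MODELS)}")
    return name
```

`argparse` accepts both `--config=configs/unet.yaml` and `--config configs/unet.yaml`, so the command above works either way.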

As a result, the trained PyTorch model is saved and exported to the following inference formats: `.pt`, `.pth`, and `.onnx`.
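The two PyTorch-native formats can be produced roughly as sketched below: `.pth` holds only the weights (`state_dict`), while the traced `.pt` file is a self-contained TorchScript module (the serving section refers to such a file as `model_traced.pt`). `TinySegNet` and `export_model` are hypothetical stand-ins, not this repository's code. The `.onnx` export would use `torch.onnx.export`; it is left out here because, depending on the PyTorch version, it may require the `onnx`/`onnxscript` packages.

```python
import torch
import torch.nn as nn


class TinySegNet(nn.Module):
    """Hypothetical stand-in for a real segmentation model: 3 input channels, 13 class logits."""

    def __init__(self, num_classes: int = 13):
        super().__init__()
        self.head = nn.Conv2d(3, num_classes, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.head(x)  # (N, 13, H, W) logits


def export_model(model: nn.Module, out_stem: str, height: int = 64, width: int = 64) -> tuple:
    """Save weights as <stem>.pth and a traced TorchScript module as <stem>_traced.pt."""
    model.eval()  # switch dropout / batch-norm to inference behavior before tracing
    example = torch.randn(1, 3, height, width)

    pth_path = f"{out_stem}.pth"
    torch.save(model.state_dict(), pth_path)  # weights only; reload with load_state_dict

    pt_path = f"{out_stem}_traced.pt"
    with torch.no_grad():
        traced = torch.jit.trace(model, example)  # records the ops executed on `example`
    traced.save(pt_path)  # reload with torch.jit.load, no Python class definition needed
    return pth_path, pt_path
```

Tracing records only the operations run for the example input, so data-dependent control flow in `forward` would not be captured; `torch.jit.script` is the usual alternative in that case.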

TensorBoard log visualization is also supported:

```bash
tensorboard --logdir outputs/<experiment-folder>/logs
```
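Which metrics this repository writes to these logs is not spelled out here; as an illustration, the sketch below computes mean IoU, a common semantic segmentation metric, from a confusion matrix. The function names are hypothetical. A value like this could be logged per epoch with `SummaryWriter.add_scalar(tag, value, step)` from `torch.utils.tensorboard`.

```python
import numpy as np


def confusion_matrix(pred: np.ndarray, target: np.ndarray, num_classes: int) -> np.ndarray:
    """Rows: ground-truth class, columns: predicted class. Inputs are integer class-ID arrays."""
    idx = target.ravel().astype(np.int64) * num_classes + pred.ravel().astype(np.int64)
    return np.bincount(idx, minlength=num_classes * num_classes).reshape(num_classes, num_classes)


def per_class_iou(conf: np.ndarray) -> np.ndarray:
    """IoU = TP / (TP + FP + FN) per class; NaN for classes absent from both pred and target."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as class c, but labeled otherwise
    fn = conf.sum(axis=1) - tp  # labeled class c, but predicted otherwise
    denom = tp + fp + fn
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom > 0, tp / denom, np.nan)


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int = 13) -> float:
    """Average IoU over the classes that actually occur (NaNs are skipped)."""
    return float(np.nanmean(per_class_iou(confusion_matrix(pred, target, num_classes))))
```

For `target = [[0, 0], [1, 1]]` and `pred = [[0, 1], [1, 1]]` with two classes, the per-class IoUs are 1/2 and 2/3, so mean IoU is 7/12.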

Artifacts for the 'UNet' + 'timm-mobilenetv3_large_100' experiment: DOWNLOAD LINK (place them in the `outputs/` folder).

Several tests of the inference models are executed automatically at the end of the training process in `segmentation/train.py`.
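A typical check of this kind compares the outputs of the original model with those of an exported model (traced `.pt` or `.onnx`) on the same input. The sketch below is a hypothetical version of such a check, not the tests in `train.py`; the tolerances are assumptions and may need loosening for reduced-precision or GPU backends.

```python
import numpy as np


def outputs_match(reference, candidate, rtol: float = 1e-3, atol: float = 1e-4,
                  min_mask_agreement: float = 0.999) -> bool:
    """Compare (N, C, H, W) logits from the original model and an exported model."""
    reference = np.asarray(reference, dtype=np.float32)
    candidate = np.asarray(candidate, dtype=np.float32)
    if reference.shape != candidate.shape:
        return False
    # Element-wise closeness of the raw logits
    close = np.allclose(reference, candidate, rtol=rtol, atol=atol)
    # Agreement of the predicted masks (argmax over the class axis), which is what users see
    agreement = np.mean(reference.argmax(axis=1) == candidate.argmax(axis=1))
    return bool(close and agreement >= min_mask_agreement)
```

Checking the argmax masks in addition to the logits catches cases where small numeric drift flips the predicted class on many pixels.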

You can also run other tests to make sure the code works correctly, using the following commands:

- Inference on a video feed using a pre-trained model:

  ```bash
  python -m segmentation.inference
  ```

- Benchmarking CPU and GPU models:

  ```bash
  python -m segmentation.benchmark
  ```
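Latency benchmarks usually follow the same pattern: a few untimed warm-up calls, then many timed calls summarized by mean/median. A minimal, framework-agnostic sketch is shown below; the `benchmark` helper is hypothetical and not the implementation of `segmentation.benchmark`.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls of `fn` and return latency statistics in milliseconds."""
    for _ in range(warmup):
        fn()  # untimed: lazy initialization, caches, and autotuning happen here
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append((time.perf_counter() - start) * 1000.0)
    mean_ms = statistics.mean(timings)
    return {
        "mean_ms": mean_ms,
        "median_ms": statistics.median(timings),
        "min_ms": min(timings),
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }
```

For a GPU model, `fn` should end with `torch.cuda.synchronize()`: CUDA kernels run asynchronously, so without it the timer measures only the kernel launch.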

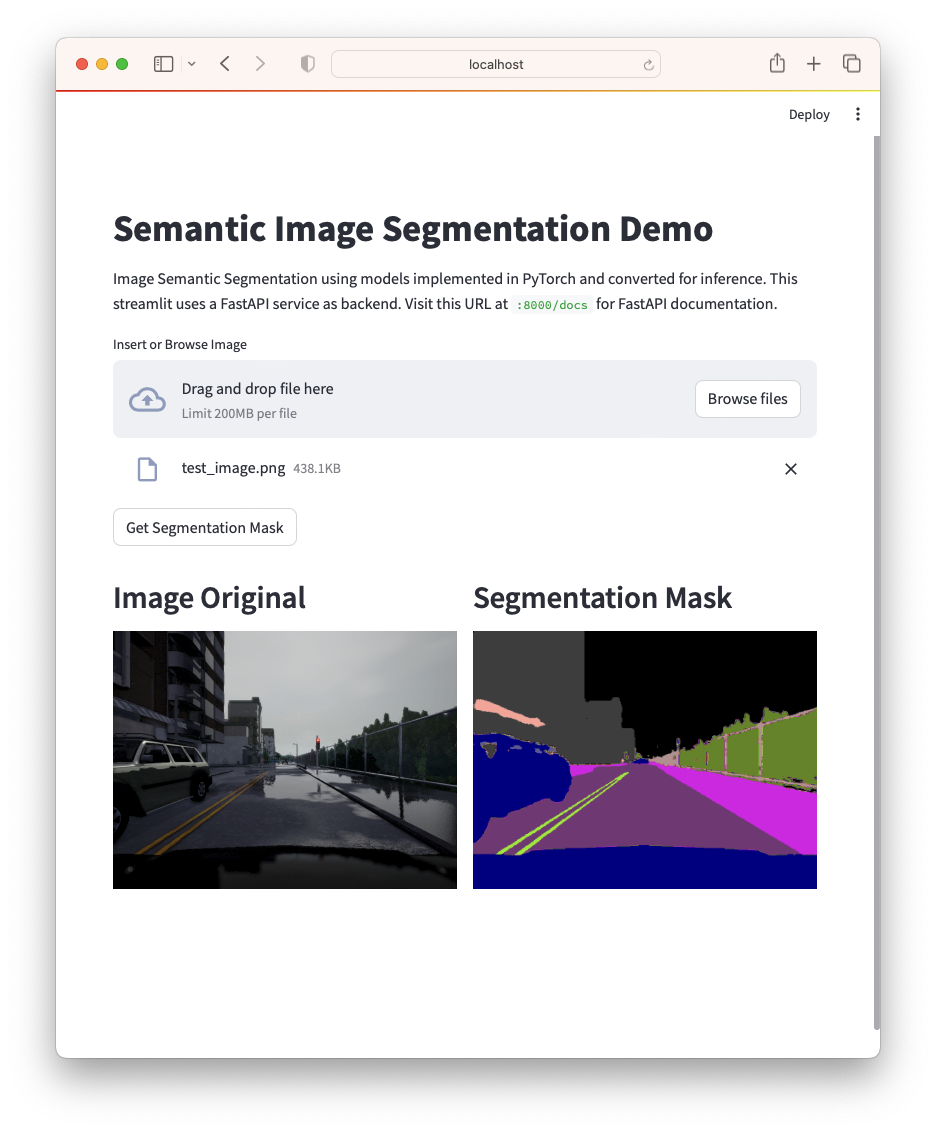

Example demo of using Streamlit and FastAPI to serve a TorchScript (`torch.jit`) model.

Here, the traced semantic segmentation model `model_traced.pt` is served using FastAPI for the backend service and Streamlit for the frontend service.

Docker Compose orchestrates the two services and allows them to communicate with each other.
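On the frontend side, a predicted class mask has to be turned into something viewable. The sketch below assumes the backend returns an H x W array of class IDs (the actual response format of this app is not documented here) and blends a colorized mask onto the input image; the result could then be displayed with Streamlit's `st.image`. The palette and function names are hypothetical.

```python
import numpy as np


def make_palette(num_classes: int = 13, seed: int = 0) -> np.ndarray:
    """Deterministic pseudo-random RGB color per class (the real app may use a fixed palette)."""
    rng = np.random.default_rng(seed)
    return rng.integers(0, 256, size=(num_classes, 3)).astype(np.uint8)


def overlay_mask(image: np.ndarray, class_ids: np.ndarray, palette: np.ndarray,
                 alpha: float = 0.5) -> np.ndarray:
    """Alpha-blend a colorized H x W class-ID mask onto an H x W x 3 uint8 RGB image."""
    if image.shape[:2] != class_ids.shape:
        raise ValueError("image and mask must have the same height and width")
    color = palette[class_ids]  # fancy indexing maps H x W IDs to H x W x 3 colors
    blended = (1.0 - alpha) * image.astype(np.float32) + alpha * color.astype(np.float32)
    return np.clip(blended, 0, 255).astype(np.uint8)
```

Keeping this step on the frontend lets the backend return compact class IDs while the UI controls colors and transparency.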

Make sure you have Docker installed.

To run the example on a machine with Docker and Docker Compose installed, run:

```bash
docker compose build
docker compose up
```

The FastAPI app will be available at http://localhost:8000, and the Streamlit UI at http://localhost:8501.

For the FastAPI interactive documentation, visit http://localhost:8000/docs.
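Containers can take a while to become reachable after `docker compose up`. A small stdlib-only readiness check is sketched below; the `wait_for_service` helper is hypothetical, and it simply polls a URL (for example the FastAPI `/docs` page or the Streamlit root) until it answers with HTTP 200.

```python
import time
import urllib.request


def wait_for_service(url: str, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll `url` until it answers with HTTP 200 or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except OSError:
            pass  # not up yet: connection refused/reset, HTTP error, or timeout
        time.sleep(interval)
    return False
```

Usage, after `docker compose up -d`:

```python
for url in ("http://localhost:8000/docs", "http://localhost:8501"):
    print(url, "up" if wait_for_service(url) else "not reachable")
```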