Machine Learning Zoomcamp Capstone 2 project

- Problem statement

- Directory layout

- Setup

- Training model

- Notebooks

- Running the app with Docker (Recommended)

- Running with Kubernetes

- Application running on Cloud

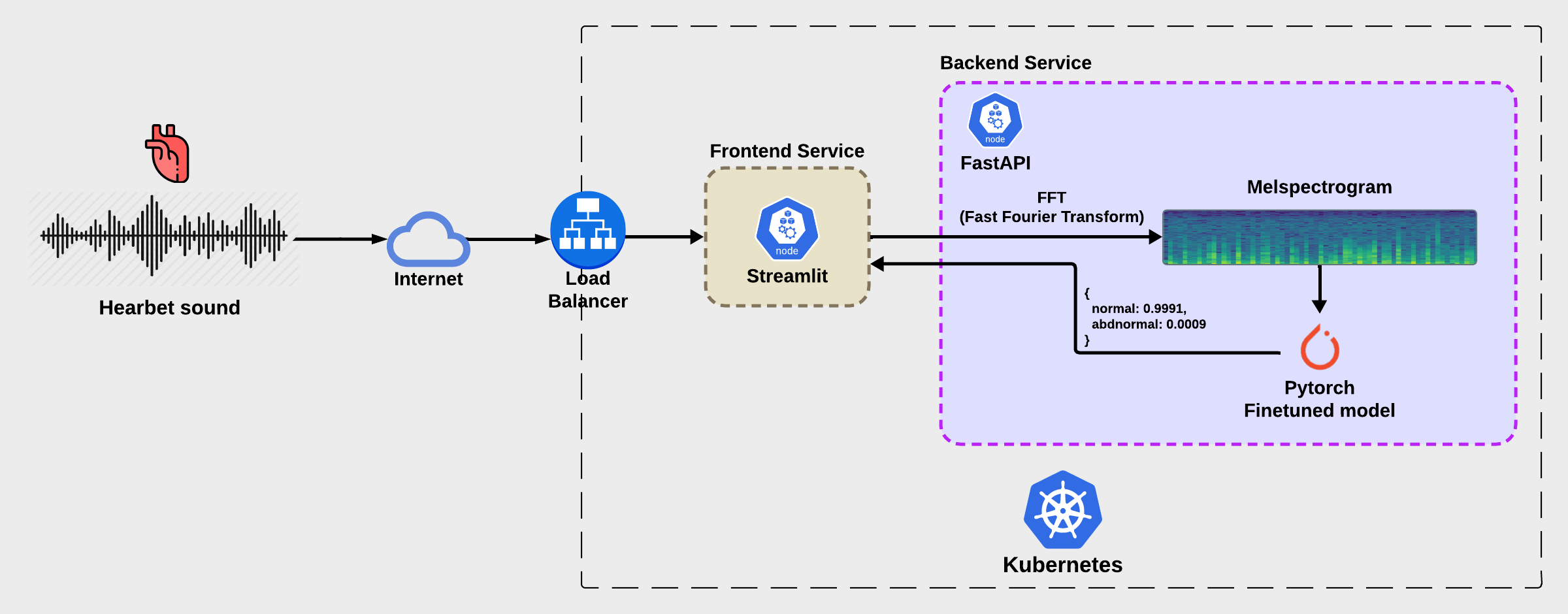

- Architecture

- Checkpoints

- References

This project addresses the challenge of early detection of heart anomalies by giving individuals a tool to assess their cardiovascular health from heart sound analysis. Whether users are proactively monitoring their well-being or are concerned about a potential cardiac condition, an accurate anomaly detector is essential. To meet this need, a deep learning model has been developed and trained using the "Heartbeat Sound Anomaly Detection Database" (see References), a collection of audio files capturing diverse heart sounds.

The database encompasses information on ten crucial audio features, including variations in heartbeats, murmurs, tones, and other distinctive sound patterns associated with cardiac health. These audio variables were meticulously analyzed to uncover hidden patterns and gain valuable insights into factors influencing heart anomalies. Subsequently, a cutting-edge deep learning model was rigorously trained, validated, and deployed in real-time, enabling efficient and accurate detection of heart anomalies through the analysis of heartbeat sounds.

By leveraging this innovative solution, individuals can proactively monitor their cardiovascular health, receive timely alerts for potential anomalies detected in heartbeat sounds, and take preventive measures to ensure their well-being. The implementation of advanced technology in this domain empowers users to make informed decisions about their heart health, contributing to early intervention and improved cardiovascular outcomes.

.

├── backend_app # Backend files

├── config_management # Config files

├── frontend_app # Directory with files to create Streamlit UI application

├── images # Assets

├── model_pipeline # Files to preprocess and train the model

├── pth_models # Trained models

└── notebooks # Notebooks used to explore data and select the best model

7 directories

- Rename `.env.example` to `.env` and set your Kaggle credentials in this file.
  - Sign in to your Kaggle account.
  - Go to https://www.kaggle.com/settings
  - Click on `Create new Token` to download the `kaggle.json` file.
  - Copy the `username` and `key` values and paste them into the corresponding `.env` variables.
- Make installation:
  - For UNIX-based systems and Windows (WSL), you do not need to install make.
  - For Windows without WSL:
    - Install Chocolatey (see the official Chocolatey installation instructions).
    - Then, run `choco install make`.
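To illustrate how the credentials in `.env` can reach the Kaggle client, here is a minimal sketch of a loader. The Kaggle client reads the `KAGGLE_USERNAME` and `KAGGLE_KEY` environment variables; that `.env` uses exactly these two names is an assumption, and `load_env_file` and `export_kaggle_credentials` are hypothetical helpers, not functions from this repository.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def export_kaggle_credentials(values: dict[str, str]) -> None:
    """Copy the credentials into the process environment.

    Assumption: the .env file uses the variable names KAGGLE_USERNAME
    and KAGGLE_KEY, which are the names the Kaggle client looks for.
    """
    for name in ("KAGGLE_USERNAME", "KAGGLE_KEY"):
        if not values.get(name):
            raise KeyError(f"{name} is missing or empty in the .env file")
        os.environ[name] = values[name]
```

Calling `export_kaggle_credentials(load_env_file(".env"))` before any dataset download would make the credentials visible to the current process only; nothing is written to disk.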

This project already provides a trained model in the `/pth_models` directory to deploy the application. If you wish to generate new models, follow the steps below.

Note that training takes several minutes, even with a GPU available:

- Create a conda environment: `conda create -n <name-of-env> python=3.11`
- Start the environment: `conda activate <name-of-env>` or `source activate <name-of-env>`
- Install the PyTorch dependencies:
  - With GPU: `pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121`
  - With CPU: `pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu`
- Install the rest of the dependencies: `pip install kaggle pandas numpy seaborn pyyaml matplotlib ipykernel librosa`
- Run `make train`
- The models will be saved to the `/pth_models` directory with the following pattern: `epoch_{epoch}_acc={accuracy in training}_val_acc={accuracy in validation}.pth`
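Because the training and validation accuracy are embedded in each file name, comparing the saved models can be scripted. The following is a minimal sketch, assuming the file names follow the pattern above exactly. Ranking by validation accuracy is only one possible criterion, not one this project prescribes, and `parse_checkpoint` and `best_checkpoint` are hypothetical helpers.

```python
import re
from pathlib import Path
from typing import Iterable, NamedTuple, Optional

# Matches e.g. epoch_4_acc=0.8416_val_acc=0.7614.pth
CHECKPOINT_RE = re.compile(
    r"epoch_(?P<epoch>\d+)_acc=(?P<acc>\d*\.?\d+)_val_acc=(?P<val_acc>\d*\.?\d+)\.pth"
)


class Checkpoint(NamedTuple):
    path: str
    epoch: int
    acc: float
    val_acc: float


def parse_checkpoint(path: str) -> Optional[Checkpoint]:
    """Extract epoch and accuracies from a file name, or None if it does not match."""
    match = CHECKPOINT_RE.fullmatch(Path(path).name)
    if match is None:
        return None
    return Checkpoint(
        path, int(match["epoch"]), float(match["acc"]), float(match["val_acc"])
    )


def best_checkpoint(paths: Iterable[str]) -> Optional[Checkpoint]:
    """Return the checkpoint with the highest validation accuracy, if any."""
    parsed = [c for c in map(parse_checkpoint, paths) if c is not None]
    return max(parsed, key=lambda c: c.val_acc, default=None)
```

In practice the paths could come from `Path("pth_models").glob("*.pth")`; files that do not match the pattern are simply ignored.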

Training produces one model file per epoch. Choose one of them afterwards and set it in the `model_name` property of the configuration file:

title: 'Hearbet Sound Anomaly Detector API'

unzipped_directory: unzipped_data

kaggle_dataset: kinguistics/heartbeat-sounds

model_name: pth_models/epoch_4_acc=0.8416_val_acc=0.7614.pth ## Replace for the new model

...

You are free to change the other parameters in this configuration file as needed.
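Pointing the configuration at a different model only requires changing the `model_name` line. As a sketch under stated assumptions, the hypothetical helper below rewrites that line in the configuration text using only the standard library. It assumes `model_name` is a top-level key on a single line, and it drops any trailing comment on that line; a YAML library would be a more robust choice for anything beyond this simple case.

```python
import re


def set_model_name(config_text: str, model_path: str) -> str:
    """Return config_text with the value of the top-level model_name key replaced."""
    pattern = re.compile(r"^model_name:.*$", flags=re.MULTILINE)
    if not pattern.search(config_text):
        raise ValueError("no top-level 'model_name' key found")
    # A function replacement avoids backslash interpretation in model_path.
    return pattern.sub(lambda _m: f"model_name: {model_path}", config_text, count=1)
```

The function works on text rather than on a file so that the caller decides which configuration file to read and whether to write the result back.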

Run the notebooks in the `notebooks/` directory to conduct Exploratory Data Analysis and model training. The environment created in the previous section can also be used here.

Run `docker-compose up --build` to start the services for the first time, or `docker-compose up` to start them after the initial build. The services are then available at:

- http://localhost:8501 (Streamlit UI)
- http://localhost:8000 (Backend service)
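To confirm that both containers are answering before opening the UI, a small check can be scripted. This is a minimal sketch using only the standard library; it assumes the two URLs above and treats any HTTP response, including an error status, as proof that the service is running. `is_reachable` and `check_services` are hypothetical helpers.

```python
import urllib.error
import urllib.request

SERVICES = {
    "Streamlit UI": "http://localhost:8501",
    "Backend service": "http://localhost:8000",
}


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at url, whatever the status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status (e.g. 404), so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure or timeout: nothing is answering.
        return False


def check_services(services: dict[str, str] = SERVICES) -> dict[str, bool]:
    """Map each service name to whether its URL is reachable."""
    return {name: is_reachable(url) for name, url in services.items()}
```

Calling `check_services()` returns a dictionary such as one entry per service with `True` or `False`, which makes it easy to see which container failed to start.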

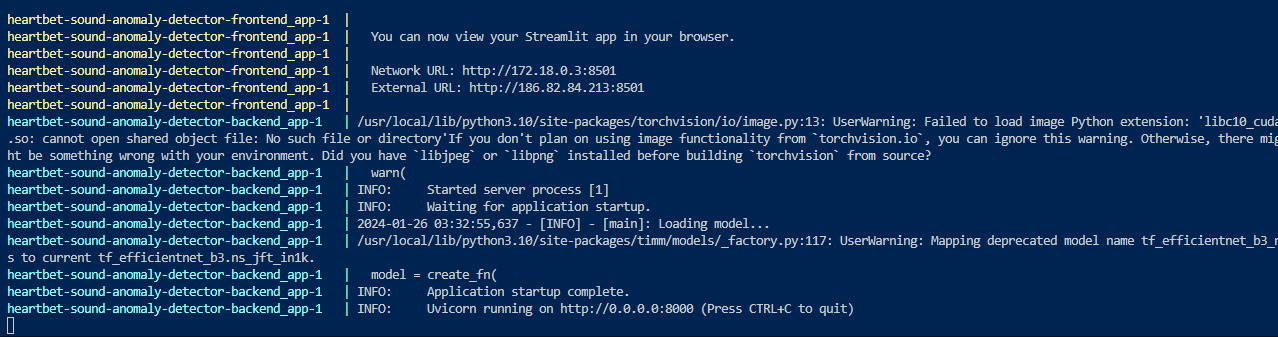

The output should look like this:

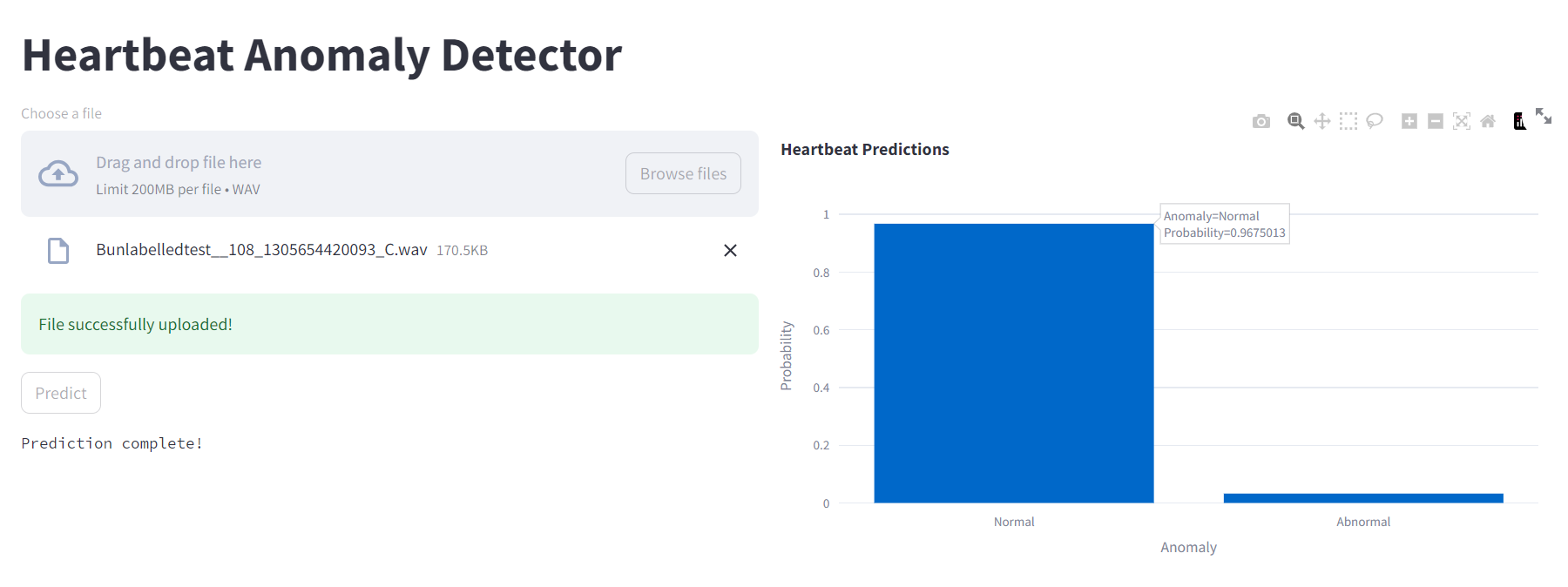

User interface designed using Streamlit to interact with backend endpoints:

If you did not train a model, run `make fetch_dataset` to download the dataset from Kaggle and test the prediction endpoint.

- Stop the services with `docker-compose down`

Assuming you have the `kind` tool installed on your system, follow the instructions in kube_instructions.md.

The application has been deployed to the cloud using AWS Elastic Beanstalk. The frontend and the backend were deployed separately using the `eb` command.

- Problem description

- EDA

- Model training

- Exporting notebook to script

- Reproducibility

- Model deployment

- Dependency and environment management

- Containerization

- Cloud deployment

- Linter

- CI/CD workflow

- Pipeline orchestration

- Unit tests