This repository contains a from-scratch implementation of the Tacotron text-to-speech model. It follows the structure and principles described in the original paper by Wang et al. (2017), with some modifications and improvements.

Check out the generated audio samples in the `samples` folder.

- End-to-end text-to-speech synthesis

- Mel-spectrogram generation

- Griffin-Lim vocoder for waveform synthesis

- Support for custom datasets
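The Griffin-Lim vocoder recovers a waveform from a magnitude spectrogram alone. It alternates between inverting the current complex spectrogram to a signal and keeping only the phase of that signal's STFT, recombined with the target magnitude. The NumPy sketch below illustrates the idea; it is not the repository's code, and the periodic Hann window, `N_FFT=1024`, `HOP=256`, and the iteration count are illustrative assumptions. It expects a linear-frequency magnitude spectrogram, so a mel-spectrogram would first have to be mapped back to linear frequency.

```python
import numpy as np

# Illustrative STFT settings (assumptions, not taken from the repository config).
N_FFT = 1024
HOP = 256
WINDOW = np.hanning(N_FFT + 1)[:-1]  # periodic Hann window


def stft(x):
    """STFT without padding: returns an array of shape (frames, N_FFT // 2 + 1)."""
    n_frames = 1 + (len(x) - N_FFT) // HOP
    frames = np.stack([x[i * HOP:i * HOP + N_FFT] * WINDOW for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)


def istft(spec):
    """Inverse STFT by weighted overlap-add, normalized by the summed squared window."""
    frames = np.fft.irfft(spec, n=N_FFT, axis=1)
    length = N_FFT + HOP * (len(frames) - 1)
    signal = np.zeros(length)
    window_sum = np.zeros(length)
    for i, frame in enumerate(frames):
        signal[i * HOP:i * HOP + N_FFT] += frame * WINDOW
        window_sum[i * HOP:i * HOP + N_FFT] += WINDOW ** 2
    # Floor the normalizer so the barely observed edge samples do not blow up.
    return signal / np.maximum(window_sum, 1e-3)


def griffin_lim(magnitude, n_iter=50, seed=0):
    """Estimate a waveform whose STFT magnitude approximates `magnitude`."""
    rng = np.random.default_rng(seed)
    # Start from a random phase.
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        signal = istft(magnitude * phase)
        # Keep the phase of the re-analyzed signal and discard its magnitude.
        phase = np.exp(1j * np.angle(stft(signal)))
    return istft(magnitude * phase)


if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr) / sr
    target = np.abs(stft(np.sin(2 * np.pi * 440 * t)))  # toy 440 Hz tone
    audio = griffin_lim(target)
    print(audio.shape)  # prints (15872,)
```

Since librosa is already a dependency, its `librosa.griffinlim` function, which also handles centering and padding, is likely the more practical choice outside of a learning exercise.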

Clone the repository and install the required dependencies:

git clone https://github.com/dykyivladk1/tacotron.git

cd tacotron

pip install -r requirements.txt

Ensure you have the necessary libraries installed. The main dependencies are:

- PyTorch

- NumPy

- Librosa

- Matplotlib

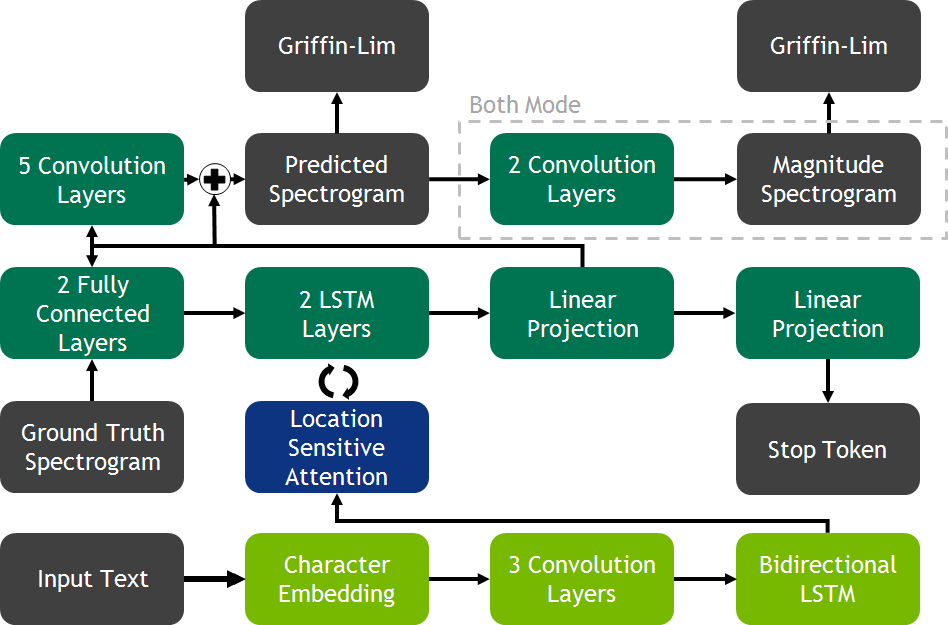

The model architecture consists of the following components:

- Frontend
  - Text Normalization
  - Token Embedding
- Encoder
  - CBHG (Convolutional Banks, Highway Networks, Bidirectional GRU)
- Attention Mechanism
  - Bahdanau Attention
- Decoder
  - PreNet
  - Autoregressive GRU Layers
  - Linear Projection Layer
- Waveform Synthesis
  - Griffin-Lim Algorithm or Neural Vocoder
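Bahdanau (additive) attention scores each encoder output against the current decoder state with a small feed-forward network, normalizes the scores with a softmax, and returns the weighted sum of encoder outputs as the context vector. The following is a minimal NumPy sketch of a single decoder step, not the repository's implementation; the parameter names `W_q`, `W_k`, and `v` are hypothetical, and a trained model would learn them rather than sample them at random.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def bahdanau_attention(query, keys, W_q, W_k, v):
    """One step of additive attention: score_j = v . tanh(W_q @ query + W_k @ keys[j]).

    query: (d_q,) decoder state; keys: (T, d_k) encoder outputs;
    W_q: (d_a, d_q); W_k: (d_a, d_k); v: (d_a,).
    Returns the context vector (d_k,) and the attention weights (T,).
    """
    scores = np.tanh(keys @ W_k.T + W_q @ query) @ v  # (T,)
    weights = softmax(scores)  # non-negative, sums to 1
    context = weights @ keys  # weighted sum of encoder outputs
    return context, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, d_q, d_k, d_a = 6, 8, 4, 5  # toy sizes
    context, weights = bahdanau_attention(
        rng.standard_normal(d_q),
        rng.standard_normal((T, d_k)),
        rng.standard_normal((d_a, d_q)),
        rng.standard_normal((d_a, d_k)),
        rng.standard_normal(d_a),
    )
    print(context.shape, weights.shape)  # prints (4,) (6,)
```

In the original Tacotron, a recurrent layer produces the query at every decoder step, so this computation would run once per output frame.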

To train the model on a custom dataset, prepare the data in the following format:

- `data/`: contains all files
  - `wav`: all WAV audio files
  - `metadata.csv`: all text transcripts and the corresponding audio file names

The metadata.csv should have the following format:

`filename|transcription`

Run the preprocessing script to convert the audio files into mel-spectrograms:

python source/prepare_data.py --data-dir data --output_dir processed_data --metadata_file metadata.csv --config-path config.json --num-jobs 4

Then run the split script to divide the dataset into training and test sets:

python source/split.py --metadata metadata.txt

Here, `metadata.txt` is the file containing the indices of all files.
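Conceptually, the split step amounts to parsing the pipe-delimited metadata and shuffling the item indices into two disjoint sets. The sketch below only illustrates that idea and does not reproduce what `source/split.py` actually does; the function names `parse_metadata` and `split_indices`, the default 10% test fraction, and the fixed seed are all hypothetical.

```python
import random


def parse_metadata(lines):
    """Parse 'filename|transcription' lines into (filename, transcription) pairs."""
    pairs = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # Split on the first '|' only, so a '|' inside the transcription survives.
        filename, transcription = line.split("|", maxsplit=1)
        pairs.append((filename, transcription))
    return pairs


def split_indices(n_items, test_fraction=0.1, seed=42):
    """Reproducibly shuffle indices 0..n_items-1 into sorted (train, test) lists."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_test = int(n_items * test_fraction)
    return sorted(indices[n_test:]), sorted(indices[:n_test])


if __name__ == "__main__":
    sample = ["a.wav|Hello world.", "b.wav|Thanks for your attention.", "c.wav|Good morning."]
    pairs = parse_metadata(sample)
    train, test = split_indices(len(pairs), test_fraction=0.34)
    print(len(train), len(test))  # prints 2 1
```

For a real dataset, `parse_metadata` can take an open file object directly, for example inside `with open("data/metadata.csv", encoding="utf-8") as f:`.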

To train the model, run:

python source/train.py

To configure the training parameters, edit `config/config.yaml`. To train for a different number of epochs, set the `EPOCHS` variable to the desired value.

To synthesize speech from text, run:

python source/inference.py --checkpoint-path weights/checkpoint_step165000.pth --text "Thanks for your attention"

The generated audio file is saved in the `samples` folder.

Wang, Y., Skerry-Ryan, R. J., Stanton, D., Wu, Y., Weiss, R. J., Jaitly, N., Yang, Z., Xiao, Y., Chen, Z., Bengio, S., Le, Q., Agiomyrgiannakis, Y., Clark, R., & Saurous, R. A. (2017). Tacotron: Towards End-to-End Speech Synthesis. arXiv. https://arxiv.org/abs/1703.10135

This project is licensed under the MIT License - see the LICENSE file for details.

Check out my Medium article on Full Text-to-Speech Synthesis with Tacotron for an in-depth explanation and tutorial.

Thanks for your attention!