Visual Language Maps for Robot Navigation

Chenguang Huang, Oier Mees, Andy Zeng, Wolfram Burgard

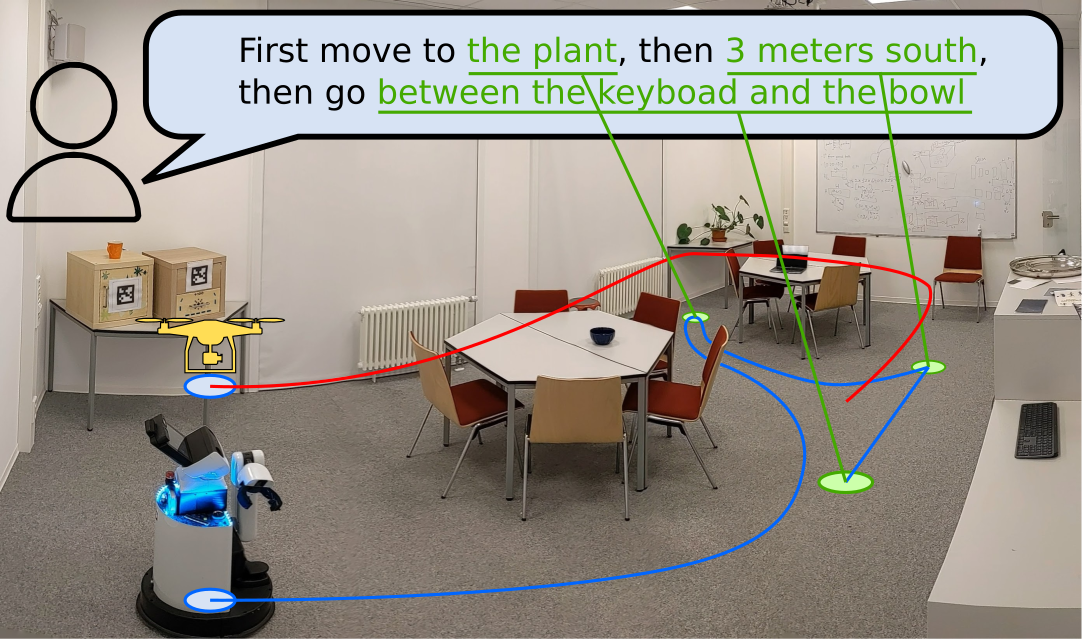

We present VLMaps (Visual Language Maps), a spatial map representation in which pretrained visual-language model features are fused into a 3D reconstruction of the physical world. Spatially anchoring visual-language features enables natural language indexing in the map, which can be used, for example, to localize landmarks or spatial references with respect to landmarks, enabling zero-shot spatial goal navigation without additional data collection or model finetuning.
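To make the idea of natural language indexing concrete, here is a minimal sketch, not the VLMaps implementation. It assumes each grid cell of a map stores a feature vector, and that each landmark name has a text embedding in the same space. The 3-D vectors are hand-written stand-ins for real visual-language model embeddings, and the names `cosine`, `index_landmark`, `feature_map`, and `text_embeddings` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def index_landmark(feature_map, text_embeddings, query):
    """Return the grid cells whose best-matching label is `query`."""
    hits = []
    for cell, feat in feature_map.items():
        # Pick the label whose text embedding is most similar to this cell.
        best = max(text_embeddings, key=lambda name: cosine(feat, text_embeddings[name]))
        if best == query:
            hits.append(cell)
    return sorted(hits)

# Toy stand-ins for text embeddings of landmark names (assumed values).
text_embeddings = {
    "sofa": [1.0, 0.0, 0.0],
    "table": [0.0, 1.0, 0.0],
    "other": [0.0, 0.0, 1.0],
}

# Toy 2x2 map: (row, col) -> fused feature vector (assumed values).
feature_map = {
    (0, 0): [0.9, 0.1, 0.0],
    (0, 1): [0.8, 0.3, 0.1],
    (1, 0): [0.1, 0.9, 0.2],
    (1, 1): [0.0, 0.2, 0.9],
}

sofa_cells = index_landmark(feature_map, text_embeddings, "sofa")
print(sofa_cells)  # [(0, 0), (0, 1)]
```

Including a catch-all label such as "other" in this sketch keeps cells that match no landmark well from being assigned to one anyway.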

Try VLMaps creation and landmark indexing in the demo notebook, demo.ipynb.

To begin on your own machine, clone this repository locally:

$ git clone https://github.com/vlmaps/vlmaps.git

Install requirements:

$ conda create -n vlmaps python=3.8 -y # or use virtualenv

$ conda activate vlmaps

$ conda install jupyter -y

$ cd vlmaps

$ bash install.bash

Start the Jupyter notebook:

$ jupyter notebook demo.ipynb

If you find the dataset or code useful, please cite:

@inproceedings{huang23vlmaps,
  title = {Visual Language Maps for Robot Navigation},
  author = {Chenguang Huang and Oier Mees and Andy Zeng and Wolfram Burgard},
  booktitle = {Proceedings of the IEEE International Conference on Robotics and Automation (ICRA)},
  year = {2023},
  address = {London, UK}
}

MIT License