If you have raw delay discounting data and you want to score that data using Bayesian methods, then you have come to the right place.

The goal here is maximal accessibility to those who are not familiar with programming. So these steps are pretty easy to follow, but we should note that we cannot do all the advanced things that we might be able to do when using a programming language like Python, Julia, or R.

If you use this work, please cite the following paper:

Vincent, B. T. (2016). Hierarchical Bayesian estimation and hypothesis testing for delay discounting tasks. Behavior Research Methods. 48(4), 1608-1620. [Link to paper]

If you literally love this, then consider buying me a coffee or just tweet about it. I am @inferenceLab on twitter.

- Download the latest version of JASP.

- Enable JAGS by running JASP, click on the "+" icon in the top right and check the tick box called "JAGS".

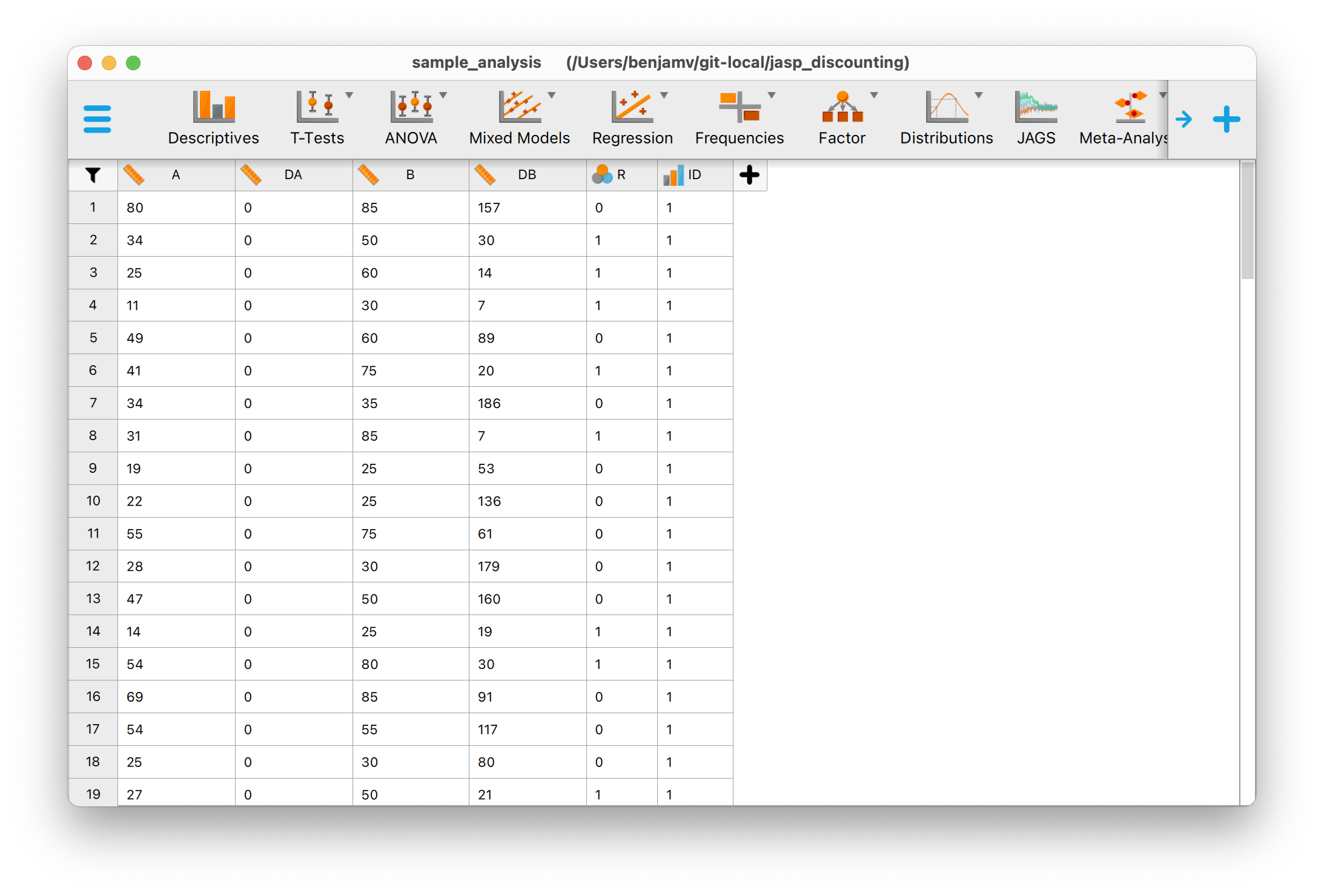

This might be the only really taxing step... Get your data into the form where you have the following columns:

A= reward magnitude of the smaller sooner rewardDA= delay to the smaller sooner rewardB= reward magnitude of the larger later rewardDB= delay to the larger later rewardR= participant response, coded as 1 = chose larger later, 0 = chose smaller sooner.ID= participant ID. These need to be1, 2, 3, ... N.

Each row will correspond to a single trial (choice) of the delay discounting experiment.

This needs to be saved in .csv format for easy import to JASP. If you are preparing your data in excel, you can simply export as a .csv file.

- Open up JAGS and

Open > Computer > Browse. If you want you can use the sample data from just 4 participants (sample_data.csv). You should end up with something that looks like this:

-

Create a JAGS analysis block by clicking on the JAGS icon in the top ribbon of JASP.

-

Enter the following JAGS model code. This particular model equates to a relatively simple model which treats the participants independently - there is no hierarchical estimation in this model. Other JAGS models are provided below.

model{

# set up priors for each participant

for (p in 1:max(ID))

{

logk[p] ~ dnorm(log(1/50), 1/(2.5^2))

alpha[p] ~ dexp(0.01)

epsilon[p] ~ dbeta(1.1 , 10.9 ) T(,0.5)

}

# loop over all trials

for (t in 1:length(R))

{

VA[t] <- A[t] / (1+(exp(logk[ID[t]])*DA[t]))

VB[t] <- B[t] / (1+(exp(logk[ID[t]])*DB[t]))

P[t] <- epsilon[ID[t]] + (1-2*epsilon[ID[t]]) * phi( (VB[t]-VA[t]) / alpha[ID[t]] )

R[t] ~ dbern(P[t])

}

}

This model scores delay discounting data according to the hyperbolic discounting model. The parameters for each participant are estimated independently. The core parameters is the log discount rate

logk. However each participant also has parameters relating to response accuracy:alphais a comparison acuity parameter which models how accurately they can compare present subjective values, andepsilonis a lapse rate corresponding to proportion of random responses. Ideallyalphaandepsilonare low values. See Vincent (2016) for more information.

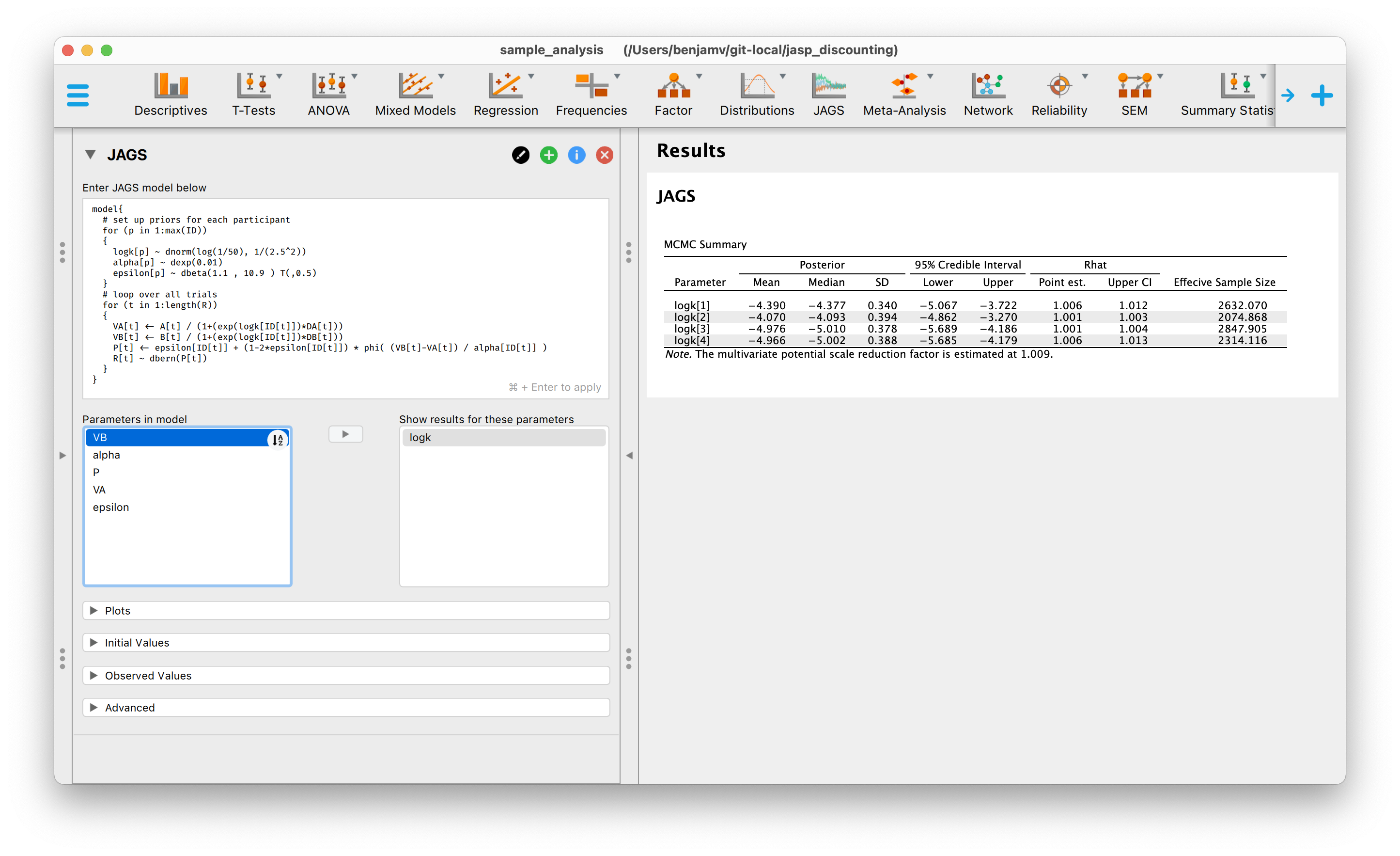

- Click

Commandand+(or whatever the equivalent is on a PC) and the model will run. If you get a message saying "Please specify which parameters to show output for!" then findlogkin the list of model parameters and move it to the box on the right. You can do that by dragging, or by clicking on the right facing arrow.

By now you should have some results that look like this:

This is quite possibly sufficient to do the job... you now have a list of posterior mean values of the logk variable. You can feel free to use these values as your Bayesian scored discount rates. However you may want to do some extra due diligence.

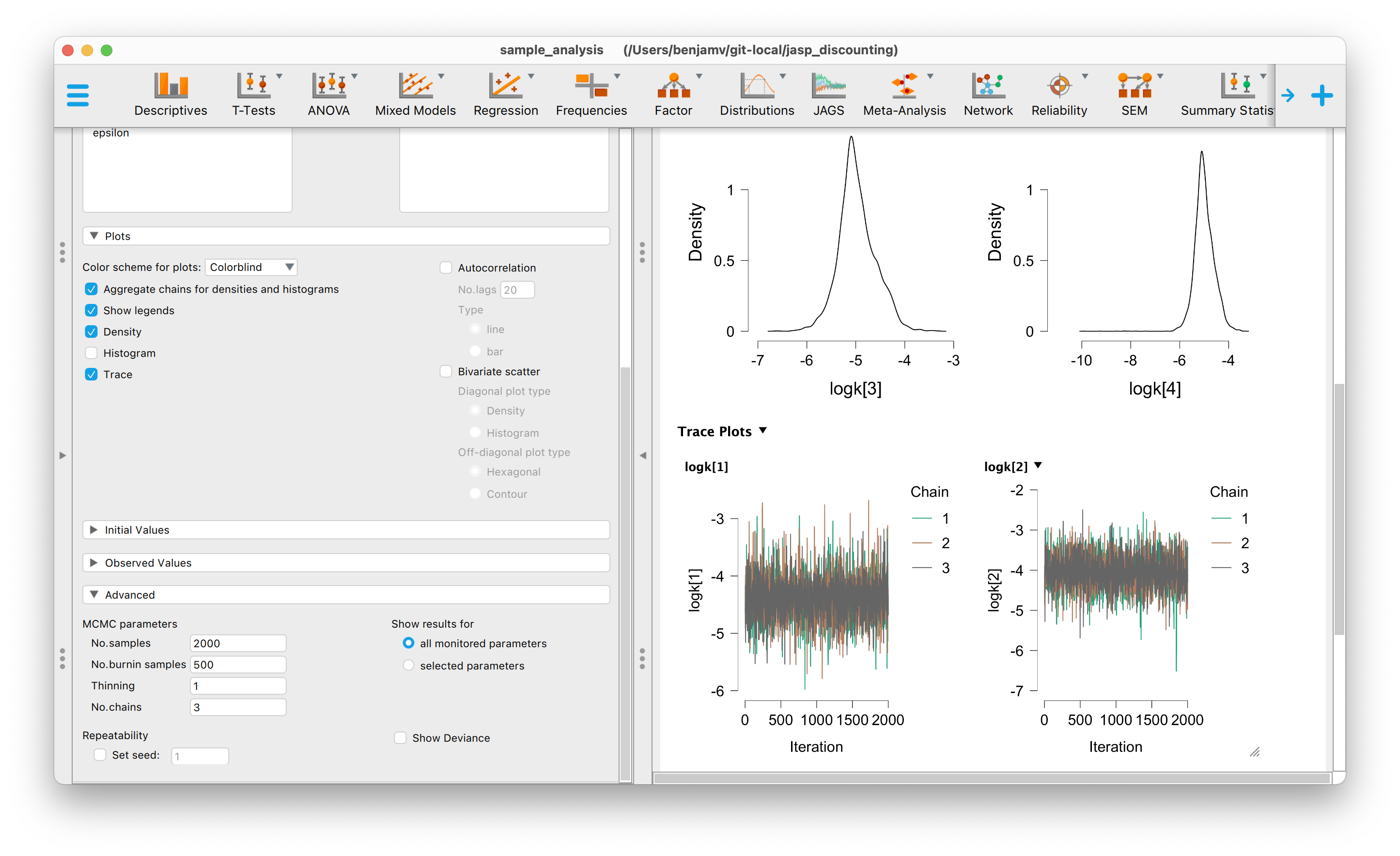

It is good practice to check that these results are meaningful estimates of the posterior means by checking convergence. If you click to see the Density and Trace plots then this can help. For the trace plots, you want to check that the chains overlap as a visual check that they are representing the same posterior distribution. Disagreement here is a bad thing.

You might also want to check the posterior density plots as a sanity check to see if they make sense to you.

To get really accurate results, you might also want to increase the number of samples. If there are issues with the chains then you could increase the number of burn-in samples.

I would also recommend that you set a random seed value in the Advanced tab. This will help others to be able to precisely replicate your results.

You can do a different analysis by pasting in different JAGS models. Here are a list of models. This list will grow over time if people find this useful - but let me know through @inferenceLab on twitter, or create an Issue here on GitHub.

The key parameters here are m and c, so make sure they are added in the 'show results for these parameters' box. This model involves no pooling - no hierarchical estimation.

model{

# set up priors for each participant

for (p in 1:max(ID))

{

m[p] ~ dnorm(-2.43, 1/(0.5^2))

c[p] ~ dnorm(0, 1/(1000^2))

alpha[p] ~ dexp(0.01)

epsilon[p] ~ dbeta(1.1 , 10.9 ) T(,0.5)

}

# loop over all trials

for (t in 1:length(R))

{

VA[t] <- A[t] / (1+(exp( m[ID[t]]*log(A[t])+c[ID[t]])))

VB[t] <- B[t] / (1+(exp( m[ID[t]]*log(B[t])+c[ID[t]])))

P[t] <- epsilon[ID[t]] + (1-2*epsilon[ID[t]]) * phi( (VB[t]-VA[t]) / alpha[ID[t]] )

R[t] ~ dbern(P[t])

}

}

Warning: It is not always the case that your data will be able to give meaningful estimates. For example, while the Kirby 27-item questionnaire is used to estimate the magnitude effect (in a slightly different way), it doesn't mean it is a test for that. For example, the range of reward magnitudes is pretty narrow, so the data alone don't always do a great job of informing us of the m or c parameters. So users should be aware of this and perhaps alter the priors according to their use case. By default I would recommend using the hyperbolic model (no magnitude effect) with Kirby data and only use the magnitude effect model with delay discounting choices which are designed to estimate discount rates over a wider range of magnitudes.

The key parameter here is k, so make sure that is added in the 'show results for these parameters' box. This model involves no pooling - no hierarchical estimation.

model{

# set up priors for each participant

for (p in 1:max(ID))

{

k[p] ~ dnorm(0.01, 1/(0.5^2))

alpha[p] ~ dexp(0.01)

epsilon[p] ~ dbeta(1.1 , 10.9 ) T(,0.5)

}

# loop over all trials

for (t in 1:length(R))

{

VA[t] <- A[t] * (exp(-k[ID[t]]*DA[t]))

VB[t] <- B[t] * (exp(-k[ID[t]]*DB[t]))

P[t] <- epsilon[ID[t]] + (1-2*epsilon[ID[t]]) * phi( (VB[t]-VA[t]) / alpha[ID[t]] )

R[t] ~ dbern(P[t])

}

}

Vincent, B. T. (2016). Hierarchical Bayesian estimation and hypothesis testing for delay discounting tasks. Behavior Research Methods. 48(4), 1608-1620.