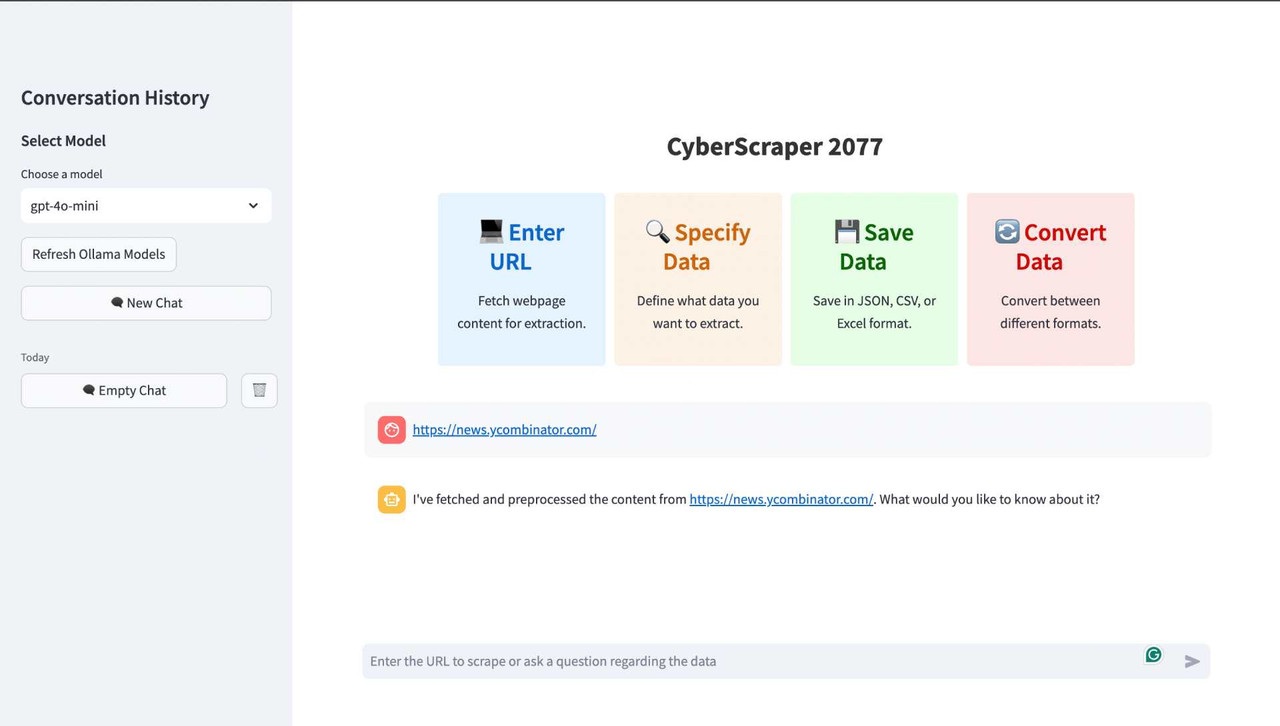

Rip data from the net, leaving no trace. Welcome to the future of web scraping.

CyberScraper 2077 is not just another web scraping tool – it's a glimpse into the future of data extraction. Born from the neon-lit streets of a cyberpunk world, this AI-powered scraper uses OpenAI to slice through the web's defenses, extracting the data you need with unparalleled precision and style.

Whether you're a corpo data analyst, a street-smart netrunner, or just someone looking to pull information from the digital realm, CyberScraper 2077 has got you covered.

- 🤖 AI-Powered Extraction: Utilizes cutting-edge AI models to understand and parse web content intelligently.

- 💻 Sleek Streamlit Interface: User-friendly GUI that even a chrome-armed street samurai could navigate.

- 🔄 Multi-Format Support: Export your data in JSON, CSV, HTML, SQL or Excel – whatever fits your cyberdeck.

- 🌐 Stealth Mode: Implemented stealth mode parameters that helps it from getting detected as bot.

- 🤖 Ollama Support: Use a huge libarary of open source LLMs.

- 🚀 Async Operations: Lightning-fast scraping that would make a Trauma Team jealous.

- 🧠 Smart Parsing: Structures scraped content as if it was extracted straight from the engram of a master netrunner.

- 🛡️ Ethical Scraping: Respects robots.txt and site policies. We may be in 2077, but we still have standards.

- 📄 Caching: We implemented content-based and query-based caching using LRU cache and a custom dictionary to reduce redundant API calls.

- ✅ Upload to Google Sheets: Now you can easily upload your extract csv data to google sheets with one click.

- 🌐 Proxy Mode (Coming Soon): Built-in proxy support to keep you ghosting through the net.

- 🛡️ Navigate through the Pages (BETA): Navigate through the webpage and scrap the data from different pages.

Check out our Redisgned and Improved Version of CyberScraper-2077 with more functionality YouTube video for a full walkthrough of CyberScraper 2077's capabilities.

Check out our first build (Old Video) YouTube video

Please follow the Docker Container Guide given below, As I won't be able to maintain another version for windows system.

-

Clone this repository:

git clone https://github.com/itsOwen/CyberScraper-2077.git cd CyberScraper-2077 -

Create and activate a virtual environment:

virtualenv venv source venv/bin/activate # Optional

-

Install the required packages:

pip install -r requirements.txt

-

Install the playwright:

playwright install

-

Set OpenAI Key in your enviornment:

Linux/Mac:

export OPENAI_API_KEY='your-api-key-here'

For Windows:

set OPENAI_API_KEY=your-api-key-here -

If you want to use the Ollama:

Note: I only recommend using OpenAI API as GPT4o-mini is really good at following instructions, If you are using open-source LLMs make sure you have a good system as the speed of the data generation/presentation depends on how good your system is in running the LLM and also you may have to fine-tune the prompt and add some additional filters yourself.

1. Setup Ollama using `pip install ollama`

2. Download the Ollama from the official website: https://ollama.com/download

3. Now type: ollama pull llama3.1 or whatever LLM you want to use.

4. Now follow the rest of the steps below.If you prefer to use Docker, follow these steps to set up and run CyberScraper 2077:

-

Ensure you have Docker installed on your system.

-

Clone this repository:

git clone https://github.com/itsOwen/CyberScraper-2077.git cd CyberScraper-2077 -

Build the Docker image:

docker build -t cyberscraper-2077 . -

Run the container:

- Without OpenAI API key:

docker run -p 8501:8501 cyberscraper-2077

- With OpenAI API key:

docker run -p 8501:8501 -e OPENAI_API_KEY='your-actual-api-key' cyberscraper-2077

- Without OpenAI API key:

-

Open your browser and navigate to

http://localhost:8501.

If you want to use Ollama with the Docker setup:

-

Install Ollama on your host machine following the instructions at https://ollama.com/download

-

Run Ollama on your host machine:

ollama pull llama3.1

-

Find your host machine's IP address:

- On Linux/Mac:

ifconfigorip addr show - On Windows:

ipconfig

- On Linux/Mac:

-

Run the Docker container with the host network and set the Ollama URL:

docker run -e OLLAMA_BASE_URL=http://host.docker.internal:11434 -p 8501:8501 cyberscraper-2077

On Linux you might need to use this below:

docker run -e OLLAMA_BASE_URL=http://<your-host-ip>:11434 -p 8501:8501 cyberscraper-2077

Replace

<your-host-ip>with your actual host machine IP address. -

In the Streamlit interface, select the Ollama model you want to use (e.g., "ollama:llama3.1").

Note: Ensure that your firewall allows connections to port 11434 for Ollama.

-

Fire up the Streamlit app:

streamlit run main.py

-

Open your browser and navigate to

http://localhost:8501. -

Enter the URL of the site you want to scrape or ask a question about the data you need.

-

Ask the chatbot to extract the data in any format, Select whatever data you want to export or even everything from the webpage.

-

Watch as CyberScraper 2077 tears through the net, extracting your data faster than you can say "flatline"!

Note: The multi-page scraping feature is currently in beta. While functional, you may encounter occasional issues or unexpected behavior. We appreciate your feedback and patience as we continue to improve this feature.

CyberScraper 2077 now supports multi-page scraping, allowing you to extract data from multiple pages of a website in one go. This feature is perfect for scraping paginated content, search results, or any site with data spread across multiple pages.

I suggest you enter the URL structure every time If you want to scrape multiple pages so it can detect the URL structure easily, It detects nearly all URL types.

-

Basic Usage: To scrape multiple pages, use the following format when entering the URL:

https://example.com/page 1-5 https://example.com/p/ 1-6 https://example.com/xample/something-something-1279?p=1 1-3This will scrape pages 1 through 5 of the website.

-

Custom Page Ranges: You can specify custom page ranges:

https://example.com/p/ 1-5,7,9-12 https://example.com/xample/something-something-1279?p=1 1,7,8,9This will scrape pages 1 to 5, page 7, and pages 9 to 12.

-

URL Patterns: For websites with different URL structures, you can specify a pattern:

https://example.com/search?q=cyberpunk&page={page} 1-5Replace

{page}with where the page number should be in the URL. -

Automatic Pattern Detection: If you don't specify a pattern, CyberScraper 2077 will attempt to detect the URL pattern automatically. However, for best results, specifying the pattern is recommended.

- Start with a small range of pages to test before scraping a large number.

- Be mindful of the website's load and your scraping speed to avoid overloading servers.

- Use the

simulate_humanoption for more natural scraping behavior on sites with anti-bot measures. - Regularly check the website's

robots.txtfile and terms of service to ensure compliance.

URL Example : "https://news.ycombinator.com/?p=1 1-3 or 1,2,3,4"If you want to scrape a specific page, Just enter the query please scrape page number 1 or 2, If you want to scrape all pages, Simply give a query like scrape all pages in csv or whatever format you want.

If you encounter errors during multi-page scraping:

- Check your internet connection

- Verify the URL pattern is correct

- Ensure the website allows scraping

- Try reducing the number of pages or increasing the delay between requests

As this feature is in beta, we highly value your feedback. If you encounter any issues or have suggestions for improvement, please:

- Open an issue on our GitHub repository

- Provide detailed information about the problem, including the URL structure and number of pages you were attempting to scrape

- Share any error messages or unexpected behaviors you observed

Your input is crucial in helping us refine and stabilize this feature for future releases.

- Go to the Google Cloud Console (https://console.cloud.google.com/).

- Select your project.

- Navigate to "APIs & Services" > "Credentials".

- Find your existing OAuth 2.0 Client ID and delete it.

- Click "Create Credentials" > "OAuth client ID".

- Choose "Web application" as the application type.

- Name your client (e.g., "CyberScraper 2077 Web Client").

- Under "Authorized JavaScript origins", add:

- Under "Authorized redirect URIs", add:

- Click "Create" to generate the new client ID.

- Download the new client configuration JSON file and rename it to

client_secret.json.

Customize the PlaywrightScraper settings to fit your scraping needs, If some websites are giving you issues, You might want to check the behaviour of the website:

use_stealth: bool = True,

simulate_human: bool = False,

use_custom_headers: bool = True,

hide_webdriver: bool = True,

bypass_cloudflare: bool = True:Adjust these settings based on your target website and environment for optimal results.

We welcome all cyberpunks, netrunners, and code samurais to contribute to CyberScraper 2077!

Ran into a glitch in the matrix? Let me know by adding the issue to this repo so that we can fix it together.

Q: Is CyberScraper 2077 legal to use? A: CyberScraper 2077 is designed for ethical web scraping. Always ensure you have the right to scrape a website and respect their robots.txt file.

Q: Can I use this for commercial purposes? A: Yes, under the terms of the MIT License. But remember, in Night City, there's always a price to pay, Just kidding!

This project is licensed under the MIT License - see the LICENSE file for details. Use it, mod it, sell it – just don't blame us if you end up flatlined.

Got questions? Need support? Want to hire me for a gig?

- 📧 Email: owensingh72@gmail.com

- 🐦 Twitter: @_owensingh

- 💬 Website: Portfolio

Listen up, choombas! Before you jack into this code, you better understand the risks:

-

This software is provided "as is", without warranty of any kind, express or implied.

-

The authors are not liable for any damages or losses resulting from the use of this software.

-

This tool is intended for educational and research purposes only. Any illegal use is strictly prohibited.

-

We do not guarantee the accuracy, completeness, or reliability of any data obtained through this tool.

-

By using this software, you acknowledge that you are doing so at your own risk.

-

You are responsible for complying with all applicable laws and regulations in your use of this software.

-

We reserve the right to modify or discontinue the software at any time without notice.

Remember, samurai: In the dark future of the NET, knowledge is power, but it's also a double-edged sword. Use this tool wisely, and may your connection always be strong and your firewalls impenetrable. Stay frosty out there in the digital frontier.

CyberScraper 2077 – Because in 2077, what makes someone a criminal? Getting caught.

Built with ❤️ and chrome by the streets of Night City | © 2077 Owen Singh