Airflow-provider-dolphindb

The airflow-provider-dolphindb package lets you run DolphinDB scripts (.dos files) from Apache Airflow workflows. It provides custom DolphinDB Hooks and Operators for running DolphinDB scripts that insert data, perform ETL transformations, build data models, and so on.

Installing airflow-provider-dolphindb

pip install airflow-provider-dolphindb

Running a DAG Example

The following example demonstrates the DolphinDB provider with a sample DAG for creating a DolphinDB database and table, and executing a .dos script to insert data. Follow these steps to run it:

- Copy example_dolphindb.py and insert_data.dos to your DAGs folder. If you use the default airflow configuration airflow.cfg, you may need to create the DAGs folder yourself at AIRFLOW_HOME/dags.

- Start your DolphinDB server on port 8848.

- Navigate to your Airflow project directory and start Airflow in development mode:

cd /path/to/airflow/project

# Only absolute paths are accepted

export AIRFLOW_HOME=/your/project/dir/

export AIRFLOW_CONN_DOLPHINDB_DEFAULT="dolphindb://admin:123456@127.0.0.1:8848"

python -m airflow standalone

For detailed instructions, refer to Airflow - Production Deployment.

- Configure a DolphinDB connection

To enable communication between Airflow and DolphinDB, you first need to configure a DolphinDB connection:

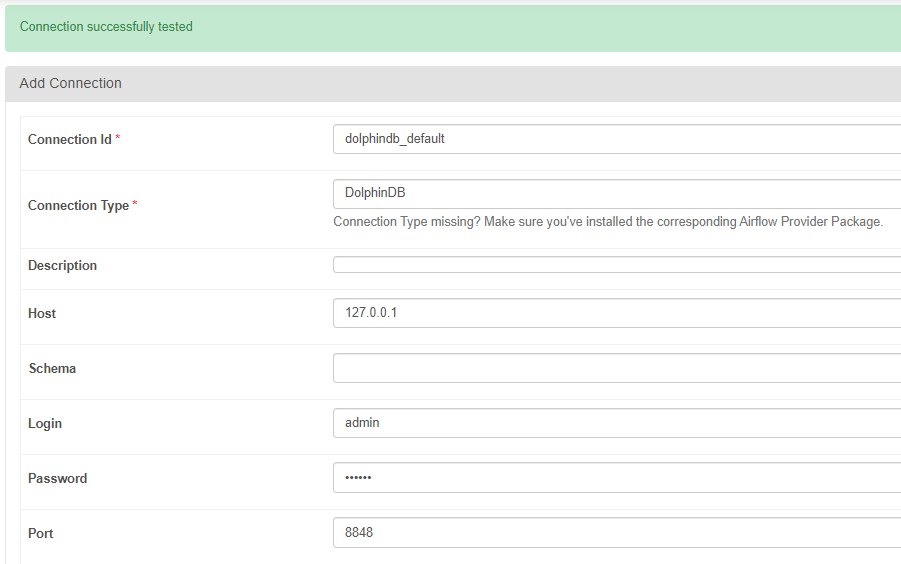

(1) Click Admin -> Connections -> + icon to add a connection.

(2) Enter the following values in the form:

Connection Id: dolphindb_default

Connection Type: DolphinDB

(3) Fill in any additional connection details according to your environment.

Click the Test button to test the connection. If the test succeeds, the message "Connection successfully tested" is displayed. Click the Save button to save the connection.

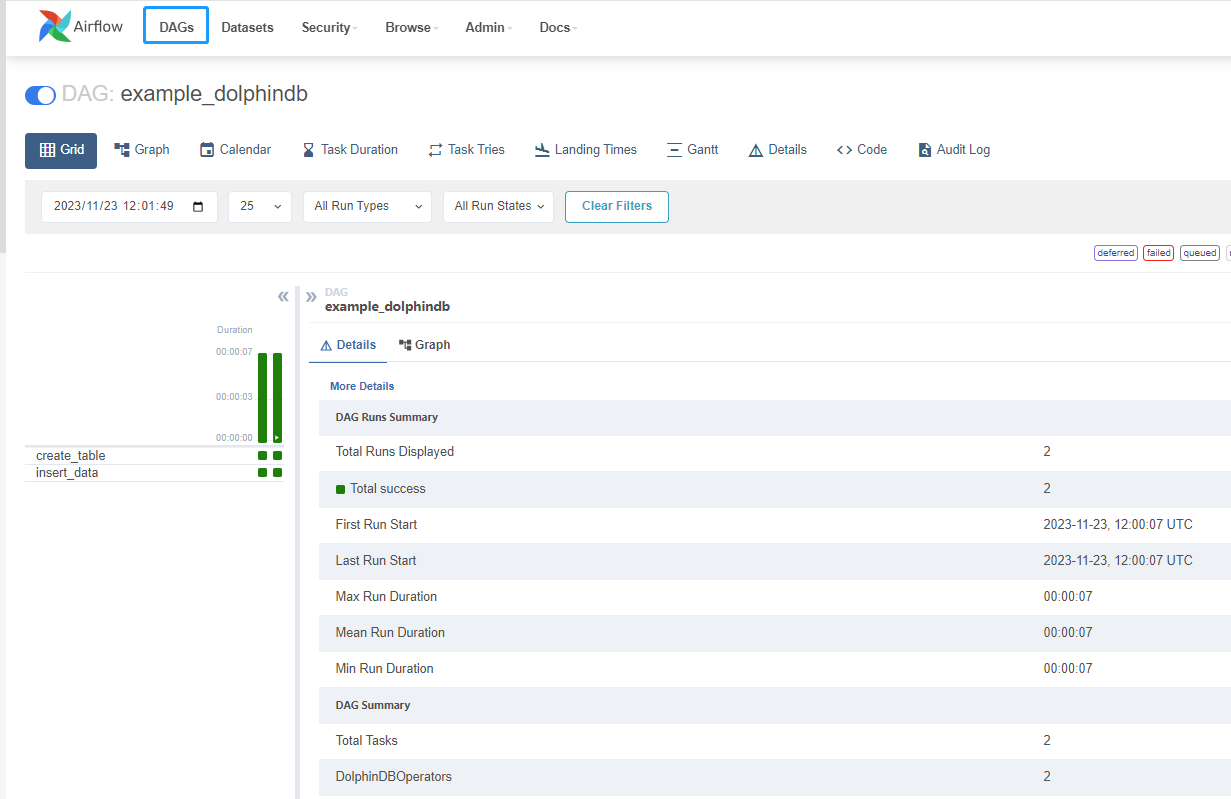

Once the connection is created, the example_dolphindb DAG will be able to interface with DolphinDB automatically using this connection. Try triggering the example DAG and inspecting the task logs to see the DolphinDB scripts executing using the configured connection.
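Before triggering the DAG, it can help to check that the connection URI carries the values you expect and that the DolphinDB port is reachable. The following is a minimal sketch using only the Python standard library. It approximates how a connection URI breaks down into fields and is not Airflow's own parser; the helper names parse_conn_uri and is_reachable are illustrative and are not part of the provider.

```python
import socket
from urllib.parse import unquote, urlsplit


def parse_conn_uri(uri: str) -> dict:
    """Split a connection URI into scheme, credentials, host, and port."""
    parts = urlsplit(uri)
    return {
        "conn_type": parts.scheme,
        "login": unquote(parts.username) if parts.username else None,
        "password": unquote(parts.password) if parts.password else None,
        "host": parts.hostname,
        "port": parts.port,
    }


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# The URI used in the startup commands of this README.
fields = parse_conn_uri("dolphindb://admin:123456@127.0.0.1:8848")
print(fields)
```

Calling is_reachable(fields["host"], fields["port"]) returns True only when something is listening on that address, so a False result suggests that the DolphinDB server is not running or that the port is wrong.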

Developer Guides

This guide covers key steps for developers working on and testing the Airflow DolphinDB provider:

Installing Apache Airflow

Refer to Airflow - Quick Start for further details.

# It is recommended to use the current project directory as the airflow working directory

cd /your/source/dir/airflow-provider-dolphindb

# Only absolute paths are accepted

export AIRFLOW_HOME=/your/source/dir/airflow-provider-dolphindb

# Install apache-airflow 2.6.3

AIRFLOW_VERSION=2.6.3

PYTHON_VERSION="$(python --version | cut -d " " -f 2 | cut -d "." -f 1-2)"

CONSTRAINT_URL="https://raw.githubusercontent.com/apache/airflow/constraints-${AIRFLOW_VERSION}/constraints-${PYTHON_VERSION}.txt"

pip install "apache-airflow==${AIRFLOW_VERSION}" --constraint "${CONSTRAINT_URL}"

You may need to install the kubernetes Python package to eliminate errors in Airflow routines:

pip install kubernetes

Installing airflow-provider-dolphindb for testing

To use the DolphinDB provider in test workflows, install the library in editable mode:

python -m pip install -e .

Refer to pip documentation for further details.
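To confirm that both packages are visible to the interpreter that will run the tests, you can query the installed distribution metadata. This is a minimal sketch, assuming Python 3.8 or later (for importlib.metadata) and assuming the distribution names match the names used in the pip commands in this README. The helper installed_version is illustrative and is not part of the provider.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name: str):
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


# Distribution names taken from the pip commands in this README.
for name in ("apache-airflow", "airflow-provider-dolphindb"):
    found = installed_version(name)
    print(f"{name}: {found or 'not installed'}")
```

If either line reports "not installed", the most likely cause is that pip and python point at different environments.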

Running Provider Tests

Validate the changes through the test suite:

cd /your/source/dir/airflow-provider-dolphindb

# Only absolute paths are accepted

export AIRFLOW_HOME=/your/source/dir/airflow-provider-dolphindb

export AIRFLOW_CONN_DOLPHINDB_DEFAULT="dolphindb://admin:123456@127.0.0.1:8848"

pytest

Building a Package

Bundle the provider into a package using:

python -m build
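By default, python -m build writes its output to a dist directory, normally a source archive (.tar.gz) and a wheel (.whl). The sketch below lists what was produced. It assumes the default output directory, and list_artifacts is an illustrative helper, not part of the provider.

```python
from pathlib import Path


def list_artifacts(dist_dir: str = "dist") -> list:
    """Return the names of wheels and source archives found in dist_dir."""
    directory = Path(dist_dir)
    if not directory.is_dir():
        # Nothing has been built yet, or a different output directory was used.
        return []
    return sorted(
        p.name
        for p in directory.iterdir()
        if p.is_file() and p.name.endswith((".whl", ".tar.gz"))
    )


for artifact in list_artifacts():
    print(artifact)
```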