This repository is an official PyTorch implementation of the paper "PLASTIC: Improving Input and Label Plasticity for Sample Efficient Reinforcement Learning" (NeurIPS 2023).

Authors: Hojoon Lee*, Hanseul Cho*, Hyunseung Kim*, Daehoon Gwak, Joonkee Kim, Jaegul Choo, Se-Young Yun, and Chulhee Yun.

To run the synthetic experiments, follow the instructions in Readme.md in the `synthetic` folder.

To run the Atari-100k experiments, follow the instructions in Readme.md in the `atari` folder.

To run the DMC-M experiments, follow the instructions in Readme.md in the `dmc` folder.


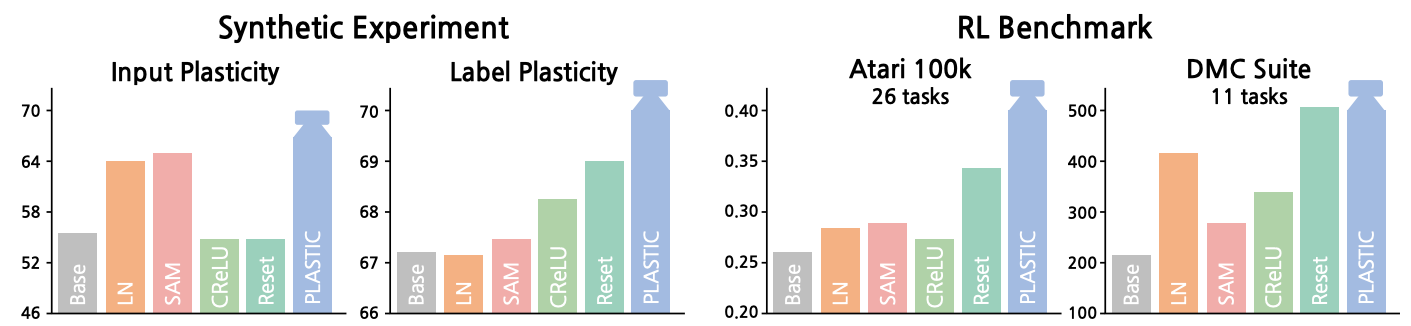

Left: Performance of Synthetic Experiments. Layer Normalization (LN) and Sharpness-Aware Minimization (SAM) considerably enhance input plasticity, while their effect on label plasticity is marginal. Conversely, Concatenated ReLU (CReLU) and periodic reinitialization (Reset) predominantly improve label plasticity, with only subtle benefits for input plasticity.


Right: Performance of RL Benchmarks. PLASTIC consistently outperforms individual methods, highlighting the synergistic benefits of its integrated approach.
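As a rough illustration of three of the components named in the caption (LN, CReLU, and Reset), here is a minimal PyTorch sketch. It is not the code from this repository: the class names `CReLU` and `SketchNet`, the method `reset_head`, the layer sizes, and the choice to reset only the final layer are all assumptions made for the example. SAM is left out because it changes the optimizer step rather than the network.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CReLU(nn.Module):
    """Concatenated ReLU: keeps both the positive and negative parts of x."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # The output has twice as many features as the input.
        return torch.cat([F.relu(x), F.relu(-x)], dim=-1)


class SketchNet(nn.Module):
    """Hypothetical small MLP combining LN and CReLU (illustration only)."""

    def __init__(self, in_dim: int = 8, hidden: int = 16, out_dim: int = 4):
        super().__init__()
        self.fc1 = nn.Linear(in_dim, hidden)
        self.norm = nn.LayerNorm(hidden)
        self.act = CReLU()
        # CReLU doubles the feature dimension, so the head takes 2 * hidden.
        self.head = nn.Linear(2 * hidden, out_dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.head(self.act(self.norm(self.fc1(x))))

    def reset_head(self) -> None:
        # Periodic reinitialization (Reset): re-draw the head's parameters.
        # Which layers to reset, and how often, is an assumption here.
        self.head.reset_parameters()


net = SketchNet()
out = net(torch.randn(2, 8))
print(out.shape)  # torch.Size([2, 4])
```

In a training loop, `net.reset_head()` would be called every fixed number of updates; the actual schedule and the set of layers that are reset should be taken from the experiment folders' own Readme files and configs.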

    @inproceedings{lee2023plastic,
      title={PLASTIC: Improving Input and Label Plasticity for Sample Efficient Reinforcement Learning},
      author={Lee, Hojoon and Cho, Hanseul and Kim, Hyunseung and Gwak, Daehoon and Kim, Joonkee and Choo, Jaegul and Yun, Se-Young and Yun, Chulhee},
      booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
      year={2023}
    }

For personal communication, please contact Hojoon Lee, Hanseul Cho, or Hyunseung Kim at {joonleesky, jhs4015, mynsng}@kaist.ac.kr.