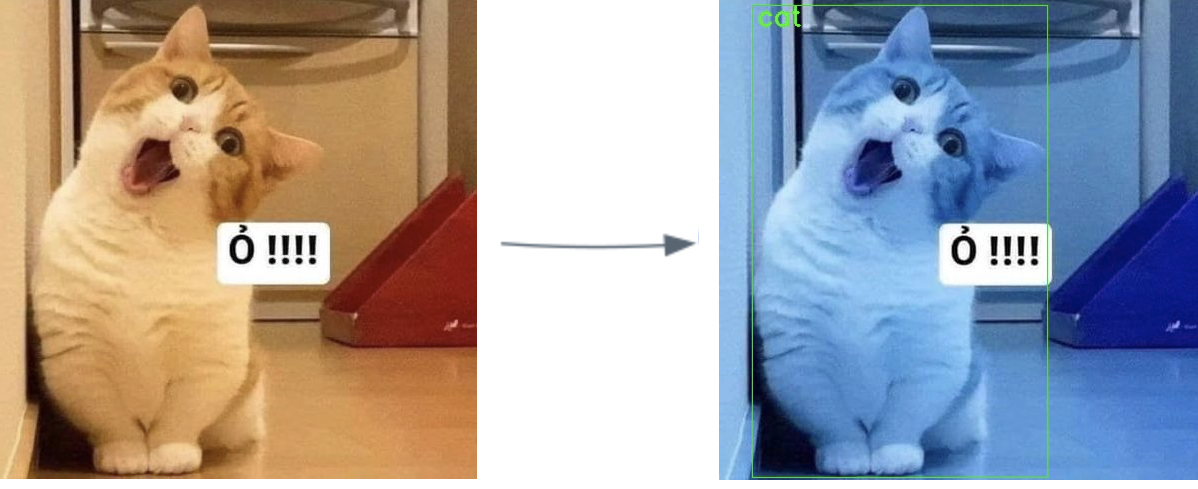

This repository is a full working example of serving an ML model with asynchronous Celery tasks and FastAPI. All code can be found in this repository. The ML model itself is not discussed in detail; it was trained with TensorFlow on the COCO dataset, which has 80 object classes such as cat, dog, and bird (see the COCO dataset for more detail).

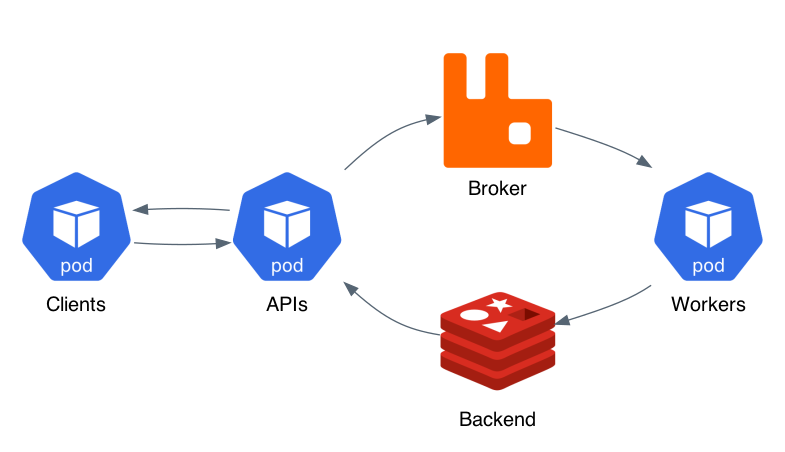

View System
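The submit-then-poll flow behind an asynchronous serving setup can be sketched with the standard library alone. This is not the repository's code: a `ThreadPoolExecutor` stands in for the Celery workers, a plain dict stands in for the result backend, and the names `detect_objects`, `submit`, `status`, and `wait_for` are hypothetical. In the real project, the two helper functions would roughly correspond to FastAPI endpoints that enqueue a Celery task and look up its state.

```python
import time
import uuid
from concurrent.futures import Future, ThreadPoolExecutor

# Stand-in for the Celery worker pool (assumption: the real project uses Celery).
_executor = ThreadPoolExecutor(max_workers=2)
# Stand-in for the result backend: task id -> Future.
_tasks: dict[str, Future] = {}


def detect_objects(image_bytes: bytes) -> list[dict]:
    """Hypothetical placeholder for the model call; returns a fixed fake detection."""
    time.sleep(0.1)  # simulate slow inference
    return [{"label": "cat", "score": 0.9, "size_bytes": len(image_bytes)}]


def submit(image_bytes: bytes) -> str:
    """What an upload endpoint would do: enqueue the job, return a task id at once."""
    task_id = uuid.uuid4().hex
    _tasks[task_id] = _executor.submit(detect_objects, image_bytes)
    return task_id


def status(task_id: str) -> dict:
    """What a status endpoint would do: report the state and, when done, the result."""
    future = _tasks.get(task_id)
    if future is None:
        return {"task_id": task_id, "state": "UNKNOWN"}
    if not future.done():
        return {"task_id": task_id, "state": "PENDING"}
    error = future.exception()
    if error is not None:
        return {"task_id": task_id, "state": "FAILURE", "error": str(error)}
    return {"task_id": task_id, "state": "SUCCESS", "result": future.result()}


def wait_for(task_id: str, timeout: float = 5.0, interval: float = 0.05) -> dict:
    """Client-side polling loop: ask for the status until the task leaves PENDING."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        current = status(task_id)
        if current["state"] != "PENDING":
            return current
        time.sleep(interval)
    return status(task_id)


if __name__ == "__main__":
    task_id = submit(b"fake image bytes")
    print(status(task_id))    # state right after submitting
    print(wait_for(task_id))  # state once the worker has finished
```

The point of the pattern is that the upload request returns immediately with a task id, so slow inference never blocks the web server; the client polls for the result instead.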

Requires Docker: https://www.docker.com/

git clone https://github.com/apot-group/ml-models-in-production.git

cd ml-models-in-production && docker-compose up

| Service | URL |
|---|---|
| API docs | http://localhost/api/docs |
| Demo Web | http://localhost |
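Once `docker-compose up` has finished, a quick way to confirm that both URLs respond is a small standard-library check like the one below. It is only a sketch: the helper name `http_status` and the 3-second timeout are assumptions, and it tests reachability only, not whether the model works.

```python
import urllib.error
import urllib.request

# URLs taken from the service table above.
SERVICES = {
    "API docs": "http://localhost/api/docs",
    "Demo Web": "http://localhost",
}


def http_status(url: str, timeout: float = 3.0):
    """Return the HTTP status code for url, or None if nothing answers."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with an error status
    except (urllib.error.URLError, OSError):
        return None  # connection refused, timeout, DNS failure, ...


if __name__ == "__main__":
    for name, url in SERVICES.items():
        code = http_status(url)
        print(f"{name:10s} {url:30s} {'unreachable' if code is None else code}")
```

If a service shows as unreachable, the containers may still be starting; rerun the check after a few seconds.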

Go to the demo web page at http://localhost and test it with your own picture.

- Email-1: duynnguyenngoc@hotmail.com - Duy Nguyen ❤️ ❤️ ❤️