This is the PyTorch implementation of the paper: BiST: Bi-directional Spatio-Temporal Reasoning for Video-Grounded Dialogues. Hung Le, Doyen Sahoo, Nancy F. Chen, Steven C.H. Hoi. EMNLP 2020. (arXiv)

This code was written using PyTorch 1.0.1. If you find the paper or the source code useful for your projects, please cite the following BibTeX entry:

    @inproceedings{le-etal-2020-bist,
        title = "{B}i{ST}: Bi-directional Spatio-Temporal Reasoning for Video-Grounded Dialogues",
        author = "Le, Hung and Sahoo, Doyen and Chen, Nancy and Hoi, Steven C.H.",
        booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP)",
        month = nov,
        year = "2020",
        address = "Online",
        publisher = "Association for Computational Linguistics",
        url = "https://www.aclweb.org/anthology/2020.emnlp-main.145",
        doi = "10.18653/v1/2020.emnlp-main.145",
        pages = "1846--1859"
    }

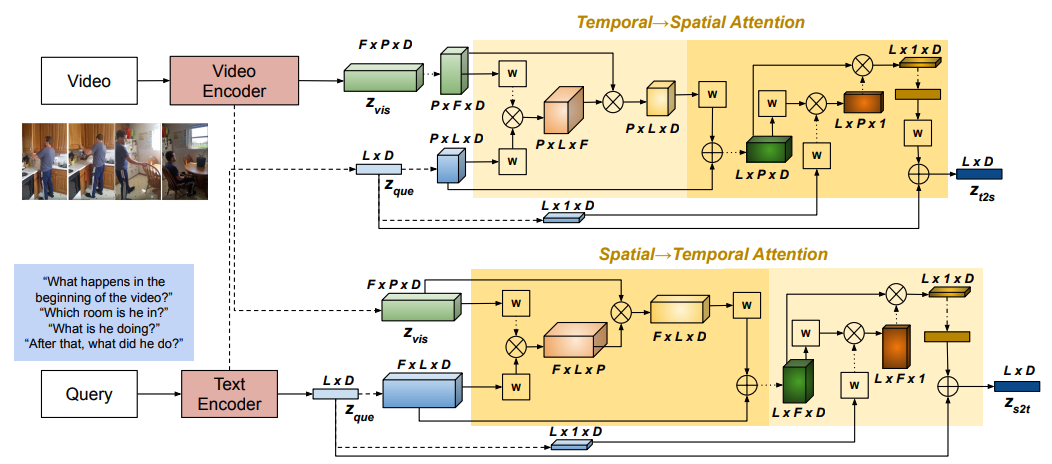

Video-grounded dialogues are very challenging due to (i) the complexity of videos which contain both spatial and temporal variations, and (ii) the complexity of user utterances which query different segments and/or different objects in videos over multiple dialogue turns. However, existing approaches to video-grounded dialogues often focus on superficial temporal-level visual cues, but neglect more fine-grained spatial signals from videos. To address this drawback, we proposed Bi-directional Spatio-Temporal Learning (BiST), a vision-language neural framework for high-resolution queries in videos based on textual cues. Specifically, our approach not only exploits both spatial and temporal-level information, but also learns dynamic information diffusion between the two feature spaces through spatial-to-temporal and temporal-to-spatial reasoning. The bidirectional strategy aims to tackle the evolving semantics of user queries in the dialogue setting. The retrieved visual cues are used as contextual information to construct relevant responses to the users. Our empirical results and comprehensive qualitative analysis show that BiST achieves competitive performance and generates reasonable responses on a large-scale AVSD benchmark. We also adapt our BiST models to the Video QA setting, and substantially outperform prior approaches on the TGIF-QA benchmark.

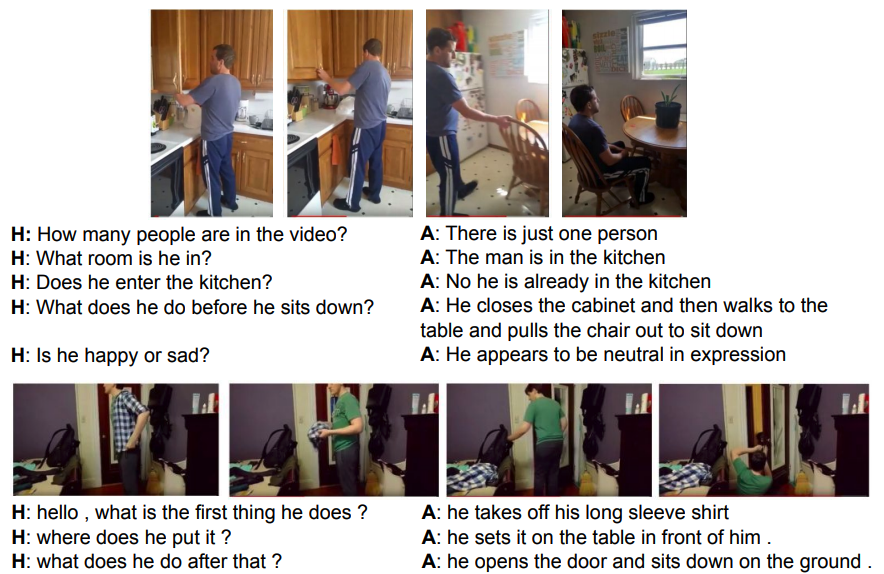

Examples of video-grounded dialogues from the benchmark datasets of the Audio-Visual Scene Aware Dialogues (AVSD) challenge. H: human, A: the dialogue agent.

Our bidirectional approach models the dependencies between text and vision in two reasoning directions: spatial→temporal and temporal→spatial. ⊗ and ⊕ denote the dot-product operation and element-wise summation, respectively.
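The two reasoning directions can be illustrated with a minimal, dependency-free sketch. It is not the model's implementation: it omits learned projections, multi-head attention, and layer stacking, and it assumes that the two directions are combined by element-wise summation. The function names (`attend`, `spatial_to_temporal`, `temporal_to_spatial`, `bidirectional`) and the `video[t][r]` layout are hypothetical and chosen only for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend(query, vectors):
    """Dot-product attention: average `vectors` weighted by softmax(query . v)."""
    weights = softmax([dot(query, v) for v in vectors])
    return [sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(len(query))]


def spatial_to_temporal(query, video):
    """video[t][r] is the feature vector of region r at time step t (assumed layout).

    Attend over regions within each time step, then over time steps.
    """
    per_step = [attend(query, regions) for regions in video]
    return attend(query, per_step)


def temporal_to_spatial(query, video):
    """Attend over time steps for each region, then over regions."""
    nb_regions = len(video[0])
    per_region = [attend(query, [step[r] for step in video])
                  for r in range(nb_regions)]
    return attend(query, per_region)


def bidirectional(query, video):
    """Combine both directions by element-wise summation (an assumption)."""
    s2t = spatial_to_temporal(query, video)
    t2s = temporal_to_spatial(query, video)
    return [a + b for a, b in zip(s2t, t2s)]
```

In this sketch the text query drives both attention steps in each direction; the only difference between the directions is the order in which the spatial and temporal axes are reduced.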

We use the AVSD@DSTC7 benchmark. Refer to the official benchmark repo here to download the dataset. Alternatively, you can refer here for download links of dialogue-only data.

To use the spatio-temporal features, we extracted the visual features from a published pretrained ResNeXt-101 model. The extraction code is slightly changed to obtain the features right before average pooling along spatial regions. These extracted visual features for all videos used in the AVSD benchmark can be downloaded here.

Alternatively, you can download the Charades videos (train+validation and test videos, scaled to 480p) and extract the features yourself. Please refer to our modified feature extraction code under the video-classification-3d-cnn-pytorch folder. An example running script is in the run.sh file in this folder. Features are extracted in batches of videos, each batch specified by the start and end index of the video files.
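As an illustration of the start/end indexing, the sketch below splits a list of video files into index ranges that could be passed to separate extraction runs. The helper `batch_ranges` is hypothetical and not part of the repository, and it assumes the end index is exclusive; check run.sh for the convention actually used.

```python
def batch_ranges(nb_videos, batch_size):
    """Return (start, end) index pairs covering all videos.

    Assumption: `end` is exclusive, as in Python slicing.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [(start, min(start + batch_size, nb_videos))
            for start in range(0, nb_videos, batch_size)]


# Example: 10 video files processed 4 at a time.
for start, end in batch_ranges(10, 4):
    print(f"extract videos with index {start} up to (not including) {end}")
```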

For audio features, we reused the public features accompanying the AVSD benchmark (please refer to the benchmark repo).

We created scripts/exec.sh to prepare the evaluation code, train models, generate dialogue responses, and evaluate the generated responses with automatic metrics. You can run this file directly; it includes the following example parameter settings:

| Parameter | Description | Values |
|---|---|---|
| device | specifies which GPU to use | e.g. 0, 1, 2, ... |
| stage | selects which process to run | 1: training stage, 2: generating stage, 3: evaluating stage |
| test_mode | debugging mode; set to true to run with a small subset of data | true/false |
| t2s | set to 1 to use the temporal-to-spatial attention operation | 0, 1 |
| s2t | set to 1 to use the spatial-to-temporal attention operation | 0, 1 |
| nb_workers | number of workers used to preprocess data and create batches | e.g. 4 |
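The allowed values in the table can be summarized as a small validation sketch. The helper `check_settings` is hypothetical and not part of the repository; it only mirrors the table and makes no claim about how scripts/exec.sh parses its arguments. The lower bound on `nb_workers` is an assumption.

```python
# Hypothetical helper: mirrors the parameter table, not the repository's code.
STAGES = {1: "training", 2: "generating", 3: "evaluating"}


def check_settings(device, stage, test_mode, t2s, s2t, nb_workers):
    """Validate one combination of settings and return a readable summary."""
    if not isinstance(device, int) or device < 0:
        raise ValueError("device must be a non-negative GPU index")
    if stage not in STAGES:
        raise ValueError("stage must be 1, 2, or 3")
    if not isinstance(test_mode, bool):
        raise ValueError("test_mode must be true or false")
    if t2s not in (0, 1) or s2t not in (0, 1):
        raise ValueError("t2s and s2t must be 0 or 1")
    # Assumption: any non-negative worker count is acceptable.
    if not isinstance(nb_workers, int) or nb_workers < 0:
        raise ValueError("nb_workers must be a non-negative integer")

    directions = []
    if t2s == 1:
        directions.append("temporal-to-spatial")
    if s2t == 1:
        directions.append("spatial-to-temporal")
    return {
        "device": device,
        "stage": STAGES[stage],
        "test_mode": test_mode,
        "directions": directions,
        "nb_workers": nb_workers,
    }


# Example: train on GPU 0 with both attention directions on a small data subset.
print(check_settings(device=0, stage=1, test_mode=True, t2s=1, s2t=1, nb_workers=4))
```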

An example of how to run scripts/exec.sh is shown in scripts/run.sh. Before running, please update data_root in scripts/exec.sh to the local directory that holds your dialogue data and video features.

Other model parameters can also be set, either by editing the script manually or by passing them as dynamic input, including but not limited to:

| Parameter | Description | Values |
|---|---|---|
| data_root | directory of the dialogue data and the extracted visual/audio video features | data/dstc7/ |
| include_caption | type of caption to use; 'none' if captions are not used as input | caption, summary, or none |
| d_model | dimension of the word embeddings and of the transformer layers | e |
| nb_blocks | number of response decoding attention layers | e.g. 3 |
| nb_venc_blocks | number of visual reasoning attention layers | e.g. 3 |
| nb_cenc_blocks | number of caption reasoning attention layers | e.g. 3 |
| nb_aenc_blocks | number of audio reasoning attention layers | e.g. 3 |

Refer to the configs folder for definitions of the other parameters that can be set through scripts/exec.sh.

During training, the model with the best validation result is saved. The model is evaluated using the losses from response generation as well as question auto-encoding generation.
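The selection rule can be sketched as follows. This is an illustration only: it assumes the two validation losses are added with equal weight and that a lower total is better, neither of which is stated here. The function `select_best_epoch` is a hypothetical name, not part of the repository.

```python
def select_best_epoch(val_losses):
    """Pick the epoch with the lowest combined validation loss.

    val_losses: list of (response_loss, question_autoencoding_loss) tuples,
    one per epoch. Assumption: the two losses are summed with equal weight.
    Returns (best_epoch, best_total) with epochs counted from 1.
    """
    best_epoch, best_total = None, float("inf")
    for epoch, (response_loss, autoencoding_loss) in enumerate(val_losses, start=1):
        total = response_loss + autoencoding_loss
        if total < best_total:
            # In a real training loop, this is where the checkpoint would be saved.
            best_epoch, best_total = epoch, total
    return best_epoch, best_total
```

Ties keep the earlier epoch because the comparison is strict.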

The model output, parameters, vocabulary, and training and validation logs are saved to the folder specified by the expdir parameter.

Examples of pretrained BiST models trained with different parameter settings through scripts/run.sh can be downloaded here. Unzip the downloaded file and update the expdir parameter in the test command in scripts/test.sh to the corresponding unzipped directory. Using the pretrained models, the test script produces the following results:

| Model | Epochs | Link | Visual | Audio | Caption | BLEU1 | BLEU2 | BLEU3 | BLEU4 | METEOR | ROUGE-L | CIDEr |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| visual-audio-text | 50 | Download | ResNeXt | VGGish | Summary | 0.752 | 0.619 | 0.510 | 0.423 | 0.283 | 0.581 | 1.193 |
| visual-text | 30 | Download | ResNeXt | No | Summary | 0.755 | 0.623 | 0.517 | 0.432 | 0.284 | 0.585 | 1.194 |
| visual-text | 50 | Download | ResNeXt | No | Summary | 0.755 | 0.620 | 0.512 | 0.426 | 0.285 | 0.585 | 1.201 |

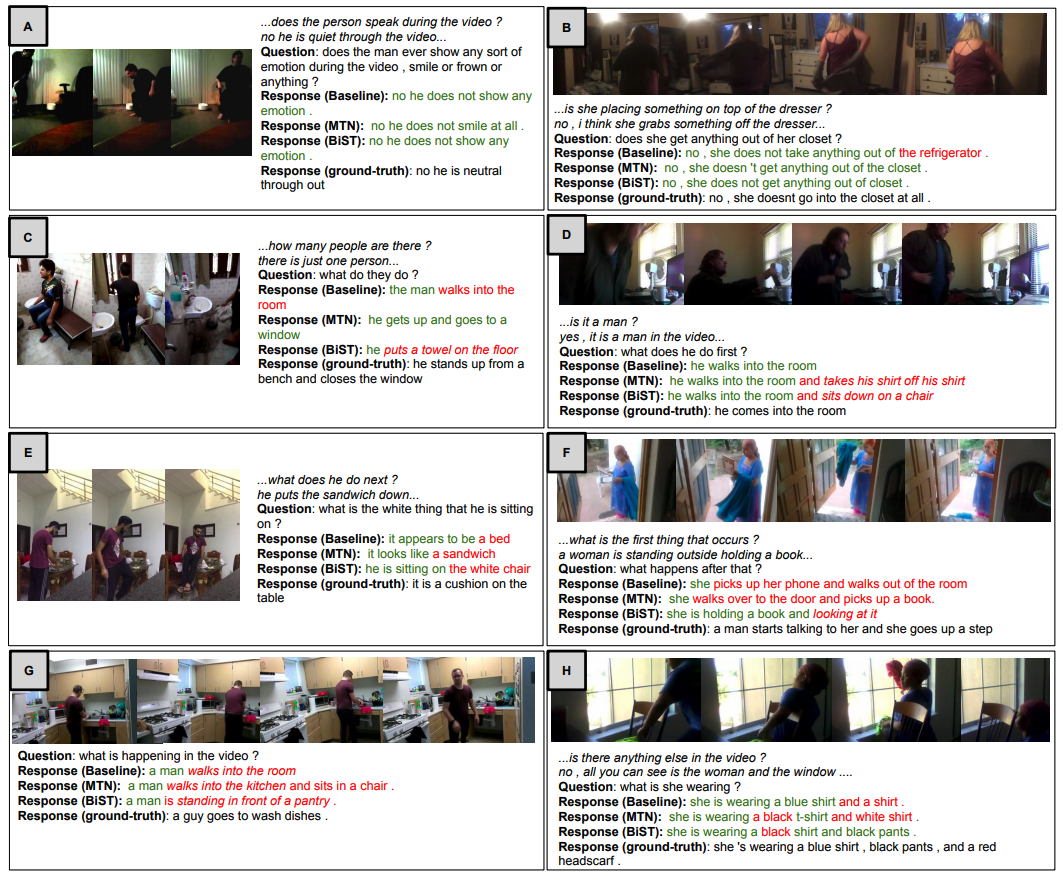

Comparison of dialogue response outputs of BiST against the baseline models: Baseline (Hori et al., 2019) and MTN (Le et al., 2019b). Parts of the outputs that match and do not match the ground truth are highlighted in green and red respectively.

BiST can be adapted to Video-QA tasks such as TGIF-QA. Please refer to this repo branch for TGIF-QA experiment details.