Big data smart alarm by SQL

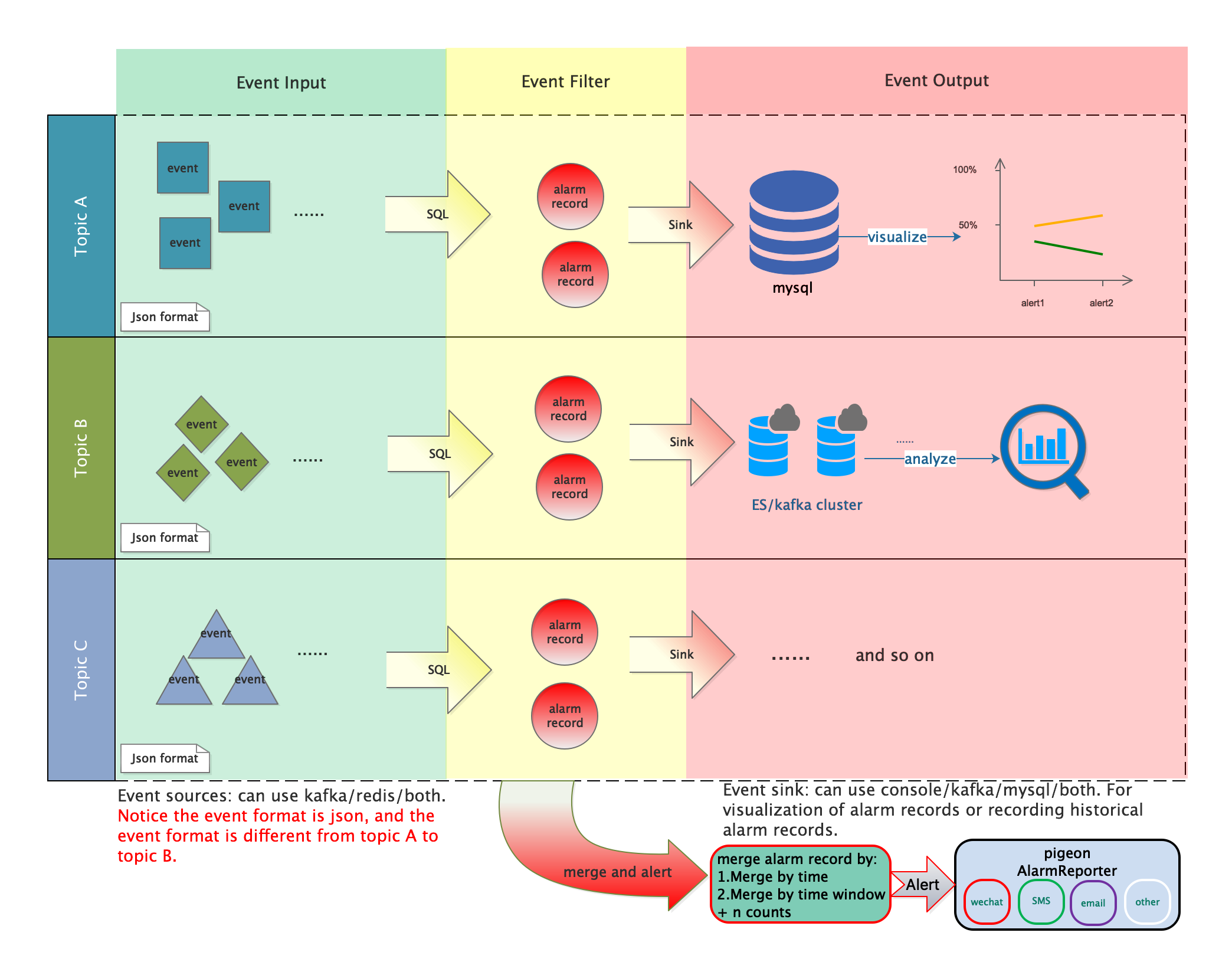

SQLAlarm is an alarm system for time-stamped events, built on Spark Structured Streaming. It provides the following capabilities:

- Event filtering through SQL

- Alarm records noise reduction

- Alarm records dispatch in specified channels

The overall framework design is as follows:

Introduction to the modules:

- sa-admin: web console and REST API for SQLAlarm

- sa-core: core module of SQLAlarm (including source, filter, and sink (alert))

You can use bin/start-local.sh to start a local SQLAlarm server from IntelliJ IDEA. After packaging the jar, we recommend running it on a Spark cluster in yarn-client or local mode.

Minimal requirements for a SQLAlarm server are:

- Java 1.8+

- Spark 2.4.x

- Redis (Redis 5.0 or later if you use Redis Stream)

- Kafka (not needed if you only use Redis Stream for event alerts)

For example, the following command starts a SQLAlarm server that consumes Kafka event messages to drive the alarm flow:

spark-submit --class dt.sql.alarm.SQLAlarmBoot \

--driver-memory 2g \

--master local[4] \

--name SQLALARM \

--conf "spark.kryoserializer.buffer=256k" \

--conf "spark.kryoserializer.buffer.max=1024m" \

--conf "spark.serializer=org.apache.spark.serializer.KryoSerializer" \

--conf "spark.redis.host=127.0.0.1" \

--conf "spark.redis.port=6379" \

--conf "spark.redis.db=4" \

sa-core-1.0-SNAPSHOT.jar \

-sqlalarm.name sqlalarm \

-sqlalarm.sources kafka \

-sqlalarm.input.kafka.topic sqlalarm_event \

-sqlalarm.input.kafka.subscribe.topic.pattern 1 \

-sqlalarm.input.kafka.bootstrap.servers "127.0.0.1:9092" \

-sqlalarm.sinks console

notes: the above simple example uses Kafka as the message center, filters alarm events, and outputs them to the console.

- Package the core jar: sa-core-1.0-SNAPSHOT.jar.

- Deploy the jar package to a Spark cluster.

- Add an alarm rule (stored in Redis):

# hset key uuid value

# key: sqlalarm_rule:${sourceType}:${topic}

HSET "sqlalarm_rule:kafka:sqlalarm_event" "uuid00000001"

{

"item_id":"uuid00000001",

"platform":"alarm",

"title":"sql alarm test",

"source":{

"type":"kafka",

"topic":"sqlalarm_event"

},

"filter":{

"table":"fail_job",

"structure":[

{

"name":"job_name",

"type":"string",

"xpath":"$.job_name"

},

{

"name":"job_owner",

"type":"string",

"xpath":"$.job_owner"

},

{

"name":"job_stat",

"type":"string",

"xpath":"$.job_stat"

},

{

"name":"job_time",

"type":"string",

"xpath":"$.job_time"

}

],

"sql":"select job_name as job_id,job_stat,job_time as event_time,'job failed' as message, map('job_owner',job_owner) as context from fail_job where job_stat='Fail'"

}

}

- Wait for the event center (Kafka or Redis) to produce alarm events. To produce them manually:

1. Create the topic if it does not exist:

kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic sqlalarm_event

2. Produce events:

kafka-console-producer.sh --broker-list localhost:9092 --topic sqlalarm_event

{

"job_name":"sqlalarm_job_000",

"job_owner":"bebee4java",

"job_stat":"Succeed",

"job_time":"2019-12-26 12:00:00"

}

{

"job_name":"sqlalarm_job_001",

"job_owner":"bebee4java",

"job_stat":"Fail",

"job_time":"2019-12-26 12:00:00"

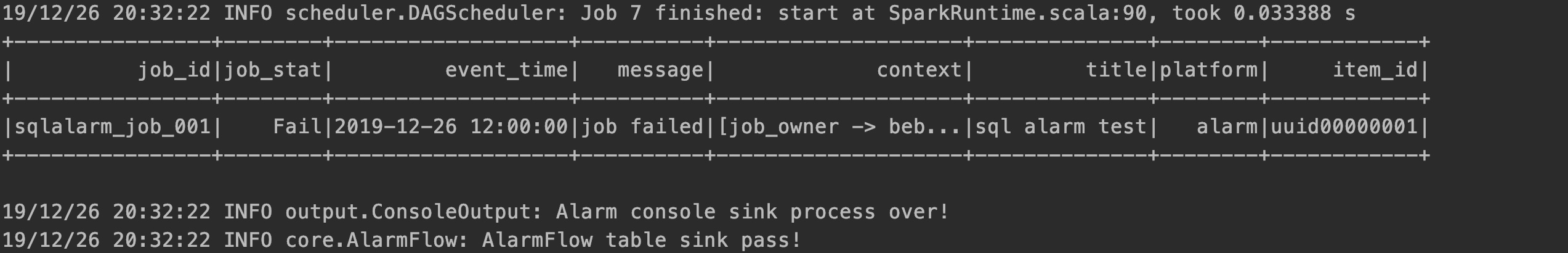

}- If use console sink, you will get following info in the console(Observable the fail events are filtered out and succeed events are ignored):

notes: steps 2 and 3 (deploying the jar and adding the alarm rule) can be done in either order, and the alarm rule is not required when starting the SQLAlarm server.
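To make the effect of the example rule concrete, here is a minimal Python sketch that mimics what the filter does to the two sample events. It is only an illustration, not how SQLAlarm is implemented: the real system evaluates the rule's SQL with Spark, whereas the hypothetical helpers `extract_row` and `apply_rule` below hand-code the same projection and the `where job_stat='Fail'` condition, and they only handle the simple `$.field` paths used in the example rule.

```python
import json

# Field definitions copied from the "structure" section of the example rule.
STRUCTURE = [
    {"name": "job_name", "type": "string", "xpath": "$.job_name"},
    {"name": "job_owner", "type": "string", "xpath": "$.job_owner"},
    {"name": "job_stat", "type": "string", "xpath": "$.job_stat"},
    {"name": "job_time", "type": "string", "xpath": "$.job_time"},
]


def extract_row(raw_event, structure=STRUCTURE):
    """Parse a JSON event and pull out every field listed in the structure.

    Assumption: only top-level paths of the form "$.field" are supported.
    """
    event = json.loads(raw_event)
    row = {}
    for field in structure:
        path = field["xpath"]
        if not path.startswith("$."):
            raise ValueError("unsupported path: " + path)
        row[field["name"]] = event.get(path[2:])
    return row


def apply_rule(row):
    """Hand-written stand-in for the example rule's SQL.

    Returns the projected alarm record, or None if the row does not match.
    """
    if row["job_stat"] != "Fail":
        return None
    return {
        "job_id": row["job_name"],
        "job_stat": row["job_stat"],
        "event_time": row["job_time"],
        "message": "job failed",
        "context": {"job_owner": row["job_owner"]},
    }


events = [
    '{"job_name":"sqlalarm_job_000","job_owner":"bebee4java",'
    '"job_stat":"Succeed","job_time":"2019-12-26 12:00:00"}',
    '{"job_name":"sqlalarm_job_001","job_owner":"bebee4java",'
    '"job_stat":"Fail","job_time":"2019-12-26 12:00:00"}',
]

# Keep only the events that the rule turns into alarm records.
alarms = [a for a in (apply_rule(extract_row(e)) for e in events) if a is not None]
for alarm in alarms:
    print(json.dumps(alarm))
```

Only `sqlalarm_job_001` survives the filter, which matches the behavior described for the console sink. The exact layout SQLAlarm prints to the console may differ from this sketch.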

- Supports connecting multiple data sources as the event center (Kafka or a stream-enabled Redis source); it is extensible, so you can add a custom data source simply by extending the class BaseInput

- Supports connecting multiple data topics with different structures

- Supports output of alarm events to multiple sinks (kafka/jdbc/es etc.); it is extensible, so you can add a custom data sink simply by extending the class BaseOutput

- Supports alarm filtering for events through SQL

- Supports multiple policies (time merge, or time window + N counts merge) for alarm noise reduction

- Supports alarm rules and policies taking effect dynamically without restarting the server

- Supports adding data source topics dynamically (if your subscription mode is subscribePattern)

- Supports sending alarm records through specific channels
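The noise reduction policies in the list above can be pictured with a small Python sketch. The list does not spell out the exact semantics of each policy, so this is one plausible reading, not SQLAlarm's implementation: "time merge" is taken to mean collapsing alarms with the same `job_id` that fall within a window into one record, and "time window + N counts merge" is taken to mean keeping a merged record only once it holds at least N alarms. The function names, the grouping key, and the sample data are all hypothetical.

```python
from datetime import datetime, timedelta


def parse_time(value):
    """Parse timestamps in the format used by the sample events."""
    return datetime.strptime(value, "%Y-%m-%d %H:%M:%S")


def merge_by_time(alarms, window_seconds):
    """Collapse alarms with the same job_id into windows of window_seconds.

    A window opens at the first alarm for a job_id; a later alarm for that
    job_id joins it if it arrives before the window has elapsed, otherwise
    it opens a new window.
    """
    window = timedelta(seconds=window_seconds)
    merged = []
    open_groups = {}  # job_id -> merged record that is still accumulating
    for alarm in sorted(alarms, key=lambda a: parse_time(a["event_time"])):
        when = parse_time(alarm["event_time"])
        group = open_groups.get(alarm["job_id"])
        if group is None or when - group["first_time"] >= window:
            group = {
                "job_id": alarm["job_id"],
                "first_time": when,
                "last_time": when,
                "count": 0,
            }
            open_groups[alarm["job_id"]] = group
            merged.append(group)
        group["last_time"] = when
        group["count"] += 1
    return merged


def merge_by_time_and_count(alarms, window_seconds, min_count):
    """Keep only merged records that collected at least min_count alarms."""
    groups = merge_by_time(alarms, window_seconds)
    return [g for g in groups if g["count"] >= min_count]


sample_alarms = [
    {"job_id": "sqlalarm_job_001", "event_time": "2019-12-26 12:00:00"},
    {"job_id": "sqlalarm_job_002", "event_time": "2019-12-26 12:00:10"},
    {"job_id": "sqlalarm_job_001", "event_time": "2019-12-26 12:00:20"},
    {"job_id": "sqlalarm_job_001", "event_time": "2019-12-26 12:00:50"},
    {"job_id": "sqlalarm_job_001", "event_time": "2019-12-26 12:02:00"},
]

time_merged = merge_by_time(sample_alarms, window_seconds=60)
count_merged = merge_by_time_and_count(sample_alarms, window_seconds=60, min_count=3)
```

With a 60-second window the five raw alarms shrink to three merged records, and requiring at least three alarms per window leaves a single record for `sqlalarm_job_001`.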

SQLAlarm doesn't generate metrics events itself; it only obtains metrics events from the message center and analyzes them. However, you can collect and report metrics events with another project called metrics-exporter, which fills this gap.

In this way, a complete alarm process looks like:

metrics-exporter -> sqlalarm -> alarm-pigeon
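Because SQLAlarm only consumes events, any producer that writes JSON events to the message center can stand in for the first stage while testing. The sketch below is a hypothetical stand-in (it is not part of metrics-exporter): it serializes events shaped like the job examples to compact single-line JSON, which matters when piping into `kafka-console-producer.sh`, since that tool sends each input line as a separate message.

```python
import json
import sys


def to_message(event):
    """Serialize one event as compact JSON on a single line."""
    return json.dumps(event, separators=(",", ":"))


def write_events(events, out=sys.stdout):
    """Write one JSON message per line to the given stream."""
    for event in events:
        out.write(to_message(event) + "\n")


# Sample events with the same fields as the job events used earlier.
sample_events = [
    {
        "job_name": "sqlalarm_job_000",
        "job_owner": "bebee4java",
        "job_stat": "Succeed",
        "job_time": "2019-12-26 12:00:00",
    },
    {
        "job_name": "sqlalarm_job_001",
        "job_owner": "bebee4java",
        "job_stat": "Fail",
        "job_time": "2019-12-26 12:00:00",
    },
]

if __name__ == "__main__":
    write_events(sample_events)
```

Saved under a name of your choice (for example `produce_events.py`, a hypothetical file name), the output can be piped straight into the console producer: `python produce_events.py | kafka-console-producer.sh --broker-list localhost:9092 --topic sqlalarm_event`.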

The documentation of SQLAlarm is located on the issues page: SQLAlarm issues. It contains a lot of information, such as configuration and usage tutorials. If you have any questions, please feel free to open an issue.

This is an active open-source project. We are always open to people who want to use the system or contribute to it. Contact us if you are looking for implementation tasks that fit your skills.