I have written a blog post describing this project in more detail; check out "How to generate music clips with AI" to learn more!

With this project, you can use AI to generate music tracks and video clips. Provide some information on how you would like the music and videos to be, and the code will do the rest.

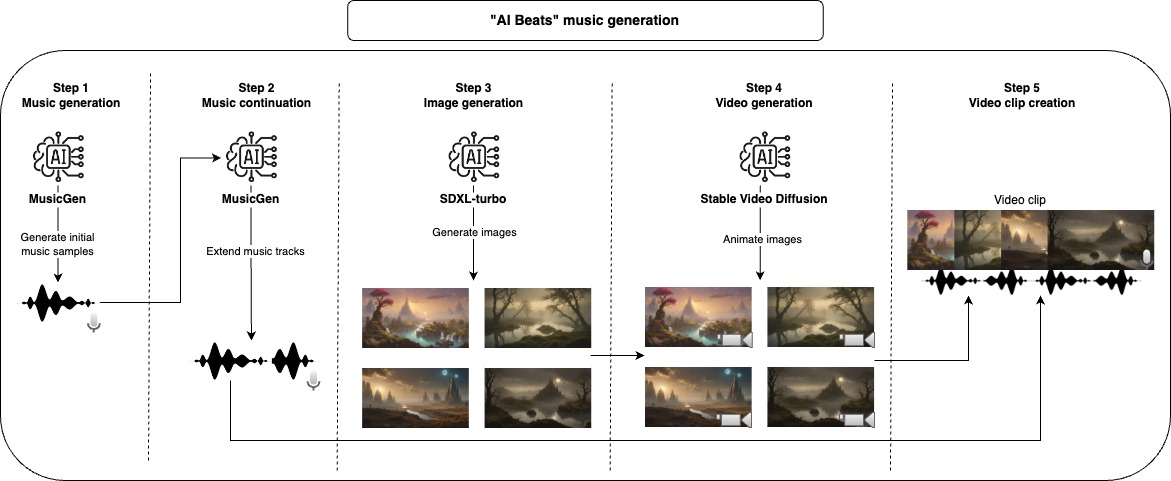

First, we use a generative model to create music samples. The default model can only generate up to 30 seconds of music, so a second step extends each track. Once the audio part is done, we generate the video: a Stable Diffusion model creates the images, and another generative model gives them a bit of motion and animation. To compose the final video clip, each generated music track is joined with as many animated images as necessary to match its length.
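
The "as many animated images as necessary" rule comes down to a ceiling division. The sketch below is only an illustration of that arithmetic, not the project's actual code: the helper names and the 14-frame clip length are hypothetical, and only the 6 fps and 60-second values come from the default config.

```python
import math


def clip_duration(n_frames: int, fps: int) -> float:
    """Duration in seconds of one animated clip."""
    if n_frames <= 0 or fps <= 0:
        raise ValueError("n_frames and fps must be positive")
    return n_frames / fps


def clips_needed(music_seconds: float, seconds_per_clip: float) -> int:
    """Smallest number of clips whose total length covers the music track."""
    if music_seconds <= 0 or seconds_per_clip <= 0:
        raise ValueError("durations must be positive")
    return math.ceil(music_seconds / seconds_per_clip)


# Hypothetical example: 14-frame animations played back at 6 fps
# under a 60-second music track.
one_clip = clip_duration(n_frames=14, fps=6)
print(clips_needed(music_seconds=60, seconds_per_clip=one_clip))
```

Rounding up means the video can run slightly longer than the music, so the last clip would need to be trimmed when the two are joined.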

All of these steps generate intermediate files that you can inspect. Manually removing the ones you don't like will improve the results.

The recommended way to use this repository is with Docker, but you can also use a custom venv; just make sure to install all dependencies.

Note: update the device param to maximize performance, but be aware that some models might not work with every device option (cpu, cuda, mps).
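
If you are unsure which value to use for a device param, a small check like the one below can help. It is a minimal sketch that assumes the models run on PyTorch; `pick_device` is a hypothetical helper and is not part of this repository. It only reports whether a backend is available and cannot tell whether a given model actually works on it.

```python
def pick_device(preferred: str = "cuda") -> str:
    """Return `preferred` if PyTorch reports it as available, else "cpu"."""
    try:
        import torch
    except ImportError:
        # Without PyTorch installed, nothing can be probed: fall back to CPU.
        return "cpu"

    if preferred == "cuda" and torch.cuda.is_available():
        return "cuda"

    # Older PyTorch builds have no `mps` backend module, so guard the lookup.
    mps_backend = getattr(torch.backends, "mps", None)
    if preferred == "mps" and mps_backend is not None and mps_backend.is_available():
        return "mps"

    return "cpu"


print(pick_device("mps"))
```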

- Music generation: Generate the initial music tracks

- Music continuation: Extend the initial music tracks to a longer duration

- Image generation: Create the images that will be used to fill the video clip

- Video generation: Generate animations from the images to compose video clips

- Video clip creation: Join multiple video clips together to accompany the music tracks

    project_dir: beats
    project_name: lofi
    seed: 42

    music:
      prompt: "lo-fi music with a relaxing slow melody"
      model_id: facebook/musicgen-small
      device: cpu
      n_music: 5
      music_duration: 60
      initial_music_tokens: 1050
      max_continuation_duration: 20
      prompt_music_duration: 10

    image:
      prompt: "Mystical Landscape"
      prompt_modifiers:
        - "concept art, HQ, 4k"
        - "epic scene, cinematic, sci fi cinematic look, intense dramatic scene"
        - "digital art, hyperrealistic, fantasy, dark art"
        - "digital art, hyperrealistic, sense of cosmic wonder"
        - "mystical and ethereal atmosphere, photo taken with a wide-angle lens"
      model_id: stabilityai/sdxl-turbo
      device: mps
      n_images: 5
      inference_steps: 3
      height: 576
      width: 1024

    video:
      model_id: stabilityai/stable-video-diffusion-img2vid
      device: cpu
      n_continuations: 2
      loop_video: true
      video_fps: 6
      decode_chunk_size: 8
      motion_bucket_id: 127
      noise_aug_strength: 0.1

    audio_clip:
      n_music_loops: 1
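
How the image prompt and its prompt modifiers are combined is not described here. One plausible reading, sketched below as an assumption only, is that each image gets the base prompt plus one modifier, cycling through the list when there are more images than modifiers. `build_image_prompts` is a hypothetical helper, not the project's function.

```python
from itertools import cycle, islice


def build_image_prompts(prompt: str, modifiers: list[str], n_images: int) -> list[str]:
    """Pair the base prompt with one modifier per image (assumed behaviour)."""
    if n_images < 0:
        raise ValueError("n_images must not be negative")
    if not modifiers:
        # No modifiers configured: every image uses the plain prompt.
        return [prompt] * n_images
    # Cycle through the modifiers so any n_images value is covered.
    return [f"{prompt}, {modifier}" for modifier in islice(cycle(modifiers), n_images)]


prompts = build_image_prompts(
    prompt="Mystical Landscape",
    modifiers=["concept art, HQ, 4k", "digital art, hyperrealistic, fantasy, dark art"],
    n_images=3,
)
for p in prompts:
    print(p)
```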

- project_dir: Folder that will host all your projects
- project_name: Project name and main folder
- seed: Seed used to control the randomness of the models
- music
  - prompt: Text prompt used to generate the music
  - model_id: Model used to generate and extend the music tracks
  - device: Device used by the model, usually one of (cpu, cuda, mps)
  - n_music: Number of music tracks that will be created
  - music_duration: Duration of the final music track
  - initial_music_tokens: Length of the initial music (in tokens)
  - max_continuation_duration: Maximum length of each extended music segment
  - prompt_music_duration: Length of the base music used to create the extension
- image
  - prompt: Text prompt used to generate the images
  - prompt_modifiers: Prompt modifiers used to change the image style
  - model_id: Model used to create the images
  - device: Device used by the model, usually one of (cpu, cuda, mps)
  - n_images: Number of images that will be created
  - inference_steps: Number of inference steps for the diffusion model
  - height: Height of the generated image
  - width: Width of the generated image
- video
  - model_id: Model used to animate the images
  - device: Device used by the model, usually one of (cpu, cuda, mps)
  - n_continuations: Number of animation segments that will be created
  - loop_video: Whether each music video will be looped
  - video_fps: Frames per second of each video clip
  - decode_chunk_size: Video diffusion's decode chunk size parameter
  - motion_bucket_id: Video diffusion's motion bucket id parameter
  - noise_aug_strength: Video diffusion's noise aug strength parameter
- audio_clip
  - n_music_loops: Number of times to loop each music track
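
To get a feel for how the music parameters interact, the sketch below estimates how many continuation rounds a track needs. It rests on two assumptions that are not confirmed by this README: that MusicGen produces roughly 50 tokens per second of audio, and that each round adds up to max_continuation_duration seconds of new music. The helper name is hypothetical.

```python
import math

# Assumption: approximate MusicGen token rate (tokens per second of audio).
TOKENS_PER_SECOND = 50


def continuation_rounds(
    music_duration: float,
    initial_music_tokens: int,
    max_continuation_duration: float,
    tokens_per_second: int = TOKENS_PER_SECOND,
) -> int:
    """Estimate the number of extension rounds needed to reach music_duration."""
    if max_continuation_duration <= 0:
        raise ValueError("max_continuation_duration must be positive")
    initial_seconds = initial_music_tokens / tokens_per_second
    # Nothing left to generate if the initial track is already long enough.
    remaining = max(0.0, music_duration - initial_seconds)
    return math.ceil(remaining / max_continuation_duration)


# Values taken from the default config shown earlier.
print(continuation_rounds(
    music_duration=60,
    initial_music_tokens=1050,
    max_continuation_duration=20,
))
```

Under these assumptions the default 1050 tokens correspond to about 21 seconds of initial music, which leaves about 39 seconds for the continuation step.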

Build the Docker image

    make build

Apply lint and formatting to the code (only needed for development)

    make lint

Run the whole pipeline to create the music video

    make ai_beats

Run the music generation step

    make music

Run the music continuation step

    make music_continuation

Run the image generation step

    make image

Run the video generation step

    make video

Run the audio clip creation step

    make audio_clip

For development, make sure to install requirements-dev.txt and run make lint to maintain the coding style.
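
Because every step writes intermediate files, it can be handy to re-run the pipeline from a given step after pruning results you don't like. The sketch below is a hypothetical wrapper around the per-step make targets, not something shipped with the repository. It assumes the steps run in the order of the workflow list above, and it defaults to a dry run that only prints the commands.

```python
import subprocess

# Per-step make targets, in the assumed pipeline order.
PIPELINE_STEPS = ["music", "music_continuation", "image", "video", "audio_clip"]


def commands_from(start_step: str) -> list[list[str]]:
    """Return the make commands for start_step and every step after it."""
    if start_step not in PIPELINE_STEPS:
        raise ValueError(f"unknown step: {start_step!r}")
    start = PIPELINE_STEPS.index(start_step)
    return [["make", step] for step in PIPELINE_STEPS[start:]]


def run_from(start_step: str, dry_run: bool = True) -> None:
    """Print the remaining commands, and run them unless dry_run is set."""
    for command in commands_from(start_step):
        print(" ".join(command))
        if not dry_run:
            # check=True stops the pipeline as soon as one step fails.
            subprocess.run(command, check=True)


# Dry run: show what would be executed when resuming at image generation.
run_from("image")
```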

I developed and tested most of this project on my MacBook Pro M2. The only step that I was not able to run there was the video creation step; for that I used Google Colab (with a V100 or A100 GPU). Some of the models could not run on MPS, but they still ran in a reasonable time.

The models used by default here have specific licenses that might not be suited for all use cases. If you want to use the same models, make sure to check their licenses: MusicGen and its CC-BY-NC 4.0 license for music generation, SDXL-Turbo and its LICENSE-SDXL1.0 license for image generation, and Stable Video Diffusion and its STABLE VIDEO DIFFUSION NC COMMUNITY LICENSE for video generation.