- Overview

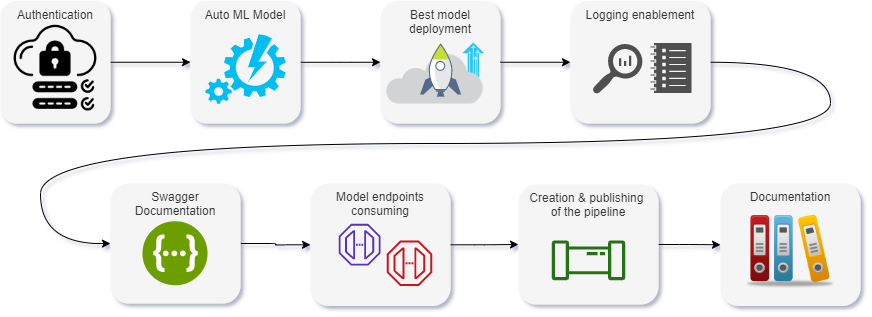

- Architectural Diagram

- Key Steps

- Screenshots

- Screen Recording

- Comments and future improvements

- Dataset Citation

- References

This project consists of two parts:

- The first part consists of creating a production machine learning model using AutoML in Azure Machine Learning Studio, then deploying the best model and consuming it with the help of Swagger UI, using the REST API endpoint and the key produced for the deployed model.

- The second part follows the same steps, but this time uses the Azure Python SDK to create, train, and publish a pipeline. For this part, I am using the Jupyter Notebook provided. The whole procedure is explained in this README file and the result is demonstrated in the screencast video.

For both parts of the project I use the dataset that can be obtained from here, which contains marketing data about individuals. The data is related to direct marketing campaigns (phone calls) of a Portuguese banking institution. The classification goal is to predict whether the client will subscribe to a bank term deposit. The result of the prediction appears in column y and is either yes or no.

The architectural diagram is not very detailed by nature; its purpose is to give a rough overview of the operations. The diagram below is a visualization of the flow of operations from start to finish:

The key steps of the project are described below:

- Authentication: This step was omitted because it could not be implemented in the lab space provided by Udacity, where I am not authorized to create a service principal. I still mention it here because it is a crucial step for anyone using their own Azure account, but I do not include a screenshot.

- Automated ML Experiment: At this point, security is enabled and authentication is completed. This step involves creating an experiment using Automated ML, configuring a compute cluster, and using that cluster to run the experiment.

-

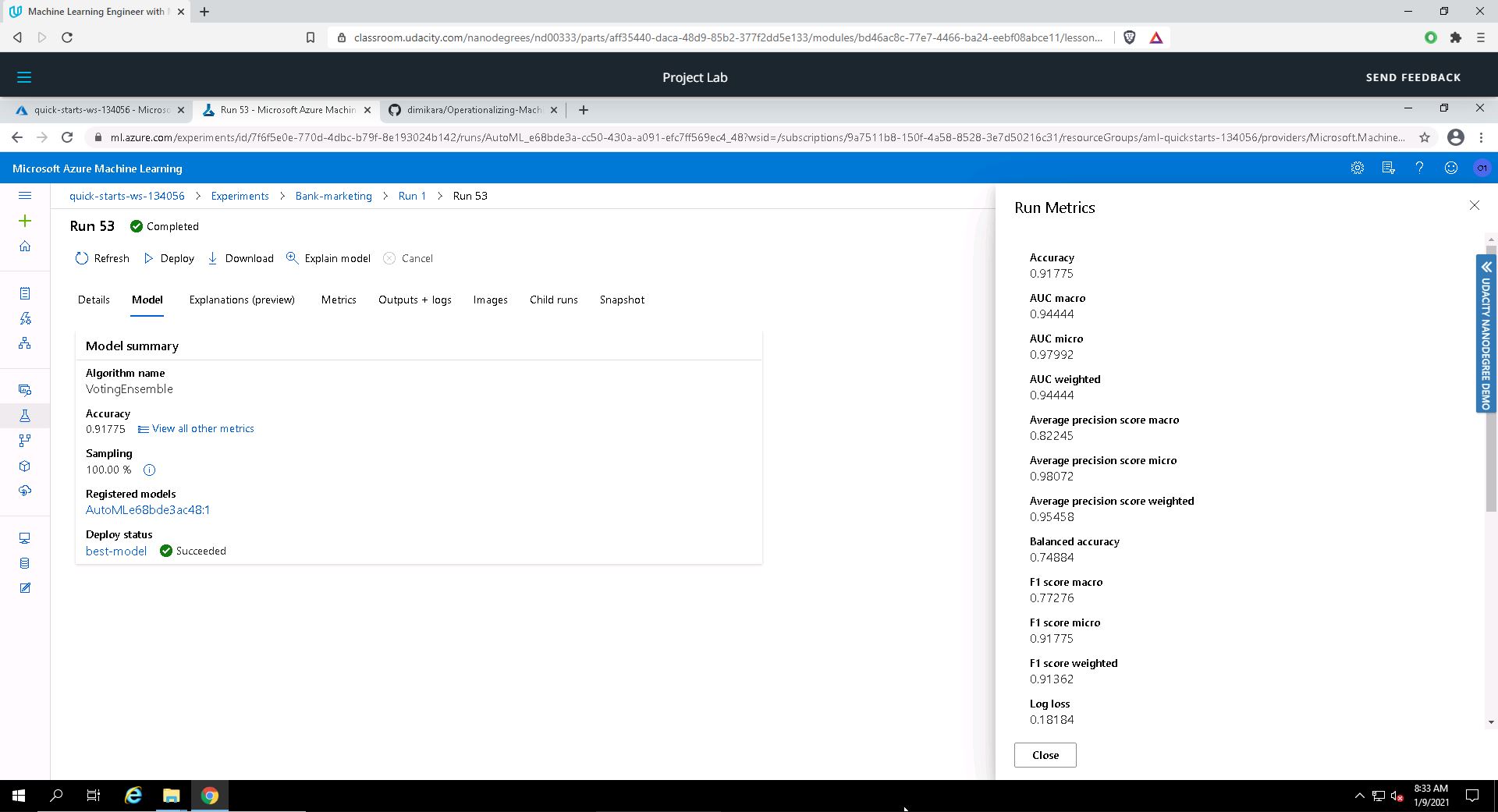

Deploy the Best Model: After the completion of the experiment run, a summary of all the models and their metrics are shown, including explanations. The Best Model will appear in the Details tab, while it will appear first in the Models tab. This is the model that should be selected for deployment. Its deployment allows to interact with the HTTP API service and interact with the model by sending data over POST requests.

- Enable Logging: After the deployment of the Best Model, I enabled Application Insights and retrieved the logs.

-

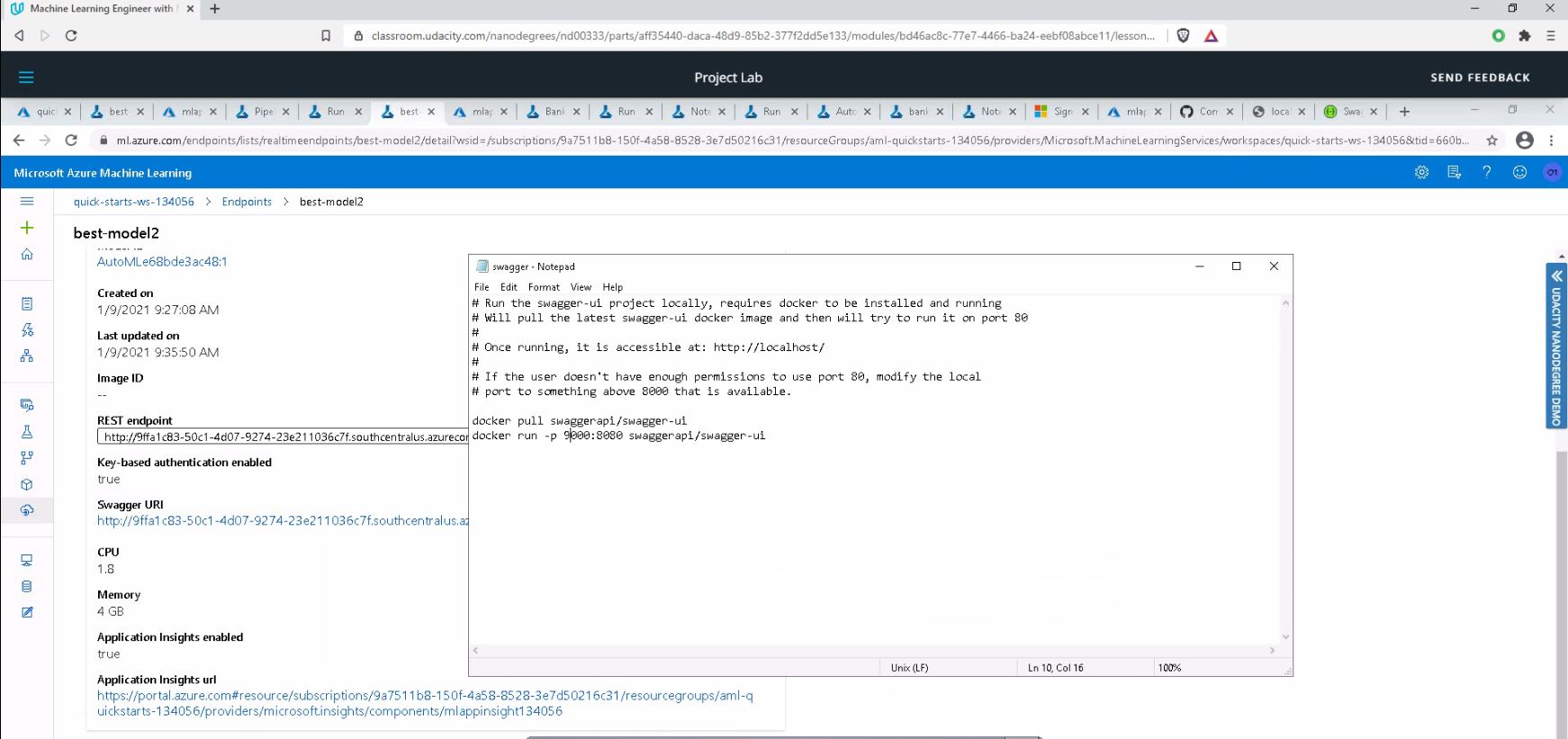

Swagger Documentation: This is the step where the deployed model will be consumed using Swagger. Azure provides a Swagger JSON file for deployed models. We can find the deployed model in the Endpoints section, where it should be the first one on the list.

-

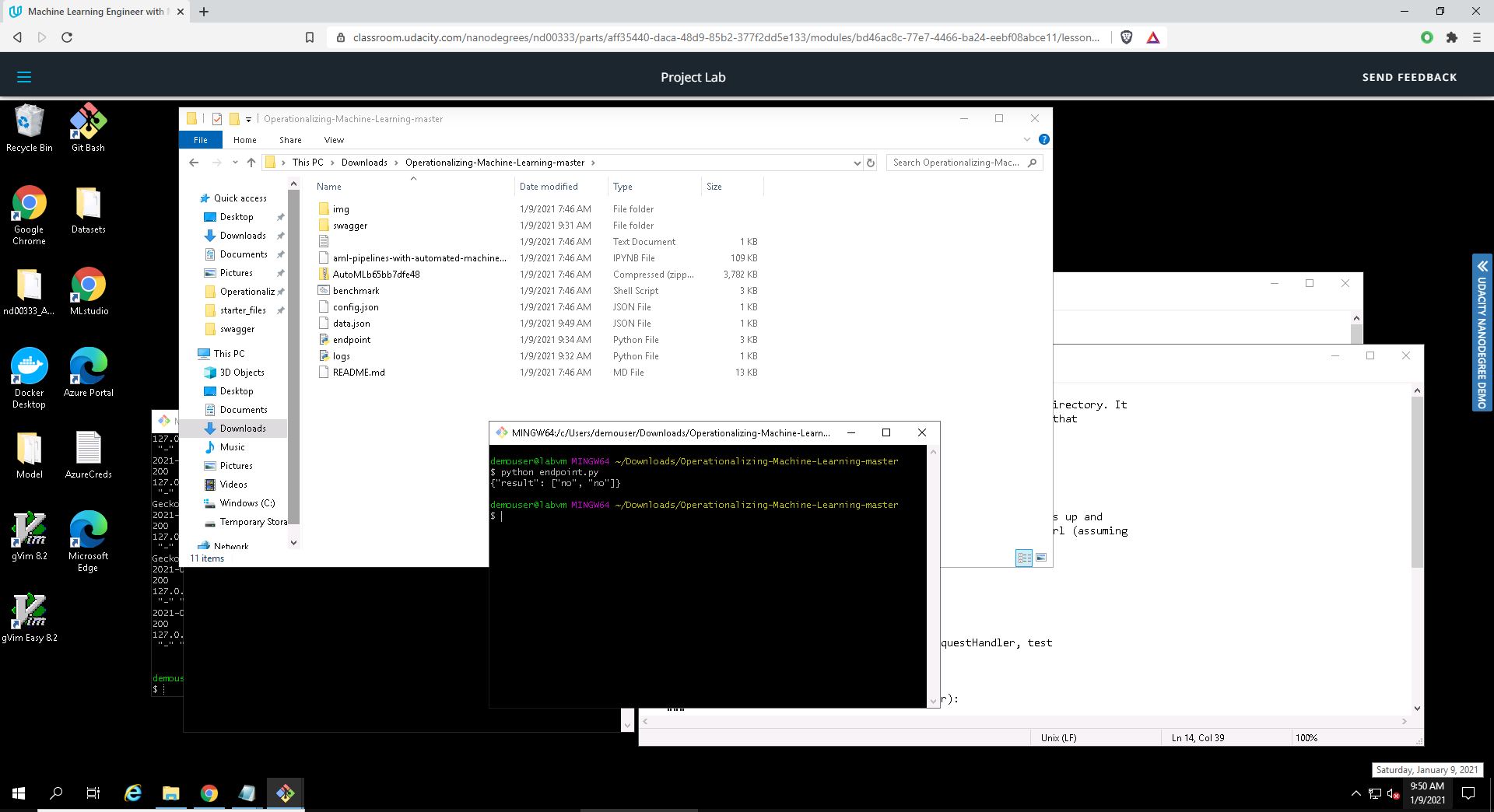

Consume Model Endpoints: Once the model is deployed, I am using the

endpoint.pyscript to interact with the trained model. I run the script with the scoring_uri that was generated after deployment and -since I enabled Authentication- the key of the service. This URI is found in the Details tab, above the Swagger URI. -

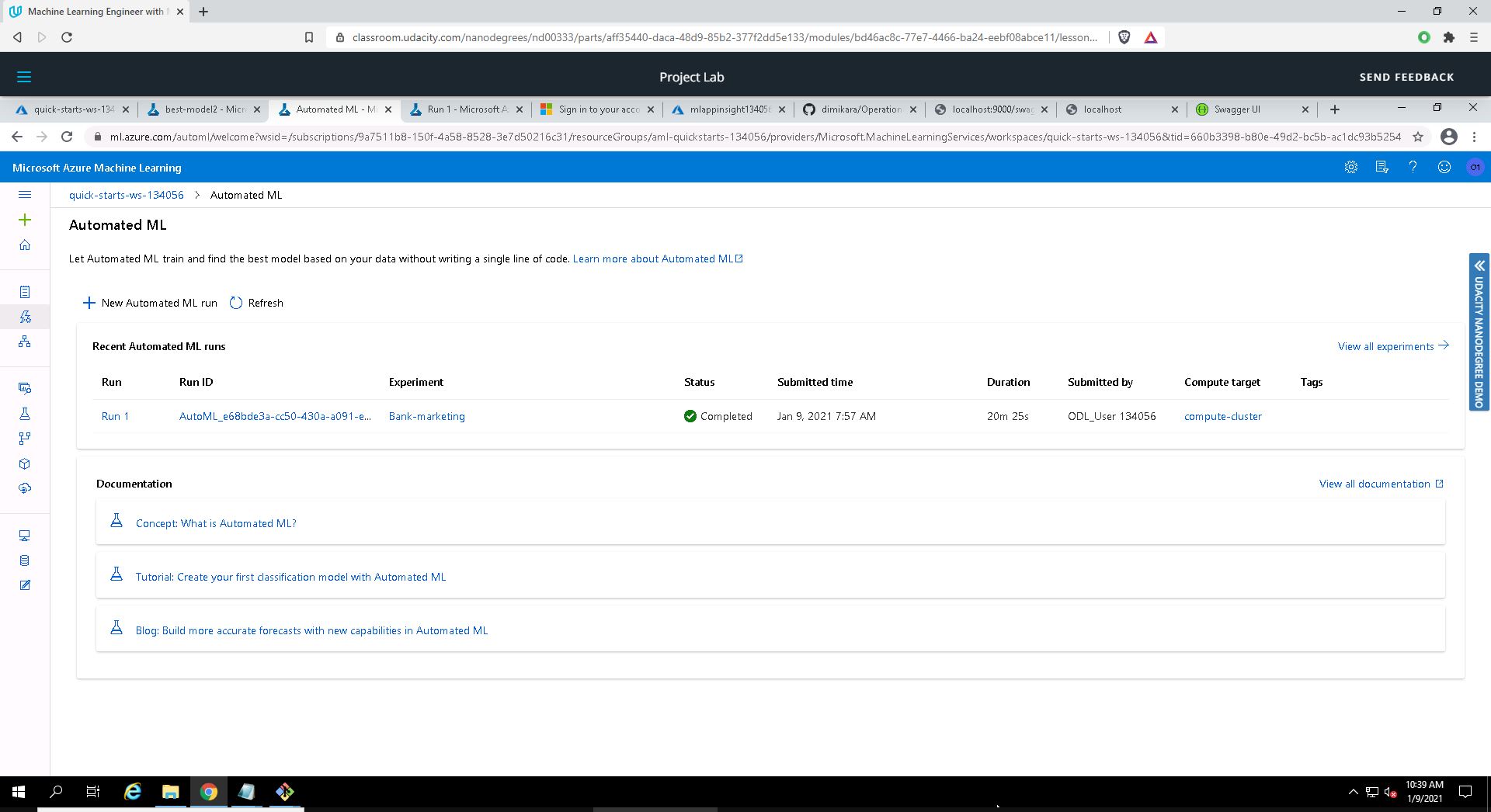

Create and Publish a Pipeline: In this part of the project, I am using the Jupyter Notebook with the same keys, URI, dataset, cluster, and model names already created.

- Documentation: The documentation includes: 1. the screencast that shows the entire process of the working ML application; and 2. this README file that describes the project and documents the main steps.

As I explained above, I start with step 2 because I am using the virtual lab environment provided by Udacity.

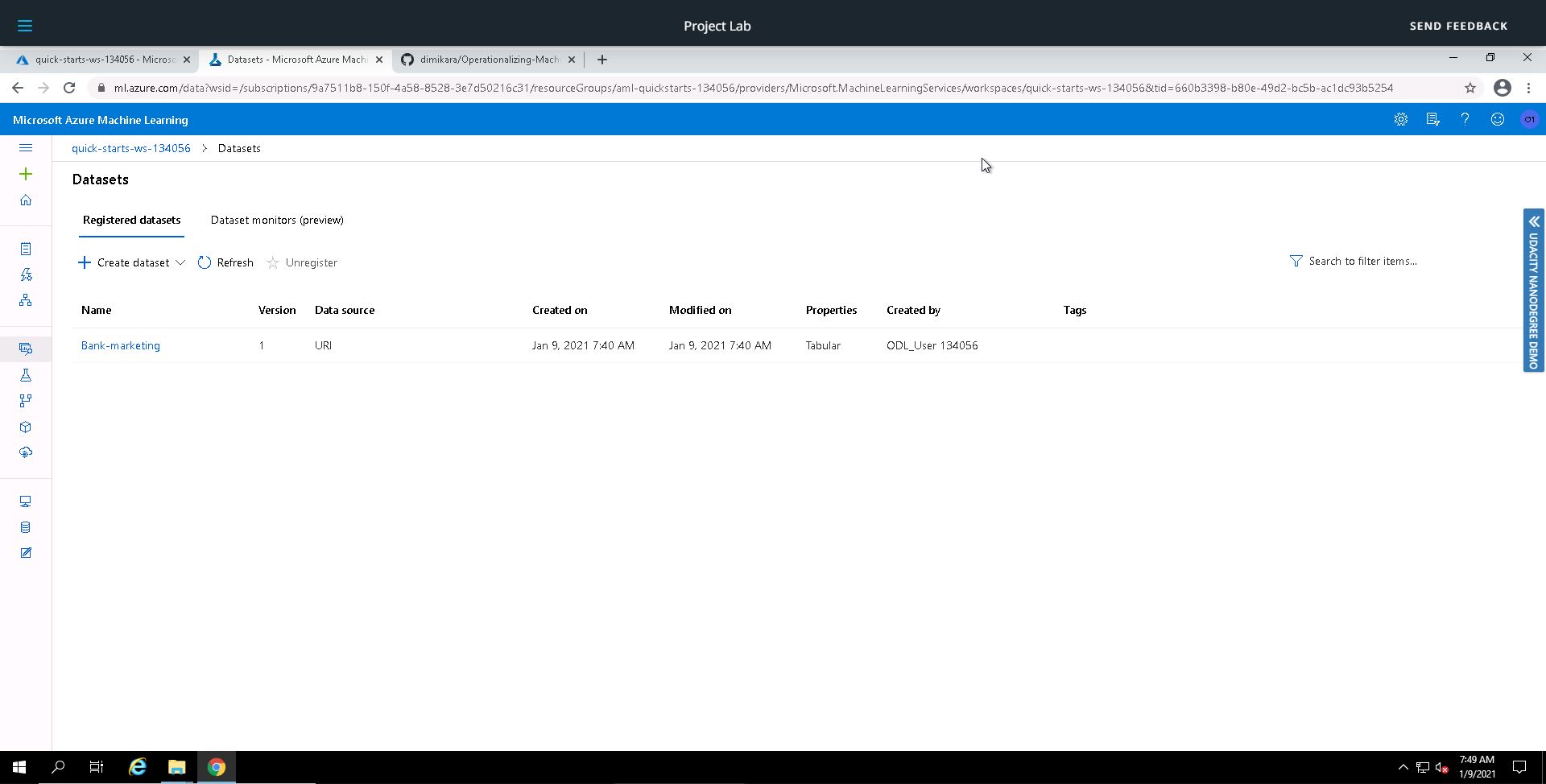

The first thing I check after opening Azure Machine Learning Studio is whether the dataset is included in the Registered Datasets, which it is, as we can see below.

Registered Datasets:

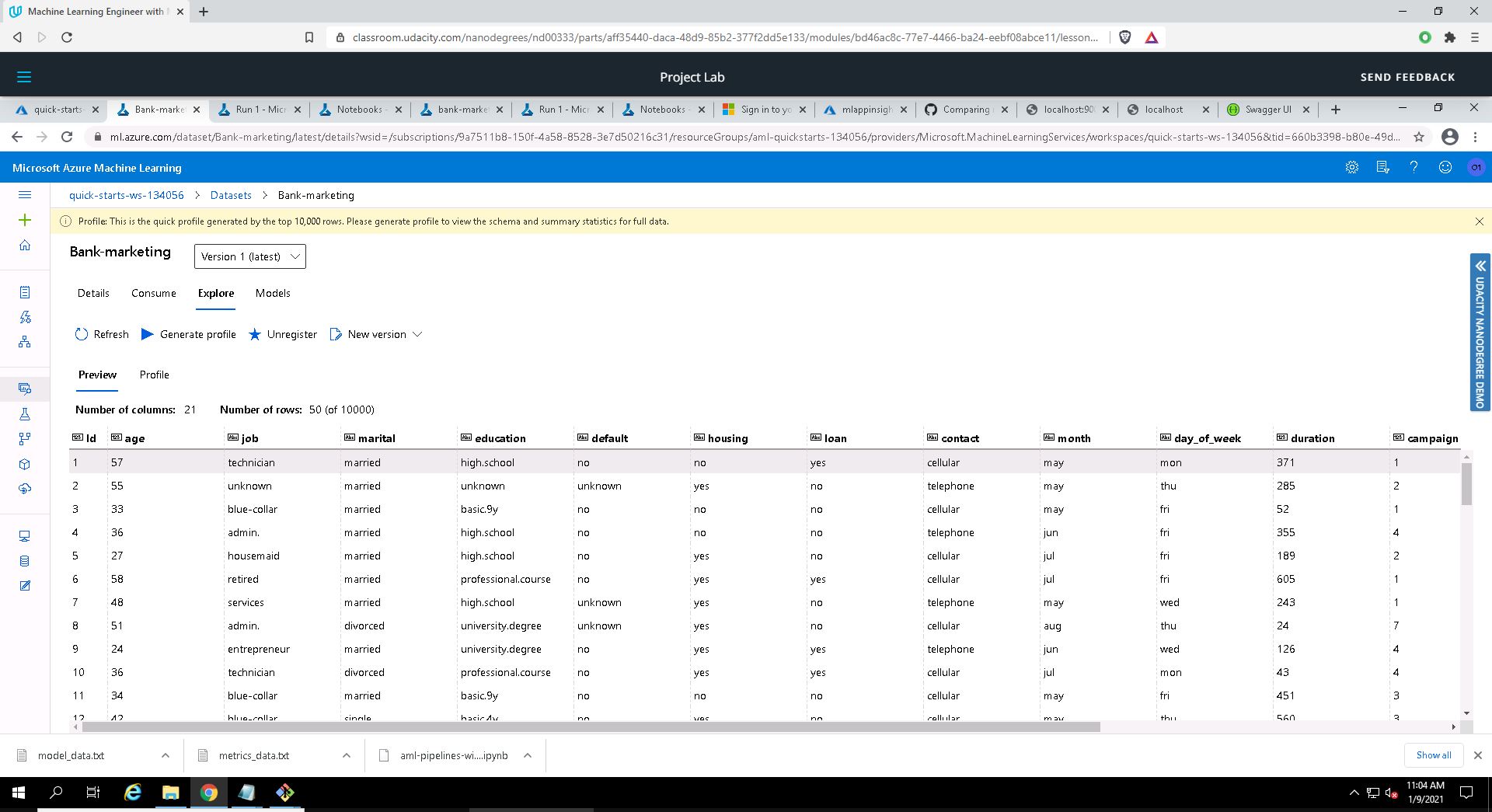

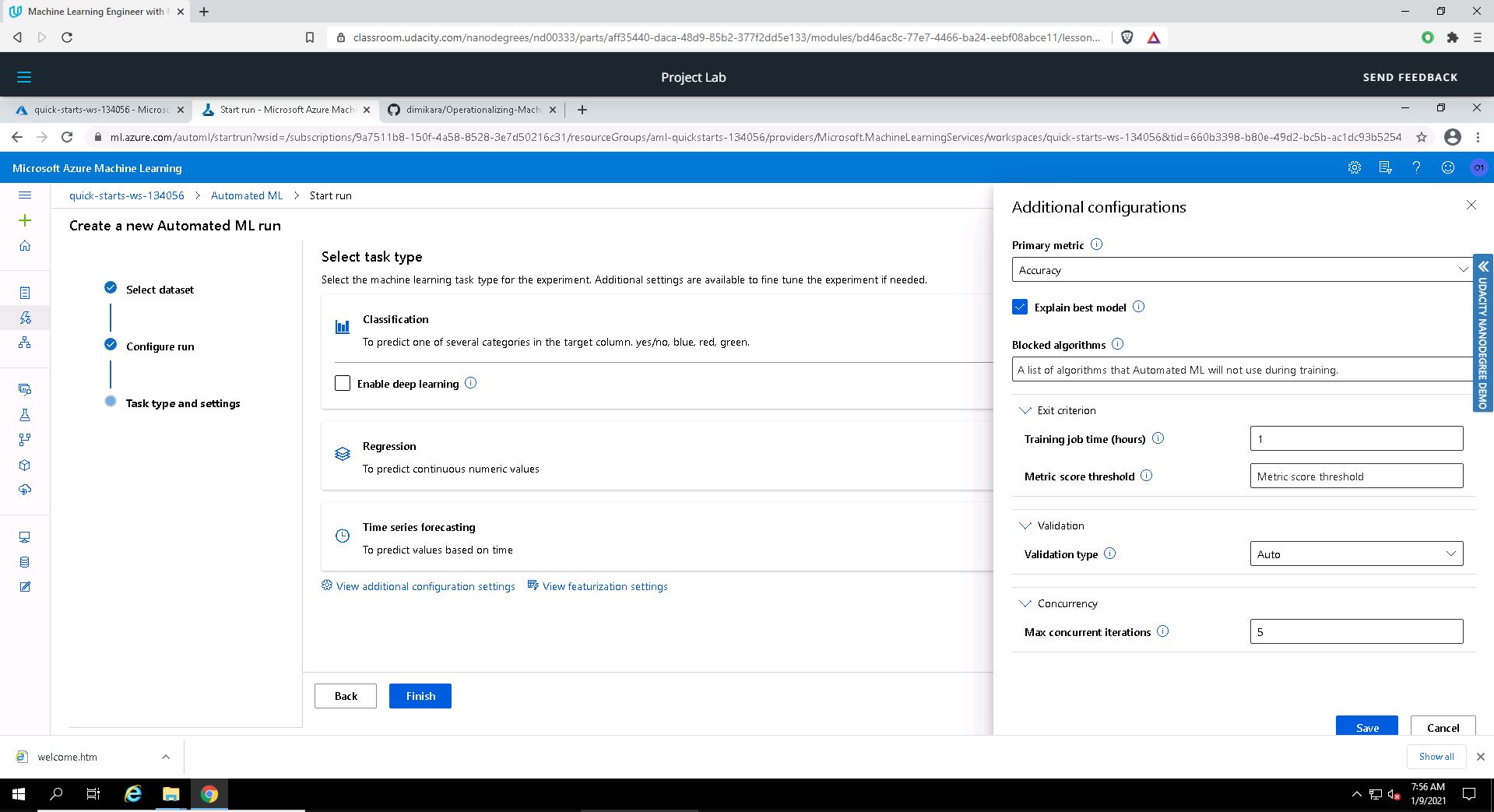

Creating a new Automated ML run:

I select the Bank-marketing dataset and, on the second screen, make the following selections:

- Task: Classification

- Primary metric: Accuracy

- Explain best model

- Exit criterion: 1 hour in Job training time (hours)

- Max concurrent iterations: 5. Please note that the number of concurrent operations MUST always be less than the maximum number of nodes configured in the cluster.

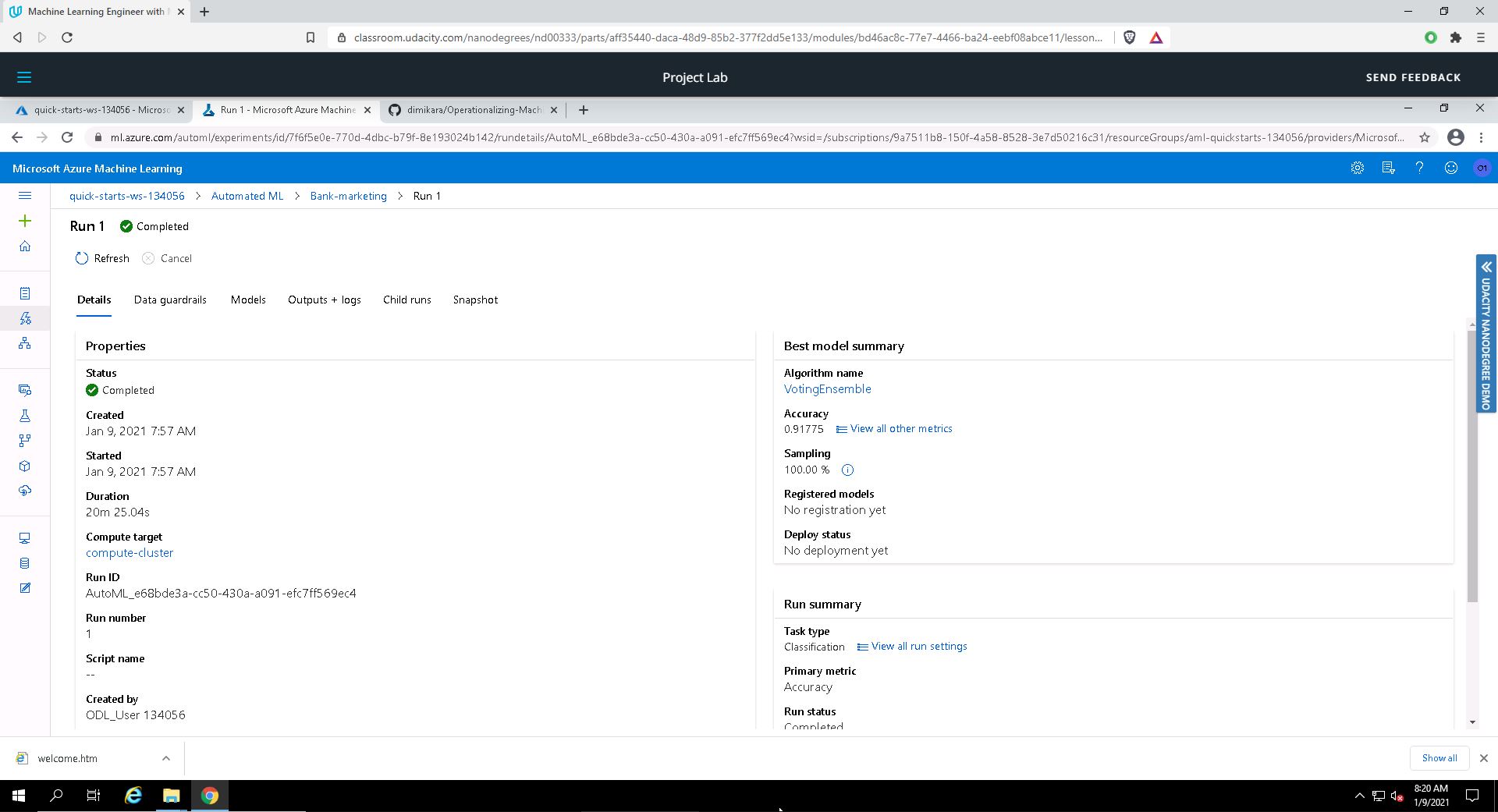

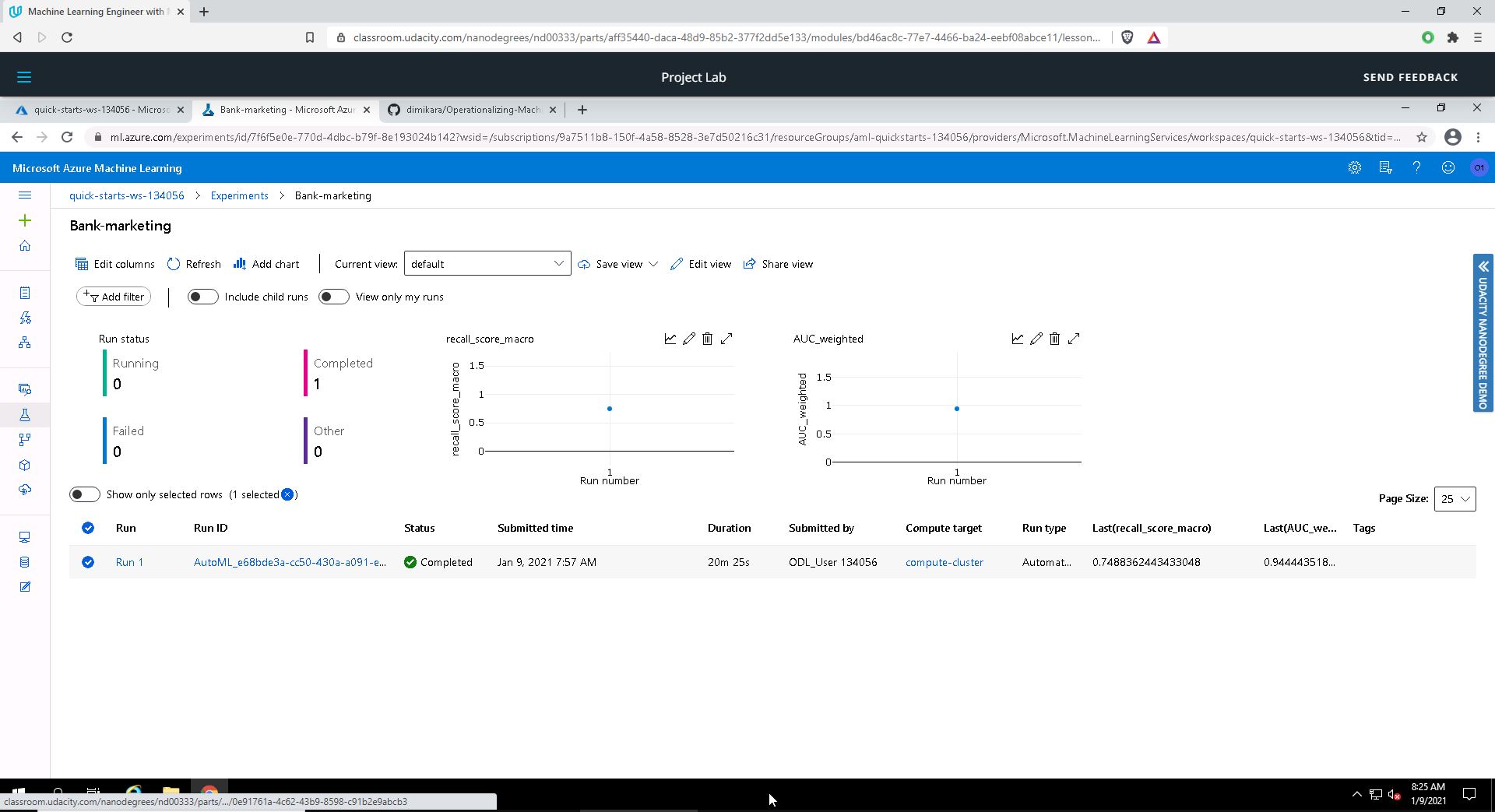
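For readers who prefer code to the Studio forms, the selections above can be written down as a settings dictionary. This is only a sketch: the key names are my understanding of the corresponding `AutoMLConfig` parameters in the Azure ML Python SDK (v1), and `max_cluster_nodes=6` is a hypothetical value, since the cluster size is not stated in this README.

```python
def automl_settings(max_cluster_nodes, max_concurrent_iterations=5):
    """Return keyword arguments mirroring the Studio selections listed above."""
    # Rule as stated in this README: concurrency must stay below the node count.
    if max_concurrent_iterations >= max_cluster_nodes:
        raise ValueError(
            f"max_concurrent_iterations ({max_concurrent_iterations}) must be "
            f"less than the cluster's maximum nodes ({max_cluster_nodes})"
        )
    return {
        "task": "classification",          # Task: Classification
        "primary_metric": "accuracy",      # Primary metric: Accuracy
        "model_explainability": True,      # Explain best model
        "experiment_timeout_hours": 1.0,   # Exit criterion: 1 hour
        "max_concurrent_iterations": max_concurrent_iterations,
    }


# Hypothetical cluster size, used only for illustration.
settings = automl_settings(max_cluster_nodes=6)
print(settings)
```

With the SDK installed, a dictionary like this could be unpacked into `AutoMLConfig` together with the training data, the label column (`y`), and the compute target.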

Experiment is completed

The experiment runs for about 20 min. and is completed:

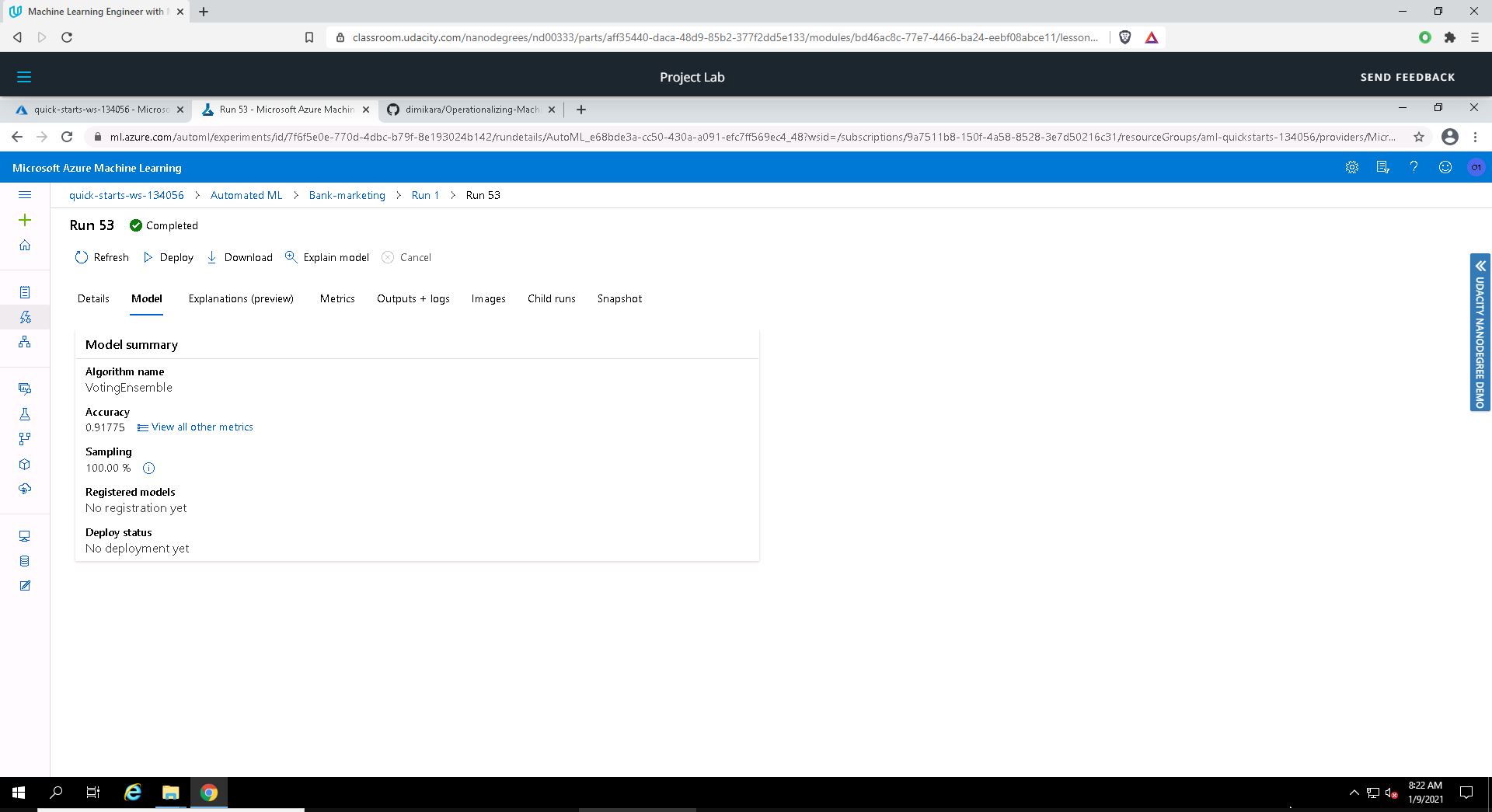

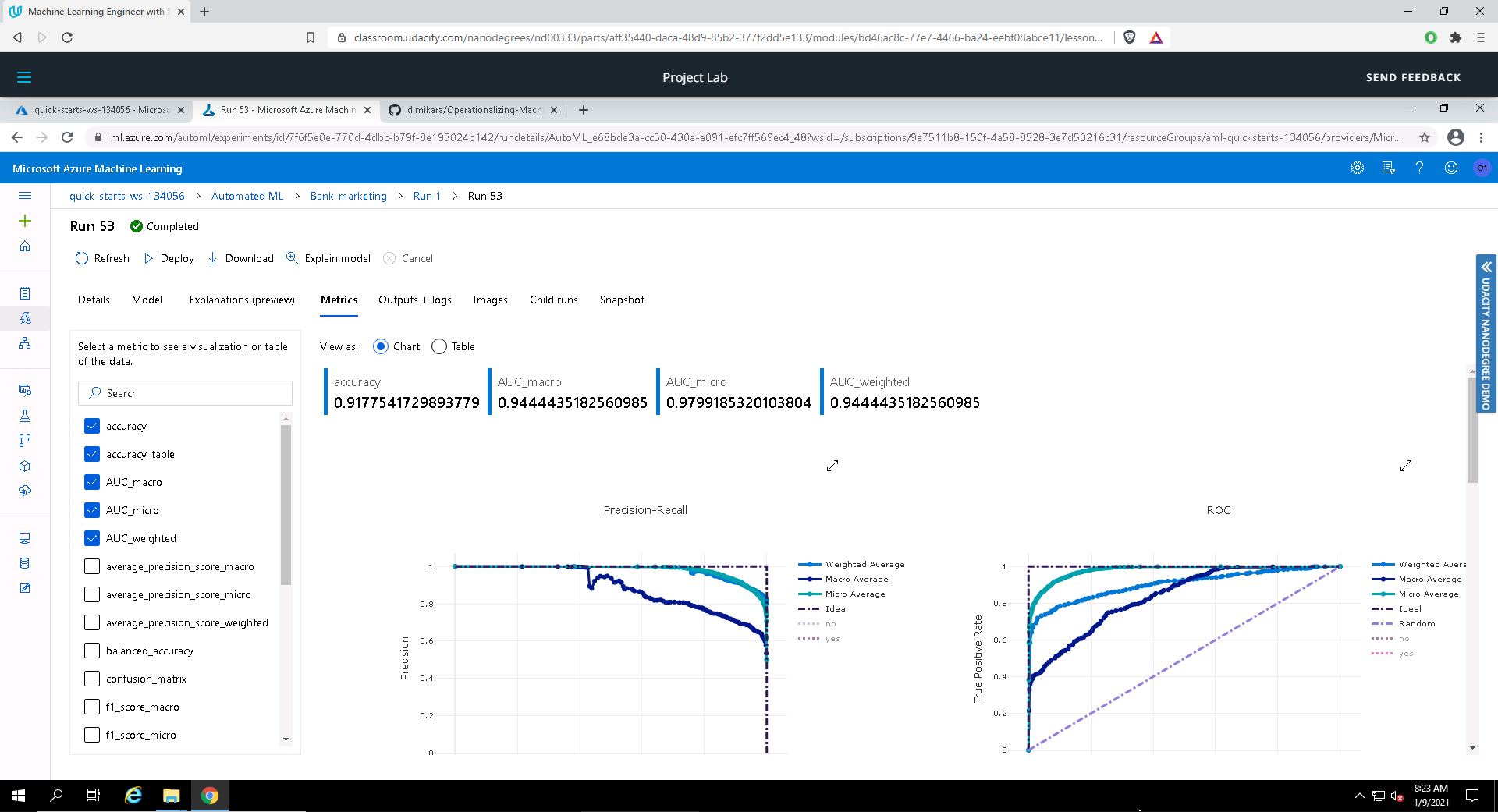

Best model

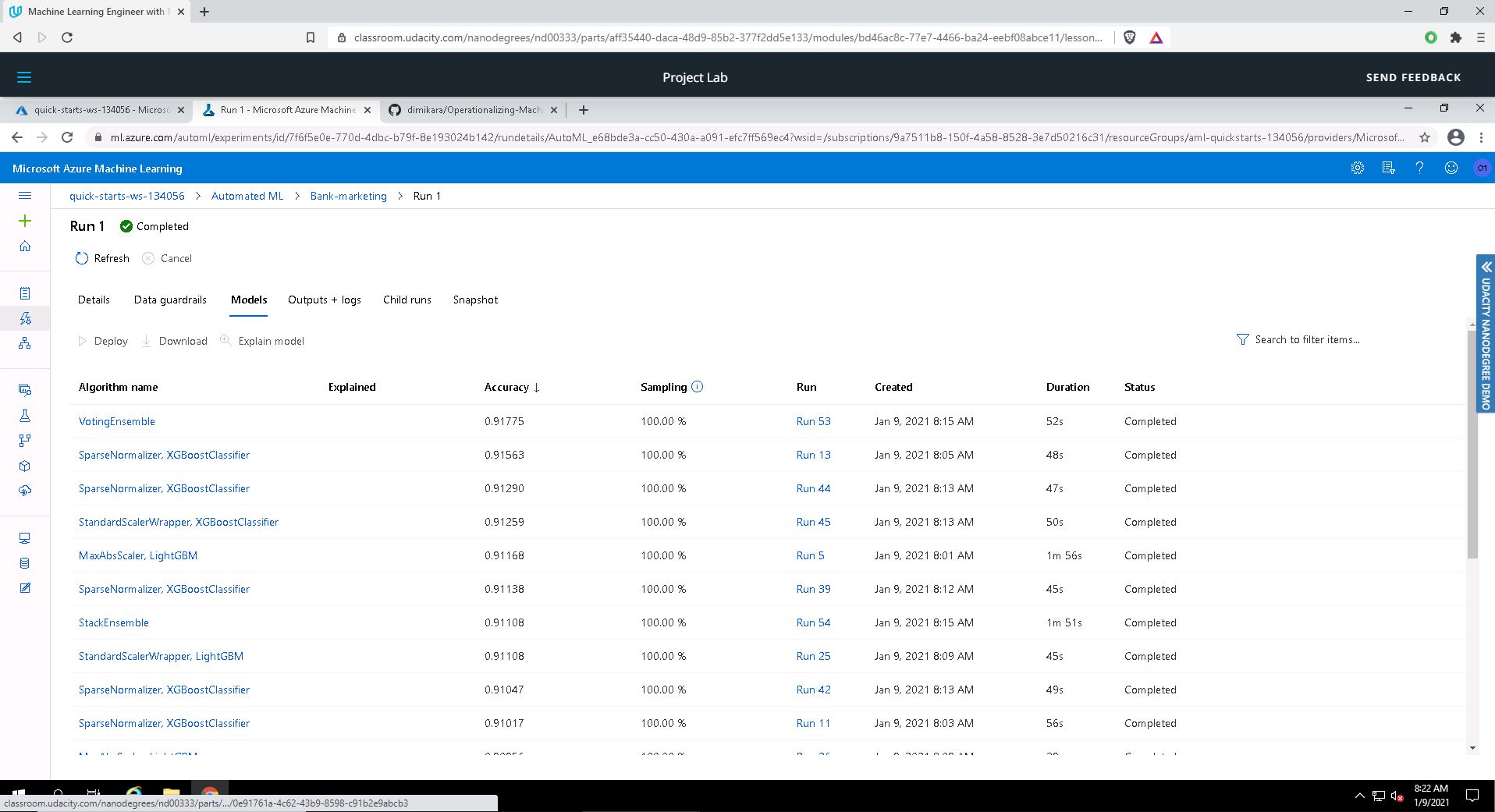

After the completion, we can see the resulting models:

In the Models tab, the first model (at the top) is the best model. You can see it below along with some of its characteristics & metrics:

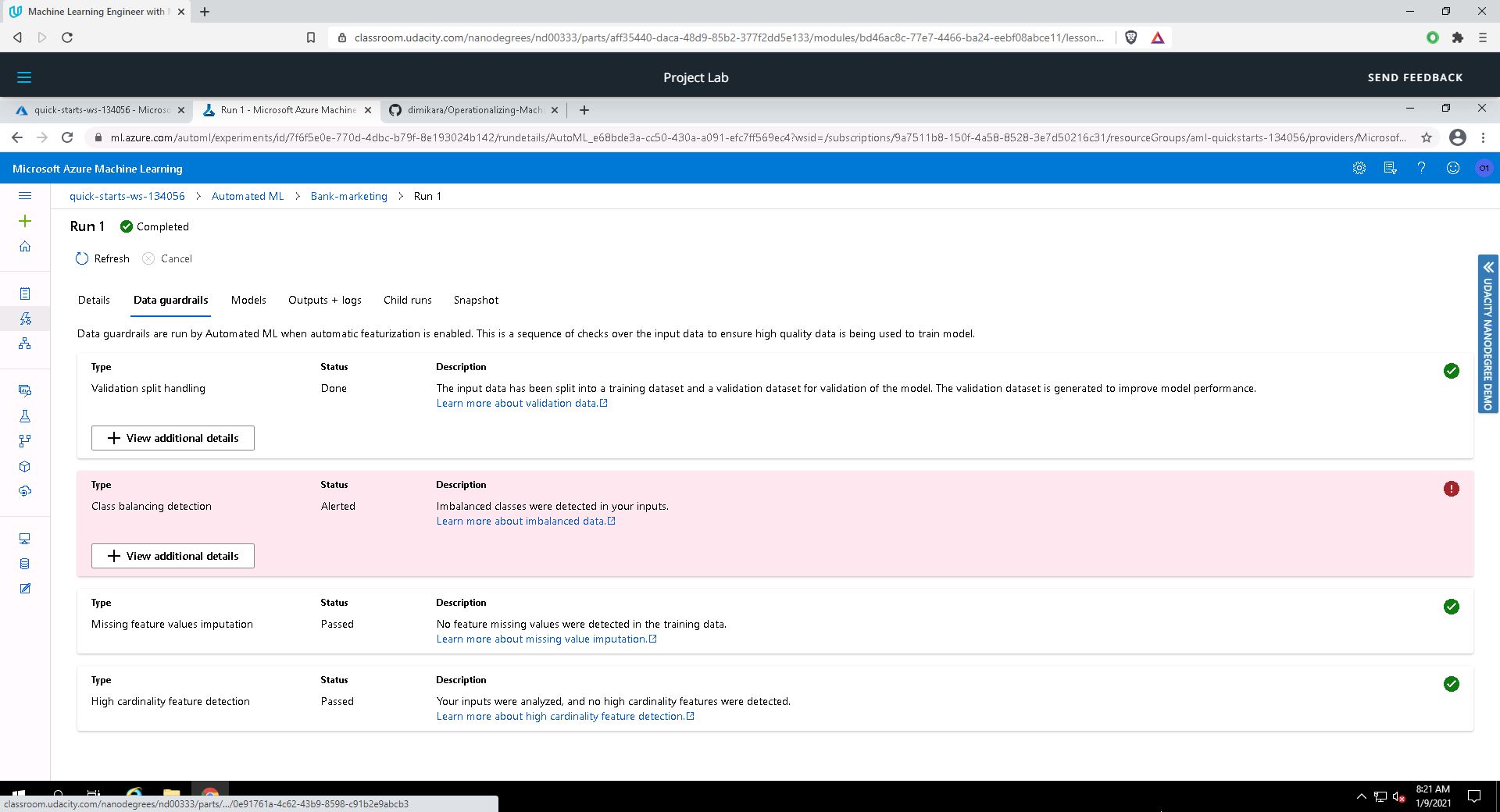

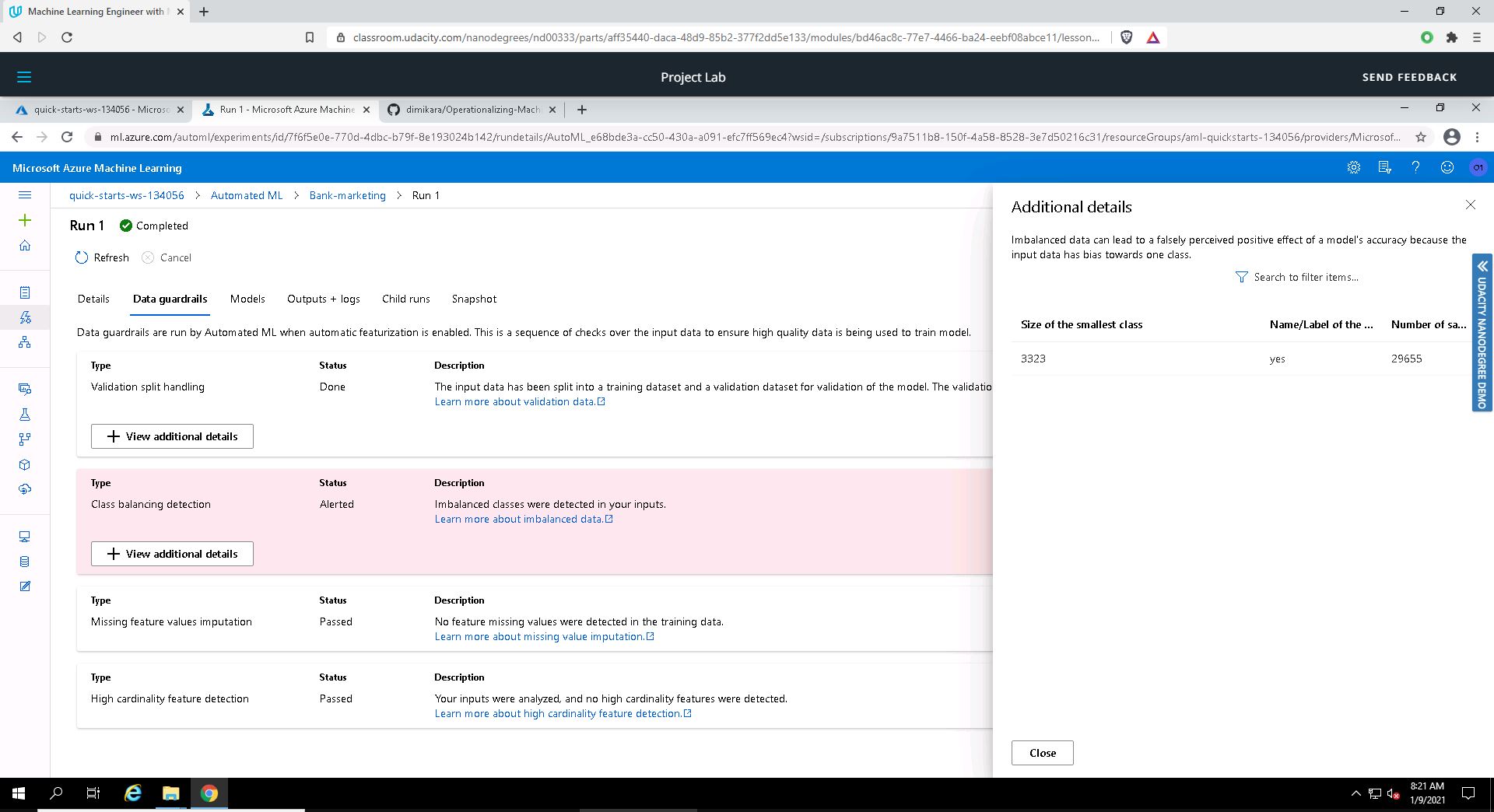

By clicking the Data guardrails tab, we can also see some very interesting info about potential issues with data. In this case, the imbalanced data issue was flagged:

The next step in the procedure is the deployment of the best model. First, I choose the best model i.e. the first model at the top in the Models tab. I deploy the model with Authentication enabled and using the Azure Container Instance (ACI).

Deploying the best model exposes an HTTP API service, which lets us interact with the model by sending data over POST requests.

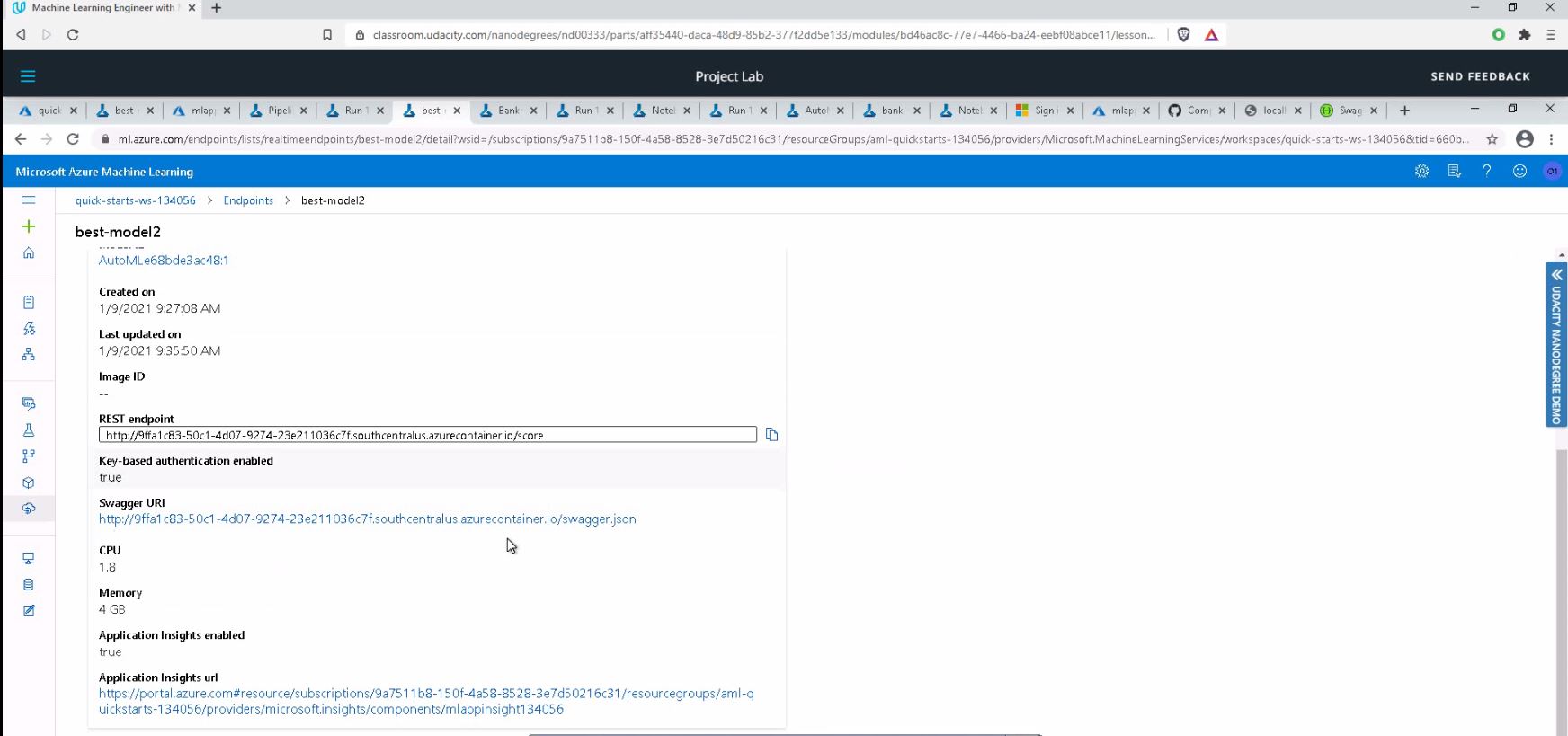

After the deployment of the best model, I can enable Application Insights and retrieve the logs:

"Application Insights" enabled in the Details tab of the endpoint

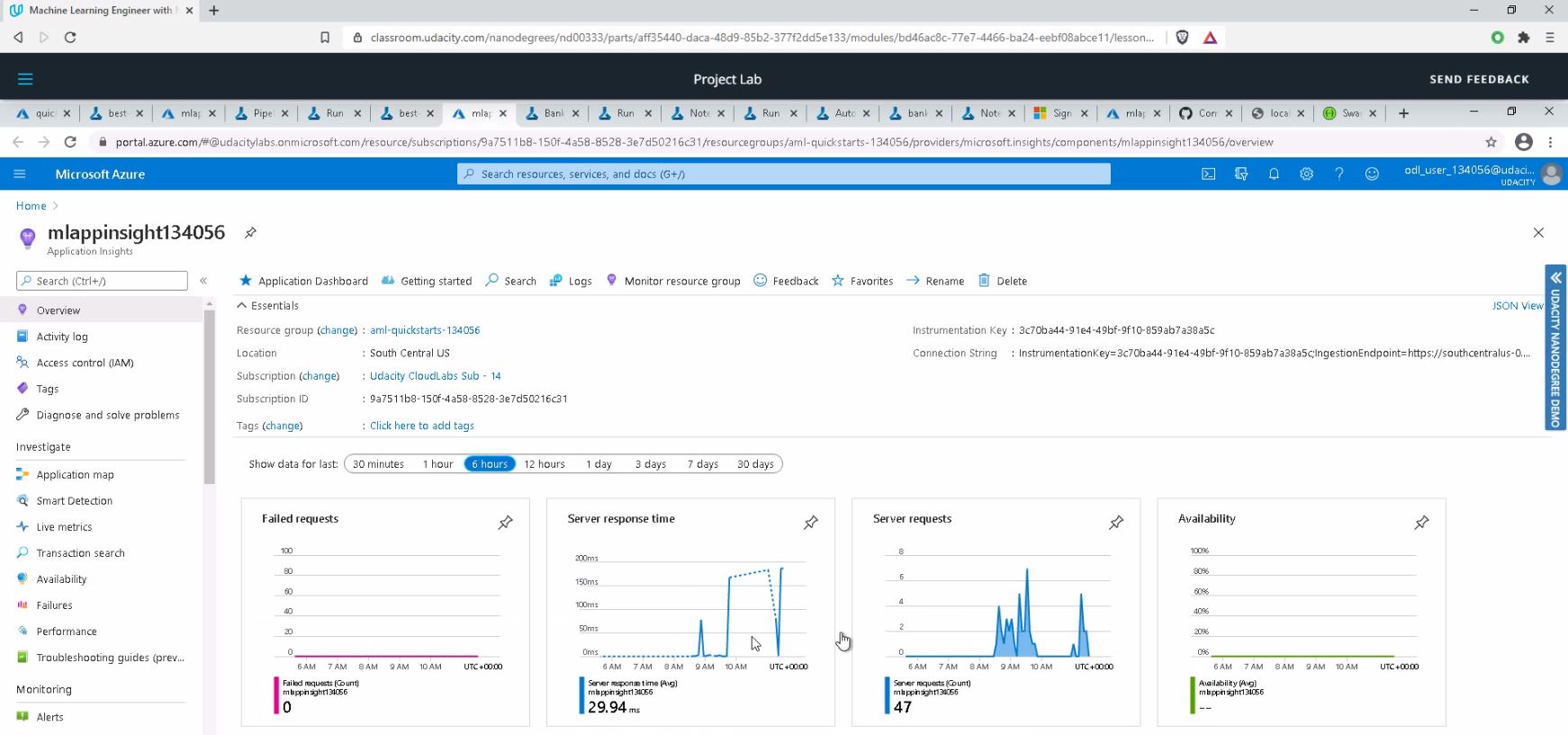

Screenshot of the tab running "Application Insights":

We can see Failed requests, Server response time, Server requests & Availability graphs in real time.

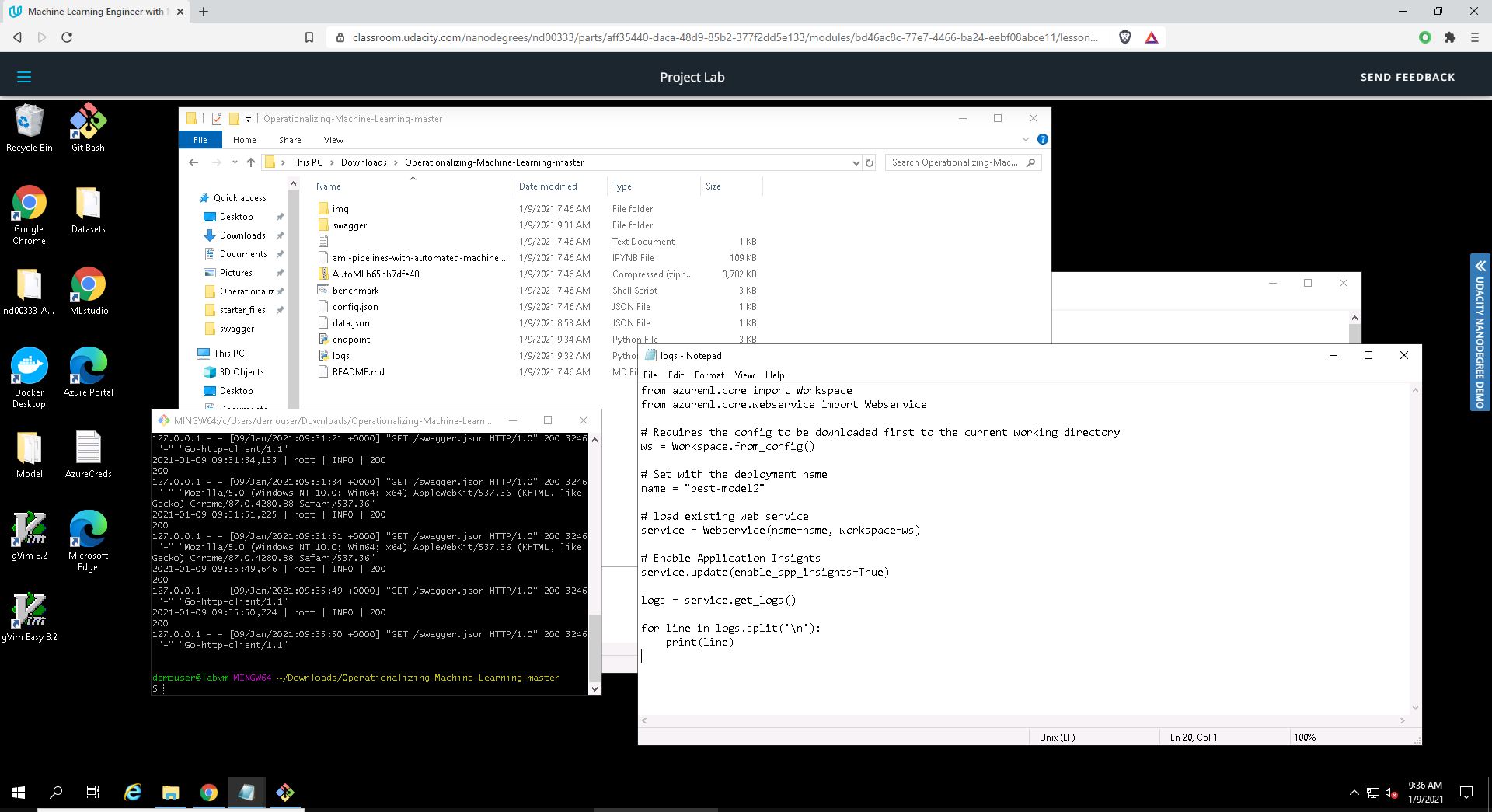

Running logs.py script

Although we can enable Application Insights at deploy time with a check-box, it is useful to be able to enable it from code. For this reason, I run the logs.py Python file, where I set the variable name to the name of the deployed model (best-model2) and add the line service.update(enable_app_insights=True):

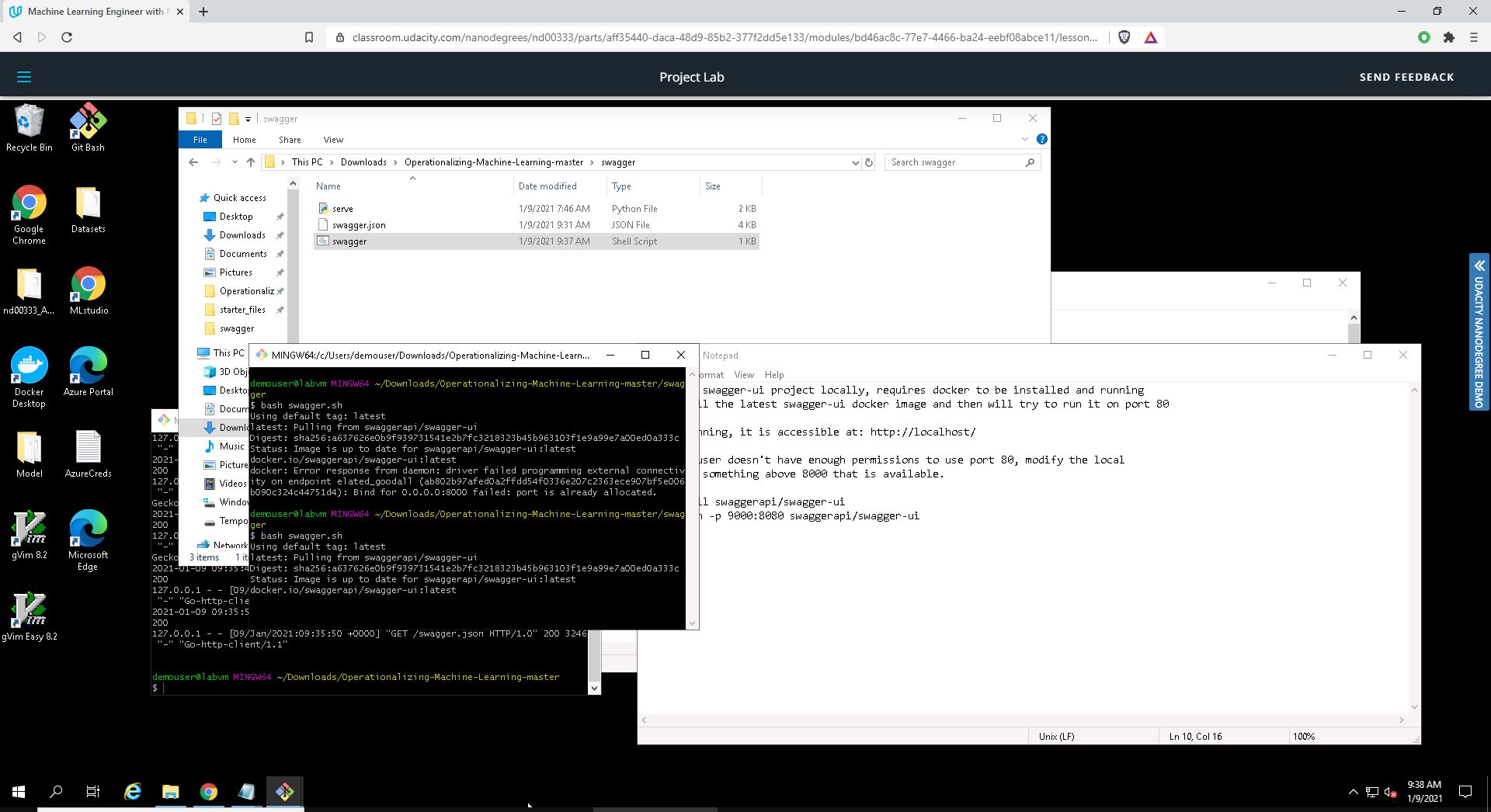

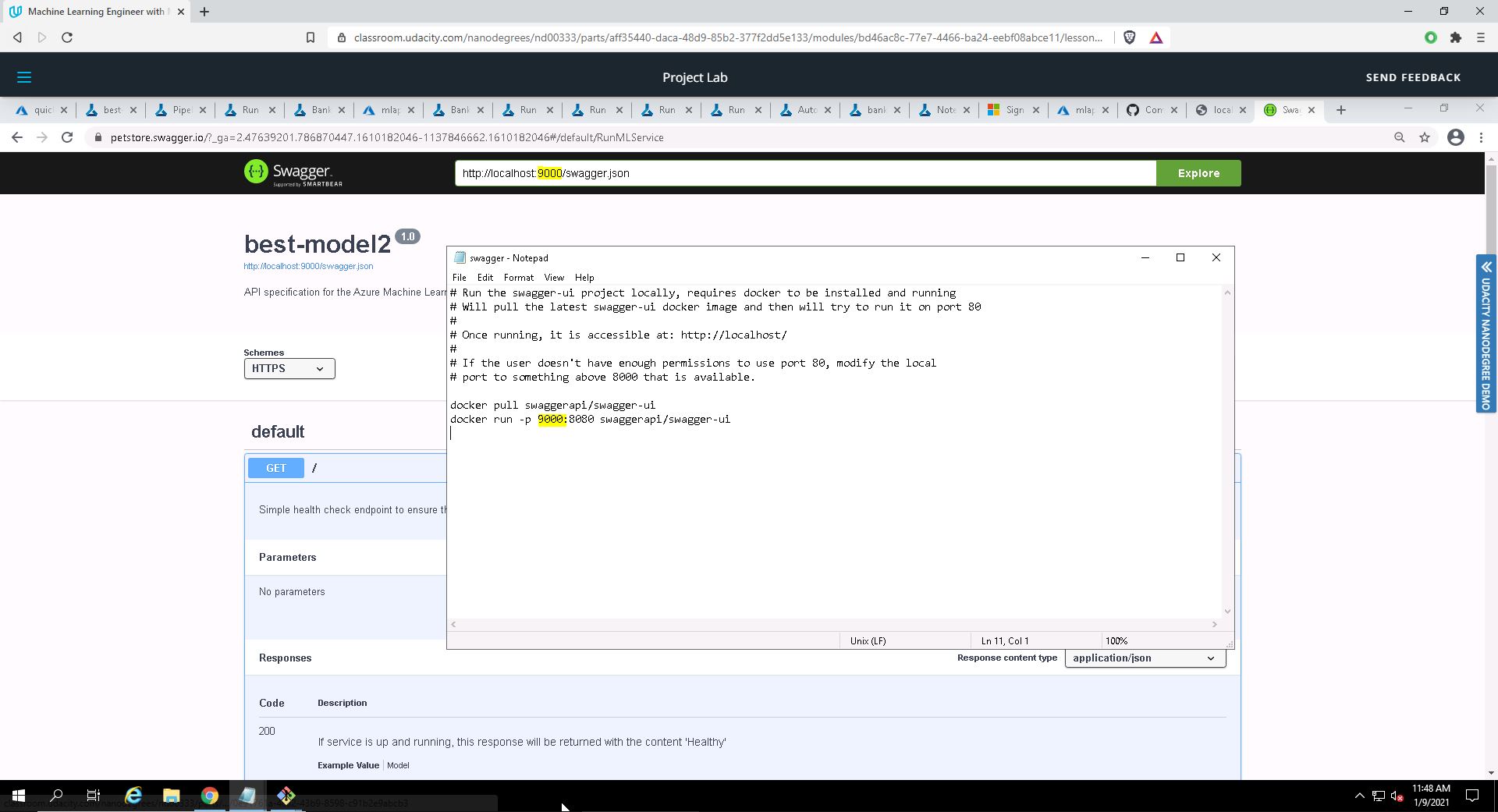
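The core of that step can be sketched as a small helper. This is not the logs.py file itself, only an illustration of the two calls it relies on: `service.update(enable_app_insights=True)` (mentioned above) and `service.get_logs()`. The helper name is my own, and it assumes `service` is an `azureml.core.webservice.Webservice` object.

```python
def enable_insights_and_fetch_logs(service):
    """Turn on Application Insights for a deployed service and return its logs.

    `service` is assumed to behave like azureml.core.webservice.Webservice:
    it must offer update(enable_app_insights=...) and get_logs().
    """
    service.update(enable_app_insights=True)
    logs = service.get_logs()
    # get_logs() returns one string; split it so each log line can be printed.
    return logs.splitlines()


# With the Azure ML SDK (v1) installed and a workspace config.json available,
# the helper could be used roughly like this:
#
#   from azureml.core import Workspace
#   from azureml.core.webservice import Webservice
#
#   ws = Workspace.from_config()
#   service = Webservice(name="best-model2", workspace=ws)
#   for line in enable_insights_and_fetch_logs(service):
#       print(line)
```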

Swagger is a set of open-source tools built around the OpenAPI Specification that can help us design, build, document and consume REST APIs. One of the major tools of Swagger is Swagger UI, which is used to generate interactive API documentation that lets the users try out the API calls directly in the browser.

In this step, I consume the deployed model using Swagger. Azure provides a Swagger JSON file for deployed models. This file can be found in the Endpoints section, in the deployed model there, which should be the first one on the list. I download this file and save it in the Swagger folder.

I execute the files swagger.sh and serve.py. Essentially, swagger.sh downloads and runs the latest Swagger container, and serve.py starts a Python server on port 9000.

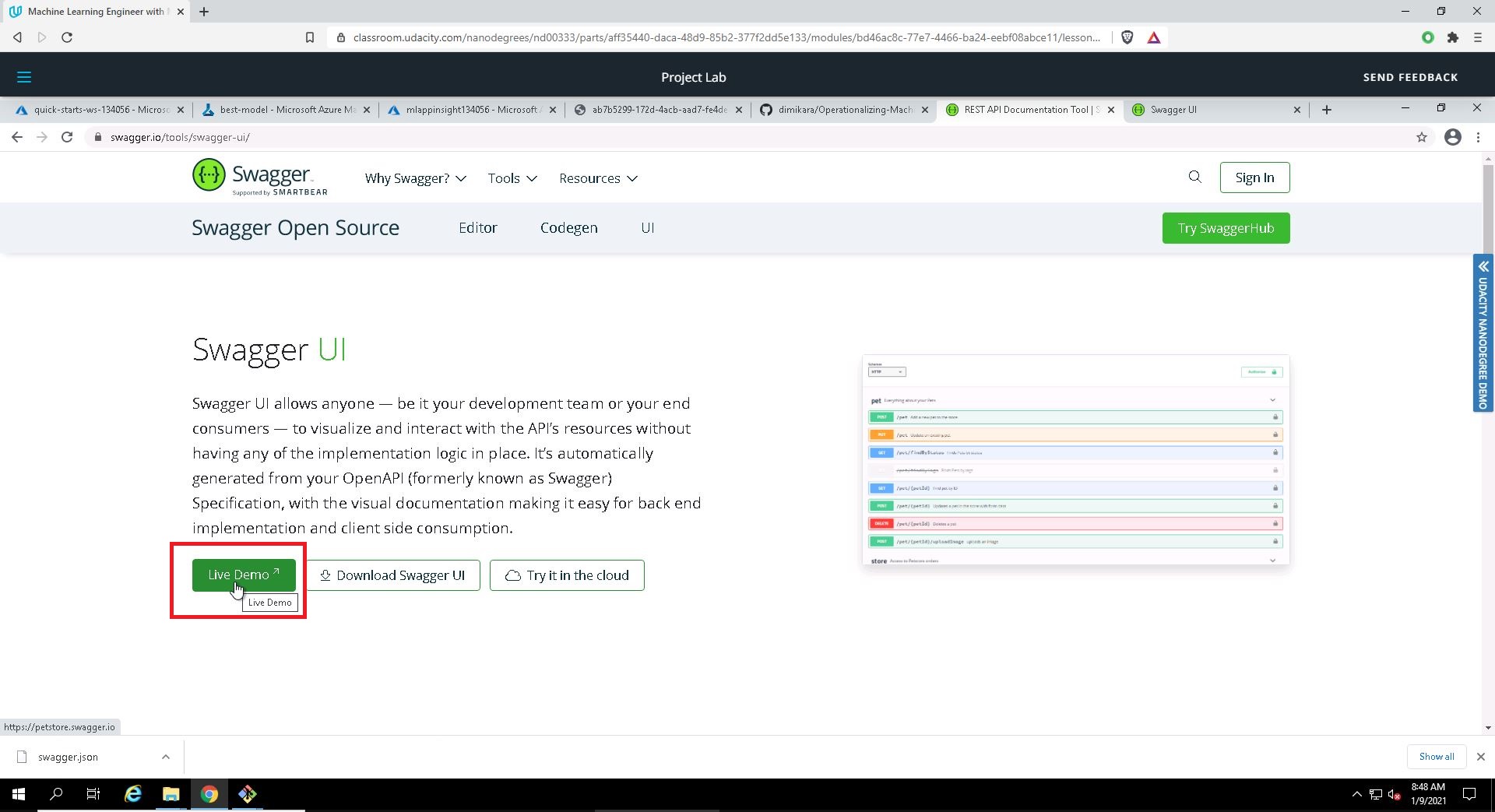
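To give an idea of what a script like serve.py has to do, here is a minimal sketch of a static file server that could expose swagger.json to Swagger UI. It is not the provided file. The CORS header is my assumption about what is needed, because Swagger UI runs in the browser under a different origin than the one serving the JSON file.

```python
import functools
from http.server import HTTPServer, SimpleHTTPRequestHandler


class CORSRequestHandler(SimpleHTTPRequestHandler):
    """Serve files and allow pages from other origins to fetch them."""

    def end_headers(self):
        # Assumption: Swagger UI is loaded from another origin, so the
        # browser needs this header before it will read swagger.json.
        self.send_header("Access-Control-Allow-Origin", "*")
        super().end_headers()


def make_server(port=9000, directory="."):
    """Create (but do not start) a server for the files in `directory`."""
    handler = functools.partial(CORSRequestHandler, directory=directory)
    return HTTPServer(("localhost", port), handler)
```

Calling `make_server().serve_forever()` from the folder that contains swagger.json would make the file reachable at http://localhost:9000/swagger.json.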

In the Live Demo page of Swagger UI:

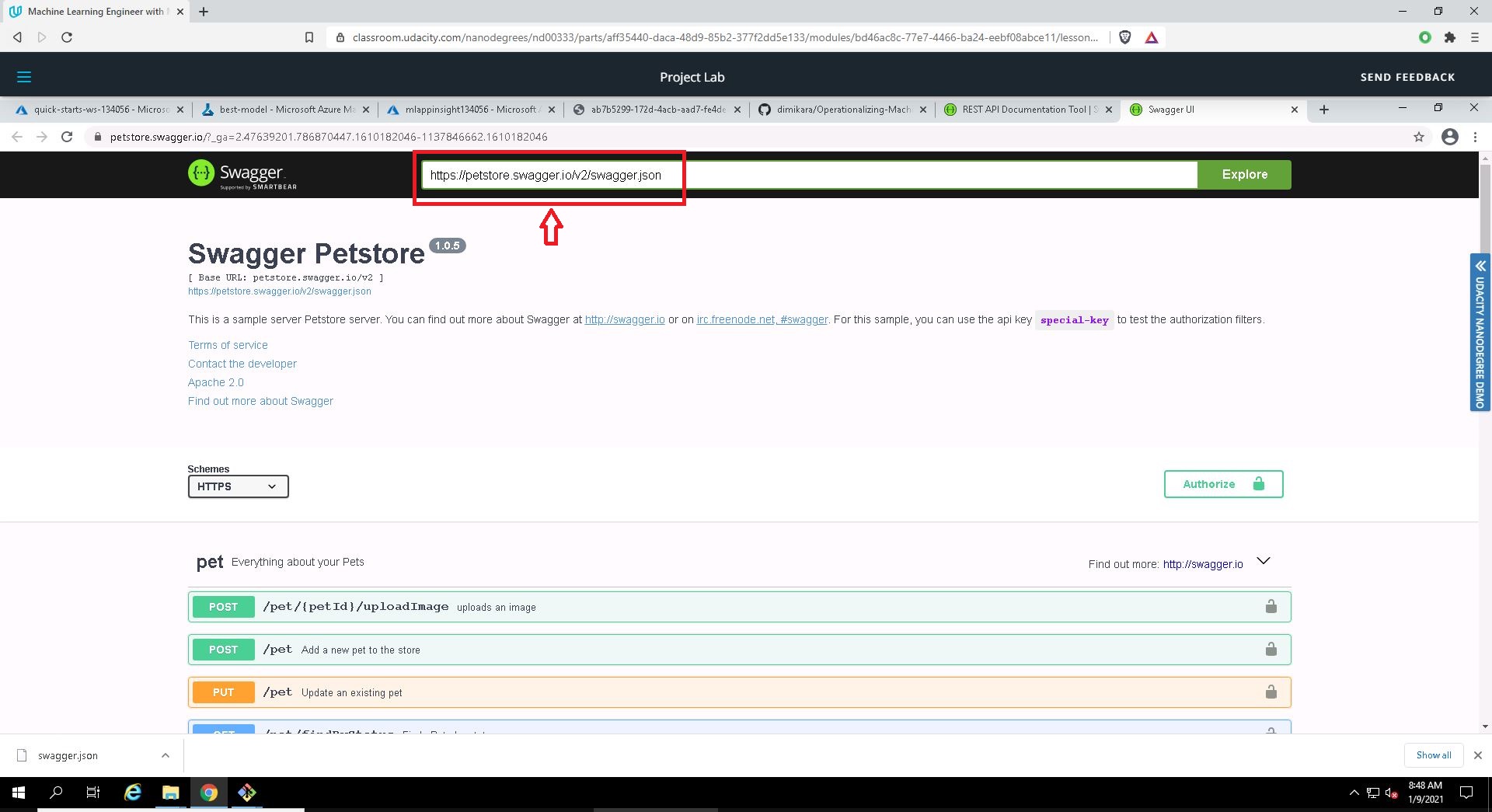

I click on the Live Demo button and am transferred to a demo page with a sample server:

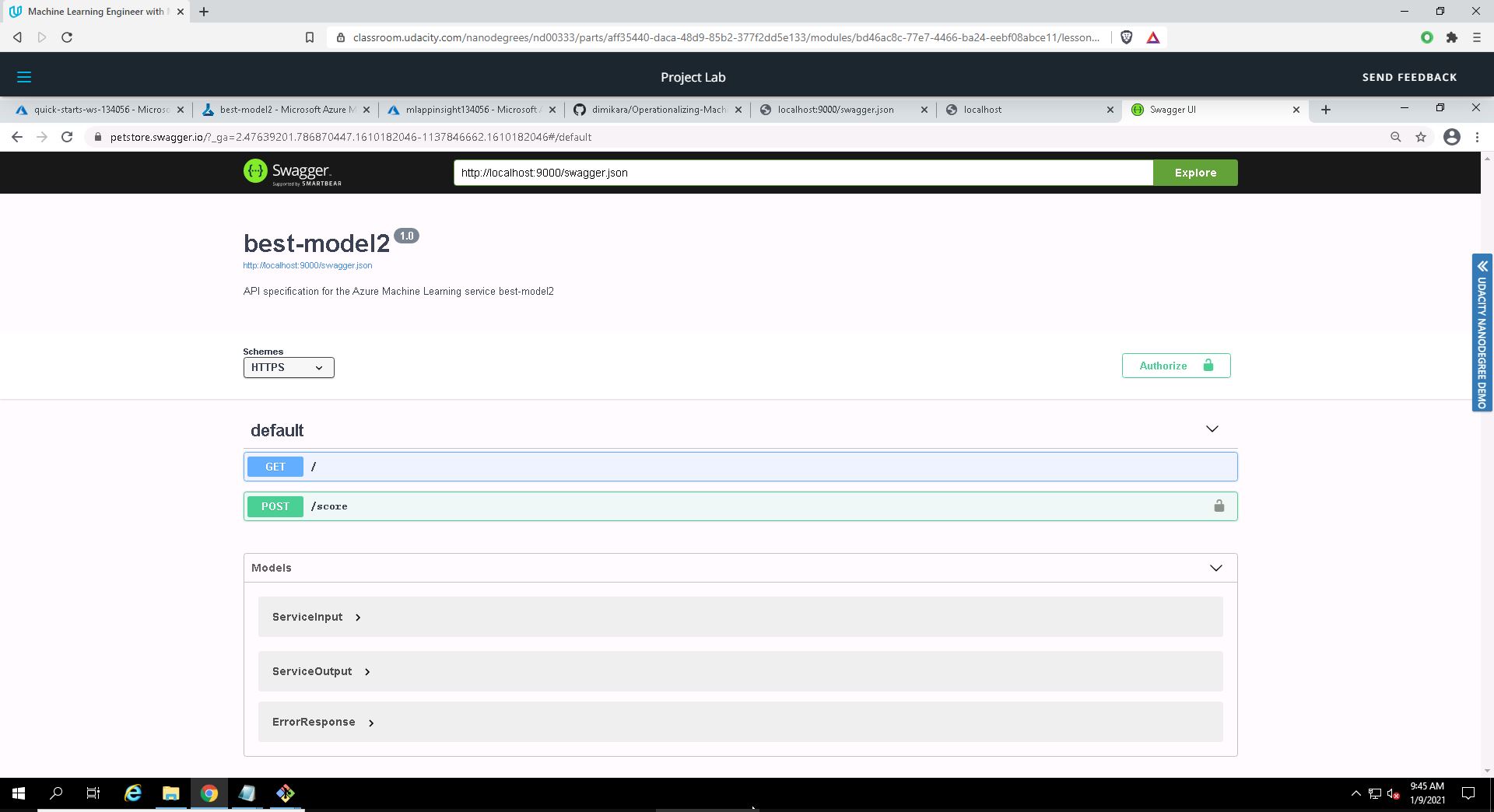

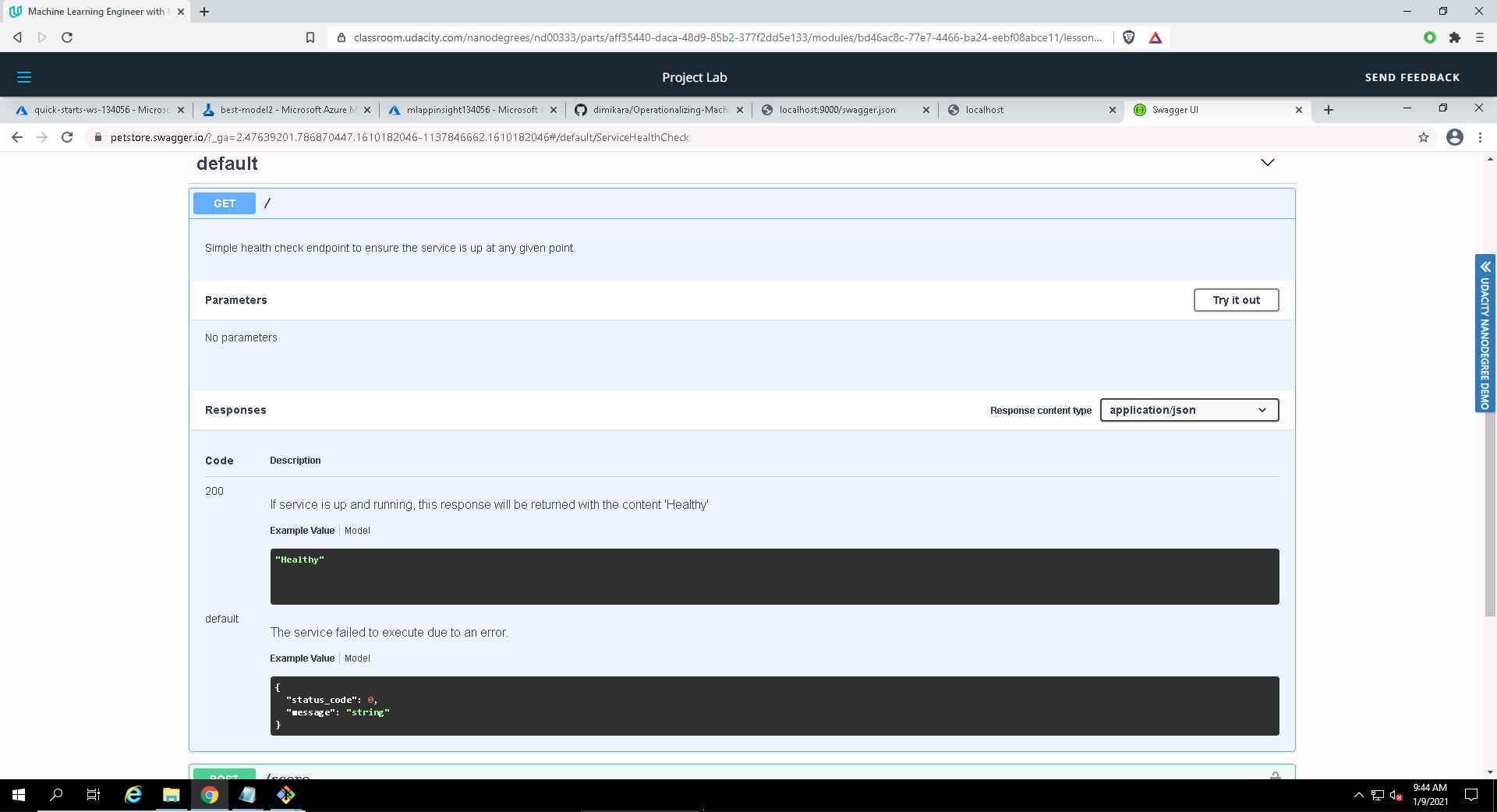

I delete the address in the address bar indicated by the red arrow and replace it with: http://localhost:9000/swagger.json. After hitting Explore, Swagger UI generates interactive API documentation that lets us try out the API calls directly in the browser.

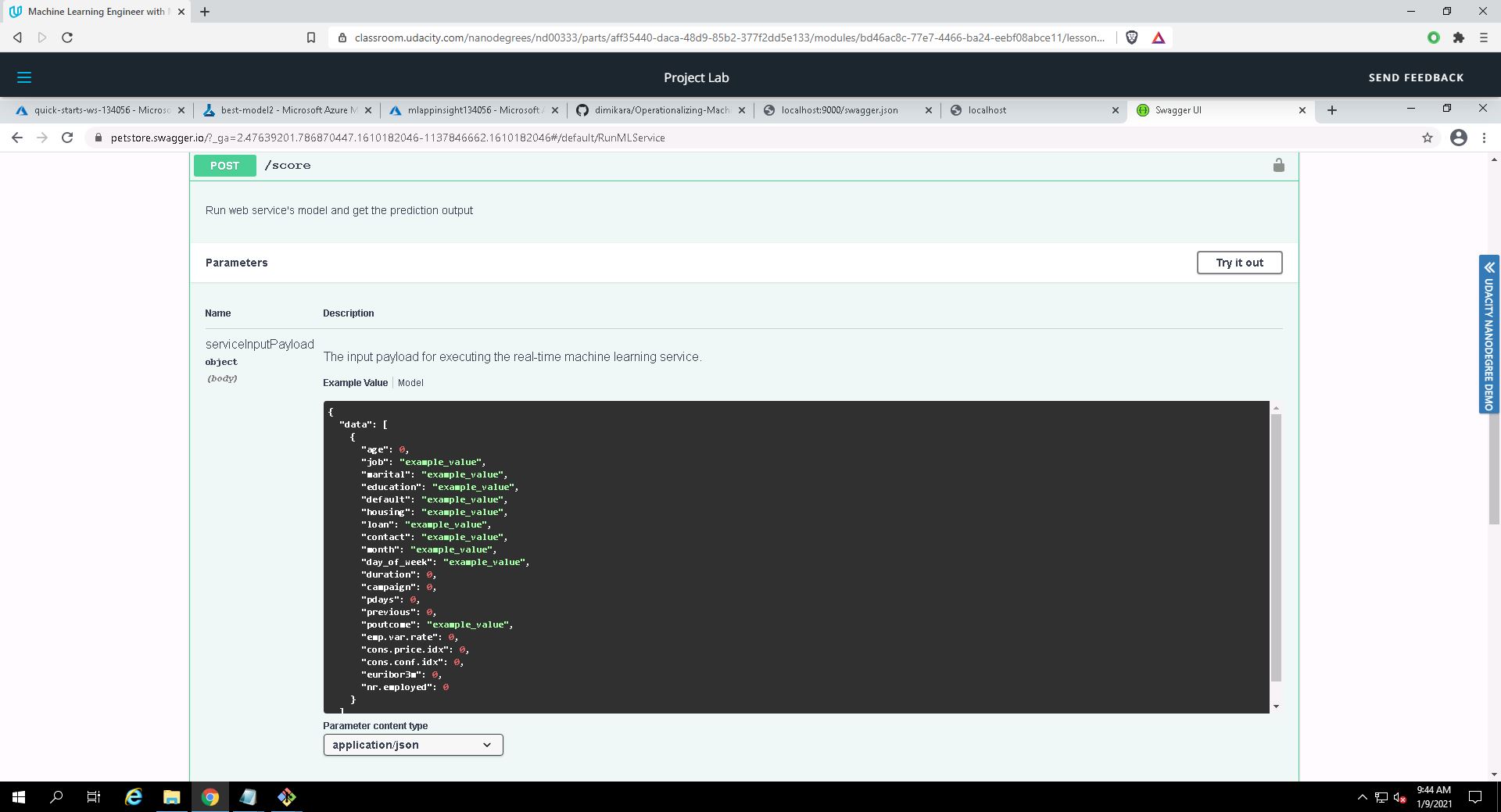

We can see below the HTTP API methods and responses for the model:

Swagger runs on localhost - GET & POST/score endpoints

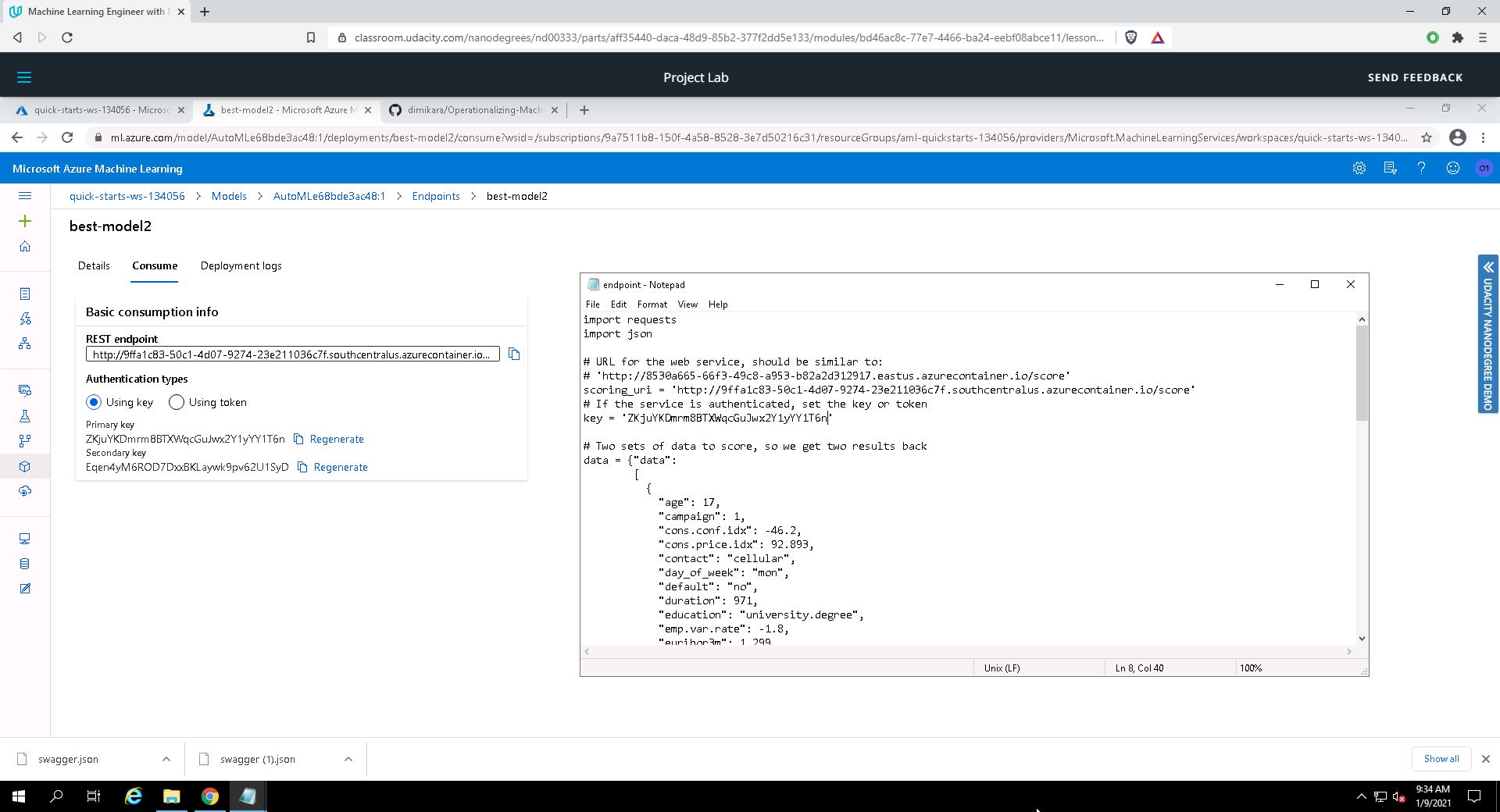
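The same information can also be read from the downloaded swagger.json without a browser. The sketch below lists the HTTP methods per path. The `sample_spec` dictionary is a hand-written stand-in reduced to the two operations mentioned above, not the real file.

```python
import json

HTTP_METHODS = {"get", "post", "put", "delete", "patch", "head", "options"}


def load_spec(path):
    """Read a Swagger/OpenAPI JSON file from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def list_operations(spec):
    """Return sorted (METHOD, path) pairs found under the spec's "paths"."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for method in item:
            # Path items may also hold non-method keys such as "parameters".
            if method.lower() in HTTP_METHODS:
                operations.append((method.upper(), path))
    return sorted(operations)


# Hand-written stand-in for the real file, for illustration only.
sample_spec = {"paths": {"/": {"get": {}}, "/score": {"post": {}}}}
print(list_operations(sample_spec))
```

For the real file, `list_operations(load_spec("swagger.json"))` would be the equivalent call.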

Once the best model is deployed, I consume its endpoint using the provided endpoint.py script, where I replace the values of scoring_uri and key with the corresponding values that appear in the Consume tab of the endpoint:

Consume Model Endpoints: running endpoint.py

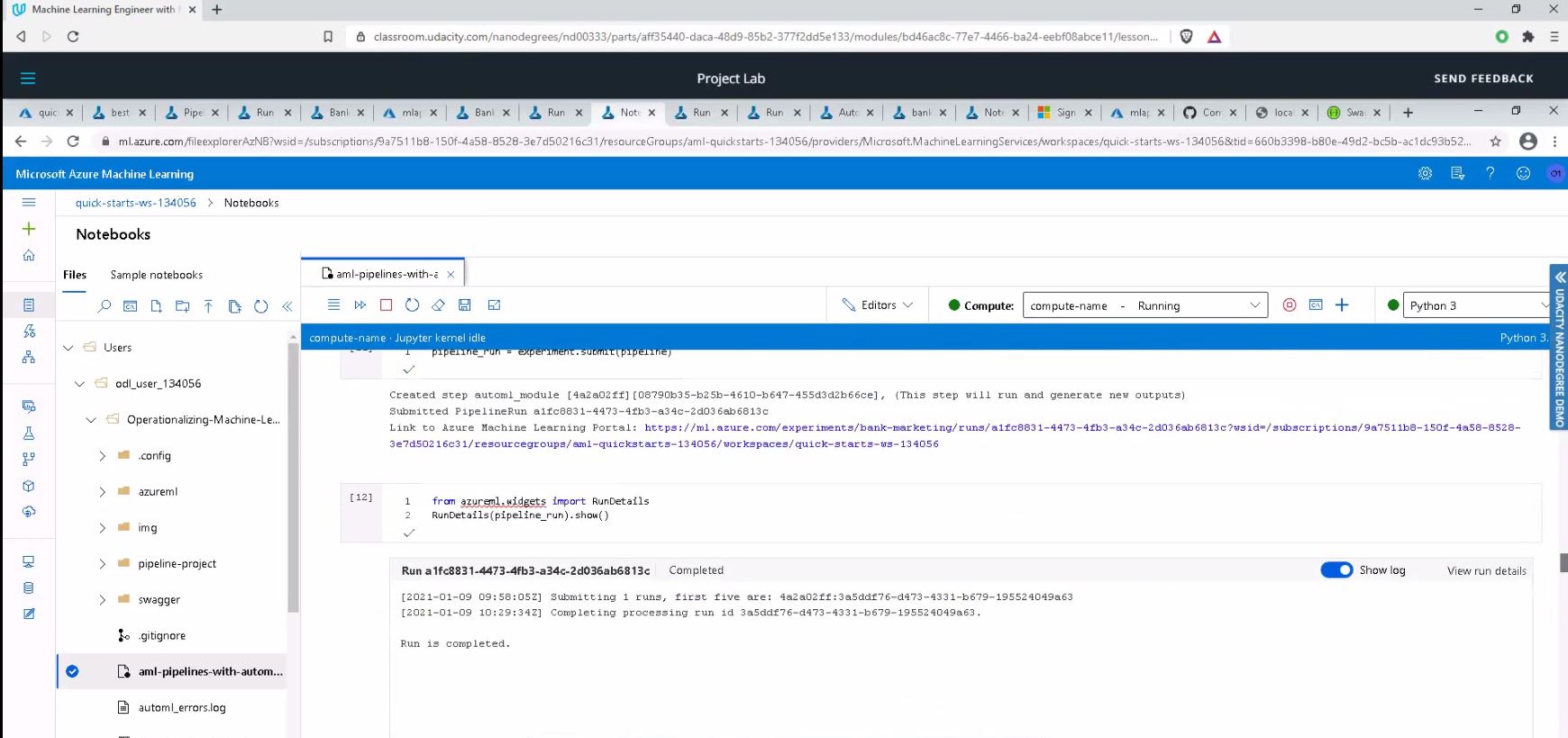
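For reference, a request of this kind can be sketched with the standard library alone. This is not the provided endpoint.py. The URI and key are placeholders, the record values are made up for illustration, and the `{"data": [...]}` payload layout is an assumption that should be checked against the swagger.json of the actual deployment.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, key, records):
    """Build (but do not send) an authenticated POST request for scoring."""
    body = json.dumps({"data": records}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # Key-based authentication: the service key is sent as a bearer token.
        "Authorization": f"Bearer {key}",
    }
    return urllib.request.Request(
        scoring_uri, data=body, headers=headers, method="POST"
    )


# One illustrative record using the feature columns of the bank marketing data.
record = {
    "age": 35, "job": "blue-collar", "marital": "married",
    "education": "university.degree", "default": "no", "housing": "yes",
    "loan": "no", "contact": "cellular", "month": "may",
    "day_of_week": "mon", "duration": 180, "campaign": 1, "pdays": 999,
    "previous": 0, "poutcome": "nonexistent", "emp.var.rate": -1.8,
    "cons.price.idx": 92.893, "cons.conf.idx": -46.2,
    "euribor3m": 1.299, "nr.employed": 5099.1,
}

# Placeholder URI and key; replace them with the values from the Consume tab.
request = build_scoring_request(
    "http://example.invalid/score", "PLACEHOLDER_KEY", [record]
)
print(request.get_method(), request.full_url)
```

Sending the request would then be a matter of calling `urllib.request.urlopen(request)` and reading the response body.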

In this second part of the project, I use the Jupyter Notebook provided: aml-pipelines-with-automated-machine-learning-step.ipynb. The notebook is updated so as to have the same dataset, keys, URI, cluster, and model names that I created in the first part.

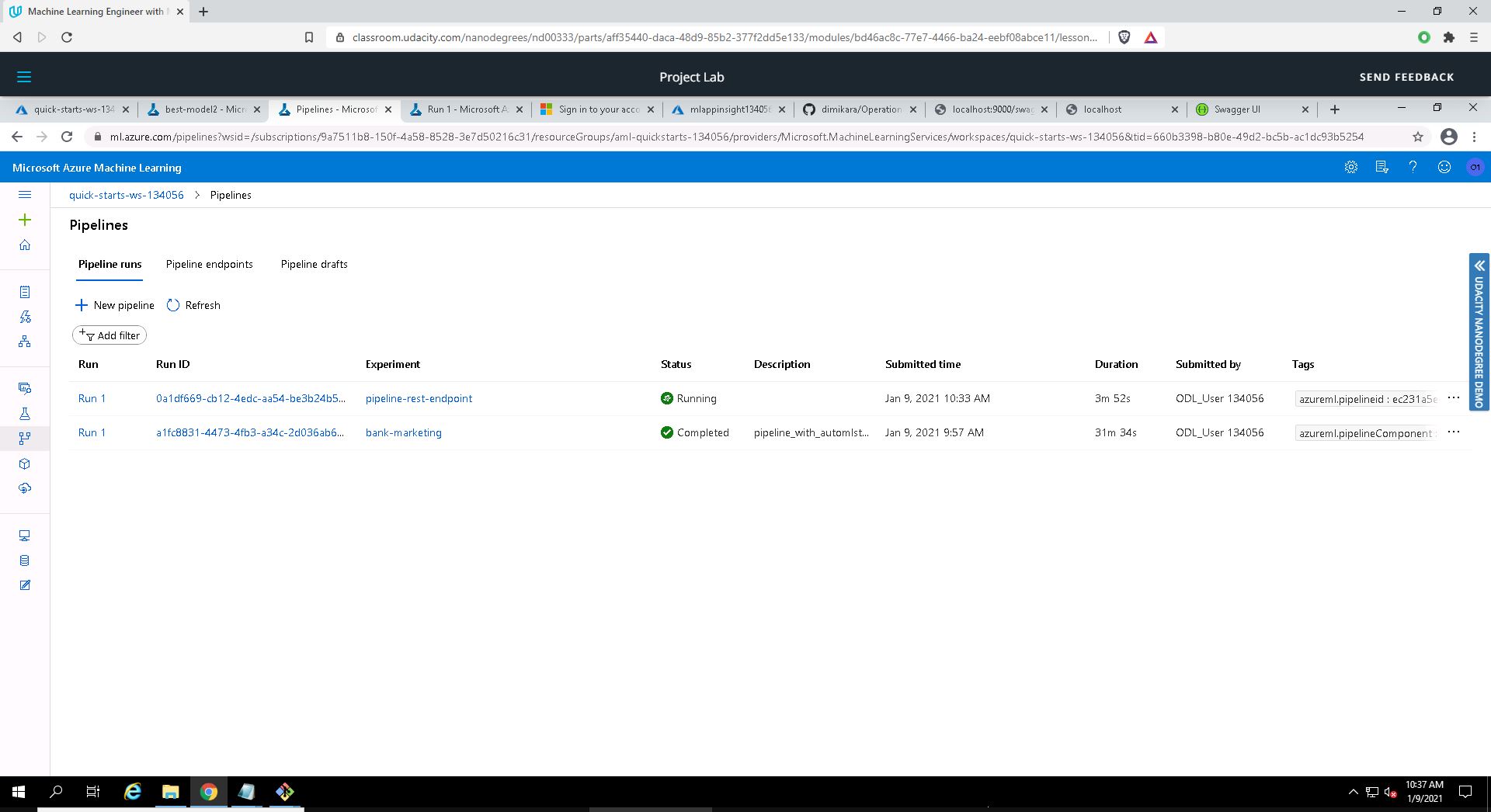

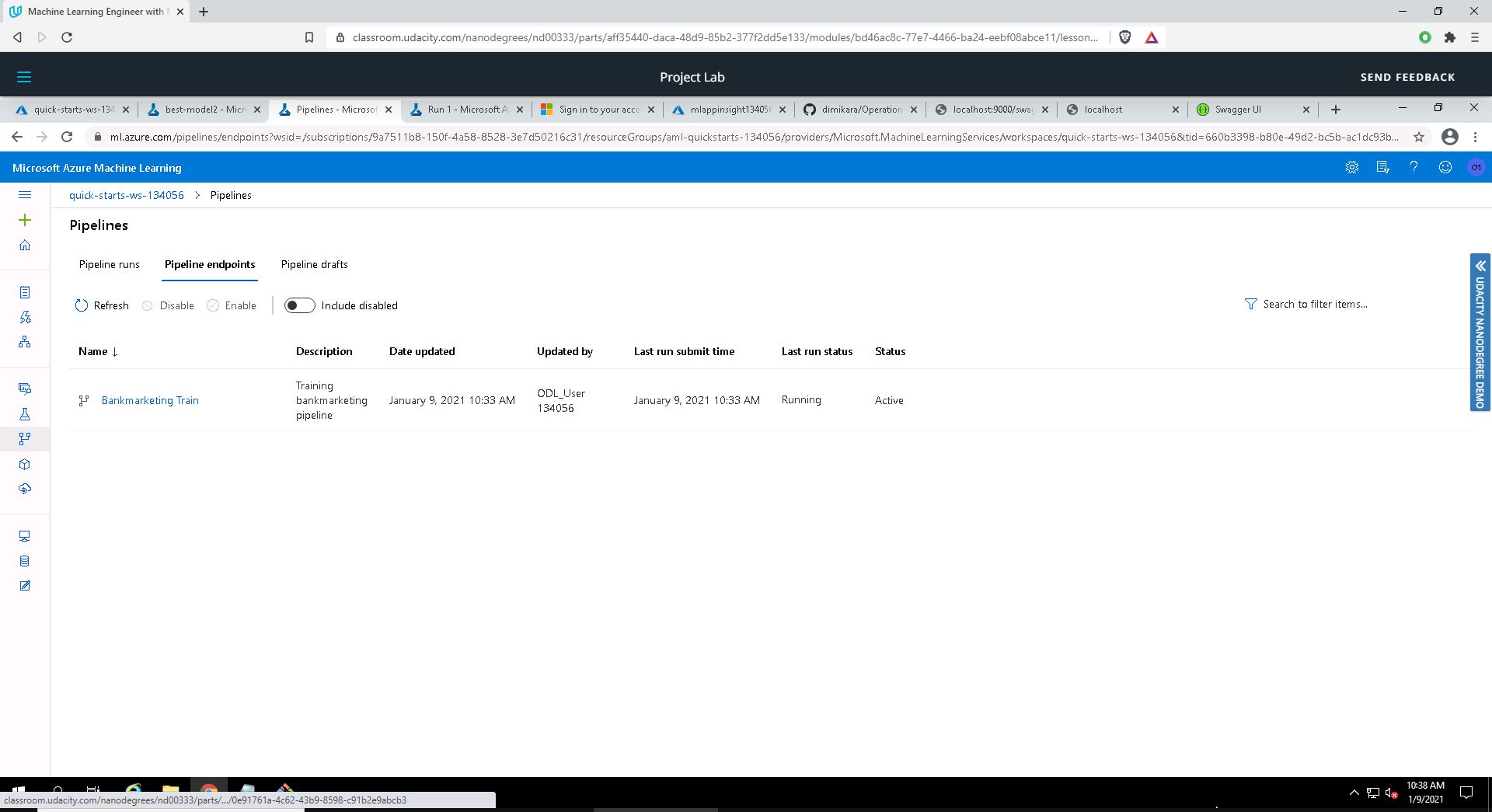

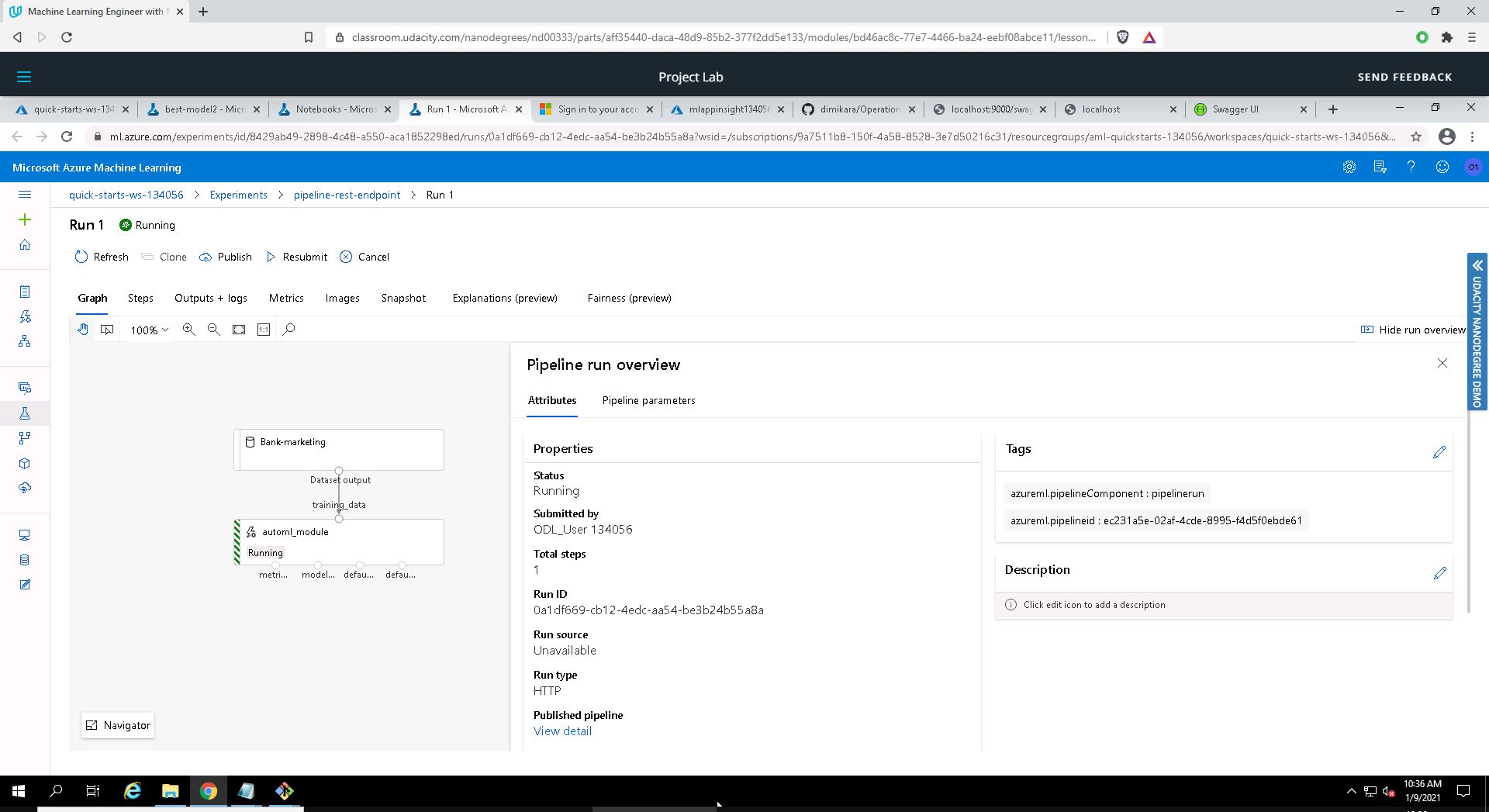

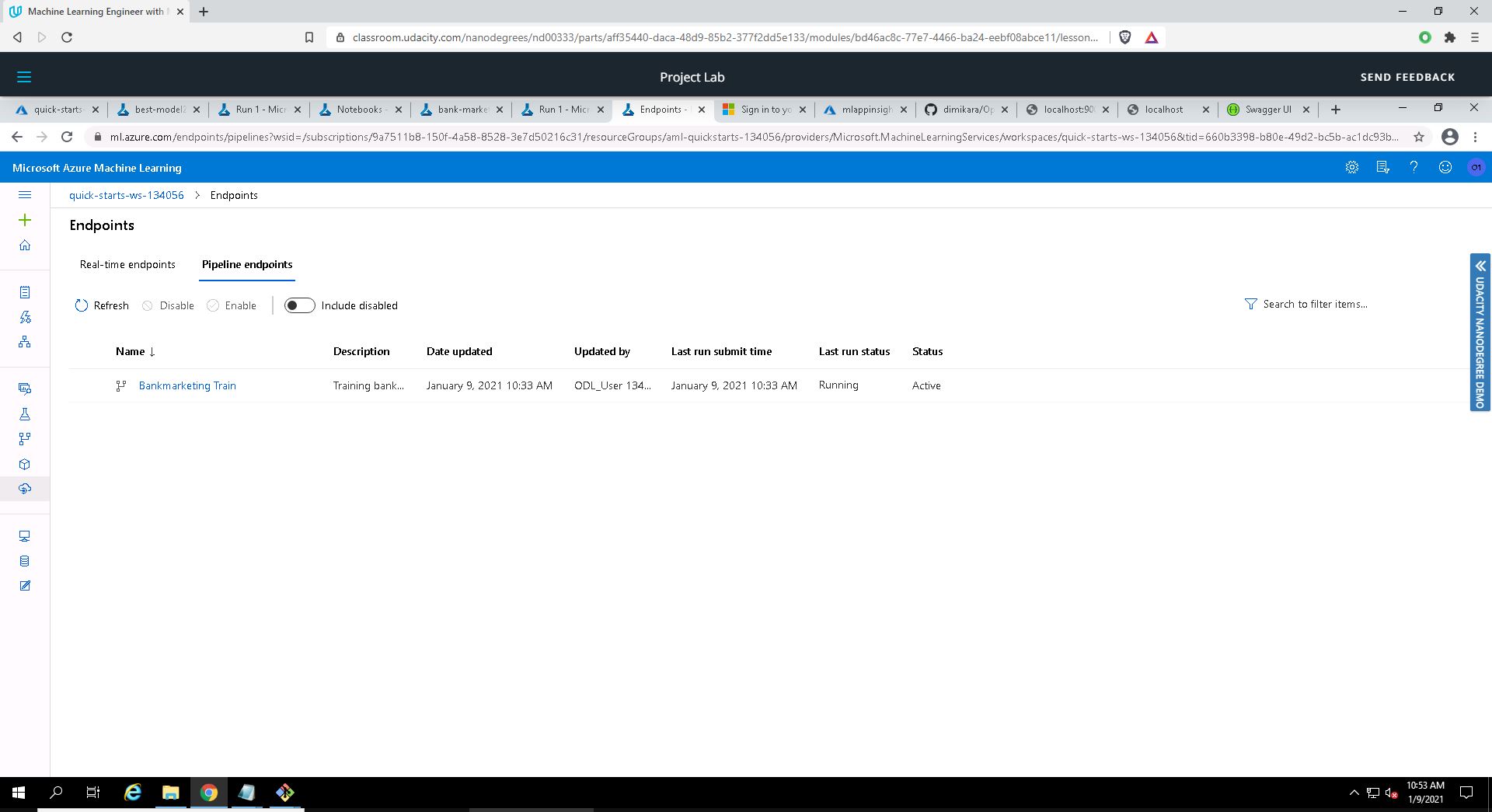

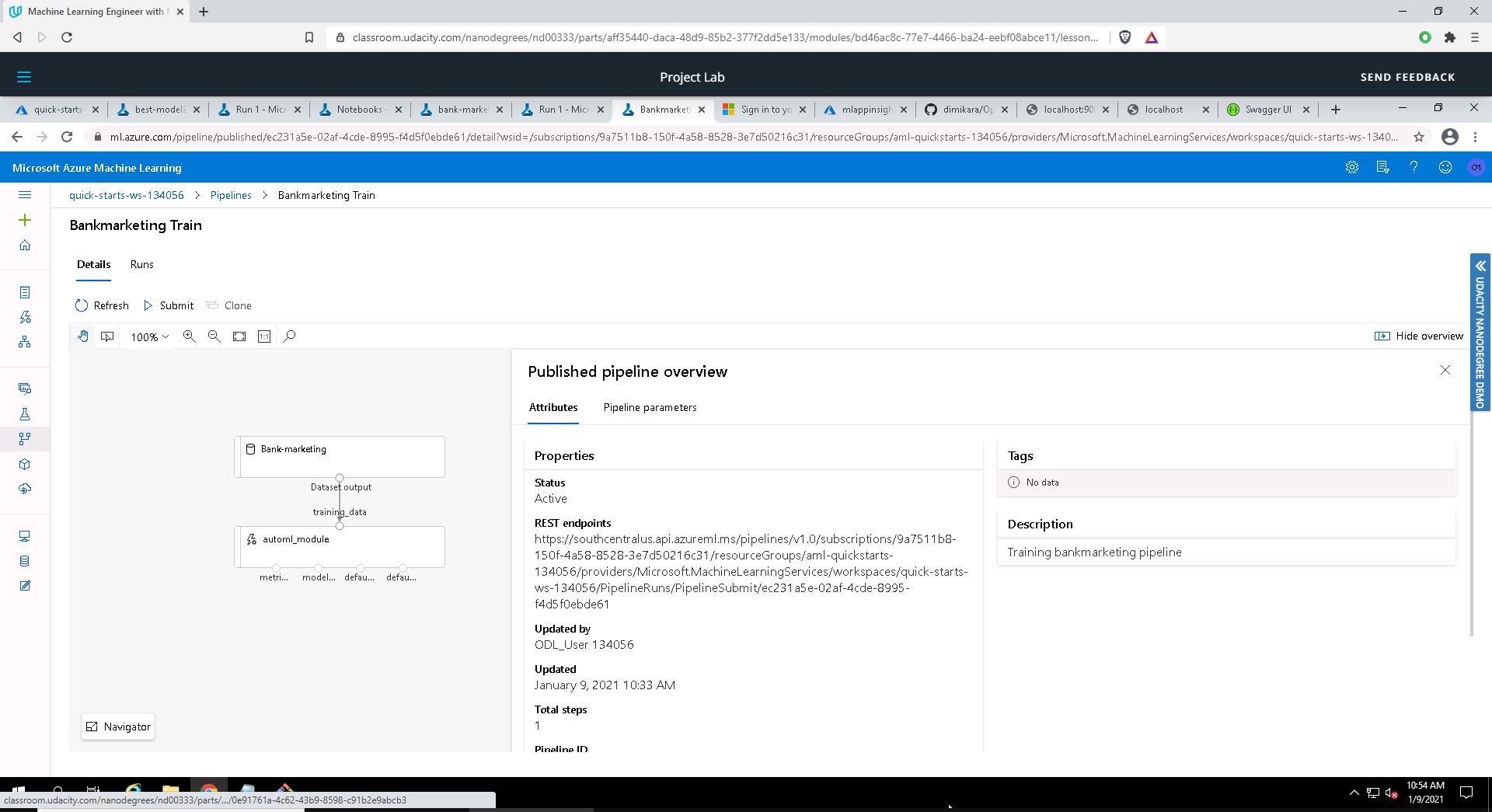

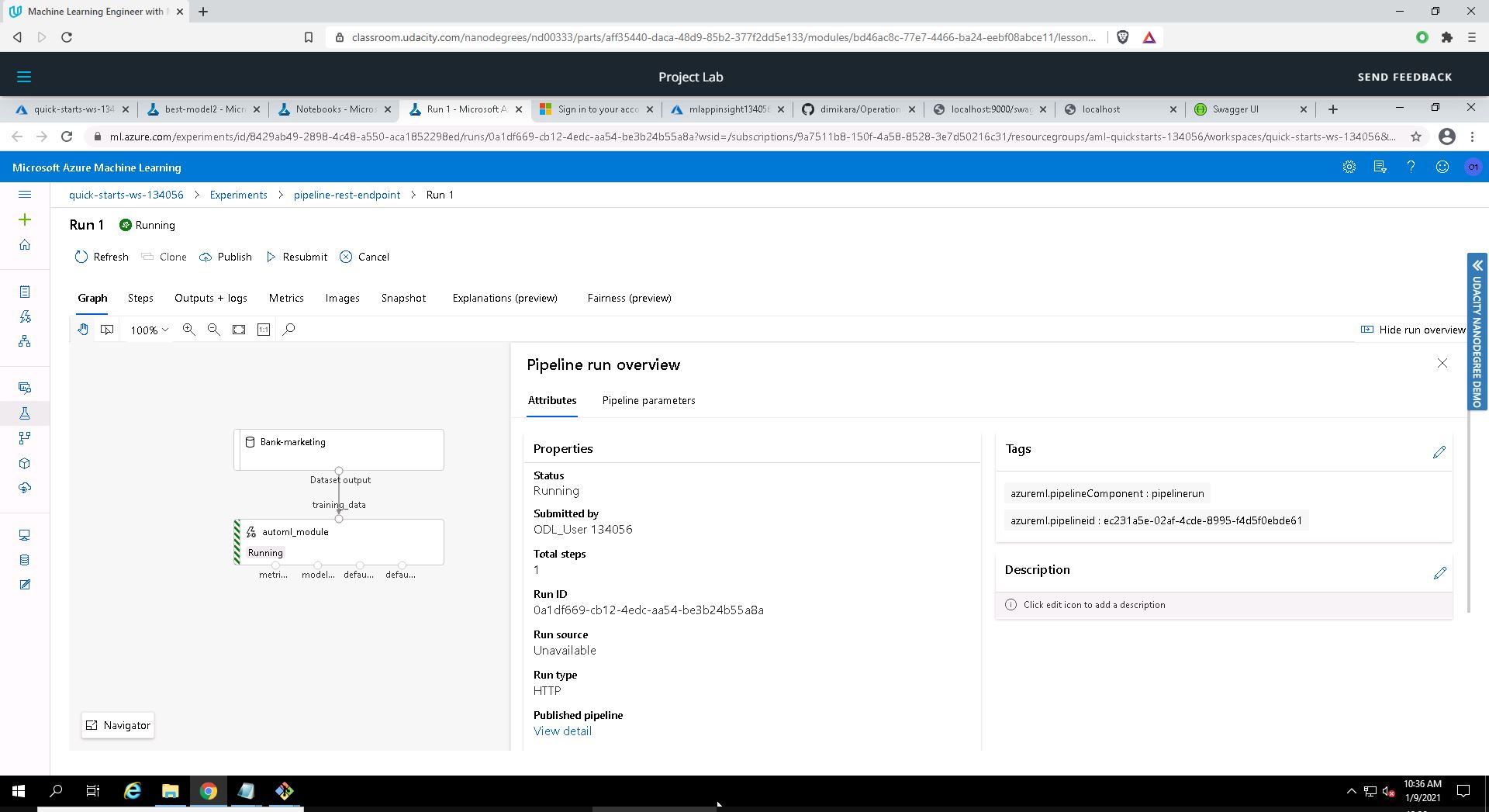

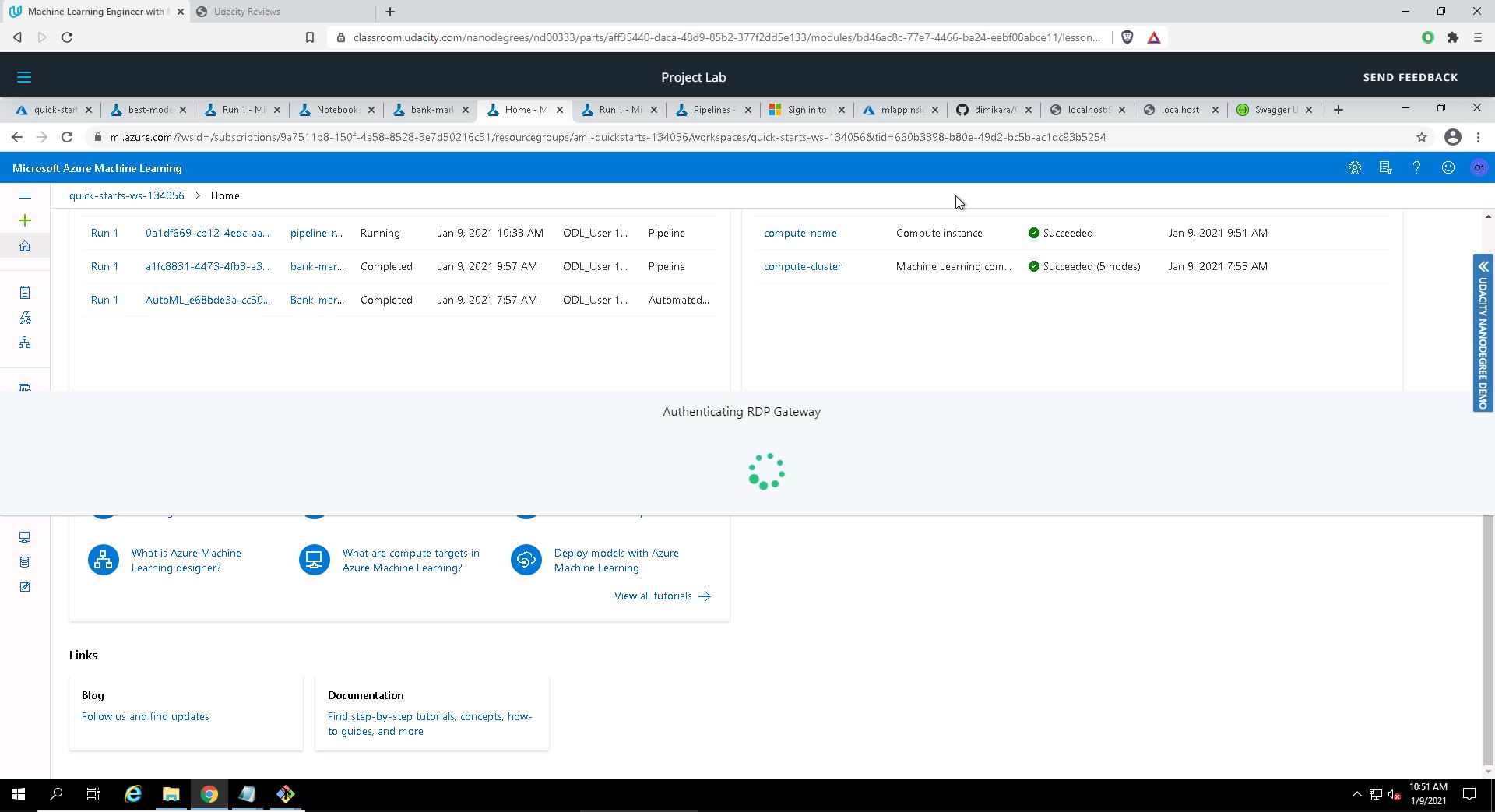

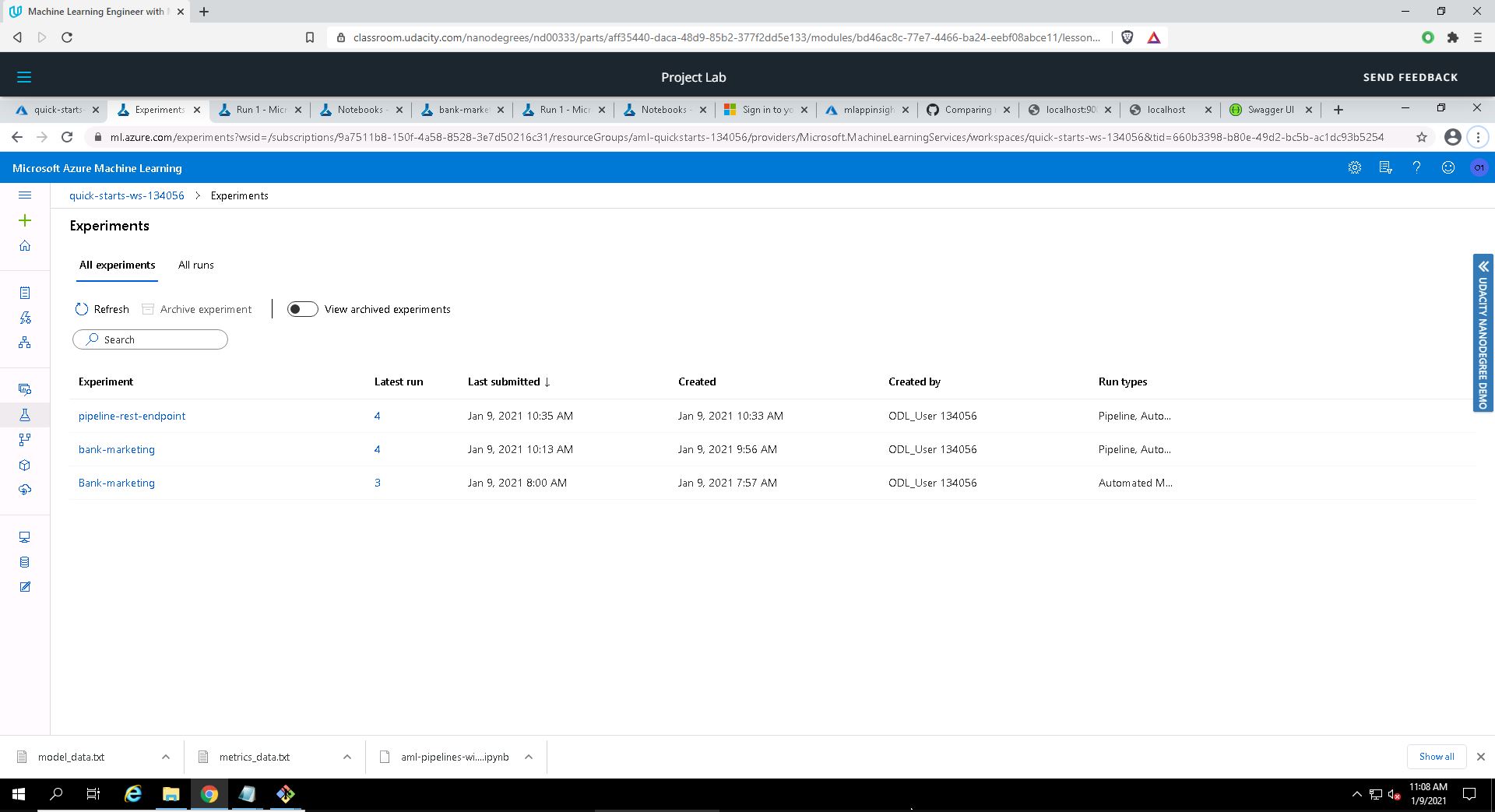

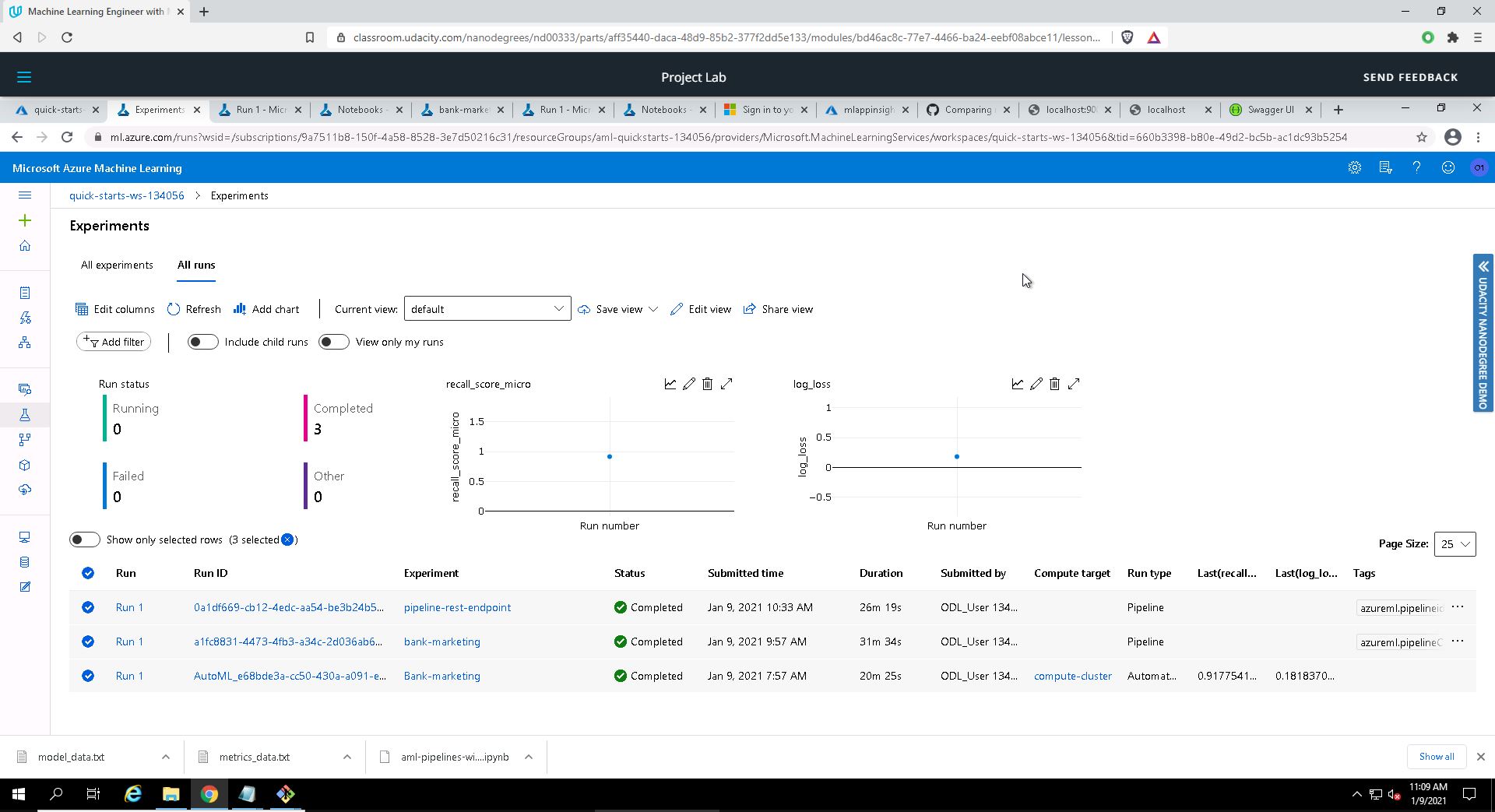

The purpose of this step is to create, publish and consume a pipeline using the Azure Python SDK. We can see below the relevant screenshots:

The Pipelines section of Azure ML Studio

Bankmarketing dataset with the AutoML module

Published Pipeline Overview showing a REST endpoint and an ACTIVE status

Jupyter Notebook: RunDetails Widget shows the step runs

In ML Studio: Completed run
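Once a pipeline is published, its REST endpoint can be triggered with an authenticated POST request. The sketch below only builds such a request. The endpoint URL, the token, and the experiment name are placeholders, and I am assuming the request body carries an `ExperimentName` field, as in the notebook's own REST call. In the SDK, the authorization header would typically come from `InteractiveLoginAuthentication().get_authentication_header()`.

```python
import json
import urllib.request


def build_pipeline_trigger(rest_endpoint, auth_header, experiment_name):
    """Build (but do not send) the POST request that starts a pipeline run."""
    headers = {"Content-Type": "application/json", **auth_header}
    body = json.dumps({"ExperimentName": experiment_name}).encode("utf-8")
    return urllib.request.Request(
        rest_endpoint, data=body, headers=headers, method="POST"
    )


# Placeholder values for illustration only.
trigger = build_pipeline_trigger(
    "http://example.invalid/pipelines/placeholder-id",
    {"Authorization": "Bearer PLACEHOLDER_TOKEN"},
    "pipeline-rest-endpoint",
)
print(trigger.get_method(), trigger.full_url)
```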

The screen recording can be found here and it shows the project in action. More specifically, the screencast demonstrates:

- The working deployed ML model endpoint

- The deployed Pipeline

- Available AutoML Model

- Successful API requests to the endpoint with a JSON payload

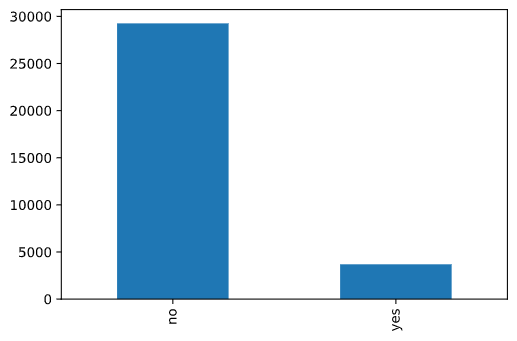

- As I have pointed out in the 1st project as well, the data is highly imbalanced:

Although AutoML normally takes this imbalance into account automatically, there may still be room to improve the model's accuracy in predicting the minority class. For example, we could use Random Under-Sampling of the majority class, Random Over-Sampling of the minority class, or even try different algorithms.
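As an illustration of the over-sampling idea, here is a minimal pure-Python sketch of Random Over-Sampling. It is not something I ran in this project. The toy rows are invented, and the function name and `label_key` parameter are my own.

```python
import random
from collections import Counter


def random_oversample(rows, label_key="y", seed=0):
    """Duplicate randomly chosen minority rows until all classes are equal."""
    if not rows:
        return []
    rng = random.Random(seed)
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Sample with replacement to fill the gap up to the largest class.
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced


# Invented toy data: 8 "no" rows and 2 "yes" rows.
toy_rows = [{"age": 30 + i, "y": "no"} for i in range(8)]
toy_rows += [{"age": 50 + i, "y": "yes"} for i in range(2)]

balanced_rows = random_oversample(toy_rows)
print(Counter(row["y"] for row in balanced_rows))
```

Resampling of this kind should be applied to the training split only, so that the evaluation data keeps the original class distribution.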

A side note here: out of curiosity, I clicked the 'Data guardrails' tab (see screenshots above, step 3) and found many interesting observations made by the Azure analysis. Unfortunately, I ran out of time and was not able to look into this in more detail. My remark here is that, even though I understand that there must be time constraints on our runs, they can impede in-depth learning, because we miss the chance to browse around and explore the many extra but less important things; this is really a pity. As a suggestion, it would be interesting to create a virtual environment with everything running in simulation (and therefore at no actual cost) where the learner could freely look around.

- Another factor that could improve the model is increasing the training time. This suggestion might be seen as a no-brainer, but it would also increase costs, and there must always be a balance between the minimum required accuracy and the assigned budget.

- I could not help but wonder how much more accurate the resulting model would be if Deep Learning was used, as we were specifically instructed NOT to enable it in the AutoML settings. While searching for more info, I found this very interesting article in Microsoft Docs: Deep learning vs. machine learning in Azure Machine Learning. It says that deep learning excels at identifying patterns in unstructured data such as images, sound, video, and text. In my understanding, it might be overkill to use it in a classification problem like this.

- Lastly, something that could be taken into account is any future change in the dataset that could impact the accuracy of the model. I do not have any experience with how this could be done in an automated way, but I am sure that a method exists and can be found if and when such a need arises.

[Moro et al., 2014] S. Moro, P. Cortez and P. Rita. A Data-Driven Approach to Predict the Success of Bank Telemarketing. Decision Support Systems, Elsevier, 62:22-31, June 2014.

- Udacity Nanodegree material

- App used for the creation of the Architectural Diagram

- Prevent overfitting and imbalanced data with automated machine learning

- Dr. Ware: Dealing with Imbalanced Data in AutoML

- Microsoft Tech Community: Dealing with Imbalanced Data in AutoML

- A very interesting paper on the imbalanced classes issue: Analysis of Imbalance Strategies Recommendation using a Meta-Learning Approach

- Imbalanced Data : How to handle Imbalanced Classification Problems

- Consume an Azure Machine Learning model deployed as a web service

- Deep learning vs. machine learning in Azure Machine Learning

- A Review of Azure Automated Machine Learning (AutoML)

- Supported data guardrails

- Online Video Cutter