A simple implementation of the Rotation Forest algorithm [1, 2], derived from scikit-learn's random forest module.

For each tree in the ensemble:

Split the attributes (features) of the training set into K non-overlapping subsets of equal size.
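The feature split can be sketched with NumPy as below. This is a minimal sketch, not this repository's code: the function name `split_features` is hypothetical, and assigning features to subsets at random is an assumption. When K does not divide the number of features, `np.array_split` makes the subset sizes differ by at most one.

```python
import numpy as np


def split_features(n_features, k, rng):
    """Hypothetical helper: randomly partition feature indices into k disjoint subsets.

    np.array_split returns equal sizes when k divides n_features; otherwise
    the sizes differ by at most one.
    """
    shuffled = rng.permutation(n_features)  # every index appears exactly once
    return np.array_split(shuffled, k)


rng = np.random.default_rng(0)
subsets = split_features(n_features=6, k=3, rng=rng)
# Three arrays of two feature indices each, together covering 0..5 exactly once.
```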

For each of the K subsets, draw a bootstrap sample of þ% of the training data and use these samples in the following steps:

- Run PCA on the i-th feature subset (using its bootstrap sample) and retain all principal components. Every feature j in the i-th subset has a corresponding principal component a_ij, so a subset of m features yields m components.

- Create an n × n rotation matrix, where n is the total number of features. Place each subset's principal-component coefficients in the rows and columns that correspond to that subset's features in the original training dataset, leaving all other entries zero.

- Project the training dataset onto the rotation matrix (multiply the data matrix by it).

- Build a decision tree on the projected dataset.

- Store the tree and the rotation matrix.
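The steps above for a single tree can be sketched with scikit-learn's `PCA` and `DecisionTreeClassifier`. This is a hedged illustration, not the repository's implementation: `build_rotation_matrix` and `build_rotation_tree` are hypothetical names, `fraction` stands in for þ% (0.75 is only an example value), and the sketch assumes each bootstrap sample has at least as many rows as its subset has features so PCA returns a full square block. It also uses the PCA loadings directly, without subtracting PCA's fitted mean, which is a choice of this sketch.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.tree import DecisionTreeClassifier


def build_rotation_matrix(X, k, fraction, rng):
    """Hypothetical helper: n x n rotation matrix from per-subset PCA loadings."""
    n_samples, n_features = X.shape
    R = np.zeros((n_features, n_features))
    size = max(1, int(round(fraction * n_samples)))
    # Random disjoint feature subsets (sizes may differ by one if k does not divide n).
    for cols in np.array_split(rng.permutation(n_features), k):
        # Bootstrap rows for this subset, then run PCA keeping every component.
        rows = rng.choice(n_samples, size=size, replace=True)
        pca = PCA().fit(X[np.ix_(rows, cols)])
        # components_ has shape (n_components, len(cols)); its transpose puts the
        # loadings of feature cols[a] in row cols[a] and component b in column cols[b],
        # so the block lines up with the original feature positions.
        R[np.ix_(cols, cols)] = pca.components_.T
    return R


def build_rotation_tree(X, y, k=3, fraction=0.75, rng=None):
    """Hypothetical helper: fit one tree on the rotated data and return (tree, R)."""
    rng = np.random.default_rng() if rng is None else rng
    R = build_rotation_matrix(X, k, fraction, rng)
    tree = DecisionTreeClassifier().fit(X @ R, y)  # project, then fit
    return tree, R


rng = np.random.default_rng(0)
X = rng.normal(size=(100, 6))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
tree, R = build_rotation_tree(X, y, k=3, fraction=0.75, rng=rng)
# Store the pair: new data must be rotated with the same R before predicting.
y_hat = tree.predict(X @ R)
```

Because every PCA block is orthonormal and the blocks occupy disjoint rows and columns, the resulting R is an orthogonal matrix, so the projection is a pure rotation of the feature space.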

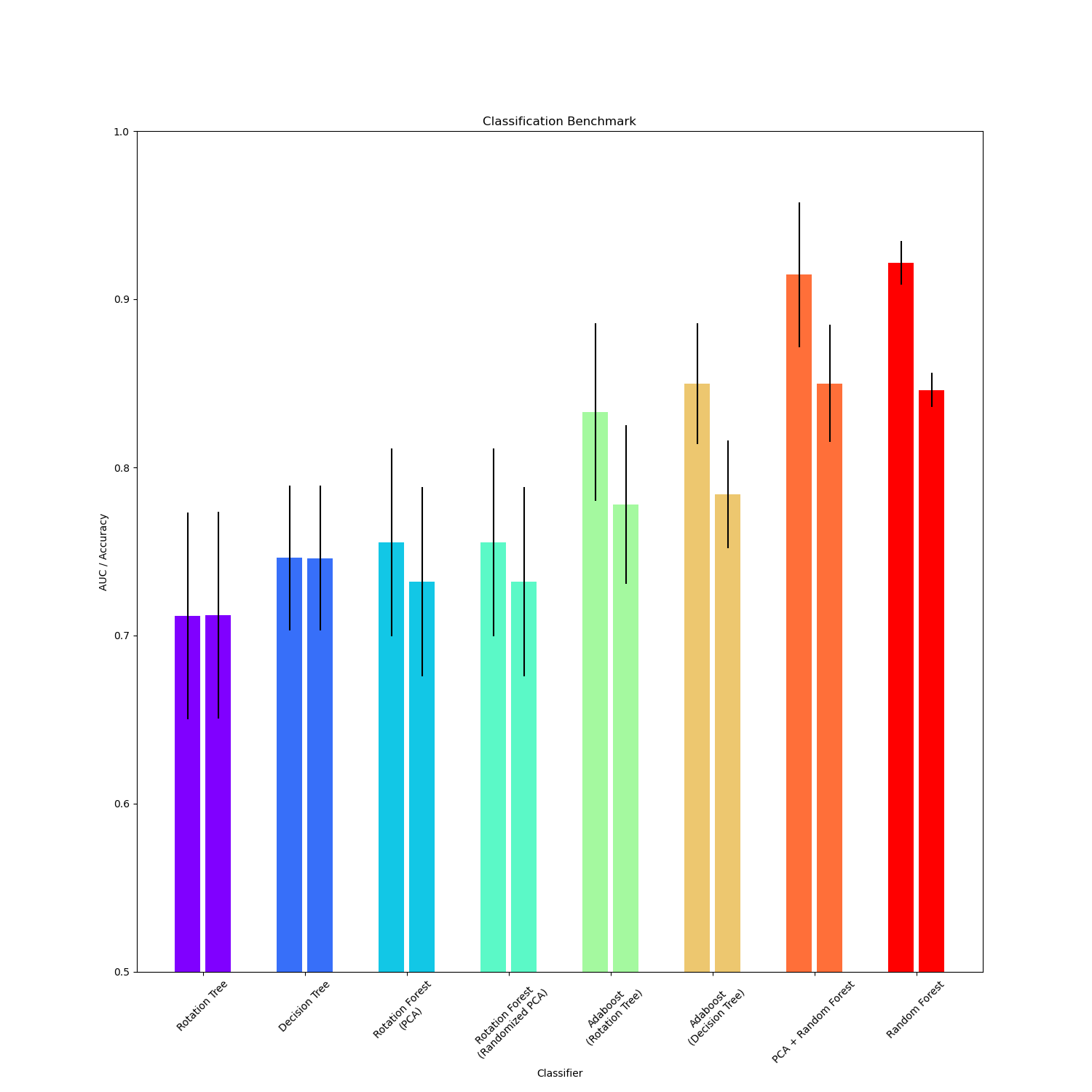

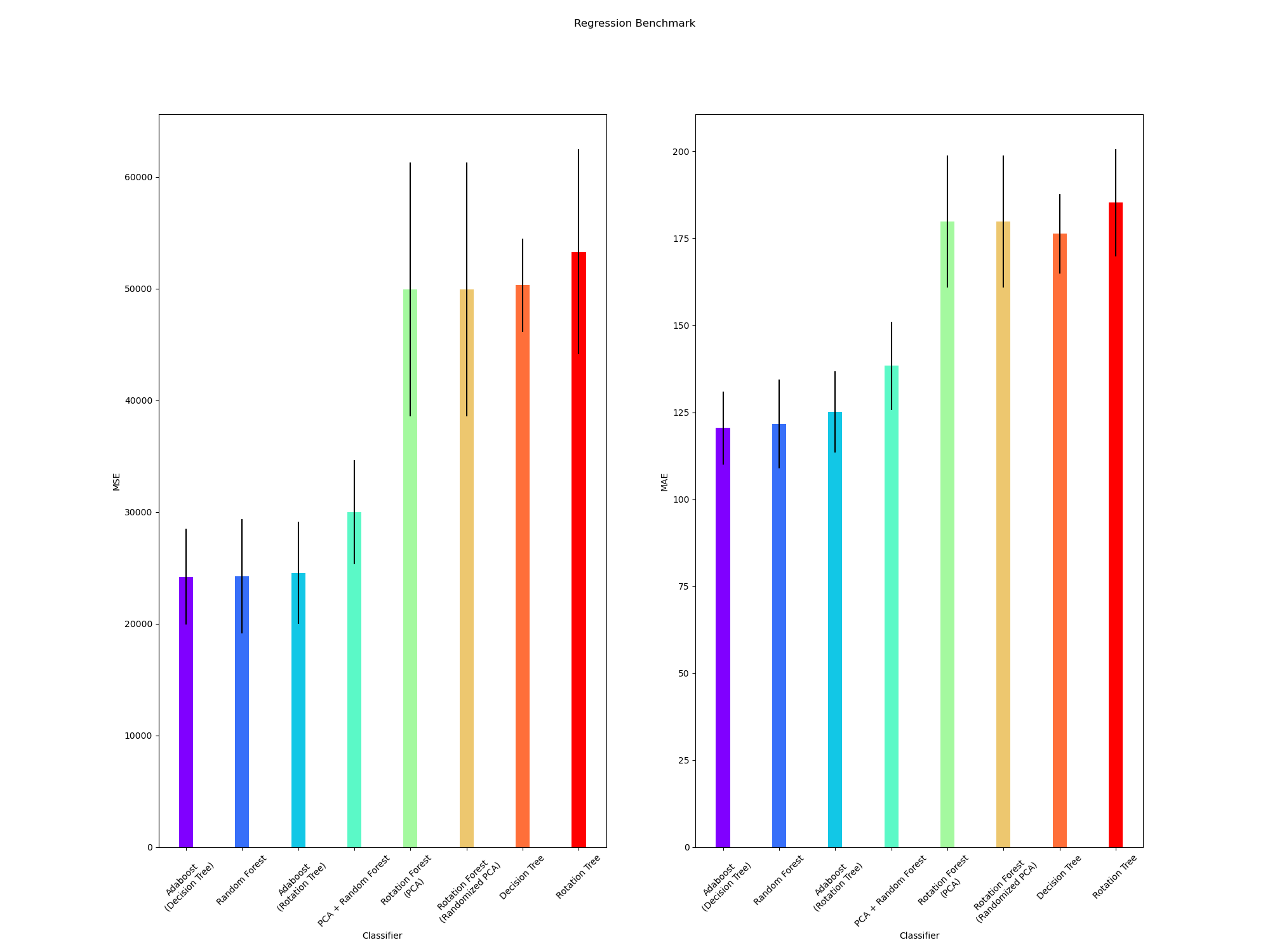

Rotation Forest is implemented both as a classifier [1] and as a regressor [2].
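A minimal ensemble wrapper showing how the stored (tree, rotation matrix) pairs could serve both tasks is sketched below. The class names `SimpleRotationForestClassifier` and `SimpleRotationForestRegressor` are hypothetical and are not this repository's API; combining classifier outputs by averaging `predict_proba` and regressor outputs by taking the mean are assumptions of this sketch.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor


def _rotation_matrix(X, k, fraction, rng):
    """Block rotation matrix built from per-subset PCA loadings."""
    n_samples, n_features = X.shape
    R = np.zeros((n_features, n_features))
    size = max(1, int(round(fraction * n_samples)))
    for cols in np.array_split(rng.permutation(n_features), k):
        rows = rng.choice(n_samples, size=size, replace=True)  # bootstrap rows
        R[np.ix_(cols, cols)] = PCA().fit(X[np.ix_(rows, cols)]).components_.T
    return R


class _SimpleRotationForest:
    """Hypothetical base class: stores one (tree, R) pair per ensemble member."""

    def __init__(self, tree_factory, n_trees=10, k=3, fraction=0.75, seed=0):
        self.tree_factory = tree_factory
        self.n_trees = n_trees
        self.k = k
        self.fraction = fraction
        self.rng = np.random.default_rng(seed)

    def fit(self, X, y):
        self.members_ = []
        for _ in range(self.n_trees):
            R = _rotation_matrix(X, self.k, self.fraction, self.rng)
            tree = self.tree_factory().fit(X @ R, y)  # every tree sees the full rotated set
            self.members_.append((tree, R))
        return self


class SimpleRotationForestRegressor(_SimpleRotationForest):
    def __init__(self, **kwargs):
        super().__init__(DecisionTreeRegressor, **kwargs)

    def predict(self, X):
        # Average the numeric predictions of all trees.
        return np.mean([t.predict(X @ R) for t, R in self.members_], axis=0)


class SimpleRotationForestClassifier(_SimpleRotationForest):
    def __init__(self, **kwargs):
        super().__init__(DecisionTreeClassifier, **kwargs)

    def predict(self, X):
        # Average class probabilities; all trees share classes_ since they fit the same y.
        probs = np.mean([t.predict_proba(X @ R) for t, R in self.members_], axis=0)
        return self.members_[0][0].classes_[probs.argmax(axis=1)]


rng = np.random.default_rng(0)
X = rng.normal(size=(150, 6))
y = (X[:, 0] > X[:, 1]).astype(int)
clf = SimpleRotationForestClassifier(n_trees=5, k=3, seed=0).fit(X, y)
labels = clf.predict(X[:5])
```

The only difference between the two variants is the base tree and how member outputs are combined; the rotation step is shared.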