BirdSAT: Cross-View Contrastive Masked Autoencoders for Bird Species Classification and Mapping

Srikumar Sastry, Subash Khanal, Aayush Dhakal, Di Huang, Nathan Jacobs

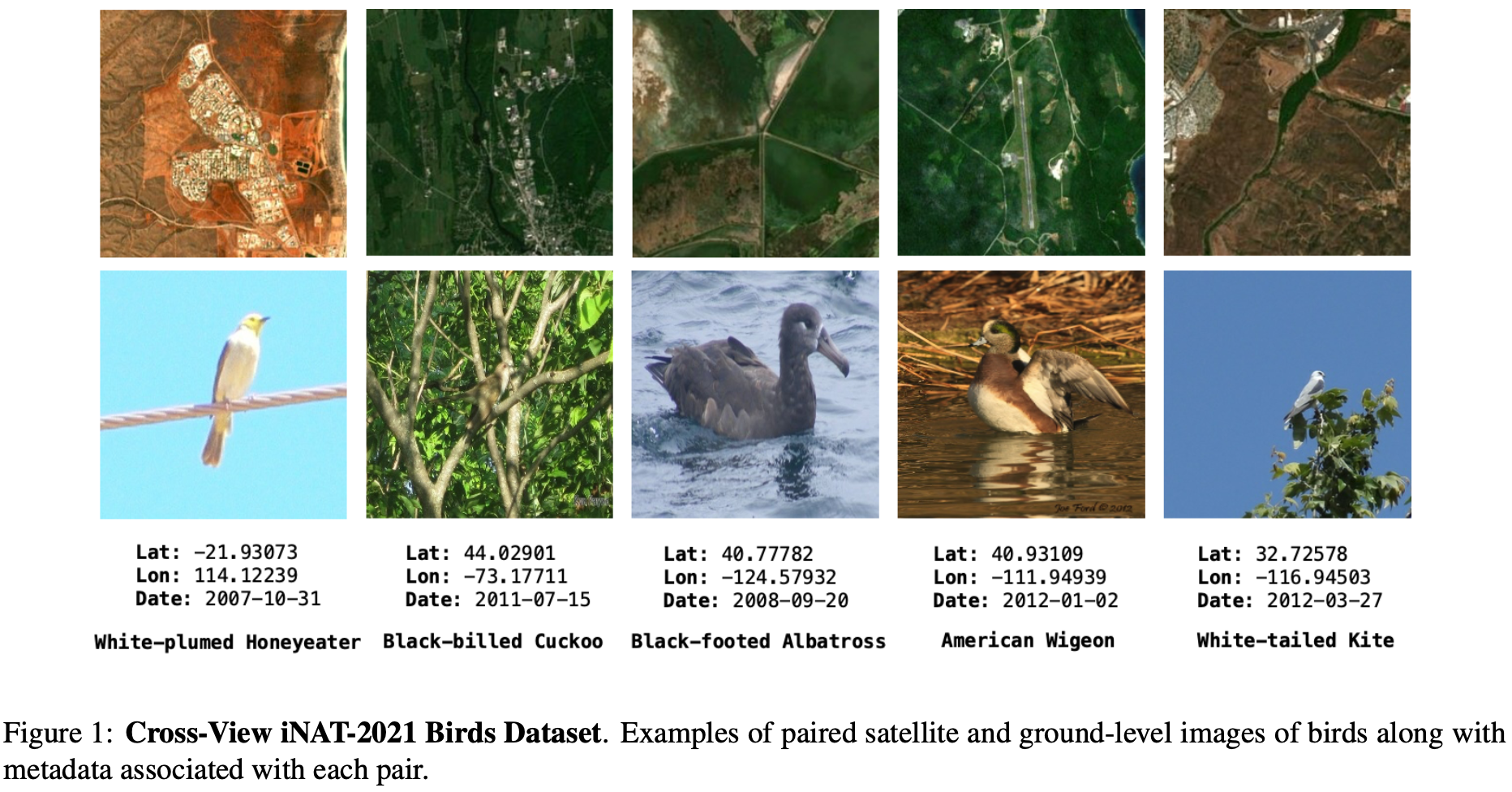

🦢 Dataset Released: Cross-View iNAT Birds 2021

This cross-view bird species dataset consists of paired ground-level bird images and satellite images, along with the meta-information associated with the iNaturalist-2021 dataset.

Satellite images along with meta-information - Link

iNaturalist Images - Link
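This README does not describe the schema of the released meta-information. As a rough illustration of what "paired" means here, the sketch below joins ground-level records and satellite records on a shared observation id. The field names (`id`, `image_path`, `sat_path`, `species`, `latitude`, `longitude`) and the toy records are hypothetical and are not the dataset's actual format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrossViewPair:
    """One hypothetical ground-level / satellite pair with its metadata."""
    obs_id: int
    bird_image: str
    sat_image: str
    species: str
    latitude: float
    longitude: float


def pair_records(ground_records, satellite_records):
    """Join ground-level and satellite records on a shared observation id.

    Both inputs are lists of dicts with hypothetical keys.
    Observations without a matching satellite tile are skipped.
    """
    sat_by_id = {rec["id"]: rec for rec in satellite_records}
    pairs = []
    for rec in ground_records:
        sat = sat_by_id.get(rec["id"])
        if sat is None:
            continue  # no satellite tile for this observation
        pairs.append(
            CrossViewPair(
                obs_id=rec["id"],
                bird_image=rec["image_path"],
                sat_image=sat["sat_path"],
                species=rec["species"],
                latitude=rec["latitude"],
                longitude=rec["longitude"],
            )
        )
    return pairs


# Toy records for illustration only.
ground = [
    {"id": 1, "image_path": "birds/1.jpg", "species": "Cardinalis cardinalis",
     "latitude": 38.63, "longitude": -90.20},
    {"id": 2, "image_path": "birds/2.jpg", "species": "Cyanocitta cristata",
     "latitude": 40.71, "longitude": -74.01},
]
satellite = [{"id": 1, "sat_path": "sat/1.jpeg"}]

pairs = pair_records(ground, satellite)
print(len(pairs), pairs[0].sat_image)
```

Keying the satellite records by id keeps the join linear in the number of observations.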

Computer Vision Tasks

- Fine-Grained image classification

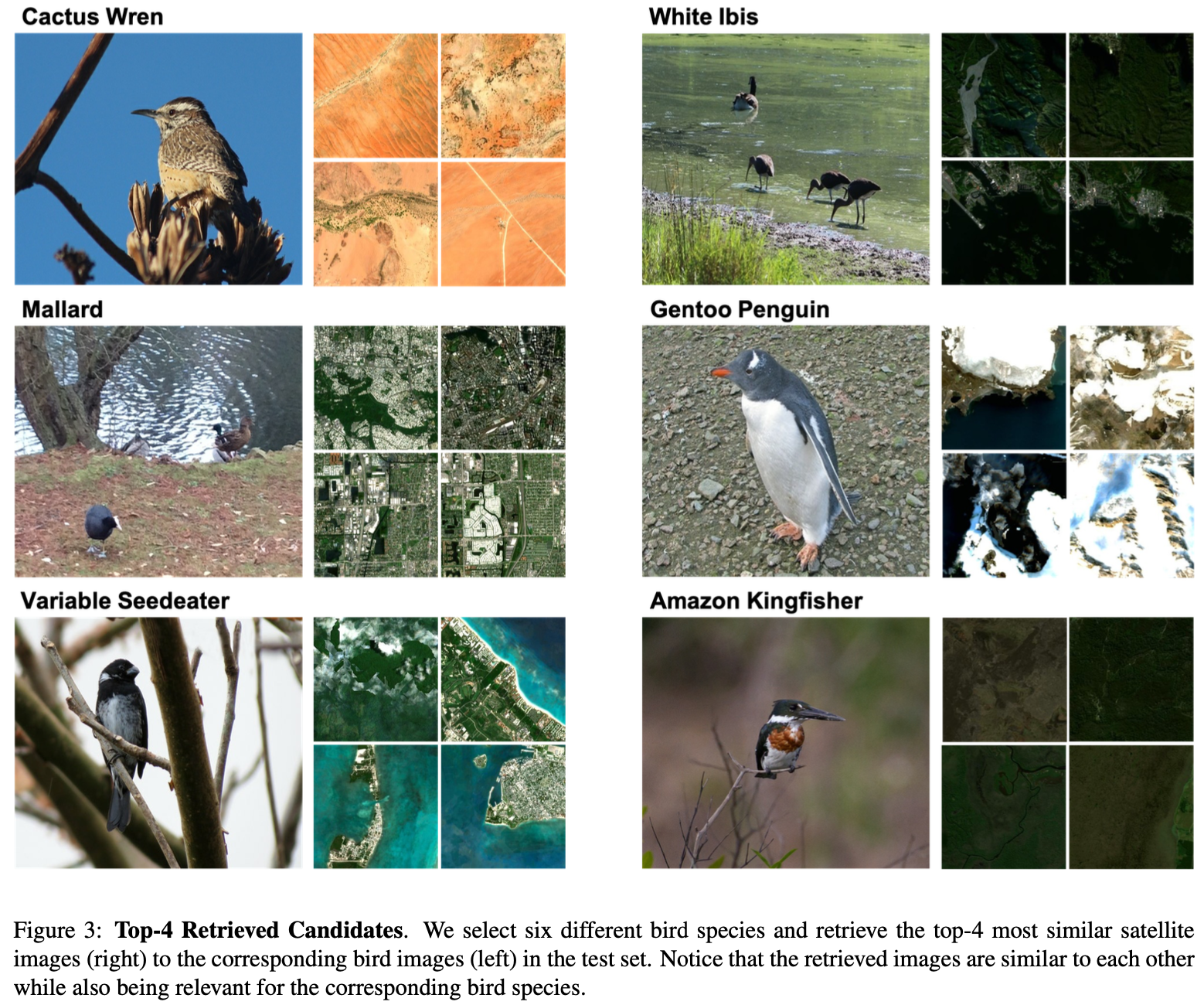

- Satellite-to-bird image retrieval

- Bird-to-satellite image retrieval

- Geolocalization of Bird Species

An example of task 3 is shown below:
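The two retrieval tasks and geolocalization are usually evaluated on embeddings produced by the ground-level and satellite encoders. The sketch below is a generic illustration, not this repository's evaluation code. It ranks satellite embeddings by cosine similarity to a bird-image embedding (bird-to-satellite retrieval), computes Recall@k, and uses the coordinates of the best-matching satellite tile as a crude location estimate. The 2-D embeddings and coordinates are made-up toy values; real embeddings would come from the pretrained encoders.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def retrieve(query, gallery, k):
    """Return indices of the k gallery embeddings most similar to query."""
    scores = [cosine(query, g) for g in gallery]
    order = sorted(range(len(gallery)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def recall_at_k(queries, gallery, k):
    """Fraction of queries whose paired gallery item (same index) is in the top k."""
    hits = sum(i in retrieve(q, gallery, k) for i, q in enumerate(queries))
    return hits / len(queries)


# Toy embeddings: bird_emb[i] is paired with sat_emb[i].
bird_emb = [[1.0, 0.1], [0.1, 1.0], [0.7, 0.7]]
sat_emb = [[0.9, 0.2], [0.2, 0.9], [0.6, 0.8]]
sat_coords = [(38.6, -90.2), (40.7, -74.0), (34.1, -118.2)]

print(recall_at_k(bird_emb, sat_emb, k=1))

# Crude geolocalization: take the location of the top-ranked satellite tile.
best = retrieve(bird_emb[2], sat_emb, k=1)[0]
print(sat_coords[best])
```

Satellite-to-bird retrieval is the same computation with the roles swapped, for example `recall_at_k(sat_emb, bird_emb, k=1)`.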

👨‍💻 Getting Started

Setting up

- Clone this repository:

git clone https://github.com/mvrl/BirdSAT.git

- Clone the Remote-Sensing-RVSA repository inside BirdSAT:

cd BirdSAT

git clone https://github.com/ViTAE-Transformer/Remote-Sensing-RVSA.git

- Append the code for CVMMAE in `utils_model/CVMMAE.py` to the file `Remote-Sensing-RVSA/MAEPretrain_SceneClassification/models_mae_vitae.py`.

- Download the pretrained satellite image encoder from Link and place it inside the folder `pretrained_models`. You might get an error while loading this model. To fix it, set the option `kernel=3` in the class `MaskedAutoencoderViTAE` in the file `Remote-Sensing-RVSA/MAEPretrain_SceneClassification/models_mae_vitae.py`.

- Download all datasets, unzip them, and place them inside the folder `data`.
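The append and folder steps above can be scripted. The following is a hypothetical helper that is not shipped with the repository. It appends `utils_model/CVMMAE.py` to `models_mae_vitae.py` only once, using a marker comment so that rerunning it is harmless, and reports which of the `pretrained_models` and `data` folders are still missing. The marker string and function names are assumptions, and the helper does not apply the `kernel=3` change. The demo runs on a throwaway directory that mimics the expected layout.

```python
import tempfile
from pathlib import Path

# Hypothetical marker used to detect that the CVMMAE code was already appended.
MARKER = "# --- appended from BirdSAT utils_model/CVMMAE.py ---"


def append_cvmmae(repo_root):
    """Append CVMMAE.py to models_mae_vitae.py once; return True if appended."""
    root = Path(repo_root)
    src = root / "utils_model" / "CVMMAE.py"
    dst = (root / "Remote-Sensing-RVSA" / "MAEPretrain_SceneClassification"
           / "models_mae_vitae.py")
    if MARKER in dst.read_text():
        return False  # already appended; leave the file untouched
    with dst.open("a") as f:
        f.write("\n\n" + MARKER + "\n" + src.read_text())
    return True


def missing_folders(repo_root):
    """List the expected top-level folders that do not exist yet."""
    root = Path(repo_root)
    return [name for name in ("pretrained_models", "data")
            if not (root / name).is_dir()]


# Demo on a throwaway directory tree that mimics the BirdSAT layout.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "utils_model").mkdir()
    (root / "utils_model" / "CVMMAE.py").write_text("class CVMMAE:\n    pass\n")
    target_dir = root / "Remote-Sensing-RVSA" / "MAEPretrain_SceneClassification"
    target_dir.mkdir(parents=True)
    (target_dir / "models_mae_vitae.py").write_text("# original RVSA code\n")
    first = append_cvmmae(root)   # appends the code
    second = append_cvmmae(root)  # detects the marker and skips
    missing = missing_folders(root)

print(first, second, missing)
```

In a real checkout you would call `append_cvmmae(".")` and `missing_folders(".")` from the BirdSAT root.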

Installing Required Packages

There are two options for setting up an environment that can run all the code in the repository:

- Use the Dockerfile provided in the repository to build a Docker image with all required packages:

docker build -t <your-docker-hub-id>/birdsat .

- Create a conda environment with all required packages:

conda create -n birdsat python=3.10 && conda activate birdsat && pip install -r requirements.txt

We also host a pre-built Docker image on Docker Hub with the tag srikumar26/birdsat:latest.

🔥 Training Models

- Set all the parameters of interest in `config.py` before launching the training script.

- Run pre-training by calling:

python pretrain.py

- Run fine-tuning by calling:

python finetune.py
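This README does not list the parameters in `config.py`. Purely as an illustration of the kind of settings a pre-training and fine-tuning config tends to hold, here is a hypothetical dataclass-based config. Every field name and default value below is an assumption and does not reflect the repository's actual `config.py`.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical training settings; names and defaults are illustrative only."""
    data_dir: str = "data"
    pretrained_dir: str = "pretrained_models"
    mode: str = "pretrain"        # "pretrain" or "finetune"
    batch_size: int = 64
    learning_rate: float = 1e-4
    epochs: int = 100
    mask_ratio: float = 0.75      # fraction of patches masked during MAE pre-training
    use_metadata: bool = False    # hypothetical switch, e.g. for "-Meta" style variants

    def __post_init__(self):
        # Fail early on values that would make a run meaningless.
        if self.mode not in ("pretrain", "finetune"):
            raise ValueError(f"unknown mode: {self.mode!r}")
        if not 0.0 < self.mask_ratio < 1.0:
            raise ValueError("mask_ratio must be in (0, 1)")
        if self.batch_size <= 0 or self.epochs <= 0:
            raise ValueError("batch_size and epochs must be positive")


base = TrainConfig()
# dataclasses.replace builds a new instance, so __post_init__ validates it too.
finetune = replace(base, mode="finetune", learning_rate=5e-5, epochs=20)
print(finetune.mode, finetune.learning_rate, finetune.data_dir)
```

A frozen dataclass keeps the settings immutable during a run, and `replace` derives a fine-tuning config from the pre-training one without copying every field by hand.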

❄️ Pretrained Models

Download the pretrained models from the links below:

| Model Type | Download URL |
|---|---|
| CVE-MAE | Link |
| CVE-MAE-Meta | Link |
| CVM-MAE | Link |
| CVM-MAE-Meta | Link |

📑 Citation

@inproceedings{sastry2024birdsat,
title={BirdSAT: Cross-View Contrastive Masked Autoencoders for Bird Species Classification and Mapping},
author={Sastry, Srikumar and Khanal, Subash and Dhakal, Aayush and Huang, Di and Jacobs, Nathan},
booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
year={2024}
}

🔍 Additional Links

Check out our lab website for other work on geospatial understanding and mapping.