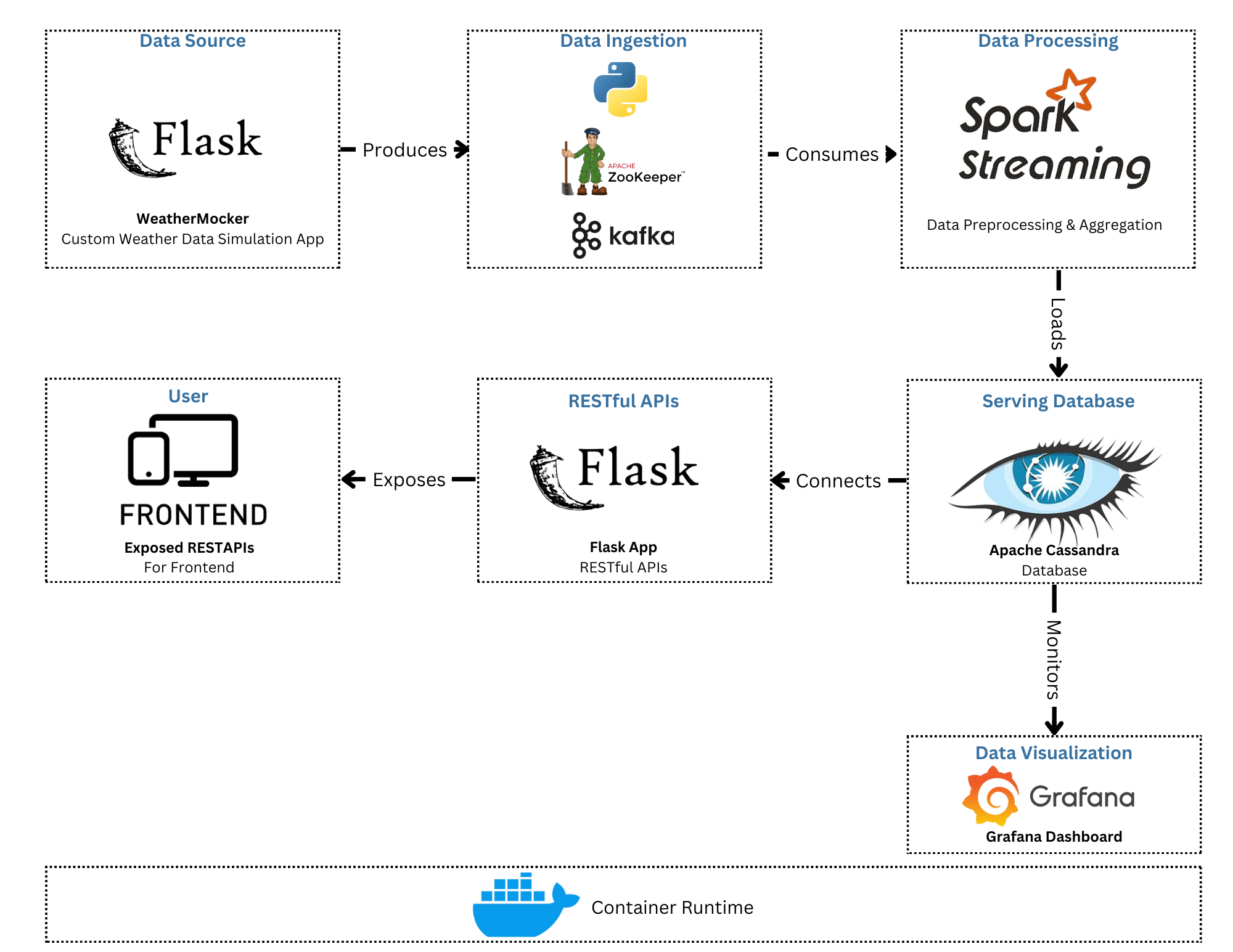

This project implements a weather data pipeline that generates, ingests, processes, stores, and visualizes weather data in real time. It uses Flask for data generation, Kafka for ingestion, Spark for processing, Cassandra for storage, and Grafana for visualization.

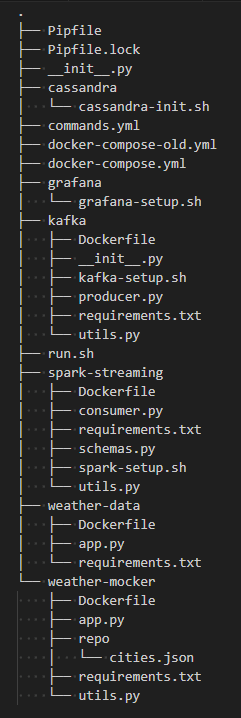

- Purpose: Generates random weather data simulating a real weather API.
- Main File: `weather-mocker/app.py`
- Dependencies: `weather-mocker/requirements.txt`
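As a rough illustration of what generating random weather data could look like, here is a minimal sketch in plain Python. The field names, city list, and value ranges are assumptions made for this example; the actual payload produced by `weather-mocker/app.py` may differ.

```python
import random
from datetime import datetime, timezone

# Hypothetical cities, field names, and value ranges (illustration only).
CITIES = ["Paris", "Berlin", "Madrid"]


def generate_reading(rng=random):
    """Return one random weather reading as a JSON-serializable dict."""
    return {
        "city": rng.choice(CITIES),
        "temperature_c": round(rng.uniform(-10.0, 40.0), 1),
        "humidity_pct": rng.randint(0, 100),
        "wind_kph": round(rng.uniform(0.0, 120.0), 1),
        # ISO 8601 timestamp in UTC so downstream consumers can parse it.
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    print(generate_reading())
```

In a Flask app, a route handler could return `jsonify(generate_reading())` to serve one reading per request.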

- Purpose: Exposes an API to access weather data stored in Cassandra.
- Main File: `weather-data/app.py`
- Dependencies: `weather-data/requirements.txt`

- Setup Script: `kafka/kafka-setup.sh`
- Description: Creates a Kafka topic if it does not exist.
- Producer: `kafka/producer.py`
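The publishing step can be sketched as follows. This is not the contents of `kafka/producer.py`: the topic name `weather` and the choice to key messages by city are assumptions, and the Kafka client is passed in as a `send` callable so the example runs without a broker. The callable's shape mirrors `KafkaProducer.send(topic, value=..., key=...)` from the kafka-python library.

```python
import json

TOPIC = "weather"  # hypothetical topic name


def publish(reading, send, topic=TOPIC):
    """Serialize a reading to JSON bytes and hand it to `send`.

    `send` is any callable accepting (topic, value=..., key=...).
    """
    # Keying by city is an assumption made for this sketch.
    key = reading["city"].encode("utf-8")
    value = json.dumps(reading).encode("utf-8")
    return send(topic, value=value, key=key)


if __name__ == "__main__":
    sent = []  # stand-in for a real broker
    publish(
        {"city": "Paris", "temperature_c": 21.5},
        lambda topic, value=None, key=None: sent.append((topic, key, value)),
    )
    print(sent)
```

With Kafka's default partitioner, messages that share a key land on the same partition, so keying by city would keep each city's readings in order.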

- Setup Script: `cassandra/cassandra-init.sh`
- Description: Initializes Cassandra by creating a keyspace and table if they do not exist.
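Creating a keyspace and table only when they are missing maps onto CQL's `IF NOT EXISTS` clause. The sketch below builds two such statements as Python strings. The keyspace name, table name, columns, and single-node replication settings are all assumptions for illustration, not the schema used by `cassandra/cassandra-init.sh`.

```python
# Hypothetical keyspace, table, and column names; the replication settings
# assume a single-node development cluster.
KEYSPACE = "weather"
TABLE = "readings"

CREATE_KEYSPACE = (
    f"CREATE KEYSPACE IF NOT EXISTS {KEYSPACE} "
    "WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 1};"
)

CREATE_TABLE = (
    f"CREATE TABLE IF NOT EXISTS {KEYSPACE}.{TABLE} ("
    "city text, "
    "ts timestamp, "
    "temperature_c double, "
    "humidity_pct int, "
    "PRIMARY KEY (city, ts)"
    ") WITH CLUSTERING ORDER BY (ts DESC);"
)


def init_statements():
    """Return the idempotent schema statements in execution order."""
    return [CREATE_KEYSPACE, CREATE_TABLE]


if __name__ == "__main__":
    for statement in init_statements():
        print(statement)
```

Because both statements are idempotent, rerunning them on container restart is safe. They could be passed to `cqlsh -e` or to a driver session's `execute` method.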

- Setup Script: `spark-streaming/spark-setup.sh`
- Description: Configures Spark for streaming and initializes necessary settings.
- Consumer: `spark-streaming/consumer.py`
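This README does not spell out what processing the consumer performs. As one plausible shape for the per-message step, the sketch below decodes a Kafka message value and converts it into a typed row, dropping malformed input. It is plain Python rather than Spark code, and the field names are assumptions; in Spark, similar parsing would typically be done with `from_json` and an explicit schema.

```python
import json
from datetime import datetime


def to_row(message_value):
    """Decode one message value (bytes) into a typed tuple.

    Returns (city, ts, temperature_c, humidity_pct), or None when the
    message is malformed. Field names are hypothetical.
    """
    try:
        record = json.loads(message_value.decode("utf-8"))
        return (
            str(record["city"]),
            datetime.fromisoformat(record["timestamp"]),
            float(record["temperature_c"]),
            int(record["humidity_pct"]),
        )
    except (UnicodeDecodeError, ValueError, KeyError, TypeError):
        # Bad encoding, invalid JSON, missing field, or wrong type.
        return None


if __name__ == "__main__":
    raw = json.dumps({
        "city": "Paris",
        "timestamp": "2024-01-01T12:00:00+00:00",
        "temperature_c": 21.5,
        "humidity_pct": 40,
    }).encode("utf-8")
    print(to_row(raw))
```

Returning `None` for bad input lets the caller filter out malformed messages instead of failing the whole batch.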

- Setup Script: `grafana/grafana-setup.sh`
- Description: Installs the Cassandra plugin, adds the data source, and creates a dashboard for real-time data visualization.

- Data Generation and Publishing:
  - WeatherMocker generates random weather data.
  - The Kafka producer publishes the data to a Kafka topic.
- Data Consumption and Processing:
  - Spark Streaming consumes data from Kafka, processes it, and writes it to Cassandra.
- Data Visualization:
  - Grafana visualizes the real-time data stored in Cassandra.

The entire stack is managed using Docker Compose, defined in docker-compose.yml. Each service runs in its own Docker container.

To run the Docker pipeline, run the following two commands from the root directory:

- Make the `run.sh` script executable: `chmod +x run.sh`
- Run the script: `./run.sh`