Tianyun Yang, Ziyao Huang, Juan Cao, Lei Li, Xirong Li

AAAI 2022 Arxiv

- [Mar 3, 2022] Note: The resource links are placeholders for now; we will update them and open-source the code soon!

- [Mar 10, 2022] The source code, dataset and models are all released.

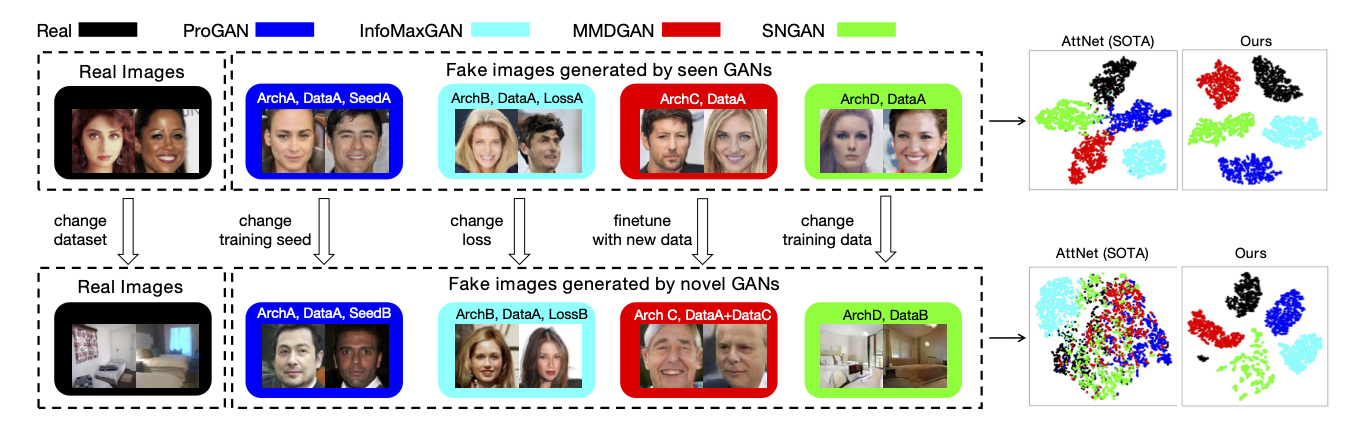

With the rapid progress of generation technology, it has become necessary to attribute the origin of fake images. Existing works on fake image attribution perform multi-class classification on several Generative Adversarial Network (GAN) models and obtain high accuracies. While encouraging, these works are restricted to model-level attribution, only capable of handling images generated by seen models with a specific seed, loss and dataset, which is limited in real-world scenarios.

In this work:

- We present the first study on Deepfake Network Architecture Attribution to attribute fake images at the architecture level.

- We develop a simple yet effective approach named DNA-Det to extract architecture traces, which adopts pre-training on image transformation classification and patchwise contrastive learning to capture globally consistent features that are invariant to semantics.

- The evaluations on multiple cross-test setups and a large-scale dataset verify the effectiveness of DNA-Det. DNA-Det maintains a significantly higher accuracy than existing methods in cross-seed, cross-loss, cross-finetune and cross-dataset settings.
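To make the patchwise contrastive idea more concrete, here is a minimal pure-Python sketch of a supervised contrastive loss over patch embeddings. It assumes that patches cropped from images of the same architecture are positives and all other patches are negatives. The function names, the temperature value and the toy embeddings are illustrative assumptions, not taken from the DNA-Det code.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def patch_contrastive_loss(embeddings, arch_labels, temperature=0.1):
    """Supervised contrastive loss over patch embeddings (illustrative sketch).

    embeddings:  list of feature vectors, one per image patch
    arch_labels: architecture label of the image each patch was cropped from
    Patches sharing a label are pulled together; all others are pushed apart.
    """
    feats = [l2_normalize(e) for e in embeddings]
    n = len(feats)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and arch_labels[j] == arch_labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        # Similarities of anchor i to every other patch, scaled by temperature.
        logits = {
            j: sum(a * b for a, b in zip(feats[i], feats[j])) / temperature
            for j in range(n) if j != i
        }
        # Numerically stable log-sum-exp over all non-anchor patches.
        max_logit = max(logits.values())
        log_denominator = max_logit + math.log(
            sum(math.exp(v - max_logit) for v in logits.values())
        )
        # Average negative log-probability of selecting a positive for this anchor.
        total += -sum(logits[j] - log_denominator for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


# Toy example: two patches per architecture, embedded in 2-D (made-up values).
labels = ["ProGAN", "ProGAN", "SNGAN", "SNGAN"]
clustered = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
loss_clustered = patch_contrastive_loss(clustered, labels)
loss_mixed = patch_contrastive_loss(mixed, labels)
```

In this toy setup the loss is lower when patches of the same architecture sit close together in feature space, which is the behaviour the contrastive objective is meant to encourage.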

- Linux

- NVIDIA GPU + CUDA 11.1

- Python 3.7.10

- pytorch 1.9.0
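A quick way to compare a local setup against the versions listed above is sketched below. The helper names are hypothetical, and the check only reports differences from the tested versions; whether other versions work is not stated in this README.

```python
import re
import sys

# Versions listed in the prerequisites above.
TESTED_PYTHON = (3, 7, 10)
TESTED_TORCH = (1, 9, 0)


def parse_version(text):
    """Turn '1.9.0+cu111' into (1, 9, 0), ignoring any local build suffix."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part or 0) for part in match.groups())


def report_environment():
    """Return human-readable notes about deviations from the tested setup."""
    notes = []
    if sys.version_info[:3] != TESTED_PYTHON:
        notes.append("Python %d.%d.%d differs from tested 3.7.10" % sys.version_info[:3])
    try:
        import torch  # imported lazily so the check itself runs without PyTorch
    except ImportError:
        notes.append("PyTorch is not installed")
    else:
        if parse_version(torch.__version__) != TESTED_TORCH:
            notes.append(f"PyTorch {torch.__version__} differs from tested 1.9.0")
        if not torch.cuda.is_available():
            notes.append("CUDA is not available to PyTorch")
    return notes


if __name__ == "__main__":
    for note in report_environment():
        print(note)
```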

You can download the dataset after submitting an application: Application to Use the Dataset for Deepfake Network Architecture Attribution. Detailed information about the dataset is shown below.

| Resolution | Real / GAN | Content | Source |
|---|---|---|---|
| 128x128 | Real | CelebA, LSUN-bedroom | CelebA, LSUN |
| 128x128 | ProGAN | CelebA, LSUN-bedroom | GANFingerprint |
| 128x128 | MMDGAN | CelebA, LSUN-bedroom | GANFingerprint |
| 128x128 | SNGAN | CelebA, LSUN-bedroom | GANFingerprint |
| 128x128 | CramerGAN | CelebA, LSUN-bedroom | GANFingerprint |
| 128x128 | InfoMaxGAN | CelebA, LSUN-bedroom | mimicry |
| 128x128 | SSGAN | CelebA, LSUN-bedroom | mimicry |
| 256x256 | Real | cat, airplane, boat, horse, sofa, cow, dog, train, bicycle, bottle, diningtable, motorbike, sheep, tvmonitor, bird, bus, chair, person, pottedplant, car | CNNDetection |
| 256x256 | ProGAN | cat, airplane, boat, horse, sofa, cow, dog, train, bicycle, bottle, diningtable, motorbike, sheep, tvmonitor, bird, bus, chair, person, pottedplant, car | CNNDetection |
| 256x256 | StackGAN2 | cat, church, bird, bedroom, dog | StackGAN-v2 |
| 256x256 | CycleGAN | winter, orange, apple, horse, summer, zebra | CNNDetection |
| 256x256 | StyleGAN2 | cat, church, horse | StyleGAN2 |
| 1024x1024 | Real | FFHQ, CelebA-HQ | FFHQ, CelebA-HQ |
| 1024x1024 | StyleGAN | FFHQ, CelebA-HQ, Yellow, Model, Asian Star, kid, elder, adult, glass, male, female, smile | StyleGAN, seeprettyface |
| 1024x1024 | StyleGAN2 | FFHQ, Yellow, Wanghong, Asian Star, kid | StyleGAN2, seeprettyface |

- Download the dataset and put it into the directory ./dataset.

- Download the train part of the CNNDetection dataset and put it into the directory ./dataset/GANs.

- Prepare annotation files for training, validation, closed-set testing and cross testing.

- Prepare the dataset for the celebA experiment:

python generate_data_multiple_cross.py --mode celeba

- Prepare the dataset for the LSUN-bedroom experiment:

python generate_data_multiple_cross.py --mode lsun

- Prepare the dataset for the in the wild experiment:

python generate_data_in_the_wild.py --mode in_the_wild

After generation, the folder should look like this:

    dataset
    ├── ${mode}_test
    │   └── annotations
    │       ├── ${mode}_test.txt
    │       ├── ${mode}_test_cross_seed.txt
    │       ├── ${mode}_test_cross_loss.txt
    │       ├── ${mode}_test_cross_finetune.txt
    │       └── ${mode}_test_cross_dataset.txt
    ├── ${mode}_train
    │   └── annotations
    │       └── ${mode}_train.txt
    └── ${mode}_val
        └── annotations
            └── ${mode}_val.txt

where ${mode}_train.txt, ${mode}_val.txt, ${mode}_test.txt and ${mode}_test_cross_*.txt are the annotation files for the training, validation, closed-set testing and cross-testing splits.

- Quick start: We provide the generated txt files, which can be downloaded from Baiduyun (passwd: olci) or GoogleDrive. Put them into the directory ./dataset.
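Before training, it can help to confirm that the annotation files are where the layout described above expects them. The following is a small sketch with hypothetical helper names; it assumes the default ./dataset root and the four cross-test setups named in the layout. Note that the in the wild evaluation example further down only uses the closed-set and cross-dataset files, so the other cross files may legitimately be absent for that mode.

```python
import os

# Cross-test setups that appear in the annotation file names above.
CROSS_SETUPS = ("seed", "loss", "finetune", "dataset")


def expected_annotation_files(mode, root="./dataset"):
    """List the annotation files the described layout contains for one mode."""
    test_dir = os.path.join(root, f"{mode}_test", "annotations")
    files = [
        os.path.join(root, f"{mode}_train", "annotations", f"{mode}_train.txt"),
        os.path.join(root, f"{mode}_val", "annotations", f"{mode}_val.txt"),
        os.path.join(test_dir, f"{mode}_test.txt"),
    ]
    files += [os.path.join(test_dir, f"{mode}_test_cross_{s}.txt") for s in CROSS_SETUPS]
    return files


def missing_annotation_files(mode, root="./dataset"):
    """Return the expected annotation files that do not exist on disk."""
    return [p for p in expected_annotation_files(mode, root) if not os.path.isfile(p)]


if __name__ == "__main__":
    for path in missing_annotation_files("celeba"):
        print("missing:", path)
```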

sh ./script/run_train_pretrain.sh

After training, the model and logs are saved in ./dataset/pretrain_train/models/pretrain_val/pretrain/run_${run_id}/.

- Specify training configurations in ./configs/${config_name}.py.

- Specify settings including config_name, data_path and so on in ./script/run_train.sh and run:

sh ./script/run_train.sh

- Following is an example for the celebA experiment:

data_path=./dataset/

train_collection=celeba_train

val_collection=celeba_val

config_name=celeba

run_id=1

pretrain_model_path=./dataset/pretrain_train/models/model.pth

python main.py --data_path $data_path --train_collection $train_collection --val_collection $val_collection \

--config_name $config_name --run_id $run_id \

--pretrain_model_path $pretrain_model_path

where

- data_path: The dataset path

- train_collection: The training split directory

- val_collection: The validation split directory

- config_name: The config file

- run_id: The run id used to number this training run

- pretrain_model_path: The model pre-trained on image transformation classification

Similarly, for the LSUN-bedroom experiment:

data_path=./dataset/

train_collection=lsun_train

val_collection=lsun_val

config_name=lsun

run_id=1

pretrain_model_path=./dataset/pretrain_train/models/pretrain_val/pretrain/run_0/model.pth

python main.py --data_path $data_path --train_collection $train_collection --val_collection $val_collection \

--config_name $config_name --run_id $run_id \

--pretrain_model_path $pretrain_model_path

For the in the wild experiment:

data_path=./dataset/

train_collection=in_the_wild_train

val_collection=in_the_wild_val

config_name=in_the_wild

run_id=1

pretrain_model_path=./dataset/pretrain_train/models/pretrain_val/pretrain/run_0/model.pth

python main.py --data_path $data_path --train_collection $train_collection --val_collection $val_collection \

--config_name $config_name --run_id $run_id \

--pretrain_model_path $pretrain_model_path

- After training, the models and logs are saved in ${data_path}/${train_collection}/models/${val_collection}/${config_name}/run_${run_id}/.
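The three examples above follow one naming pattern, so the settings and the output directory can be derived from the experiment mode alone. The helper below is a hypothetical sketch of that pattern and is not part of the repository; it assumes the config is named after the mode, as in the examples.

```python
import posixpath


def experiment_settings(mode, run_id, data_path="./dataset/"):
    """Derive collection names and the output directory for one experiment.

    Mirrors the examples above: ${mode}_train, ${mode}_val and a config
    named after the mode (celeba, lsun or in_the_wild).
    """
    train_collection = f"{mode}_train"
    val_collection = f"{mode}_val"
    config_name = mode
    # Where models and logs end up after training, per the pattern above.
    run_dir = posixpath.join(
        data_path, train_collection, "models", val_collection, config_name, f"run_{run_id}"
    )
    return {
        "data_path": data_path,
        "train_collection": train_collection,
        "val_collection": val_collection,
        "config_name": config_name,
        "run_id": run_id,
        "run_dir": run_dir,
    }


settings = experiment_settings("celeba", run_id=1)
```

With run_id=1 as in the celebA example, `settings["run_dir"]` is ./dataset/celeba_train/models/celeba_val/celeba/run_1.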

We provide pre-trained models, which can be downloaded from Baiduyun (passwd: olci) or GoogleDrive.

They have been put into the right path along with the annotation files. See model_path in ./script/run_test.sh.

To evaluate the trained model on multiple cross-test setups, specify settings in ./script/run_test.sh and run:

sh ./script/run_test.sh

- Following is an example for the celebA experiment:

config_name=celeba

model_path=./dataset/celeba_train/models/celeba_val/celeba/run_0/model.pth

python3 pred_eval.py --model_path $model_path --config_name $config_name \

--test_data_paths \

./dataset/celeba_test/annotations/celeba_test.txt \

./dataset/celeba_test/annotations/celeba_test_cross_seed.txt \

./dataset/celeba_test/annotations/celeba_test_cross_loss.txt \

./dataset/celeba_test/annotations/celeba_test_cross_finetune.txt \

./dataset/celeba_test/annotations/celeba_test_cross_dataset.txt

- Following is an example for the LSUN-bedroom experiment:

config_name=lsun

model_path=./dataset/lsun_train/models/lsun_val/lsun/run_0/model.pth

python3 pred_eval.py --model_path $model_path --config_name $config_name \

--test_data_paths \

./dataset/lsun_test/annotations/lsun_test.txt \

./dataset/lsun_test/annotations/lsun_test_cross_seed.txt \

./dataset/lsun_test/annotations/lsun_test_cross_loss.txt \

./dataset/lsun_test/annotations/lsun_test_cross_finetune.txt \

./dataset/lsun_test/annotations/lsun_test_cross_dataset.txt

- Following is an example for the in the wild experiment:

config_name=in_the_wild

model_path=./dataset/in_the_wild_train/models/in_the_wild_val/in_the_wild/run_0/model.pth

python3 pred_eval.py --model_path $model_path --config_name $config_name \

--test_data_paths \

./dataset/in_the_wild_test/annotations/in_the_wild_test.txt \

./dataset/in_the_wild_test/annotations/in_the_wild_test_cross_dataset.txt

After running, the result will be saved in ./${test_data_path}/pred/result.txt
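The evaluation calls above differ only in the mode and in which cross-test files are passed, so they can be assembled programmatically. This is a sketch with a hypothetical helper name; it uses only the flags shown above (--model_path, --config_name, --test_data_paths) and assumes the default ./dataset root.

```python
import shlex


def build_eval_command(mode, run_id=0,
                       cross_setups=("seed", "loss", "finetune", "dataset"),
                       root="./dataset"):
    """Assemble the pred_eval.py call for one mode as an argument list."""
    annotations = f"{root}/{mode}_test/annotations"
    # Closed-set test file first, then one file per cross-test setup.
    test_files = [f"{annotations}/{mode}_test.txt"]
    test_files += [f"{annotations}/{mode}_test_cross_{s}.txt" for s in cross_setups]
    model_path = f"{root}/{mode}_train/models/{mode}_val/{mode}/run_{run_id}/model.pth"
    return ["python3", "pred_eval.py",
            "--model_path", model_path,
            "--config_name", mode,
            "--test_data_paths", *test_files]


# The in the wild example above only uses the cross-dataset file.
command = build_eval_command("in_the_wild", cross_setups=("dataset",))
printable = " ".join(shlex.quote(arg) for arg in command)
```

The resulting list can be passed to `subprocess.run(command, check=True)` from the repository root, or `printable` can be pasted into a shell.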

To visualize the feature space on cross data with t-SNE (Figure 1 in our paper), run:

sh ./script/do_tsne.sh

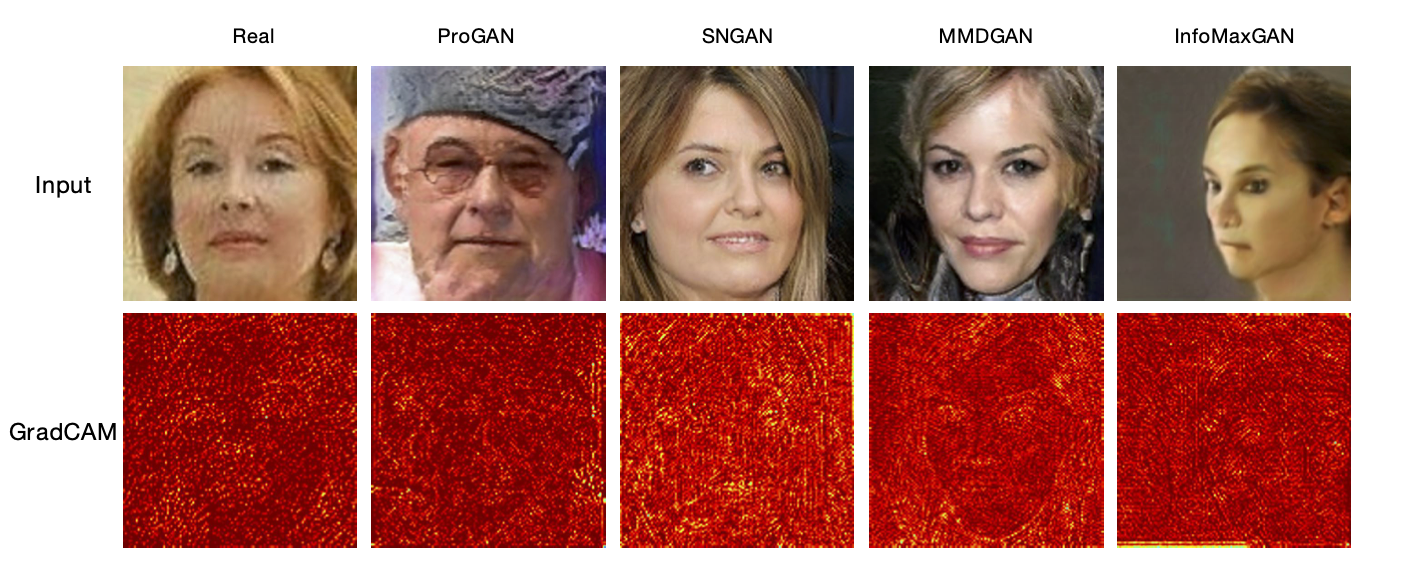

To visualize which regions the network focuses on with GradCAM (Figure 6 in our paper), run:

sh ./script/do_gradcam.sh

If you find our model/method/dataset useful, please cite our work:

@inproceedings{yang2022deepfake,

title={Deepfake Network Architecture Attribution},

author={Yang, Tianyun and Huang, Ziyao and Cao, Juan and Li, Lei and Li, Xirong},

booktitle={Proceedings of the 36th AAAI Conference on Artificial Intelligence (AAAI 2022)},

year={2022}

}

This work was supported by the Project of Chinese Academy of Sciences (E141020), the Project of Institute of Computing Technology, Chinese Academy of Sciences (E161020), Zhejiang Provincial Key Research and Development Program of China (No. 2021C01164), and the National Natural Science Foundation of China (No. 62172420).