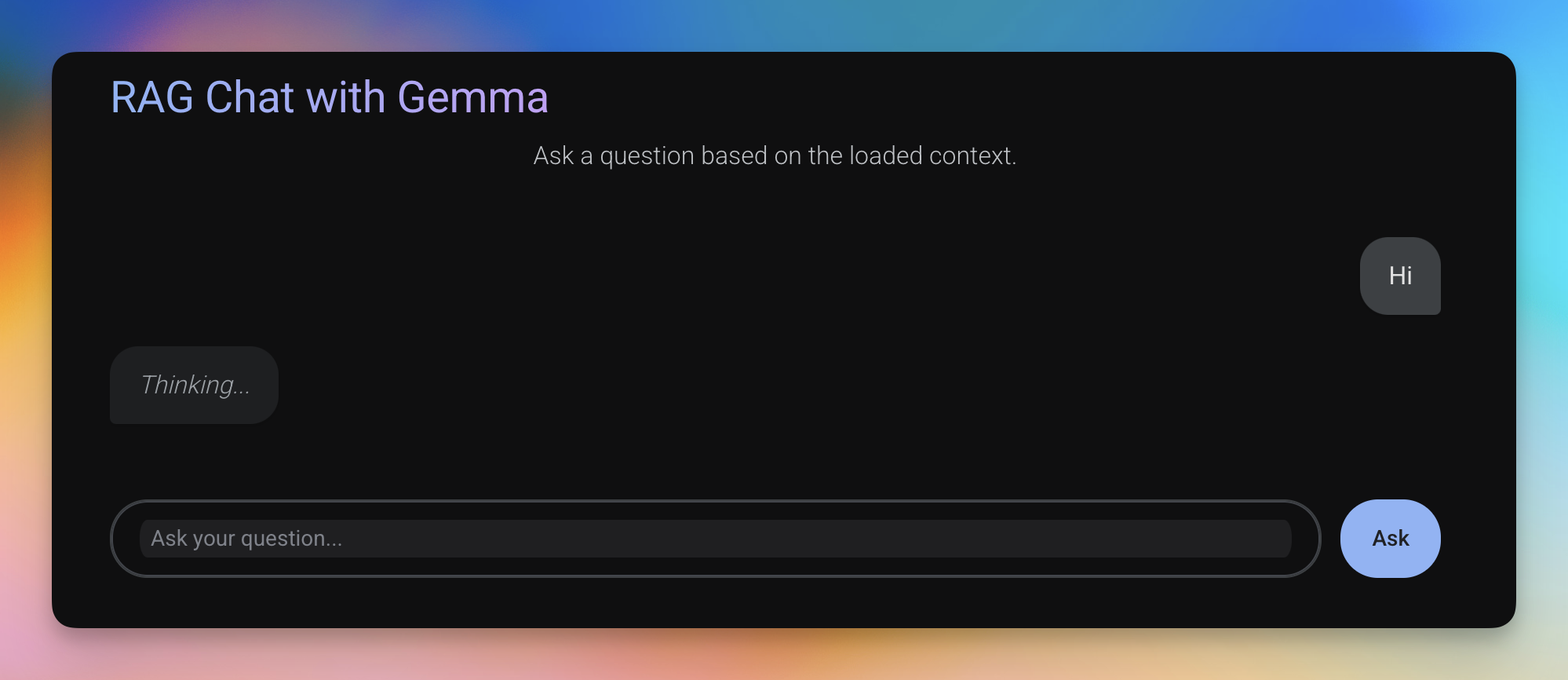

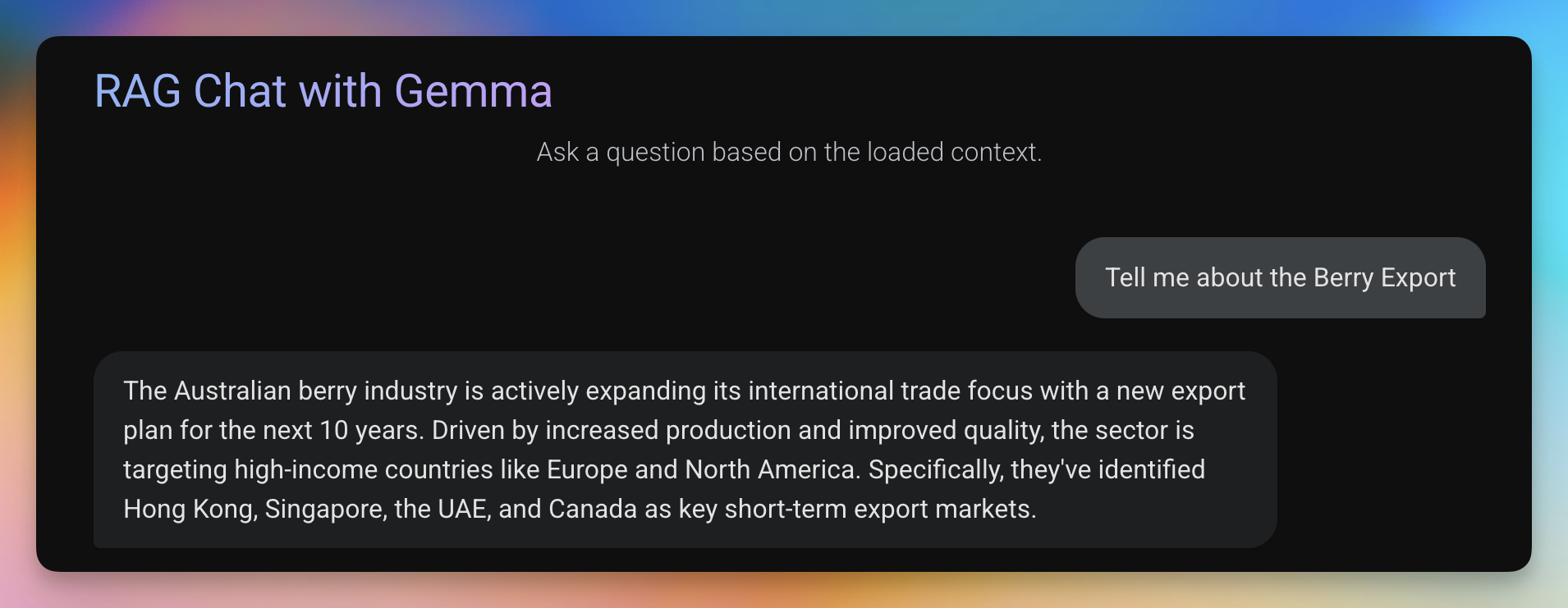

A Retrieval-Augmented Generation (RAG) chatbot application built with Reflex, LangChain, and Google's Gemma 3 model served locally through Ollama. Users ask questions and receive answers grounded in context retrieved from a dataset.

This project contains code samples for the blog post here.

- 💬 Modern chat-like interface for asking questions

- 🔍 Retrieval-Augmented Generation for more accurate answers

- 🧠 Uses Gemma 3 4B-IT model via Ollama

- 📚 Built with the neural-bridge/rag-dataset-12000 dataset

- 🛠️ FAISS vector database for efficient similarity search

- 🔄 Full integration with LangChain for RAG pipeline

- 🌐 Built with Reflex for a reactive web interface

- Python 3.12+

- Ollama installed and running

- The Gemma 3 4B model pulled in Ollama: `ollama pull gemma3:4b-it-qat`

- Clone the repository: `git clone https://github.com/srbhr/Local-RAG-with-Ollama.git`, then `cd RAG_Blog`

- Install dependencies: `pip install -r requirements.txt`

- Make sure Ollama is running and you've pulled the required model: `ollama pull gemma3:4b-it-qat`
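The "Ollama is running and the model is pulled" check can also be scripted. The following is a minimal sketch, not part of this repository: it assumes Ollama's default address `http://localhost:11434` and uses the server's `/api/tags` endpoint, which lists locally available models. The helper names `model_is_available` and `fetch_tags` are hypothetical.

```python
import json
import urllib.request


def model_is_available(tags_payload: dict, model: str) -> bool:
    """Return True if `model` is listed in an Ollama /api/tags response."""
    names = {entry.get("name") for entry in tags_payload.get("models", [])}
    return model in names


def fetch_tags(host: str = "http://localhost:11434") -> dict:
    """Ask the local Ollama server which models it has pulled."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        tags = fetch_tags()
    except OSError as exc:  # connection refused, timeout, and similar failures
        print(f"Ollama does not appear to be running: {exc}")
    else:
        ready = model_is_available(tags, "gemma3:4b-it-qat")
        print("model ready" if ready else "run: ollama pull gemma3:4b-it-qat")
```

Keeping the payload check separate from the network call makes it easy to verify the logic without a running server.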

-

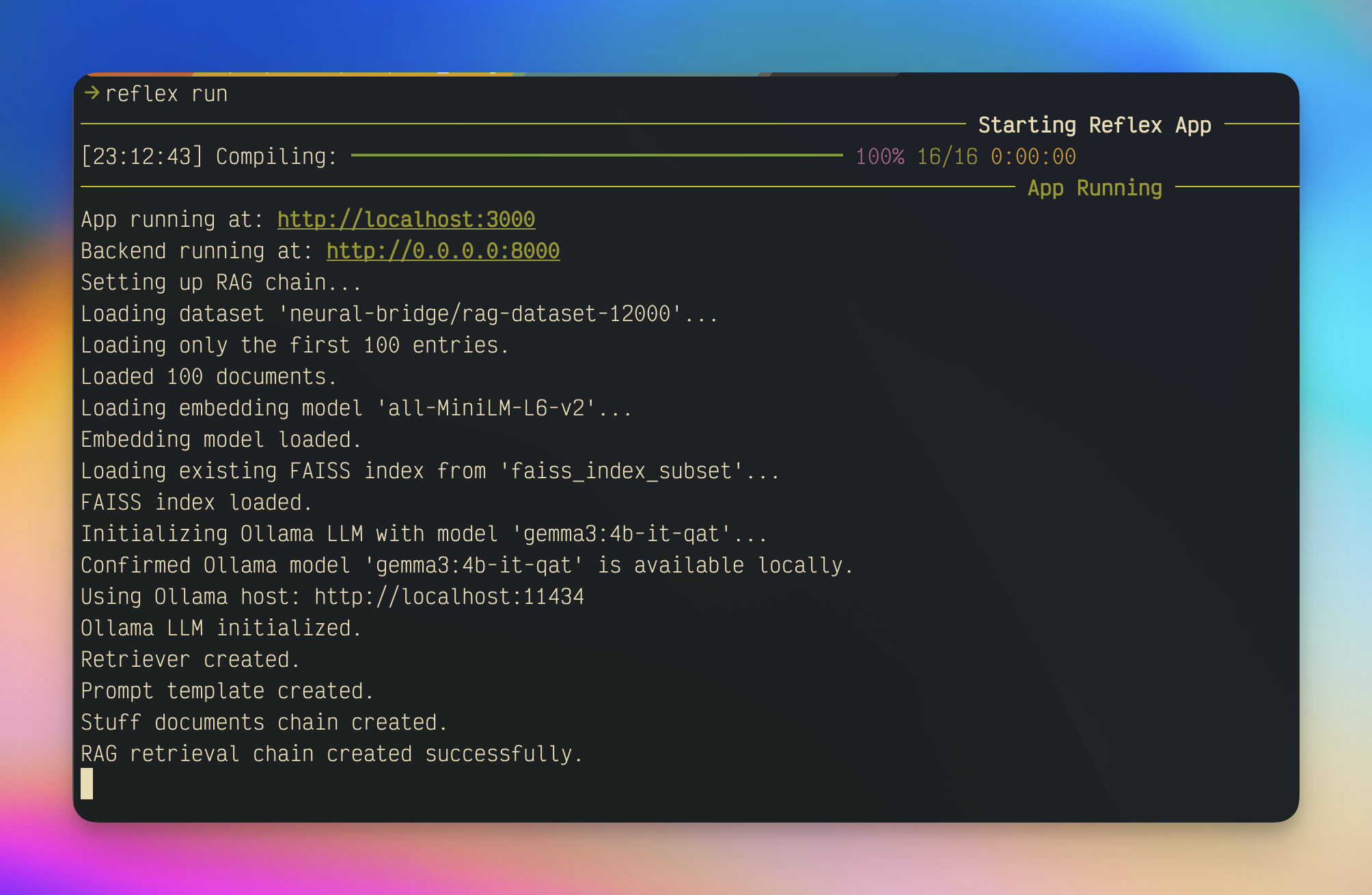

Start the Reflex development server:

reflex run

-

Open your browser and go to

http://localhost:3000

- Start asking questions in the chat interface!

    RAG_Blog/
    ├── assets/                      # Static assets
    ├── faiss_index_neural_bridge/   # FAISS vector database for the full dataset
    │   ├── index.faiss              # FAISS index file
    │   └── index.pkl                # Pickle file with metadata
    ├── faiss_index_subset/          # FAISS vector database for a subset of data
    ├── rag_gemma_reflex/            # Main application package
    │   ├── __init__.py
    │   ├── rag_gemma_reflex.py      # UI components and styling
    │   ├── rag_logic.py             # Core RAG implementation
    │   └── state.py                 # Application state management
    ├── requirements.txt             # Project dependencies
    └── rxconfig.py                  # Reflex configuration

This application implements a RAG (Retrieval-Augmented Generation) architecture:

- Embedding and Indexing: Documents from the neural-bridge/rag-dataset-12000 dataset are embedded using HuggingFace's all-MiniLM-L6-v2 model and stored in a FAISS vector database.

- Retrieval: When a user asks a question, the application converts the question into an embedding and finds the most similar documents in the FAISS index.

- Generation: The retrieved documents are sent to the Gemma 3 model (running via Ollama) along with the user's question to generate a contextualized response.

- UI: The Reflex framework provides a reactive web interface for the chat application.
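The embed, retrieve, and generate flow above can be illustrated with a dependency-free sketch. This is not the code in `rag_logic.py`: a bag-of-words counter stands in for the all-MiniLM-L6-v2 embeddings, a sorted list stands in for the FAISS index, and the final call to Gemma is left out, so the sketch stops at the prompt that would be sent to the model. All function names and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical sample corpus standing in for the indexed dataset.
DOCS = [
    "FAISS is a library for efficient similarity search over dense vectors.",
    "Reflex lets you build reactive web apps in pure Python.",
    "Ollama runs large language models on your local machine.",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (stand-in for a sentence-embedding model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q_vec = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(q_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context_docs: list[str]) -> str:
    """Combine retrieved context and the question into one prompt string."""
    context = "\n\n".join(context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    question = "What does FAISS do for similarity search?"
    print(build_prompt(question, retrieve(question, DOCS, k=1)))
```

In the real application the same three roles are filled by the HuggingFace embedding model, the FAISS index, and the Ollama-hosted Gemma model, wired together through LangChain.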

You can customize the following aspects of the application:

- LLM Model: Change the `OLLAMA_MODEL` environment variable or modify `DEFAULT_OLLAMA_MODEL` in `rag_logic.py`

- Dataset: Modify `DATASET_NAME` in `rag_logic.py`

- Embedding Model: Change `EMBEDDING_MODEL_NAME` in `rag_logic.py`

- UI Styling: Modify the styles in `rag_gemma_reflex.py`

- `OLLAMA_MODEL`: Override the default Gemma model

- `OLLAMA_HOST`: Specify a custom Ollama host (default: http://localhost:11434)
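One plausible way these two variables could be resolved against their defaults is sketched below. It is an illustration rather than the code in `rag_logic.py`: the value given to `DEFAULT_OLLAMA_MODEL` is assumed to be the model tag pulled during setup, and `DEFAULT_OLLAMA_HOST` and `resolve_ollama_settings` are hypothetical names.

```python
import os

# Assumed default: the model tag pulled in the setup steps.
DEFAULT_OLLAMA_MODEL = "gemma3:4b-it-qat"
# Hypothetical constant name; the value is the documented default host.
DEFAULT_OLLAMA_HOST = "http://localhost:11434"


def resolve_ollama_settings(env=None) -> dict:
    """Read OLLAMA_MODEL and OLLAMA_HOST, falling back to the defaults.

    Unset or empty variables fall back to the default value.
    """
    env = os.environ if env is None else env
    model = env.get("OLLAMA_MODEL") or DEFAULT_OLLAMA_MODEL
    host = env.get("OLLAMA_HOST") or DEFAULT_OLLAMA_HOST
    # Drop a trailing slash so paths can be appended consistently.
    return {"model": model, "host": host.rstrip("/")}


if __name__ == "__main__":
    print(resolve_ollama_settings())
```

With this approach, a shell invocation such as `OLLAMA_MODEL=<other-model> reflex run` would switch models without editing any source file.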

This project is licensed under the MIT License. See the LICENSE file for details.

- Reflex for the reactive web framework

- LangChain for the RAG pipeline components

- Ollama for local LLM hosting

- HuggingFace for embeddings and dataset hosting