ONNX implementation for WASI NN

This project is an experimental ONNX implementation of the WASI NN specification. It enables neural network inference on ONNX models in WASI runtimes at near-native performance by leveraging CPU multi-threading or the GPU on the host, and by exporting this host functionality to guest modules running in WebAssembly.

It follows the WASI NN implementation from Wasmtime, and adds two new runtimes for performing inferences on ONNX models:

- one based on the native ONNX runtime, which uses community-built Rust bindings to the runtime's C API.

- one based on the Tract crate, which is a native inference engine for running ONNX models, written in Rust.

How does this work?

WASI NN is a "graph loader" API. This means the guest WebAssembly module passes the ONNX model to the runtime as opaque bytes, together with the input tensors. The runtime performs the inference, and the guest module can then retrieve the output tensors. The WASI NN API is as follows:

- load a model using one or more opaque byte arrays

- init_execution_context and bind some tensors to it using set_input

- compute the ML inference using the bound context

- retrieve the inference result tensors using get_output
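The call sequence above can be sketched in Rust as follows. This is a minimal illustration rather than the real client bindings: the `WasiNn` trait, the `ToyBackend` type, and the `run_inference` helper are hypothetical names introduced here, tensors are simplified to `f32` slices, and the toy "model" simply doubles every input value so the flow can be followed end to end.

```rust
use std::collections::HashMap;

// Hypothetical handle types, used only for this sketch.
type Graph = u32;
type ExecutionContext = u32;

/// Hypothetical trait mirroring the five operations listed above.
trait WasiNn {
    fn load(&mut self, model: &[&[u8]]) -> Result<Graph, String>;
    fn init_execution_context(&mut self, graph: Graph) -> Result<ExecutionContext, String>;
    fn set_input(&mut self, ctx: ExecutionContext, index: u32, tensor: &[f32]) -> Result<(), String>;
    fn compute(&mut self, ctx: ExecutionContext) -> Result<(), String>;
    fn get_output(&self, ctx: ExecutionContext, index: u32) -> Result<Vec<f32>, String>;
}

/// Toy backend: stores the model bytes and doubles every input value on `compute`.
#[derive(Default)]
struct ToyBackend {
    graphs: Vec<Vec<u8>>,
    contexts: Vec<Graph>,
    inputs: HashMap<(ExecutionContext, u32), Vec<f32>>,
    outputs: HashMap<(ExecutionContext, u32), Vec<f32>>,
}

impl WasiNn for ToyBackend {
    fn load(&mut self, model: &[&[u8]]) -> Result<Graph, String> {
        if model.is_empty() {
            return Err("no model bytes provided".into());
        }
        // The model is opaque to the guest; the host just keeps the bytes.
        self.graphs.push(model.concat());
        Ok((self.graphs.len() - 1) as Graph)
    }

    fn init_execution_context(&mut self, graph: Graph) -> Result<ExecutionContext, String> {
        if graph as usize >= self.graphs.len() {
            return Err("unknown graph".into());
        }
        self.contexts.push(graph);
        Ok((self.contexts.len() - 1) as ExecutionContext)
    }

    fn set_input(&mut self, ctx: ExecutionContext, index: u32, tensor: &[f32]) -> Result<(), String> {
        if ctx as usize >= self.contexts.len() {
            return Err("unknown execution context".into());
        }
        self.inputs.insert((ctx, index), tensor.to_vec());
        Ok(())
    }

    fn compute(&mut self, ctx: ExecutionContext) -> Result<(), String> {
        // Collect results first, then store them, to keep the borrows separate.
        let results: Vec<(u32, Vec<f32>)> = self
            .inputs
            .iter()
            .filter(|((c, _), _)| *c == ctx)
            .map(|((_, i), t)| (*i, t.iter().map(|x| x * 2.0).collect()))
            .collect();
        if results.is_empty() {
            return Err("no inputs bound to this context".into());
        }
        for (index, tensor) in results {
            self.outputs.insert((ctx, index), tensor);
        }
        Ok(())
    }

    fn get_output(&self, ctx: ExecutionContext, index: u32) -> Result<Vec<f32>, String> {
        self.outputs
            .get(&(ctx, index))
            .cloned()
            .ok_or_else(|| "no output available".to_string())
    }
}

/// Runs the full load / init / set_input / compute / get_output sequence.
fn run_inference<B: WasiNn>(backend: &mut B, model: &[u8], input: &[f32]) -> Result<Vec<f32>, String> {
    let graph = backend.load(&[model])?;
    let ctx = backend.init_execution_context(graph)?;
    backend.set_input(ctx, 0, input)?;
    backend.compute(ctx)?;
    backend.get_output(ctx, 0)
}
```

Calling `run_inference(&mut ToyBackend::default(), b"fake-model", &[1.0, 2.5])` walks through all five steps in order. A real guest module would make the same sequence of calls through the WASI NN client bindings instead of a local trait.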

The two back-ends from this repository implement the API defined above using each of the two runtimes mentioned. So why two implementations? The main reason has to do with the performance vs. ease of configuration trade-off. More specifically:

- the native ONNX runtime provides the best performance, with multi-threaded CPU execution and access to the GPU. Additionally, any ONNX model should be fully compatible with this runtime (keeping in mind our current implementation limitations described below). However, setting it up requires that the ONNX shared libraries be downloaded and configured on the host.

- the Tract runtime is implemented purely in Rust, and does not need any shared libraries. However, it passes only about 85% of the ONNX backend tests, and it does not implement internal multi-threading or GPU access.

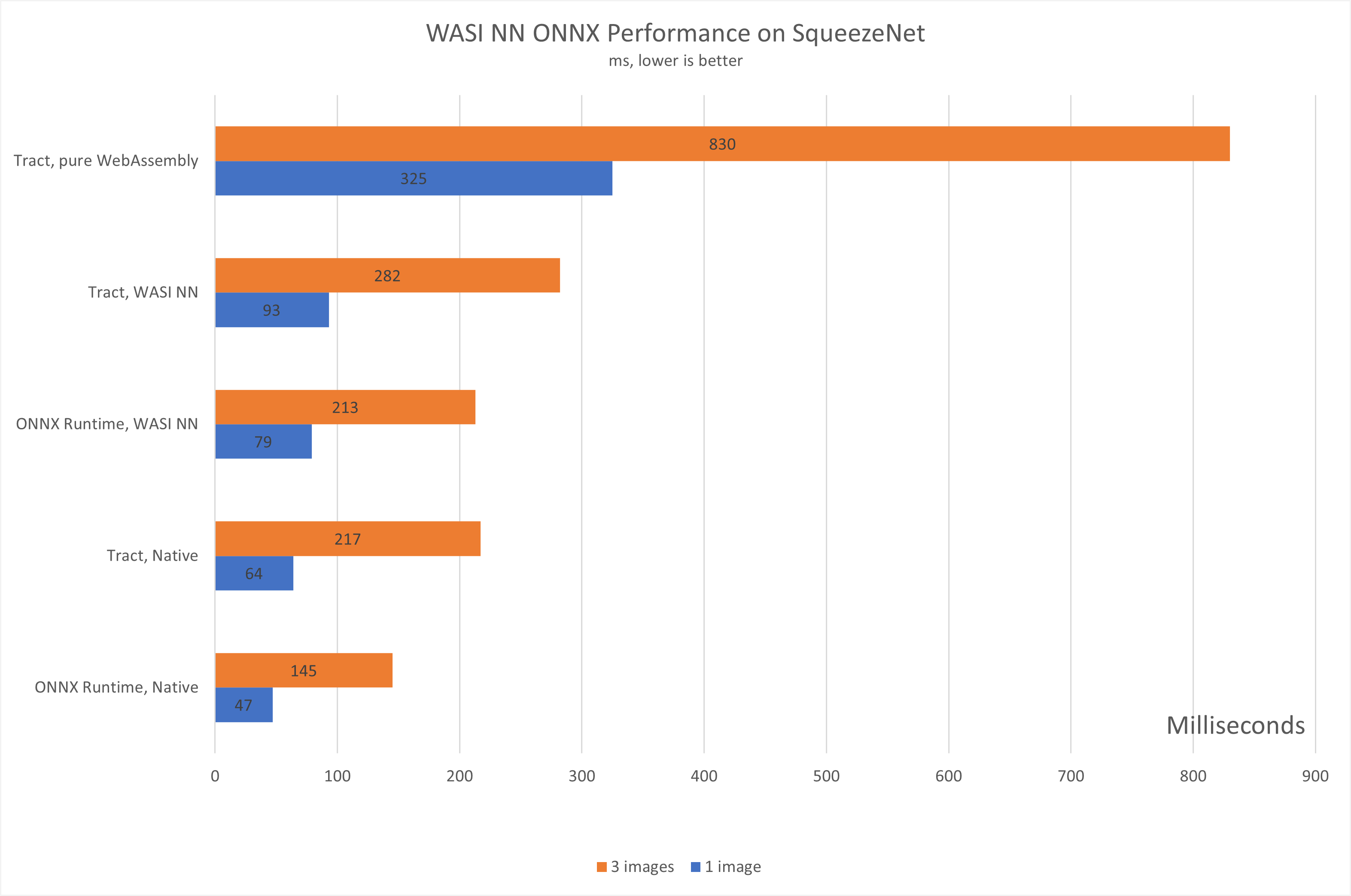

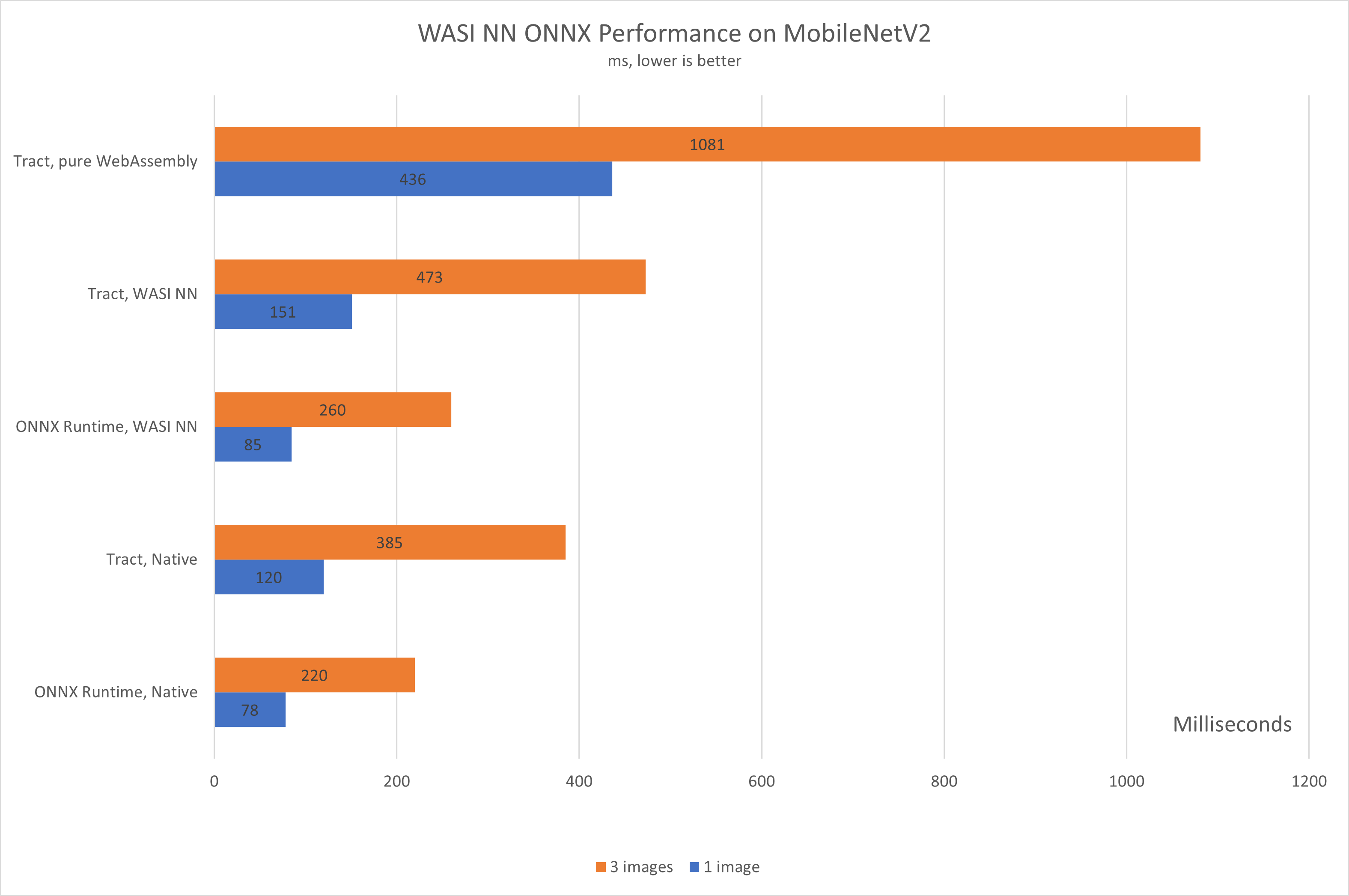

The following represents a very simple benchmark of running two computer vision models, SqueezeNetV1 and MobileNetV2, compiled natively, run with WASI NN with both back-ends, and run purely on WebAssembly using Tract. All inferences are performed on the CPU-only for now:

A few notes on the performance:

- this represents very early data, on a limited number of runs and models, and should only be interpreted in terms of the relative performance difference we can expect between native, WASI NN and pure WebAssembly

- the ONNX runtime is running multi-threaded on the CPU only, as the GPU is not yet enabled

- in each case, all tests are executing the same ONNX model on the same images

- all WebAssembly modules (both those built with WASI NN and the ones running pure Wasm) are run with Wasmtime v0.28, with caching enabled

- no other special optimizations have been performed on either module, and we suspect that optimizations such as using wasm-opt, Wizer, or AOT compilation could significantly improve module startup time

- there are known limitations in both runtimes that, when fixed, should also significantly improve the performance

- as we test with more ONNX models, the data should be updated

Current limitations

- only FP32 tensor types are currently supported (#20) - this is the main limitation right now, and it has to do with the way we track state internally. This should not affect popular models (such as those used in computer vision scenarios).

- GPU execution is not yet enabled in the native ONNX runtime (#9)
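Since only FP32 tensors are supported, a guest module typically needs to move between `f32` values and raw bytes when binding inputs and reading outputs. The sketch below shows one way to do that, assuming the tensor data is laid out as consecutive little-endian 32-bit floats (WebAssembly linear memory is little-endian). The helper names `f32_to_bytes` and `bytes_to_f32` are hypothetical and not part of this project or the WASI NN bindings.

```rust
/// Packs f32 values into a byte buffer, 4 little-endian bytes per value.
/// Hypothetical helper for preparing FP32 tensor data.
fn f32_to_bytes(values: &[f32]) -> Vec<u8> {
    values.iter().flat_map(|v| v.to_le_bytes()).collect()
}

/// Unpacks a byte buffer into f32 values.
/// Returns an error if the length is not a multiple of 4 bytes.
fn bytes_to_f32(bytes: &[u8]) -> Result<Vec<f32>, String> {
    if bytes.len() % 4 != 0 {
        return Err(format!(
            "buffer length {} is not a multiple of 4",
            bytes.len()
        ));
    }
    Ok(bytes
        .chunks_exact(4)
        .map(|c| f32::from_le_bytes([c[0], c[1], c[2], c[3]]))
        .collect())
}
```

A round trip through both helpers returns the original values, and a buffer whose length is not a multiple of 4 is rejected rather than silently truncated.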

Building, running, and writing WebAssembly modules that use WASI NN

The following are the build instructions for Linux. First, download the ONNX runtime 1.6 shared library and unarchive it. Then, build the helper binary:

➜ cargo build --release --bin wasmtime-onnx --features tract,c_onnxruntime

At this point, follow the Rust example and test to build a WebAssembly module that uses this API through the Rust client bindings.

Then, to run the example and test from this repository, using the native ONNX runtime:

➜ LD_LIBRARY_PATH=<PATH-TO-ONNX>/onnx/onnxruntime-linux-x64-1.6.0/lib RUST_LOG=wasi_nn_onnx_wasmtime=info,wasmtime_onnx=info \
./target/release/wasmtime-onnx \
tests/rust/target/wasm32-wasi/release/wasi-nn-rust.wasm \
--cache cache.toml \
--dir tests/testdata \
--invoke batch_squeezenet \
--c-runtime

Or to run the same function using the Tract runtime:

➜ LD_LIBRARY_PATH=<PATH-TO-ONNX>/onnx/onnxruntime-linux-x64-1.6.0/lib RUST_LOG=wasi_nn_onnx_wasmtime=info,wasmtime_onnx=info \
./target/release/wasmtime-onnx \
tests/rust/target/wasm32-wasi/release/wasi-nn-rust.wasm \
--cache cache.toml \
--dir tests/testdata \
--invoke batch_squeezenet

The project exposes two Cargo features: tract, which is the default feature, and c_onnxruntime, which when enabled, will compile support for using the C API for the ONNX runtime. After building with this feature enabled, running the binary requires passing the path to the ONNX shared libraries, either as part of the PATH, or by setting the LD_LIBRARY_PATH.
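As a rough illustration of how a Cargo feature and a command-line flag such as `--c-runtime` could interact, the sketch below picks a runtime at startup. It is not taken from this project's source: the `Runtime` enum and both functions are hypothetical, and the choice to return an error when the native runtime is requested but was not compiled in is an assumption made for the example.

```rust
/// Hypothetical enum naming the two back-ends described in this document.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Runtime {
    Tract,
    COnnxRuntime,
}

/// Pure selection logic: `use_c_runtime` stands for the command-line flag,
/// `c_runtime_compiled` for whether the c_onnxruntime feature was enabled.
fn select_runtime_with(use_c_runtime: bool, c_runtime_compiled: bool) -> Result<Runtime, String> {
    match (use_c_runtime, c_runtime_compiled) {
        // Tract is the default when the native runtime is not requested.
        (false, _) => Ok(Runtime::Tract),
        (true, true) => Ok(Runtime::COnnxRuntime),
        // Assumed behavior: fail loudly instead of silently falling back.
        (true, false) => Err(
            "native ONNX runtime requested, but the c_onnxruntime feature was not enabled at build time"
                .to_string(),
        ),
    }
}

/// Same logic, with the feature check resolved at compile time via `cfg!`.
fn select_runtime(use_c_runtime: bool) -> Result<Runtime, String> {
    select_runtime_with(use_c_runtime, cfg!(feature = "c_onnxruntime"))
}
```

Keeping the decision in a pure function makes it easy to test both feature configurations without rebuilding, while the thin `cfg!` wrapper reflects what the compiled binary actually supports.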

Contributing

We welcome any contribution that adheres to our code of conduct. This project is experimental, and we are delighted you are interested in using or contributing to it! Please have a look at the issue queue and either comment on existing issues, or open new ones for bugs or questions. We are particularly looking for help in fixing the current known limitations, so please have a look at issues labeled with help wanted.

Code of Conduct

This project has adopted the Microsoft Open Source Code of Conduct.

For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.