This project pulls data from the Spotify API and ingests it into a Kafka broker. Spark Streaming can connect to this broker and process the data. The project can be set up on your local computer or deployed to Azure.

├── README.md
├── kafka
│   ├── cities.csv
│   ├── config.yml
│   ├── kafka_utils.py
│   ├── producer.py
│   ├── spotify_utils.py
│   └── user_utils.py
├── requirements.txt
├── scripts
│   ├── install_docker.sh
│   └── setup_kafka.sh
├── spark_streaming
│   ├── README.md
│   ├── images
│   │   ├── databricks.png
│   │   └── notebook.png
│   ├── process_stream.py
│   ├── schema.py
│   └── spark streaming notebook.ipynb
└── terraform
    ├── README.md
    ├── data.tf
    ├── images
    │   └── resources.png
    ├── main.tf
    ├── modules
    │   └── general_vm
    │       ├── main.tf
    │       ├── outputs.tf
    │       ├── providers.tf
    │       ├── run_kafka.sh
    │       └── variables.tf
    ├── outputs.tf
    ├── providers.tf
    ├── terraform.tfstate
    ├── terraform.tfstate.backup
    ├── terraform.tfvars
    ├── variables.tf
    ├── vnet.tf
    └── workspace.tf

You need to provide your credentials in the .env file.

Go to https://developer.spotify.com/. Sign in with your account.

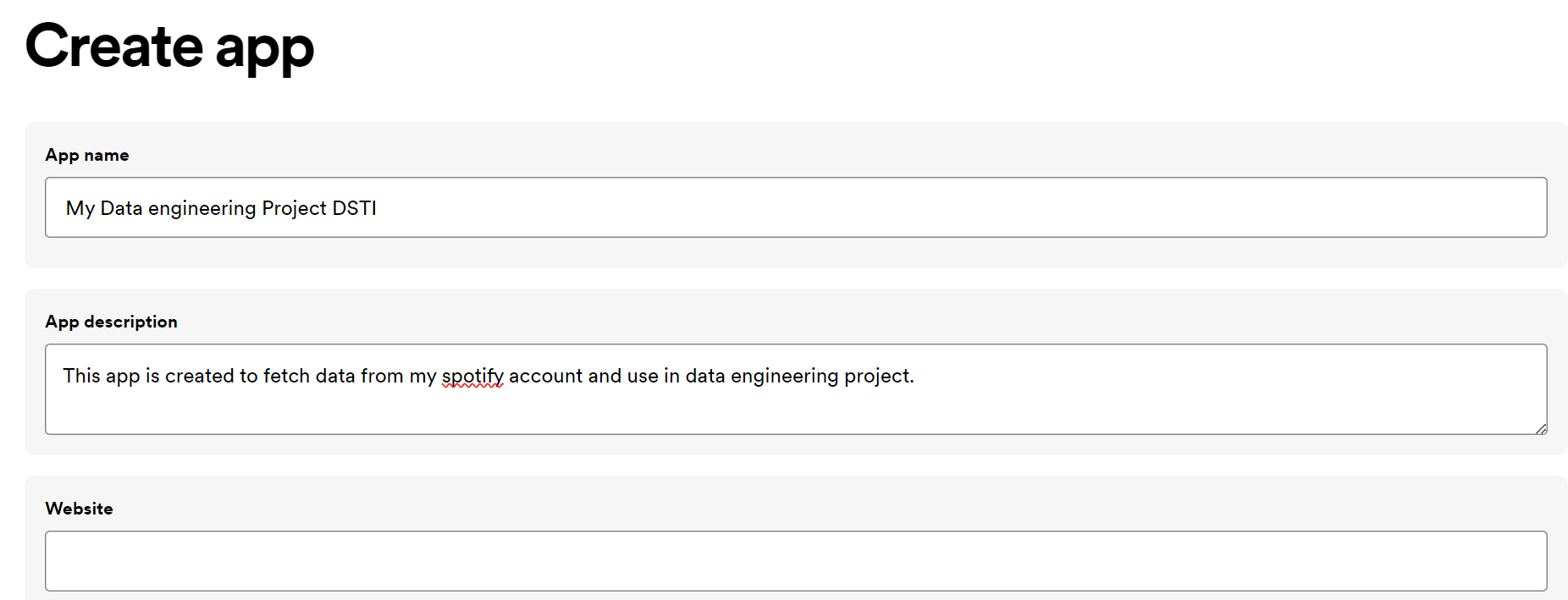

Now create a new app as follows:

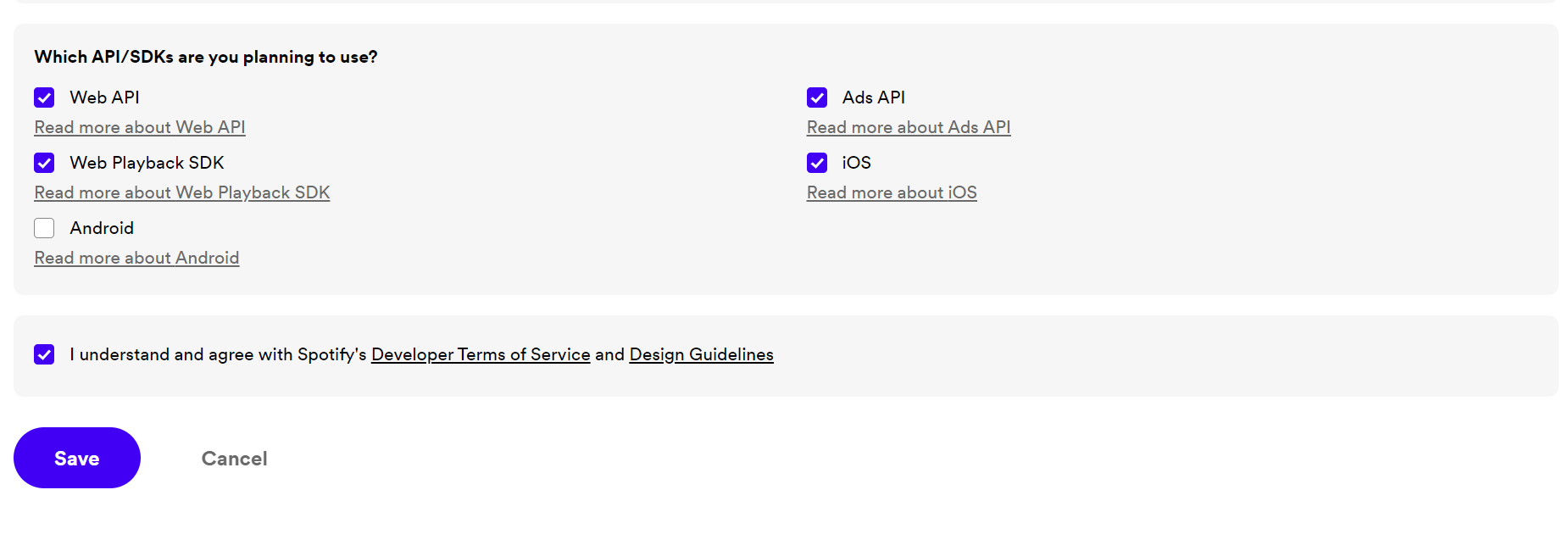

After filling in all the options, click Submit.

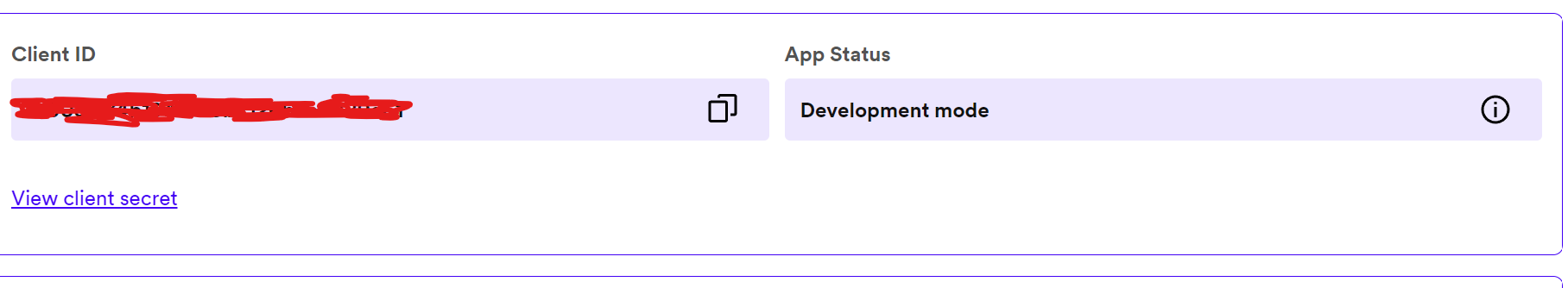

Now go to Settings and click on App settings.

Note down your client ID and client secret.

Note: You do not need to fill in the .env file with your secret; I have already done so. When the Kafka broker VM is deployed, it uses these credentials. A future improvement would be to use credentials supplied by the user when the project runs on an Azure VM.
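For readers who want to see how the client ID and client secret are typically used, here is a minimal sketch. It is not the code in `spotify_utils.py`. It assumes the .env file holds `KEY=VALUE` lines, and the variable names `SPOTIFY_CLIENT_ID` and `SPOTIFY_CLIENT_SECRET` are hypothetical. It also assumes Spotify's client credentials flow, in which the two values are Base64-encoded into a Basic `Authorization` header and posted to the token endpoint.

```python
import base64

# Token endpoint used by Spotify's client credentials flow.
TOKEN_URL = "https://accounts.spotify.com/api/token"


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def basic_auth_header(client_id, client_secret):
    """Build the Basic auth header from the app's ID and secret."""
    raw = f"{client_id}:{client_secret}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}


# Hypothetical file contents; the real variable names may differ.
sample = 'SPOTIFY_CLIENT_ID=abc\n# comment\nSPOTIFY_CLIENT_SECRET="xyz"\n'
env = parse_env(sample)
headers = basic_auth_header(env["SPOTIFY_CLIENT_ID"], env["SPOTIFY_CLIENT_SECRET"])
```

A token request would then POST the form field `grant_type=client_credentials` to `TOKEN_URL` with these headers.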

The real-time data from Spotify is ingested into Kafka. See the kafka directory for the code.

To run it locally:

- Install Kafka.

- `python -m pip install -r requirements.txt`

- `cd kafka`

- Create the topic named in the .env file.

- Run `python producer.py`
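To give a rough idea of what a producer does, the sketch below serializes each event to JSON bytes and hands it to a `send` callable. This is an illustration under assumptions, not the contents of `producer.py`: the function names, the event fields, and the topic name `spotify` are all hypothetical, and the Kafka client is injected so the example runs without a broker.

```python
import json


def encode_event(event):
    """Serialize one event dict to compact UTF-8 JSON bytes."""
    return json.dumps(event, separators=(",", ":")).encode("utf-8")


def publish(events, topic, send):
    """Send every event to `topic` via `send(topic, payload)`; return the count."""
    count = 0
    for event in events:
        send(topic, encode_event(event))
        count += 1
    return count


# Stand-in for a Kafka client: collect messages in a list.
outbox = []
published = publish(
    [{"song": "example song", "city": "Pune"}],  # hypothetical event fields
    "spotify",                                    # hypothetical topic name
    lambda topic, payload: outbox.append((topic, payload)),
)
```

With the kafka-python package, for example, `send` could be `lambda t, v: producer.send(t, value=v)` where `producer = KafkaProducer(bootstrap_servers="localhost:9092")`. Whether this project uses that client is an assumption.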

To run it on Azure, follow this README.

We can connect to the Kafka cluster and process the data. One example query is:

Which song is most popular among Indian males?
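The logic of this query can be sketched in plain Python before moving to Spark: filter the events by country and gender, then count plays per song. The field names `song`, `country`, and `gender` are assumptions about the event layout, not taken from `schema.py`.

```python
from collections import Counter


def most_popular_song(events, country="India", gender="male"):
    """Return (song, play_count) for the most played song in the group, or None."""
    counts = Counter(
        event["song"]
        for event in events
        if event.get("country") == country
        and event.get("gender") == gender
        and "song" in event
    )
    if not counts:
        return None
    return counts.most_common(1)[0]


# Hypothetical sample events.
sample_events = [
    {"song": "A", "country": "India", "gender": "male"},
    {"song": "B", "country": "India", "gender": "male"},
    {"song": "A", "country": "India", "gender": "male"},
    {"song": "B", "country": "India", "gender": "female"},
    {"song": "B", "country": "Nepal", "gender": "male"},
]
top = most_popular_song(sample_events)
```

In a streaming job the same filter and count would run continuously over incoming records instead of over a fixed list.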

This can run either locally or on Azure.

- Make sure Kafka is running; follow the Kafka section above for that.

- Install PySpark.

- `cd spark_streaming`

- Run `python process_stream.py`

See this README for more details.
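As a rough sketch of how a Spark Structured Streaming job can read from the broker, the code below builds the Kafka source options and parses each message value as JSON. It is not the contents of `process_stream.py`. The function names are hypothetical, the caller must supply a schema (for example a `StructType`), and the job needs the Spark Kafka connector package (`spark-sql-kafka-0-10`) on its classpath.

```python
def kafka_source_options(bootstrap_servers, topic):
    """Options for Spark's built-in "kafka" streaming source."""
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "subscribe": topic,
        "startingOffsets": "latest",  # only read messages that arrive after start
    }


def read_events(spark, bootstrap_servers, topic, schema):
    """Return a streaming DataFrame with one column per field in `schema`."""
    # Imported here so the options helper works without PySpark installed.
    from pyspark.sql import functions as F

    raw = (
        spark.readStream.format("kafka")
        .options(**kafka_source_options(bootstrap_servers, topic))
        .load()
    )
    # Kafka delivers the value as bytes; cast to string, then parse the JSON.
    parsed = raw.select(
        F.from_json(F.col("value").cast("string"), schema).alias("event")
    )
    return parsed.select("event.*")


# Hypothetical broker address and topic name.
options = kafka_source_options("localhost:9092", "spotify")
```

The resulting DataFrame can then be filtered and grouped, and started with `writeStream`, to answer queries such as the one above.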

- Add CI/CD with GitHub Actions.

- Handle Spotify credentials better.

- Try more complex queries.

- Try Delta tables.

- Add visualizations, such as showing the popular songs in every city of India on a map.

- Store query results in Azure Blob Storage.