This repository contains the PyTorch code for the ICASSP 2023 paper "Preformer: Predictive Transformer with Multi-Scale Segment-wise Correlations for Long-Term Time Series Forecasting".

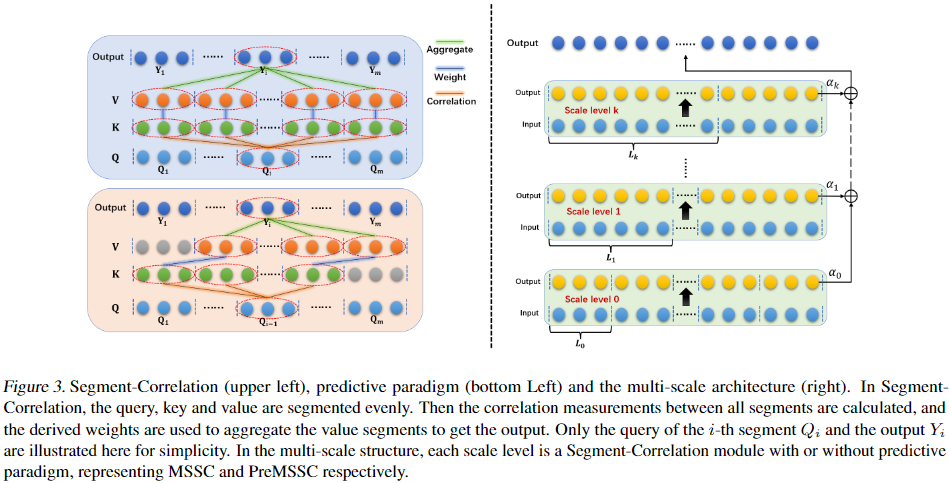

The core component of Preformer is the Multi-Scale Segment-wise Correlation (MSSC) module.
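To give a rough feel for segment-wise correlation, here is a simplified NumPy sketch. It is a hypothetical illustration, not the PyTorch module implemented in this repository. It assumes that each sequence is cut into non-overlapping segments of `seg_len` steps and that each segment is flattened into one vector. Attention weights are then computed between whole segments rather than between individual time steps. With the hypothetical `predictive=True` flag, each key segment is paired with the value segment that follows it, which is one plausible reading of the predictive paradigm. The function names, the default segment lengths, and the simple averaging across scales are all assumptions.

```python
import numpy as np


def segment_correlation(q, k, v, seg_len, predictive=False):
    """Single-head segment-wise attention (illustrative sketch, not the repo's code).

    q has shape (Lq, d); k and v have shape (Lk, d).
    Both Lq and Lk must be divisible by seg_len. Returns an array of shape (Lq, d).
    """
    lq, d = q.shape
    if lq % seg_len or k.shape[0] % seg_len or v.shape[0] % seg_len:
        raise ValueError("sequence lengths must be divisible by seg_len")
    # Flatten each segment of seg_len consecutive steps into one vector of size seg_len * d.
    qs = q.reshape(-1, seg_len * d)
    ks = k.reshape(-1, seg_len * d)
    vs = v.reshape(-1, seg_len * d)
    if predictive:
        if ks.shape[0] < 2:
            raise ValueError("predictive mode needs at least two key segments")
        # Assumed predictive pairing: key segment j is matched with value segment j + 1.
        ks, vs = ks[:-1], vs[1:]
    # Scaled dot-product correlation between every query segment and every key segment.
    scores = qs @ ks.T / np.sqrt(seg_len * d)
    # Numerically stable softmax over key segments.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    # Each output segment is a weighted sum of whole value segments.
    return (weights @ vs).reshape(lq, d)


def multi_scale_segment_correlation(q, k, v, seg_lens=(2, 4, 8), predictive=False):
    """Average segment-wise attention over several segment lengths (assumed aggregation)."""
    outs = [segment_correlation(q, k, v, s, predictive) for s in seg_lens]
    return np.mean(outs, axis=0)
```

Because each segment is compared as a whole, a sequence of length L with segment length `seg_len` produces an (L / seg_len) x (L / seg_len) score matrix instead of an L x L one. Combining several segment lengths lets the module capture dependencies at different temporal scales.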

This repository uses some code from Autoformer and Informer. Thanks to the authors for their work!

Requirements: Python 3.6 and PyTorch 1.9.0.

We use the datasets provided by Autoformer directly, so you can download them from the Google Drive link given in the Autoformer repository. All datasets are already pre-processed and ready to use. After downloading, put the dataset files (such as `ETTh1.csv`) in the `./data/` folder.
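Before launching a run, a quick sanity check can catch a misplaced file or a mismatched `--enc_in`. The helper below is a hypothetical sketch, not part of this repository. It assumes the first column of each CSV is a timestamp named `date`, as in the ETT files, and that with `--features M` every other column is one input variable.

```python
import csv
import os


def check_dataset(root_path, data_path, enc_in):
    """Hypothetical helper: verify a dataset CSV exists and has enc_in variable columns.

    Assumes the timestamp column is named 'date'. Returns the variable column names.
    """
    path = os.path.join(root_path, data_path)
    if not os.path.isfile(path):
        raise FileNotFoundError(f"{path} not found; download it and put it in {root_path}")
    with open(path, newline="") as f:
        header = next(csv.reader(f))
    # Every column except the timestamp is treated as one variable.
    variables = [name for name in header if name != "date"]
    if len(variables) != enc_in:
        raise ValueError(f"{data_path} has {len(variables)} variables, but enc_in={enc_in}")
    return variables
```

For example, `check_dataset("./data/", "ETTh1.csv", enc_in=7)` should return the seven ETTh1 variables (HUFL, HULL, MUFL, MULL, LUFL, LULL, OT). These seven variables are why the commands below set `--enc_in 7 --dec_in 7 --c_out 7`.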

Train Preformer on ETTh1 with an input window of 96 steps and a prediction horizon of 48 steps:

```bash
python run.py --is_training 1 --root_path ./data/ --data_path ETTh1.csv \
  --model_id ETTh1_96_48 --model Preformer --data ETTh1 --features M \
  --seq_len 96 --label_len 48 --pred_len 48 \
  --e_layers 2 --d_layers 1 --factor 4 --enc_in 7 --dec_in 7 --c_out 7 \
  --des 'Exp' --itr 1 --n_heads 4 --d_model 32 --d_ff 128
```
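The three length arguments interact in the way sketched below. This assumes Preformer keeps the Informer/Autoformer-style data loader that this repository builds on. The encoder sees `seq_len` steps. The decoder receives the last `label_len` of those steps as a start token, followed by `pred_len` zero placeholders for the horizon to forecast. `make_window` is a hypothetical, list-based illustration, not a function in this repository.

```python
def make_window(series, index, seq_len=96, label_len=48, pred_len=48):
    """Hypothetical sketch: cut one sample from a list of values (one time step per element)."""
    s_begin, s_end = index, index + seq_len
    r_begin = s_end - label_len              # decoder window overlaps the last label_len input steps
    r_end = r_begin + label_len + pred_len
    if r_end > len(series):
        raise ValueError("window runs past the end of the series")
    x_enc = series[s_begin:s_end]            # encoder input: seq_len steps
    y = series[r_begin:r_end]                # label_len known steps + pred_len future steps
    # Decoder input: known start token followed by zero placeholders for the forecast horizon.
    x_dec = y[:label_len] + [0.0] * pred_len
    return x_enc, x_dec, y
```

With the settings above, each sample therefore uses 96 input steps, a 96-step decoder input (48 known and 48 placeholder steps), and is scored on the final 48 steps of `y`.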

Evaluate the trained model by running the same command with `--is_training 0` (keep all other arguments identical to training):

```bash
python run.py --is_training 0 --root_path ./data/ --data_path ETTh1.csv \
  --model_id ETTh1_96_48 --model Preformer --data ETTh1 --features M \
  --seq_len 96 --label_len 48 --pred_len 48 \
  --e_layers 2 --d_layers 1 --factor 4 --enc_in 7 --dec_in 7 --c_out 7 \
  --des 'Exp' --itr 1 --n_heads 4 --d_model 32 --d_ff 128
```
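Long-term forecasting results in this line of work are usually reported as mean squared error (MSE) and mean absolute error (MAE). The sketch below shows those two metrics on flattened predictions. It assumes, as in Autoformer's evaluation code, that the errors are averaged over all predicted time steps and variables. The function names are illustrative, not this repository's API.

```python
def mse(pred, true):
    """Mean squared error over flattened predictions (illustrative)."""
    if len(pred) != len(true) or not true:
        raise ValueError("pred and true must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)


def mae(pred, true):
    """Mean absolute error over flattened predictions (illustrative)."""
    if len(pred) != len(true) or not true:
        raise ValueError("pred and true must be non-empty and of equal length")
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)
```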

If you find this repository useful, please cite our paper:

```bibtex
@inproceedings{du2022preformer,
  title={Preformer: Predictive Transformer with Multi-Scale Segment-wise Correlations for Long-Term Time Series Forecasting},
  author={Du, Dazhao and Su, Bing and Wei, Zhewei},
  booktitle={ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  year={2023},
  organization={IEEE}
}
```