Disclaimer: This is not an official HashiCorp repository; it is intended for learning purposes only.

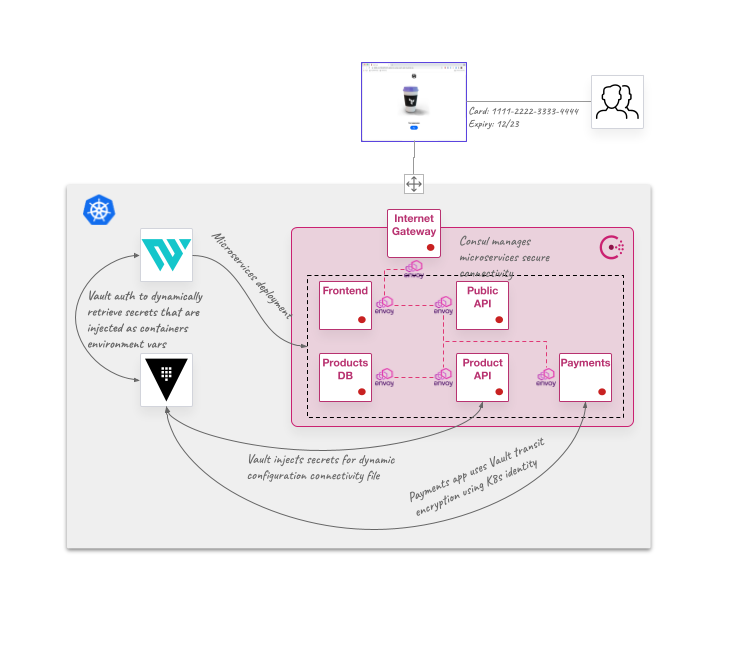

This repository shows an example of the Zero-Trust concept using several HashiCorp tools:

- HashiCorp Vault to centrally handle secrets management and encryption with identity-based authentication

- HashiCorp Consul to secure service-to-service connectivity using Consul Service Mesh

- HashiCorp Waypoint to deploy the application services and dynamically manage configuration injection

Here is an image of the deployment environment:

By Zero-Trust we mean following three principles:

- Least privilege. Everything is managed according to the least privilege principle: anyone should have access only to the specific resources they need

- Trust nothing. Every access or action must always be authenticated and authorized

- Assume breach. Design your defenses and preventive controls assuming that your environment will be compromised at some point

Some important configurations in this learning repo are related to the Zero-Trust concept:

- Vault Kubernetes authentication, which gives some Kubernetes pods authenticated access to secrets and lets the application encrypt data in transit, enforced by policies defined in Vault

- Consul Template, running as a sidecar container, dynamically configures some services with connectivity configuration from Consul and secrets from Vault

- Dynamic deployment configuration: environment variables are injected from Vault secrets at deployment time

- Consul Connect service mesh configuration to enforce mTLS connections for East-West traffic

- Consul CRDs, such as intentions and ingress gateway configuration, to enforce secure service mesh connectivity in Kubernetes
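Vault Kubernetes authentication is the identity step the other items build on. A pod presents its Kubernetes service account JWT to Vault and receives a Vault token limited by the policies bound to a Vault role. The following minimal Python sketch shows that login against Vault's HTTP API (`POST /v1/<mount>/login`). It assumes the default `auth/kubernetes` mount path and the standard in-pod token location. It is not the code used by this repo's scripts, and the role name in the usage line is a placeholder.

```python
import json
import urllib.request

# Standard location where Kubernetes mounts the pod's service account token.
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_login_request(vault_addr: str, role: str, jwt: str,
                        mount_path: str = "auth/kubernetes") -> urllib.request.Request:
    """Build the Kubernetes auth login request: POST {"role", "jwt"} to <mount>/login."""
    body = json.dumps({"role": role, "jwt": jwt}).encode()
    return urllib.request.Request(
        f"{vault_addr.rstrip('/')}/v1/{mount_path.strip('/')}/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def client_token(login_response: dict) -> str:
    """Extract the Vault token from a successful login response."""
    return login_response["auth"]["client_token"]


def login(vault_addr: str, role: str) -> str:
    """Log in from inside a pod using its own service account identity."""
    with open(SA_TOKEN_PATH) as f:
        jwt = f.read().strip()
    req = build_login_request(vault_addr, role, jwt)
    with urllib.request.urlopen(req) as resp:
        return client_token(json.load(resp))
```

From inside a pod, `login("http://vault.vault:8200", "<role>")` would return a token whose permissions are only those of the policies attached to that role. This is how least privilege is tied to workload identity instead of a shared static secret.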

To deploy the whole environment you will:

- Create or get access to a Kubernetes cluster. This repo was tested with Minikube and GKE clusters

- Deploy Vault with Helm. We provide a `values.yaml` file that deploys a simple 1-node cluster with file persistent storage (without HA)

- Deploy Consul with Helm. We provide a `values.yaml` file that deploys a simple 1-node cluster with ACLs enabled

- Install Waypoint with Helm. Here is the `values.yaml`, which deploys the Waypoint server and runner

- Create a namespace `apps` to deploy your applications

Then you will be able to test the Zero-Trust application environment by:

- Configuring Vault policies and authentication methods, and creating all the required secrets

- Configuring the initial Waypoint login and the Waypoint server to manage dynamic configuration with Vault

- Deploying your applications with Waypoint:

  - If you have Docker Registry credentials to push to a container registry, you can use the Waypoint config in `Project/applications/waypoint.hcl`

  - If you just want to work with the default application images, you can use the Waypoint config in `Project/waypoint.hcl`

- Configuring Consul intentions and service defaults to access your example application

I have included some scripts to make the process easier, as explained below.

You will need the following to work with this repo:

- A macOS or Linux terminal

- Minikube or GCP access to create the K8s cluster (any other Kubernetes environment should work, but I didn't test it)

- kubectl CLI tool

- A local Docker installation

- Waypoint binary

- jq (the installation scripts use it to parse JSON)

To deploy everything in just a couple of minutes, follow these steps using the scripts (the example uses Minikube, but GKE is similar):

- If you want to deploy locally, create a Minikube cluster:

  ```bash
  minikube start -p hashikube --cpus='4' --memory='8g'
  ```

Note: Instead of using Minikube, if you have a GCP account with GKE admin permissions, you can create a GKE cluster from your terminal (install the gcloud SDK first):

```bash
gcloud auth login
gcloud container clusters create hashikube \
  -m n2-standard-2 \
  --num-nodes 3 \
  --zone europe-west1-c \
  --release-channel rapid
gcloud container clusters get-credentials hashikube --zone europe-west1-c
```

- Open a new terminal and run `minikube tunnel` to expose the `LoadBalancer` services. You don't need to do this if you are running in a GKE environment, or if you use MetalLB or similar in your single-node cluster:

  ```bash
  minikube tunnel -p hashikube -c
  ```

Note: If you don't want to use `minikube tunnel`, you can use the MetalLB addon from Minikube:

```bash
minikube addons enable metallb -p hashikube
minikube ip # Take note of the IP address to configure your LBs range
minikube addons configure metallb -p hashikube
# -- Enter Load Balancer Start IP: <next IP address after your Minikube IP>
# -- Enter Load Balancer End IP: <last IP address you want for your LBs>
```

- Deploy Vault, Consul and Waypoint by running the following command in the first terminal you opened (or any terminal where `minikube tunnel` is not running):

  ```bash
  make install
  ```

- Configure Vault:

  ```bash
  make vault
  ```

- Configure Waypoint:

  ```bash
  make waypoint
  ```

NOTE: Wait for Waypoint to complete its deployment. The Waypoint runner can sometimes take a while to deploy. You can check with:

```
$ kubectl get po -n waypoint
NAME                          READY   STATUS      RESTARTS   AGE
waypoint-bootstrap--1-588ck   0/1     Completed   0          2m45s
waypoint-runner-0             1/1     Running     0          3m5s
waypoint-server-0             1/1     Running     0          3m5s
```

- Deploy Consul CRDs:

  ```bash
  make consul
  ```
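You can script the Waypoint runner check from the NOTE above instead of re-running `kubectl get po` by hand. The following minimal Python sketch polls `kubectl get po -n waypoint -o json` and waits until every pod whose name starts with `waypoint-runner` reports the `Ready` condition. It assumes `kubectl` is on your `PATH` and pointed at the right cluster. The 10-second poll interval and 600-second timeout are arbitrary choices, not values taken from this repo's scripts.

```python
import json
import subprocess
import time


def pod_ready(pod: dict) -> bool:
    """True if the pod's Ready condition is "True" (the 1/1 in kubectl's READY column)."""
    for cond in pod.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status") == "True"
    return False


def runner_ready(pod_list: dict, prefix: str = "waypoint-runner") -> bool:
    """True if at least one runner pod exists and all runner pods are Ready."""
    runners = [p for p in pod_list.get("items", [])
               if p["metadata"]["name"].startswith(prefix)]
    return bool(runners) and all(pod_ready(p) for p in runners)


def wait_for_runner(namespace: str = "waypoint", timeout: int = 600) -> bool:
    """Poll kubectl until the runner is Ready or the timeout (seconds) expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        out = subprocess.run(
            ["kubectl", "get", "po", "-n", namespace, "-o", "json"],
            capture_output=True, text=True, check=True,
        ).stdout
        if runner_ready(json.loads(out)):
            return True
        time.sleep(10)
    return False
```

The completed `waypoint-bootstrap` job pod is never Ready, so the sketch only looks at runner pods. For a one-off check, `kubectl wait --for=condition=Ready pod/waypoint-runner-0 -n waypoint --timeout=300s` achieves the same thing.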

Use the main Waypoint configuration at `Project/waypoint.hcl` to deploy the application. It simply pulls prebuilt images from the `hcdcanadillas` registry. To deploy, run:

```bash
cd Project
waypoint init
waypoint up
```

NOTE: The images are built for the x86 architecture. If you are running on an ARM-based machine such as an Apple M1, change the `platform` parameter value to `linux/arm64`:

```bash
waypoint up -var platform=linux/arm64
```

This uses the images tagged `*-waypoint-arm64` from the `hcdcanadillas` registry, which are built for the `arm64` architecture. If those images don't work, you can also switch to the `ghcr.io/dcanadillas/hashicups` registry in the Waypoint input variables.

If you want to build the whole project and push the images to your own container registry, use the other Waypoint configuration located at `Project/applications/waypoint.hcl`. Take a look at this README.
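If you automate the deployment, you can choose the `platform` override from the host architecture instead of remembering it. The following minimal Python sketch uses `platform.machine()` to add `-var platform=linux/arm64` only on ARM hosts and otherwise leaves `waypoint up` unchanged, so the Waypoint config's own default applies. It assumes that `arm64` (macOS on Apple Silicon) and `aarch64` (Linux on ARM) are the machine names to match. A Python interpreter running under Rosetta reports `x86_64`.

```python
import platform
import shlex
from typing import List, Optional

# Machine names reported by platform.machine() on ARM hosts.
ARM_MACHINES = {"arm64", "aarch64"}


def waypoint_up_command(machine: Optional[str] = None) -> List[str]:
    """Return the `waypoint up` argv, adding the arm64 platform override on ARM hosts."""
    machine = (machine or platform.machine()).lower()
    cmd = ["waypoint", "up"]
    if machine in ARM_MACHINES:
        cmd += ["-var", "platform=linux/arm64"]
    return cmd


if __name__ == "__main__":
    # Print the command for this host so it can be copied or passed to subprocess.run.
    print(shlex.join(waypoint_up_command()))
```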

Behind the scenes, the previous commands:

- Pull the required application microservices from `hcdcanadillas/*-waypoint-amd64` using the Waypoint Docker plugin

- Deploy the different services as Helm releases using the Waypoint Helm plugin

Now you can access your application in a web browser by connecting to the Consul Ingress Gateway. It runs as a `LoadBalancer` service, so you can get the URL with:

```bash
echo "http://$(kubectl get svc -n consul consul-ingress-gateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}'):8080"
```

Because we are using the Waypoint Entrypoint, some of the configuration is injected into our application pods.
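The `echo` command that builds the Ingress Gateway URL only reads `.ip`. Some load balancer implementations publish a `hostname` instead, and the field stays empty until an external address is assigned (for example, before `minikube tunnel` is running). The following minimal Python sketch reads the same `status.loadBalancer.ingress` list from `kubectl get svc ... -o json` and falls back to `hostname`. The port `8080` comes from the command above. Everything else is an illustrative assumption.

```python
import json
import subprocess
from typing import Optional


def ingress_url(svc: dict, port: int = 8080) -> Optional[str]:
    """Build http://<ip-or-hostname>:<port> from a Service object, or None if unassigned."""
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None  # no external address yet (e.g. minikube tunnel not running)
    first = ingress[0]
    host = first.get("ip") or first.get("hostname")
    return f"http://{host}:{port}" if host else None


def consul_ingress_url() -> Optional[str]:
    """Query the consul-ingress-gateway Service and return its URL."""
    out = subprocess.run(
        ["kubectl", "get", "svc", "-n", "consul", "consul-ingress-gateway", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return ingress_url(json.loads(out))
```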

You can check the Waypoint configuration used to connect to Vault:

```
$ waypoint config source-get -type vault
KEY                     | VALUE
------------------------+--------------------------
kubernetes_role         | waypoint
skip_verify             | true
namespace               | root
addr                    | http://vault.vault:8200
auth_method             | kubernetes
auth_method_mount_path  | auth/kubernetes
```

You can also check the variables injected into the applications:

```
$ waypoint config get
 SCOPE          | NAME              | VALUE               | WORKSPACE | LABELS
----------------+-------------------+---------------------+-----------+---------
 app: postgres  | POSTGRES_PASSWORD | <dynamic via vault> |           |
 app: postgres  | POSTGRES_USER     | <dynamic via vault> |           |
```

And you can read the values inside the pod using Waypoint:

```
$ waypoint exec -app postgres printenv POSTGRES_USER
Connected to deployment v1
postgres

$ waypoint exec -app postgres printenv POSTGRES_PASSWORD
Connected to deployment v1
password
```

You can also confirm these values in Vault:

```
$ kubectl exec -ti vault-0 -n vault -- vault kv get -field data kv/hashicups-db
map[password:password username:postgres]
```
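The same secret can be read over Vault's HTTP API. This is roughly what any client with a suitably scoped token sees. The following minimal Python sketch assumes the `kv/` mount is a KV version 2 engine, where reads go to `/v1/<mount>/data/<path>` and the key/value pairs sit under `data.data`. The README does not state the KV version, so version 1 is handled as well. The token is a placeholder that would come from a Kubernetes auth login.

```python
import json
import urllib.request


def kv_read_request(vault_addr: str, token: str, mount: str, path: str,
                    kv_version: int = 2) -> urllib.request.Request:
    """Build a GET request for a KV secret (v2 inserts 'data/' after the mount)."""
    base = vault_addr.rstrip("/")
    url = f"{base}/v1/{mount}/data/{path}" if kv_version == 2 else f"{base}/v1/{mount}/{path}"
    return urllib.request.Request(url, headers={"X-Vault-Token": token})


def secret_data(response: dict, kv_version: int = 2) -> dict:
    """Return the key/value pairs: nested under data.data for KV v2, under data for v1."""
    data = response["data"]
    return data["data"] if kv_version == 2 else data


def read_secret(vault_addr: str, token: str, mount: str, path: str,
                kv_version: int = 2) -> dict:
    req = kv_read_request(vault_addr, token, mount, path, kv_version)
    with urllib.request.urlopen(req) as resp:
        return secret_data(json.load(resp), kv_version)
```

For example, `read_secret("http://vault.vault:8200", token, "kv", "hashicups-db")` would return the same `username`/`password` map shown above, provided the token's policy allows reading that path.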

We consider this example a Zero-Trust use case because of the following configurations:

- The `product-api` microservice uses a `ConfigMap` that defines the connection string to the `postgres` database microservice. The configuration file data includes a username, a password, and the connection string with service and port. These values come from a Consul Template sidecar that connects to Vault and Consul to retrieve the data (credentials from Vault and service data from Consul). The Vault Agent injector is also used to share a Vault token with Consul Template, which authenticates using the Kubernetes service account identity.

- The `postgres` microservice uses Waypoint dynamic config to inject environment variables with database credentials from Vault. The values are securely stored in Vault, and Waypoint uses a Kubernetes service account identity to access only the required secrets, following the least privilege principle on which Vault is based.

- The `payments` microservice encrypts data in transit using the Vault transit engine, so it can encrypt and decrypt data with a centralized encryption key managed by Vault. Access to Vault is bootstrapped within the microservice application using Spring's connectivity to Vault.

- The database credentials used by the `product-api` service are dynamic database credentials from Vault: Vault creates DB users on demand with short-lived credentials to connect to the Postgres database.

To clean everything up, run the following from the root of this repo:

```bash
make clean
```