This repository contains the work I did for my FSharp Advent 2022 submission, the write-up for which can be found here; the submission is a good starting point for understanding the rationale and underlying concepts.

Experiments: To get started with viewing the experiments, run `Install.cmd`, which installs the prerequisites for running Polyglot Notebooks.

```fsharp
// Note: `resolution` is not defined in this snippet; it must already be in scope.

// Create a Gaussian Process Model based on the Squared Exponential Kernel for the Sin Function.
let gaussianModelForSin() : GaussianModel =
    let squaredExponentialKernelParameters : SquaredExponentialKernelParameters = { LengthScale = 1.; Variance = 1. }
    let gaussianProcess : GaussianProcess = createProcessWithSquaredExponentialKernel squaredExponentialKernelParameters
    let sinObjectiveFunction : ObjectiveFunction = QueryContinuousFunction Trig.Sin
    createModel gaussianProcess sinObjectiveFunction -Math.PI Math.PI resolution

// Maximize the Sin Function between -π and π for 10 iterations.
let model : GaussianModel = gaussianModelForSin()
let optimaResults : OptimaResults = findOptima model Goal.Max 10
printfn "Optima: Sin(x) is maximized when x = %A at %A" optimaResults.Optima.X optimaResults.Optima.Y
```

- Kernel Parameters for the Squared Exponential Kernel (Length Scale and Variance): The length scale controls the smoothness between the points, while the variance controls the vertical amplitude. A more comprehensive explanation can be found here, but in a nutshell, the length scale determines the length of the 'wiggles' in your function and the variance determines how far the function can fluctuate.

- Resolution: Our priors are uniformly initialized as a list ranging from the min to the max provided when we create the model. The resolution is the number of elements in that uniformly spaced list. The code for this is as follows:

  ```fsharp
  // src/Optimization.Core/Model.fs
  let inputs : double list =
      seq { for i in 0 .. (resolution - 1) do i }
      |> Seq.map (fun idx -> min + double idx * (max - min) / (double resolution - 1.))
      |> Seq.toList
  ```

  The idea here is to use a higher resolution where precision is of paramount importance, i.e., when you expect the optimum to require many digits after the decimal point.

- Iterations: The number of iterations the Bayesian Optimization algorithm should run for, i.e., the number of times the objective function will be computed by running the workload on the way to the optimum. More iterations are generally better; however, we'd be wasting cycles if we had already reached the maximum and kept iterating, which early stopping can curtail.

- Range: The Min and Max bounds of the inclusive interval we want to optimize over.
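To make the two kernel parameters concrete, here is a minimal sketch of the squared exponential kernel in its common textbook form, `variance * exp(-(x - x')^2 / (2 * lengthScale^2))`. The function name `squaredExponential` is hypothetical and is not the library's API; the library's own implementation may differ in detail.

```fsharp
// Hypothetical helper for illustration only, not the library's implementation.
// Textbook squared exponential kernel:
//   k(x, x') = variance * exp(-(x - x')^2 / (2 * lengthScale^2))
let squaredExponential (lengthScale : double) (variance : double) (x : double) (x' : double) : double =
    let distance = x - x'
    variance * exp (-(distance * distance) / (2. * lengthScale * lengthScale))

// At identical inputs the exponent is zero, so the kernel returns the variance itself.
let atSamePoint = squaredExponential 1. 1. 0.5 0.5

// For the same pair of inputs, a shorter length scale gives a lower correlation,
// which is what allows shorter 'wiggles' in the function.
let shortScale = squaredExponential 0.5 1. 0. 1.
let longScale  = squaredExponential 2.0 1. 0. 1.

printfn "k(x, x) = %f; short length scale: %f; long length scale: %f" atSamePoint shortScale longScale
```

In this sketch, scaling `variance` scales every kernel value by the same factor, which corresponds to the vertical amplitude described above.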

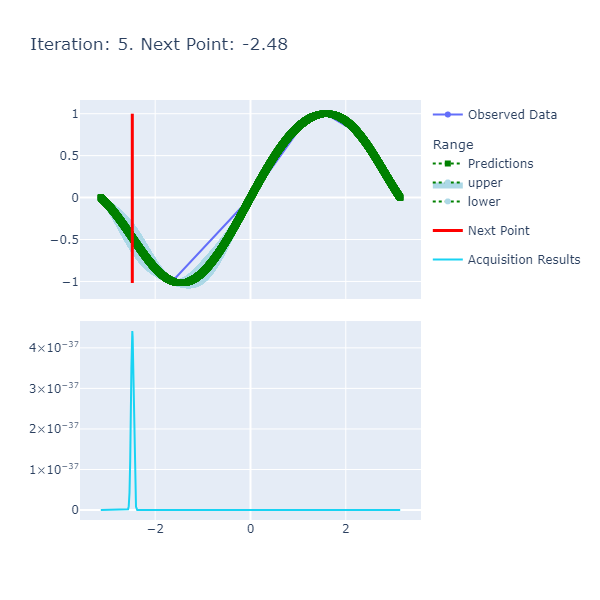

An example of a chart the algorithm is:

Here are the details:

- Predictions: The predictions represent the mean of the results obtained from the underlying model that approximates the unknown objective function. This model is also known as the Surrogate Model, and more details about it can be found here. The blue ranges indicate the area between the upper and lower limits based on the confidence generated by the posterior.

- Acquisition Results: This series represents the acquisition function, whose maximum indicates where it is best to search. More details on how the next point to search is discerned can be found here.

- Next Point: The next point is derived from the maximization of the acquisition function and points to where we should sample next.

- Observed Data: The observed data is the data collected from iteratively maximizing the acquisition function. As the number of observed data points increases with increased iterations, the expectation is that the predictions and observed data points converge if the model parameters are set correctly.
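The relationship between the chart series can be sketched in a few lines. Everything below is an assumption made for illustration: the `Prediction` record, the function names, the 1.96 multiplier for the band, and the sample values are all made up and are not taken from the library.

```fsharp
// Hypothetical record for illustration only; the library's own types may differ.
type Prediction = { X : double; Mean : double; StdDev : double; Acquisition : double }

// Lower and upper limits around the predicted mean. The 1.96 multiplier
// (a 95% band under a Gaussian posterior) is an assumption, not a library value.
let confidenceBand (p : Prediction) : double * double =
    (p.Mean - 1.96 * p.StdDev, p.Mean + 1.96 * p.StdDev)

// The next point to sample is the input whose acquisition value is largest.
let nextPoint (predictions : Prediction list) : double =
    (predictions |> List.maxBy (fun p -> p.Acquisition)).X

// Made-up values standing in for one iteration's chart data.
let predictions =
    [ { X = -1.0; Mean = -0.8; StdDev = 0.1; Acquisition = 0.02 }
      { X =  0.0; Mean =  0.0; StdDev = 0.5; Acquisition = 0.10 }
      { X =  1.5; Mean =  0.9; StdDev = 0.3; Acquisition = 0.35 } ]

let next = nextPoint predictions
let lower, upper = confidenceBand predictions.[2]
printfn "Next point to sample: %f (band at that point: %f to %f)" next lower upper
```

Sampling the objective at `next` would add one more observed data point, after which the surrogate model is refit and the series are recomputed for the following iteration.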

Now that the basic definition, motivation, and other preliminary questions and answers for using Bayesian Optimization have been presented, I want to provide some pertinent examples that help contextualize the associated ideas and highlight the usage of the library.

- Maximizing the `Sin` function between -π and π.

- Minimizing The Wall Clock Time of A Simple Command Line App

- Minimizing The Percent of Time Spent during Garbage Collection For a High Memory Load Case With Bursty Allocations By Varying The Number of Heaps

- Gaussian Processes

- More About Gaussian Processes

- Bayesian Optimization From Scratch

- Gaussian Processes for Dummies

- Heavily Inspired by this Repository

- More About the Squared Exponential Kernel

- Test out model on a simple Sin function as the objective.
- Create a simple command line app that easily helps us reach some extrema.
- Start working on the infrastructure that orchestrates runs.
  - Criteria:
    - Execution Time
    - Some Aggregate Trace Property
- Adding Discrete Bayesian Optimization.
  1. Add the case where we don't try out values we have tried before.
- Abstracting Out The Kernel Method.
- Abstracting Out The Acquisition Function.
- Visualization.
  - Save plot as png.
  - Creating Gif Out Of Iterations.
- Creating Notebooks / Experimental Console Apps:
  - Sin Function.
  - Simple Workload.
  - High Memory Load Based Bursty Allocations.
- Unit Tests.
- Clean up main logic.
  - Possibly add computation expressions.
  - Possibly add ROP-esque behavior.
- Finish writing the article.
- Early Stopping.
- Abstract behavior of the Observation Points.