Record spatial features of real-world objects, then use the results to find those objects in the user's environment and trigger AR content.

One way to build compelling AR experiences is to recognize features of the user's environment and use them to trigger the appearance of virtual content. For example, a museum app might add interactive 3D visualizations when the user points their device at a displayed sculpture or artifact.

In iOS 12, you can create such AR experiences by enabling object detection in ARKit: Your app provides reference objects, which encode three-dimensional spatial features of known real-world objects, and ARKit tells your app when and where it detects the corresponding real-world objects during an AR session.

This sample code project provides multiple ways to make use of object detection:

- Run the app to scan a real-world object and export a reference object file, which you can use in your own apps to detect that object.

- Use the ARObjectScanningConfiguration and ARReferenceObject classes as demonstrated in this sample app to record reference objects as part of your own asset production pipeline.

- Use detectionObjects in a world-tracking AR session to recognize a reference object and create AR interactions.

Requires Xcode 10.0, iOS 12.0 and an iOS device with an A9 or later processor. ARKit is not supported in iOS Simulator.
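
Because of these requirements, an app that offers scanning may want to check for support at run time before presenting the feature. The following is a minimal sketch, not part of the sample project; `canScanObjects` is a hypothetical helper name, and the check relies on the `isSupported` class property that ARKit configurations inherit from `ARConfiguration`.

```swift
import ARKit

/// Hypothetical helper: reports whether the current OS and device
/// can run an object-scanning session.
func canScanObjects() -> Bool {
    // Object scanning was introduced in iOS 12.
    guard #available(iOS 12.0, *) else { return false }
    // isSupported reports whether this device can run the configuration.
    return ARObjectScanningConfiguration.isSupported
}
```

A caller could use the result to hide or disable the scanning entry point instead of failing when the session starts.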

The programming steps to scan and define a reference object that ARKit can use for detection are simple. (See "Create a Reference Object in an AR Session" below.) However, the fidelity of the reference object you create, and thus your success at detecting that reference object in your own apps, depends on your physical interactions with the object when scanning. Build and run this app on your iOS device to walk through a series of steps for getting high-quality scan data, resulting in reference object files that you can use for detection in your own apps.

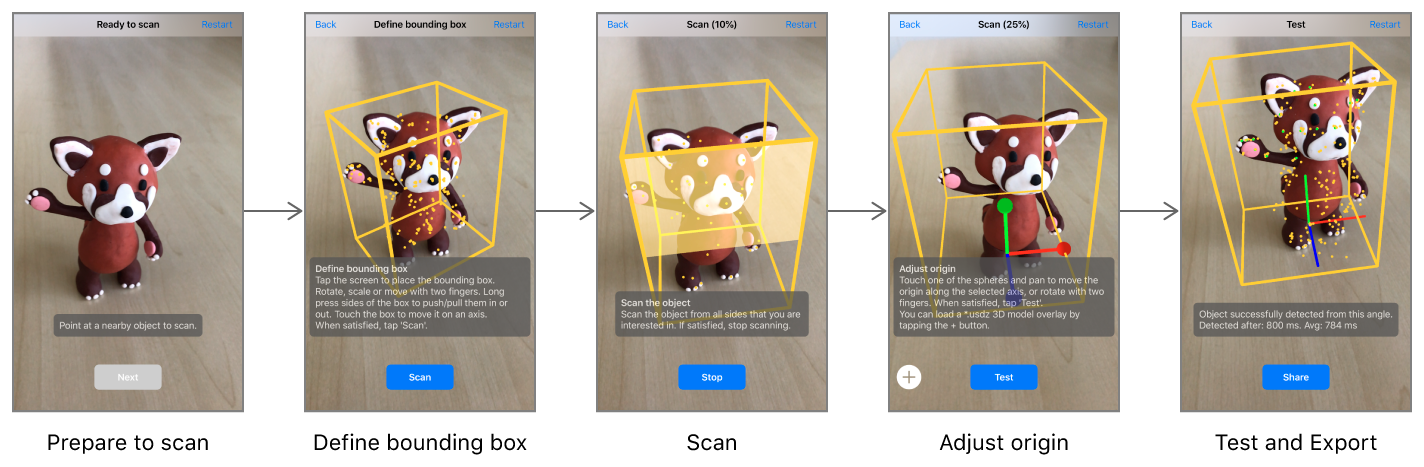

- Prepare to scan. When first run, the app displays a box that roughly estimates the size of whatever real-world objects appear centered in the camera view. Position the object you want to scan on a surface free of other objects (like an empty tabletop). Then move your device so that the object appears centered in the box, and tap the Next button.

- Define bounding box. Before scanning, you need to tell the app what region of the world contains the object you want to scan. Drag to move the box around in 3D, or press and hold on a side of the box and then drag to resize it. (Or, if you leave the box untouched, you can move around the object and the app will attempt to automatically fit a box around it.) Make sure the bounding box contains only features of the object you want to scan (not those from the environment it's in), then tap the Scan button.

- Scan the object. Move around to look at the object from different angles. The app highlights parts of the bounding box to indicate when you've scanned enough to recognize the object from the corresponding direction. Be sure to scan on all sides from which you want users of your app to be able to recognize the object. The app automatically proceeds to the next step when a scan is complete, or you can tap the Stop button to proceed manually.

- Adjust origin. The app displays x, y, and z coordinate axis lines showing the object's anchor point, or origin. Drag the circles to move the origin relative to the object. In this step you can also use the Add (+) button to load a 3D model in USDZ format. The app displays the model as it would appear in AR upon detecting the real-world object, and uses the model's size to adjust the scale of the reference object. Tap the Test button when done.

- Test and export. The app has now created an ARReferenceObject and has reconfigured its session to detect it. Look at the real-world object from different angles, in various environments and lighting conditions, to verify that ARKit reliably recognizes its position and orientation. Tap the Export button to open a share sheet for saving the finished .arobject file. For example, you can easily send it to your development Mac using AirDrop, or send it to the Files app to save it to iCloud Drive.

- Note: An ARReferenceObject contains only the spatial feature information needed for ARKit to recognize the real-world object, and is not a displayable 3D reconstruction of that object.

## Detect Reference Objects in an AR Experience

You can use an Xcode asset catalog to bundle reference objects in an app for use in detection:

- Open your project's asset catalog, then use the Add button (+) to add a new AR resource group.

- Drag .arobject files from the Finder into the newly created resource group.

- Optionally, for each reference object, use the inspector to provide a descriptive name for your own use.

- Note: Put all objects you want to look for in the same session into a resource group, and use separate resource groups to hold sets of objects for use in separate sessions. For example, a museum app might use separate sessions (and thus separate resource groups) for recognizing displays in different wings of the museum.

To enable object detection in an AR session, load the reference objects you want to detect as ARReferenceObject instances, provide those objects for the detectionObjects property of an ARWorldTrackingConfiguration, and run an ARSession with that configuration:

```swift
let configuration = ARWorldTrackingConfiguration()
guard let referenceObjects = ARReferenceObject.referenceObjects(inGroupNamed: "gallery", bundle: nil) else {
    fatalError("Missing expected asset catalog resources.")
}
configuration.detectionObjects = referenceObjects
sceneView.session.run(configuration)
```

When ARKit detects one of your reference objects, the session automatically adds a corresponding ARObjectAnchor to its list of anchors. To respond to an object being recognized, implement an appropriate ARSessionDelegate, ARSKViewDelegate, or ARSCNViewDelegate method that reports the new anchor being added to the session. For example, in a SceneKit-based app you can implement renderer(_:didAdd:for:) to add a 3D asset to the scene, automatically matching the position and orientation of the anchor:

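```swift
func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
    // Only react to anchors that ARKit added for a detected reference object.
    if anchor is ARObjectAnchor {
        // The node already follows the anchor, so the model inherits its position and orientation.
        node.addChildNode(self.model)
    }
}
```

For best results with object scanning and detection, follow these tips: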

- ARKit looks for areas of clear, stable visual detail when scanning and detecting objects. Detailed, textured objects work better for detection than plain or reflective objects.

- Object scanning and detection is optimized for objects small enough to fit on a tabletop.

- An object to be detected must have the same shape as the scanned reference object. Rigid objects work better for detection than soft bodies or items that bend, twist, fold, or otherwise change shape.

- Detection works best when the lighting conditions for the real-world object to be detected are similar to those in which the original object was scanned. Consistent indoor lighting works best.

- High-quality object scanning requires peak device performance. Reference objects scanned with a recent, high-performance iOS device work well for detection on all ARKit-supported devices.

## Create a Reference Object in an AR Session

This sample app provides one way to create reference objects. You can also scan reference objects in your own app—for example, to build asset management tools for defining AR content that goes into other apps you create.

A reference object encodes a slice of the internal spatial-mapping data that ARKit uses to track a device's position and orientation. To enable the high-quality data collection required for object scanning, run a session with ARObjectScanningConfiguration:

```swift
let configuration = ARObjectScanningConfiguration()
configuration.planeDetection = .horizontal
sceneView.session.run(configuration, options: .resetTracking)
```

During your object-scanning AR session, scan the object from various angles to make sure you collect enough spatial data to recognize it. (If you're building your own object-scanning tools, help users walk through the same steps this sample app provides.)

After scanning, call createReferenceObject(transform:center:extent:completionHandler:) to produce an ARReferenceObject from a region of the user environment mapped by the session:

```swift
// Extract the reference object based on the position & orientation of the bounding box.
sceneView.session.createReferenceObject(
    transform: boundingBox.simdWorldTransform,
    center: float3(), extent: boundingBox.extent,
    completionHandler: { object, error in
        if let referenceObject = object {
            // Adjust the object's origin with the user-provided transform.
            self.scannedReferenceObject =
                referenceObject.applyingTransform(origin.simdTransform)
            self.scannedReferenceObject!.name = self.scannedObject.scanName

            if let referenceObjectToMerge = ViewController.instance?.referenceObjectToMerge {
                ViewController.instance?.referenceObjectToMerge = nil

                // Show activity indicator during the merge.
                ViewController.instance?.showAlert(title: "", message: "Merging previous scan into this scan...", buttonTitle: nil)

                // Try to merge the object which was just scanned with the existing one.
                self.scannedReferenceObject?.mergeInBackground(with: referenceObjectToMerge, completion: { (mergedObject, error) in
                    var title: String
                    var message: String

                    if let mergedObject = mergedObject {
                        mergedObject.name = self.scannedReferenceObject?.name
                        self.scannedReferenceObject = mergedObject

                        title = "Merge successful"
                        message = "The previous scan has been merged into this scan."
                    } else {
                        print("Error: Failed to merge scans. \(error?.localizedDescription ?? "")")
                        title = "Merge failed"
                        message = """
                            Merging the previous scan into this scan failed. Please make sure that
                            there is sufficient overlap between both scans and that the lighting
                            environment hasn't changed drastically.
                            """
                    }

                    // Hide activity indicator and inform the user about the result of the merge.
                    ViewController.instance?.dismiss(animated: true) {
                        ViewController.instance?.showAlert(title: title, message: message, buttonTitle: "OK", showCancel: false)
                    }

                    creationFinished(self.scannedReferenceObject)
                })
            } else {
                creationFinished(self.scannedReferenceObject)
            }
        } else {
            print("Error: Failed to create reference object. \(error!.localizedDescription)")
            creationFinished(nil)
        }
    })
```
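
The listing above calls mergeInBackground(with:completion:), a helper defined elsewhere in the sample project and not shown here. As a rough illustration only, such a helper could be built on ARKit's `merging(_:)` method, which combines two reference objects and throws if the merge fails. The name `mergeInBackgroundSketch` and the choice of dispatch queues below are assumptions, not the sample's actual implementation.

```swift
import ARKit

@available(iOS 12.0, *)
extension ARReferenceObject {
    /// Hypothetical stand-in for the sample's helper: merges `other` into this
    /// object off the main thread, then reports the outcome on the main queue.
    func mergeInBackgroundSketch(with other: ARReferenceObject,
                                 completion: @escaping (ARReferenceObject?, Error?) -> Void) {
        DispatchQueue.global(qos: .userInitiated).async {
            do {
                // merging(_:) returns a new object combining both scans, or throws.
                let merged = try self.merging(other)
                DispatchQueue.main.async { completion(merged, nil) }
            } catch {
                DispatchQueue.main.async { completion(nil, error) }
            }
        }
    }
}
```

Reporting on the main queue keeps follow-up UI work, such as dismissing an activity indicator, safe to perform directly in the completion handler.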

When detecting a reference object, ARKit reports its position based on the origin the reference object defines. If you want to place virtual content that appears to sit on the same surface as the real-world object, make sure the reference object's origin is placed at the point where the real-world object sits. To adjust the origin after capturing an ARReferenceObject, use the applyingTransform(_:) method.
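
As an illustration of adjusting the origin in code, the sketch below moves it to the bottom center of the object's bounding volume, so that content anchored there appears to rest on the same surface. It rests on two assumptions: that the object's local y-axis points up, and that the transform passed to applyingTransform(_:) describes the new origin's pose in the object's current local space, as the sample's use of origin.simdTransform suggests. Both function names are hypothetical.

```swift
import ARKit
import simd

/// Hypothetical helper: a transform that places the origin at the bottom center
/// of a bounding volume described by `center` and `extent`.
func bottomCenterTransform(center: simd_float3, extent: simd_float3) -> simd_float4x4 {
    var transform = matrix_identity_float4x4
    // Keep x and z at the center; lower y by half the height to reach the base.
    transform.columns.3 = simd_float4(center.x, center.y - extent.y / 2, center.z, 1)
    return transform
}

/// Hypothetical helper: returns a copy of `object` with its origin at the base
/// of its bounding volume (assumes the local y-axis points up).
@available(iOS 12.0, *)
func movingOriginToBase(of object: ARReferenceObject) -> ARReferenceObject {
    let transform = bottomCenterTransform(center: object.center, extent: object.extent)
    return object.applyingTransform(transform)
}
```

Verify the result on a device before relying on it: if virtual content appears offset in the opposite direction, the transform convention differs from the one assumed here.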

After you obtain an ARReferenceObject, you can either use it immediately for detection (see "Detect Reference Objects in an AR Experience" above) or save it as an .arobject file for use in later sessions or other ARKit-based apps. To save an object to a file, use the export(to:previewImage:) method. When calling that method, you can provide a picture of the real-world object for Xcode to use as a preview image.
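
Both options can be sketched briefly. The function names, the fallback file name "scan", and the choice of the temporary directory as the destination are assumptions made for illustration; only `detectionObjects` and `export(to:previewImage:)` are ARKit API.

```swift
import ARKit
import UIKit

/// Option 1: start detecting the freshly scanned object right away.
@available(iOS 12.0, *)
func startDetecting(_ object: ARReferenceObject, in session: ARSession) {
    let configuration = ARWorldTrackingConfiguration()
    configuration.detectionObjects = [object]
    session.run(configuration)
}

/// Hypothetical helper: builds a destination URL in the temporary directory.
func exportURL(forObjectNamed name: String) -> URL {
    return FileManager.default.temporaryDirectory
        .appendingPathComponent(name)
        .appendingPathExtension("arobject")
}

/// Option 2: save the object as an .arobject file with an optional preview image.
@available(iOS 12.0, *)
func save(_ object: ARReferenceObject, previewImage: UIImage?) throws -> URL {
    let url = exportURL(forObjectNamed: object.name ?? "scan")
    try object.export(to: url, previewImage: previewImage)
    return url
}
```

The returned URL can then be handed to a share sheet or copied to a permanent location, since files in the temporary directory are not guaranteed to persist.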