The purpose of this database is to gather information about user activity on a new music streaming app. The analytics team wants to know which songs users are listening to. With this data they can build machine learning models such as recommendation models, lists of each user's most-played songs, and more.

You can run the Python scripts from the terminal. First run create_tables.py, which creates the database and the tables that will store the streaming app information. Then run etl.py, the ETL script that takes the .json files and formats their contents for loading into the PostgreSQL tables.

- Command line:

>>> python create_tables.py && python etl.py

- && means that etl.py starts only after create_tables.py has run successfully.

There are two datasets, both made up of JSON files. The song dataset is partitioned by the first three letters of each song's track ID; the 'song' and 'artist' tables are created from it. The log dataset is partitioned by year and month; the 'songplay', 'user' and 'time' tables are created from it.
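Because both datasets are spread across nested folders, the files have to be collected recursively before they can be processed. The following is a minimal sketch of that step, not the code in etl.py: the function names `get_json_files` and `read_records` are hypothetical, and it assumes each file holds one JSON object per line.

```python
import json
from pathlib import Path


def get_json_files(root):
    """Return every .json file under root, sorted, searching all subfolders."""
    return sorted(Path(root).rglob("*.json"))


def read_records(path):
    """Yield one dict per non-empty line (assumes one JSON object per line)."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                yield json.loads(line)
```

The partitioning scheme does not matter to this sketch, since `rglob` walks every subfolder regardless of how deep the files sit.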

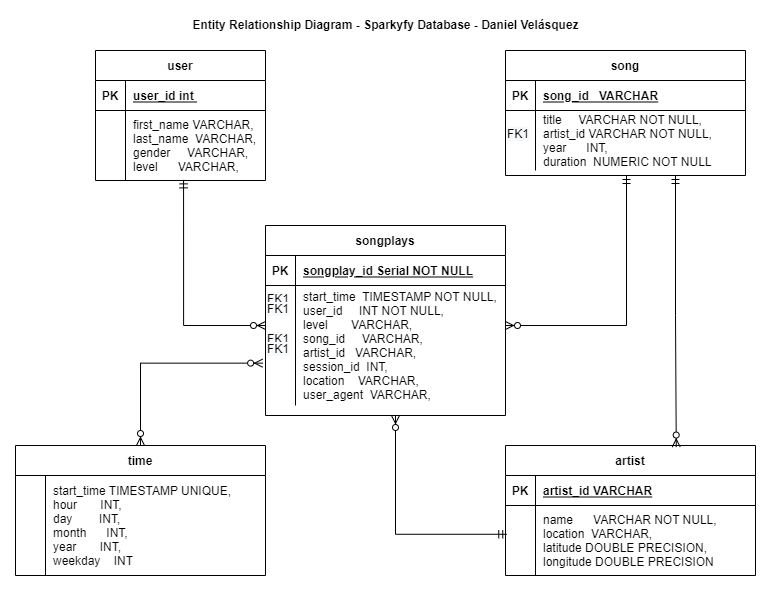

Below you can see the entity relationship diagram:

The database has a star schema: 'songplay' is the fact table, and 'user', 'song', 'artist' and 'time' are the dimension tables.

- Fact table:
  - songplay:
    - songplay_id serial PRIMARY KEY
    - start_time timestamp NOT NULL
    - user_id int NOT NULL
    - level varchar
    - song_id varchar
    - session_id int
    - location varchar
    - user_agent varchar

- Dimension tables:
  - user:
    - user_id int PRIMARY KEY
    - first_name varchar
    - last_name varchar
    - gender varchar
    - level varchar
  - song:
    - song_id varchar PRIMARY KEY
    - title varchar NOT NULL
    - artist_id varchar NOT NULL
    - year int
    - duration numeric NOT NULL
  - artist:
    - artist_id varchar PRIMARY KEY
    - name varchar NOT NULL
    - location varchar
    - latitude double precision
    - longitude double precision
  - time:
    - start_time timestamp UNIQUE
    - hour int
    - day int
    - month int
    - year int
    - weekday int
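The table definitions listed above could be written as SQL strings inside a Python module, which is one common way to organize a script like create_tables.py. This is only a sketch: the variable names are hypothetical, and only three of the five tables are shown. Note that `user` is a reserved word in PostgreSQL, so the table name has to be double-quoted (or renamed) for the statement to be accepted.

```python
# Hypothetical DDL strings; columns are copied from the schema listed above.
songplay_table_create = """
CREATE TABLE IF NOT EXISTS songplay (
    songplay_id serial PRIMARY KEY,
    start_time  timestamp NOT NULL,
    user_id     int NOT NULL,
    level       varchar,
    song_id     varchar,
    session_id  int,
    location    varchar,
    user_agent  varchar
);
"""

# "user" is quoted because it is a reserved word in PostgreSQL.
user_table_create = """
CREATE TABLE IF NOT EXISTS "user" (
    user_id    int PRIMARY KEY,
    first_name varchar,
    last_name  varchar,
    gender     varchar,
    level      varchar
);
"""

time_table_create = """
CREATE TABLE IF NOT EXISTS time (
    start_time timestamp UNIQUE,
    hour       int,
    day        int,
    month      int,
    year       int,
    weekday    int
);
"""

# A script can loop over this list and execute each statement in turn.
create_table_queries = [
    songplay_table_create,
    user_table_create,
    time_table_create,
]
```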

ETL

extract (read the .json files) -> transform (organize the data for each table) -> load (insert the cleaned and organized data into PostgreSQL)
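The three stages can be illustrated with the 'time' table. This is a sketch under stated assumptions rather than the actual etl.py: it assumes each log record carries a millisecond epoch timestamp in a field named `ts`, that timestamps are interpreted as UTC, and that `cursor` is any DB-API cursor using `%s` placeholders (as psycopg2 does). The names `to_time_row`, `run_etl` and `TIME_INSERT` are hypothetical.

```python
from datetime import datetime, timezone

# ON CONFLICT relies on the UNIQUE constraint on start_time.
TIME_INSERT = """
INSERT INTO time (start_time, hour, day, month, year, weekday)
VALUES (%s, %s, %s, %s, %s, %s)
ON CONFLICT (start_time) DO NOTHING;
"""


def to_time_row(ts_ms):
    """Transform: turn a millisecond epoch timestamp into a 'time' table row."""
    start = datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc)
    start = start.replace(tzinfo=None)  # store as a naive UTC timestamp
    return (start, start.hour, start.day, start.month, start.year,
            start.weekday())


def run_etl(records, cursor):
    """Run transform and load for records already extracted from JSON."""
    for record in records:
        row = to_time_row(record["ts"])   # transform (assumed field name)
        cursor.execute(TIME_INSERT, row)  # load
```

Passing the cursor in as a parameter keeps the transform logic testable without a running database. `weekday()` numbers Monday as 0 and Sunday as 6.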