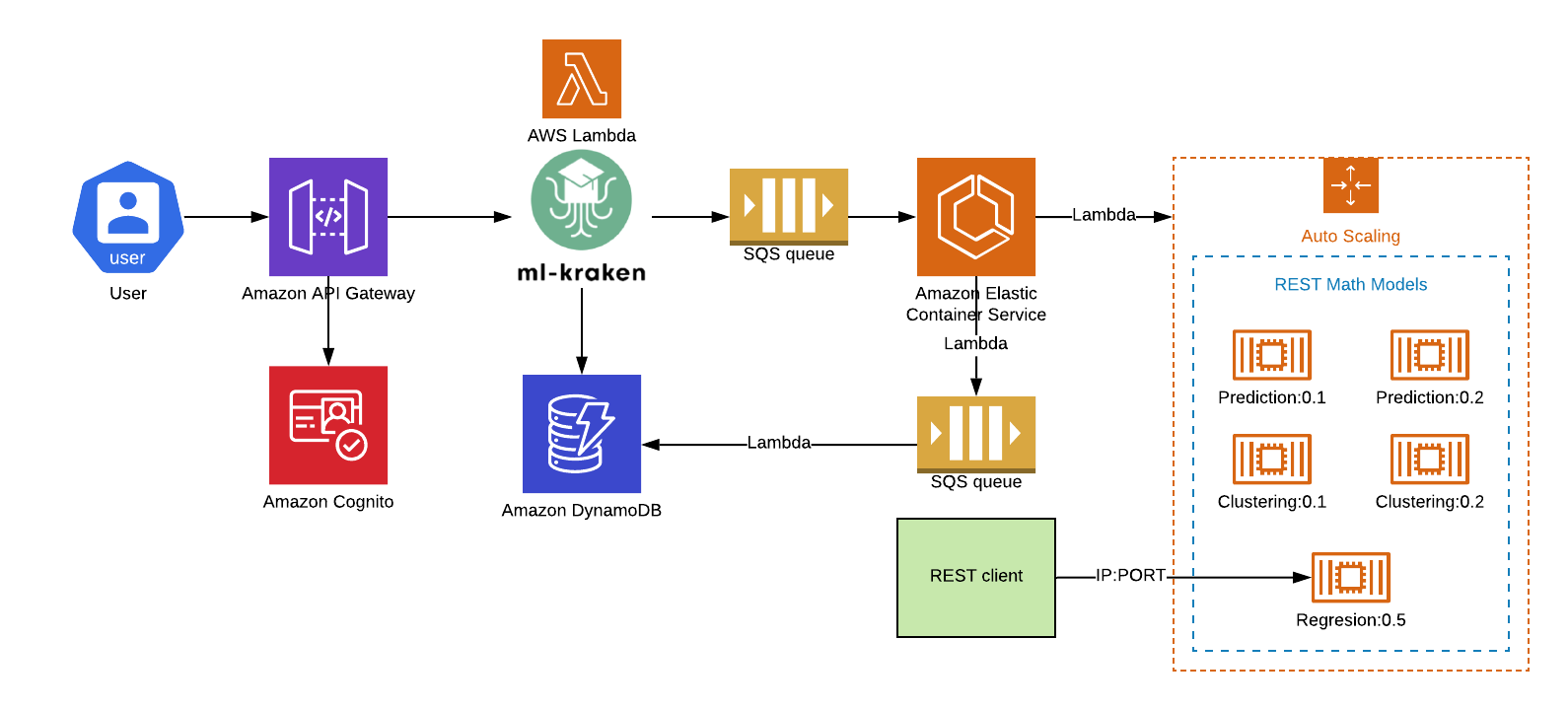

ML-Kraken is a fully cloud-based solution designed and built to improve the model management process. Each math model is treated as a separate network service that can be exposed under an IP address and a defined port. This approach is called MaaS (model as a service).

ML-Kraken combines two pieces: a backend based on a serverless solution and a frontend that is an Angular-based app. As the native execution platform, we decided to use the AWS public cloud.

- R/Spark/Python models deployment

- MaaS (model as a service) - networked models

- models exposed under REST services

- easy integration with real-time analytical systems

- environment that easily scales up

- ability to keep many model versions in one place

- JupyterHub plugin to communicate with ML-Kraken

- AWS account created

- serverless installed

- npm installed

Before running the build-and-deploy.sh script, set up access to your AWS account. The Serverless Framework looks for the .aws folder on your machine.
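As a quick pre-flight check before deploying, the presence of the .aws folder can be verified with a few lines of Python. This is a minimal sketch, not part of ML-Kraken: the function name `aws_config_present` is hypothetical, and it assumes the conventional `credentials` and `config` file names written by the AWS CLI.

```python
from pathlib import Path
from typing import Optional


def aws_config_present(home: Optional[Path] = None) -> bool:
    """Return True if <home>/.aws contains a credentials or config file.

    Assumption: the conventional AWS CLI file names are used. This only
    checks that a file exists, not that the keys inside it are valid.
    """
    base = (home or Path.home()) / ".aws"
    return any((base / name).is_file() for name in ("credentials", "config"))


if __name__ == "__main__":
    if aws_config_present():
        print("Found AWS configuration in ~/.aws")
    else:
        print("No AWS configuration found in ~/.aws; set up access first")
```

The check is deliberately shallow: it can catch a missing folder early, but an expired or wrong key will still only surface during deployment.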

git clone git@github.com:datamass-io/ml-kraken.git

cd ./ml-kraken

./build-deploy.sh

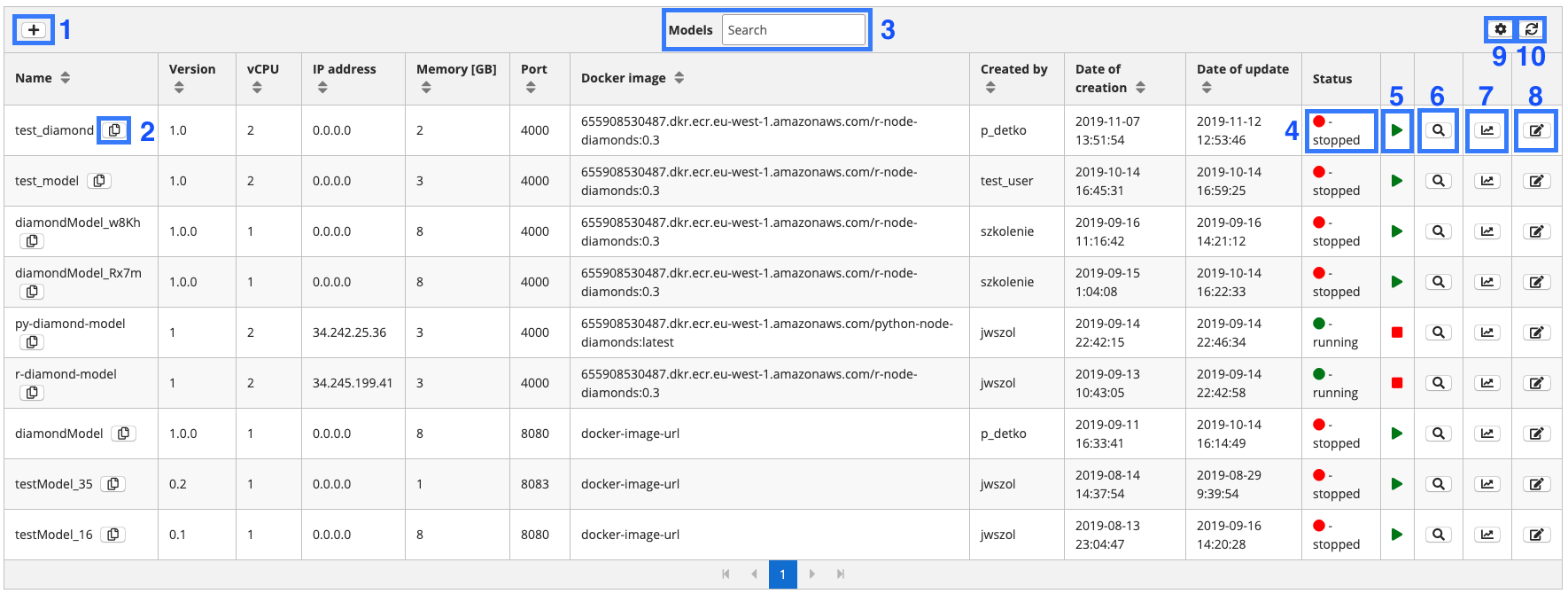

This is the main part of ML-Kraken, where all created models are stored. It lets you:

- add/start/stop models

- view the response time of individual calculations in the model

- view logs for each model

Functions of selected table fragments:

- The button opens the form for adding a new model

- Copies the model id to the clipboard. Useful for quickly pasting the id into a query you are writing

- Allows filtering of models in the table

- Model status - determines whether the container responsible for a given model is running

- Model start/stop button. Starts or stops the container associated with the given model

- Button that opens the model log view

- Opens the graph of response times for calculations in the model

- Opens the form for editing model parameters

- Selects the displayed columns in the main table

- Refreshes the model table

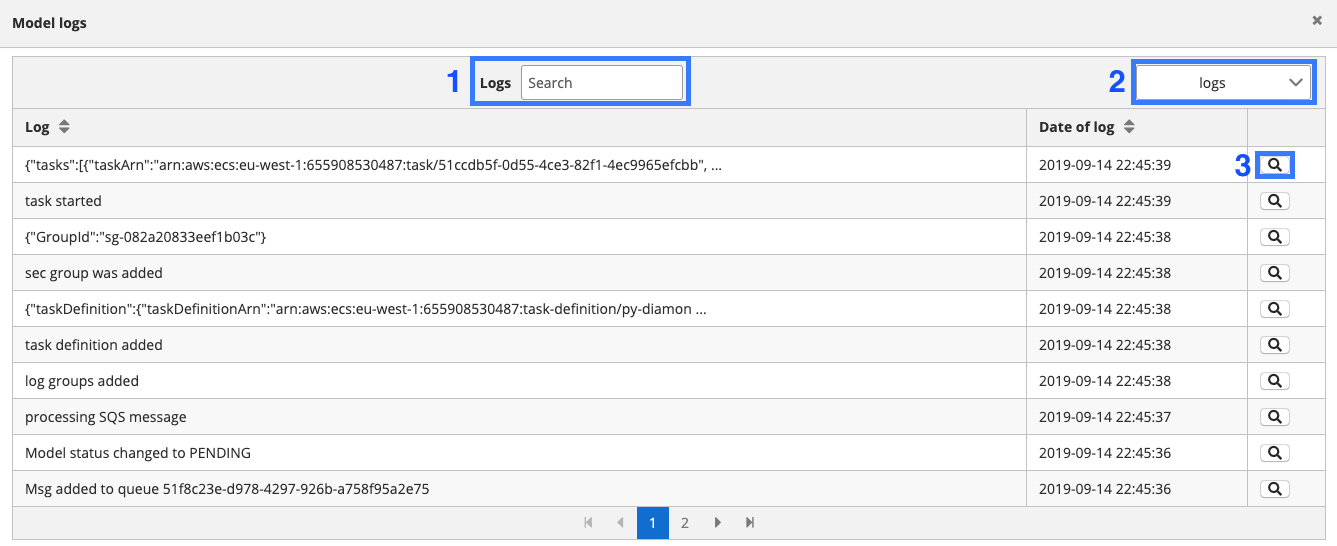

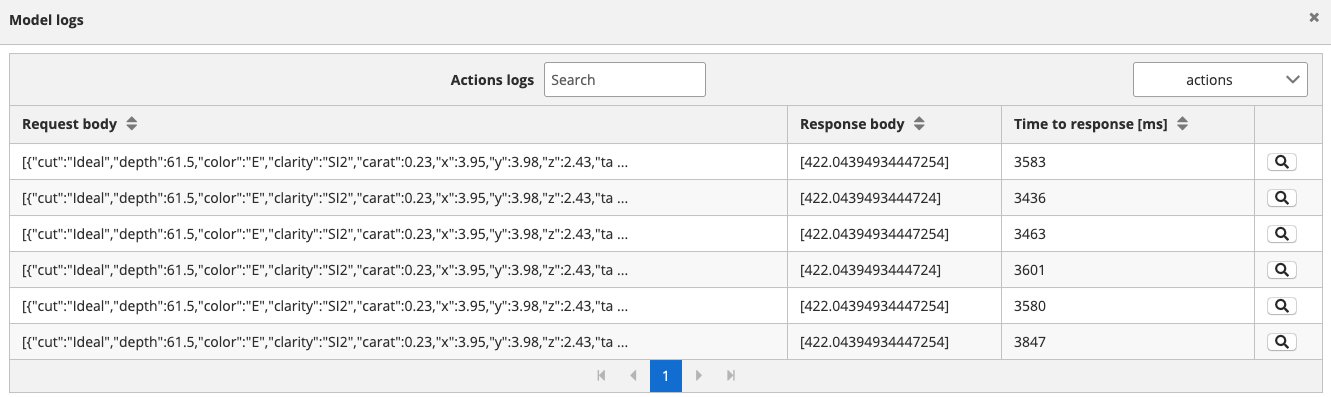

This table records the operations performed on the backend. It is also possible to view the request and response history.

The fragments marked in the picture are designed to:

- Allow filtering of logs in the table

- Switch the displayed logs between backend logs and request/response logs

- Display log details

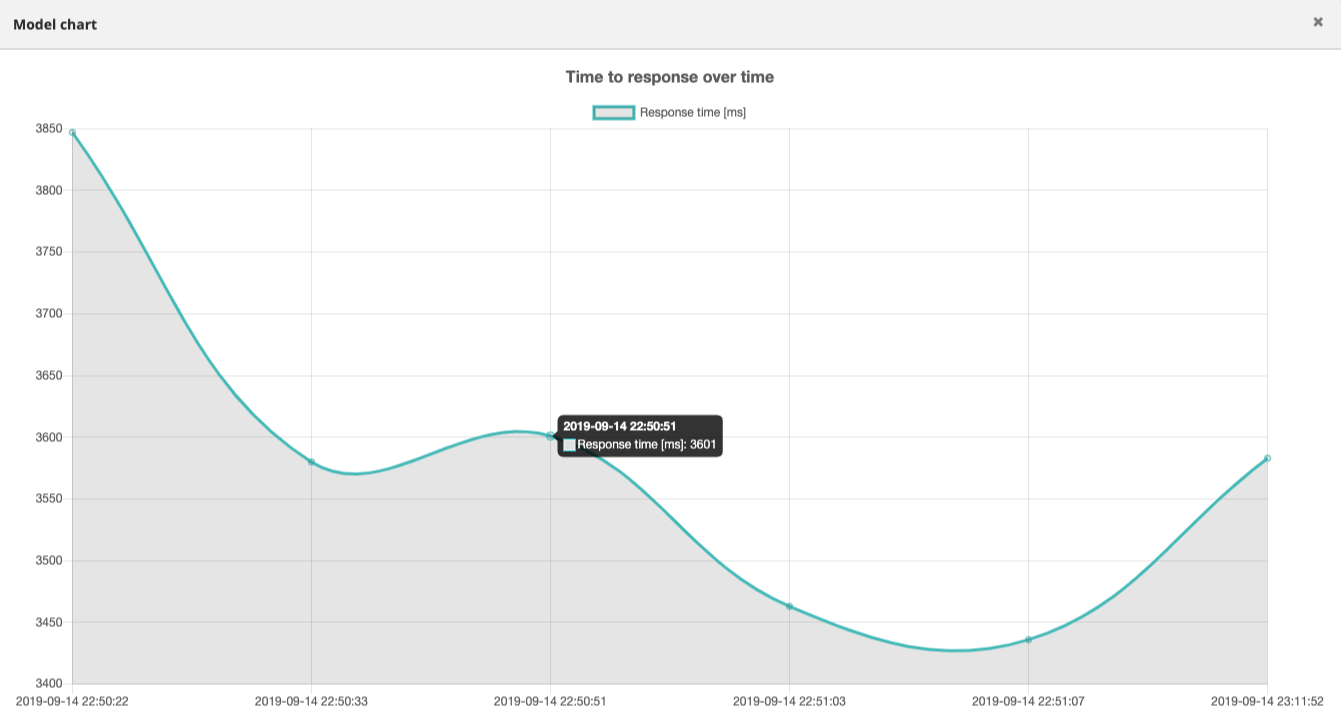

ML-Kraken can display a graph of response time versus time. From it, you can estimate the current model load and the complexity of the calculations. An example chart is presented below.

Each model created in ML-Kraken is assigned a Docker container on Amazon ECS. As a result, it is possible to run independent models that can be addressed with REST queries. After clicking the model start button, it takes a while for the container to become operational and obtain an external IP.
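Because the container needs some time before it accepts traffic, a client script may want to wait until the model's address is reachable before sending requests. The sketch below polls a TCP port until a connection succeeds. It is an illustration only: `wait_for_port` is a hypothetical helper, the host and port must be taken from your own running model, and a successful TCP connection does not guarantee that the model itself has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int,
                  timeout_s: float = 120.0, interval_s: float = 2.0) -> bool:
    """Poll host:port until a TCP connection succeeds or timeout_s elapses.

    Returns True as soon as a connection is accepted, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError if the port is closed
            # or the host is not reachable yet.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            time.sleep(interval_s)
    return False
```

For example, `wait_for_port("203.0.113.10", 8080)` would block for up to two minutes; the address and port here are placeholders, not values used by ML-Kraken.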

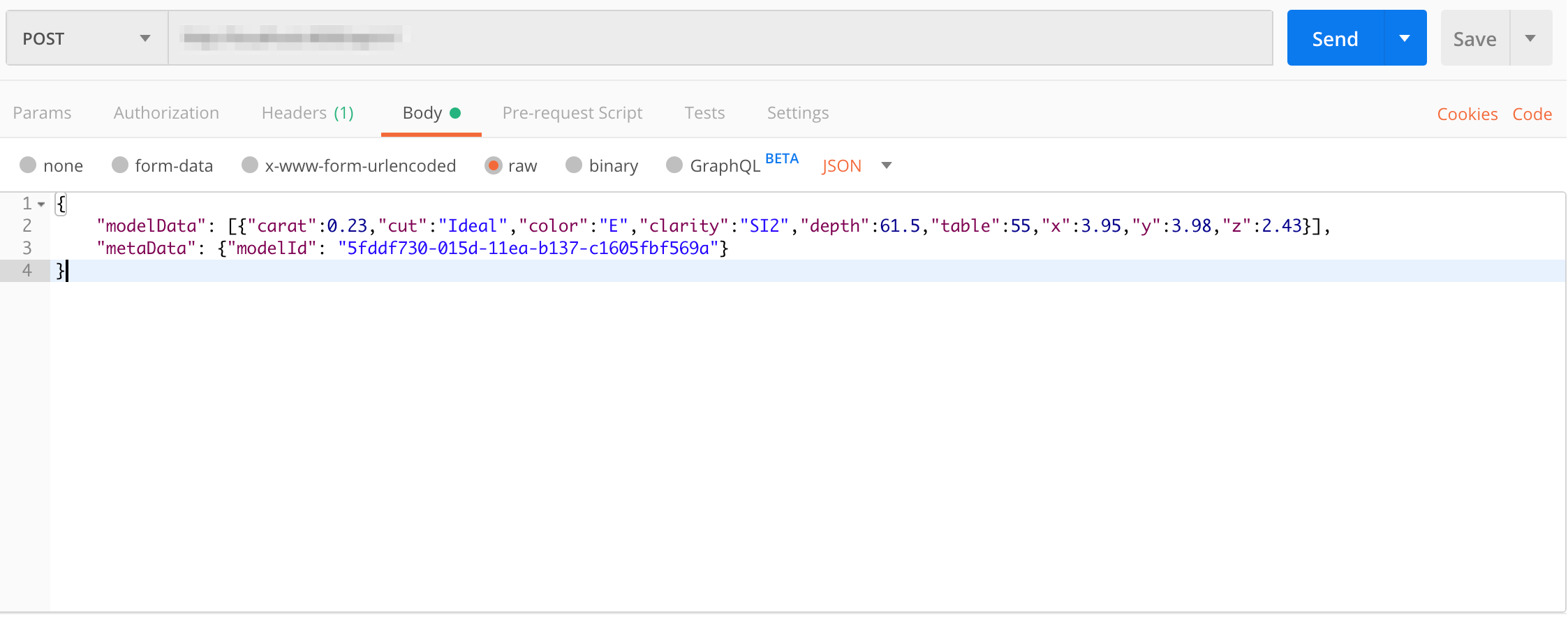

Performing calculations on a running model requires sending a POST request whose body contains the data in JSON format. Example POST request using Postman:

As shown in the example, the JSON body should contain:

- modelData - parameters that are sent to the model as input

- metaData - requires specifying the model id to send a request to the correct model
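The same request can be built outside Postman. The following is a minimal sketch using only the Python standard library. The top-level keys `modelData` and `metaData` come from the description above; the endpoint URL, the inner key name `modelId`, and the helper name `build_model_request` are assumptions made for illustration, so check them against your own deployment.

```python
import json
import urllib.request


def build_model_request(endpoint_url: str, model_id: str,
                        model_data: dict) -> urllib.request.Request:
    """Build a POST request carrying the JSON body described above."""
    payload = {
        "modelData": model_data,            # input parameters for the model
        "metaData": {"modelId": model_id},  # key name "modelId" is an assumption
    }
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage (placeholder URL and id; replace with values from your deployment):
# req = build_model_request("https://example.invalid/run", "my-model-id", {"x": 1.5})
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(resp.read().decode("utf-8"))
```

Separating request construction from sending keeps the payload easy to inspect before anything goes over the network.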

After the calculations are finished, a new point appears on the graph showing the time elapsed between sending the request and receiving the response.
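To compare the graph with what a client observes, the round-trip time can also be measured on the caller's side. This is a generic sketch with a hypothetical helper `timed_call`; it wraps any function that sends a request, and the value it reports may differ from the one ML-Kraken plots, since how the platform measures time is not specified here.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def timed_call(send: Callable[[], T]) -> Tuple[T, float]:
    """Run send() and return its result with the elapsed time in milliseconds."""
    start = time.perf_counter()
    result = send()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


if __name__ == "__main__":
    # Stand-in for a real request: sleep briefly and return a value.
    result, ms = timed_call(lambda: time.sleep(0.05) or "done")
    print(f"result={result!r} elapsed={ms:.1f} ms")
```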

We happily welcome contributions to ML-Kraken. Please contact us in case of any questions/concerns.