The EUCA framework is a prototyping tool to design explainable artificial intelligence for non-technical end-users.

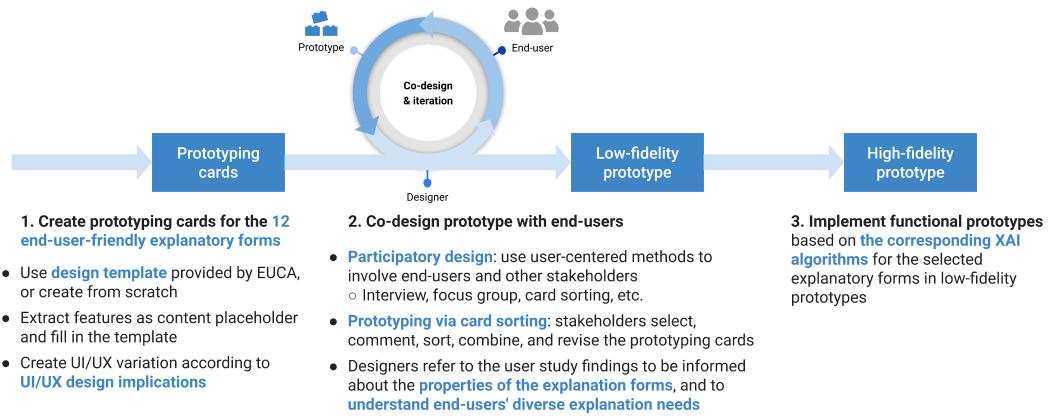

It helps you build a low-fidelity explainable AI (XAI) prototype, so that you can quickly trial and error design ideas without the effort of implementing them, and co-design and iterate on the prototype together with your end-users.

You can use the prototype in the initial phase for user-needs assessment, and as a starting point for iterating on your explainable AI system.

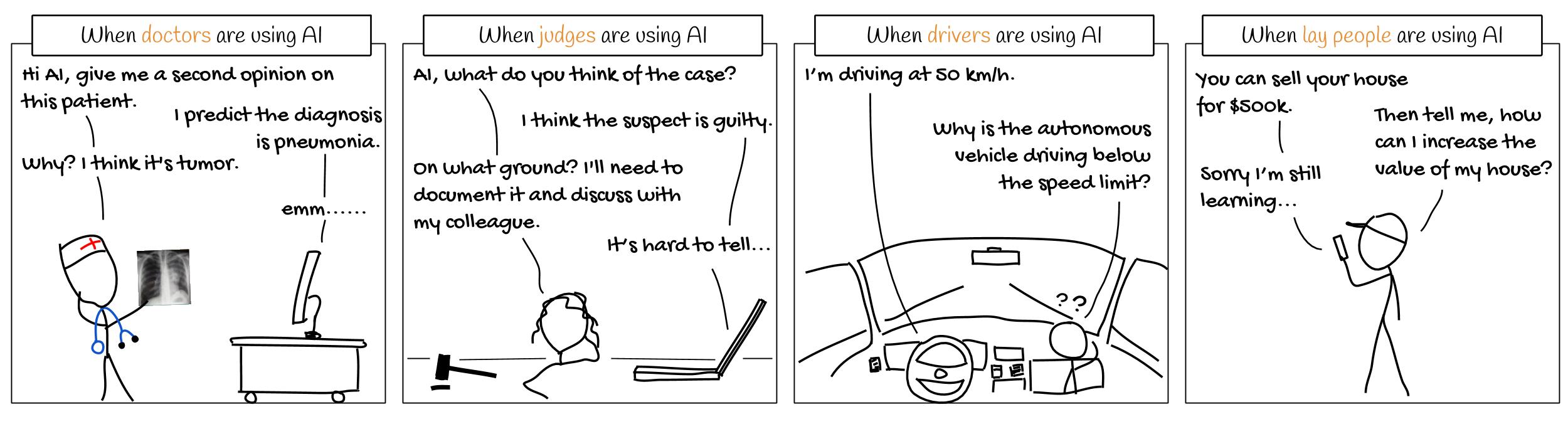

As AI becomes pervasive and assists users in making decisions on critical tasks, such as autonomous driving, clinical diagnosis, financial decisions, legal and military judgments, and other decisions that affect people's lives or finances, end-users are more likely to require AI to justify its predictions.

Making AI explainable to its end-users is challenging, because end-users are laypersons or domain experts who lack the technical knowledge of AI or computer science needed to understand how AI works.

EUCA can be used by anyone who designs or builds an explainable AI system for end-users, such as UI/UX designers, AI developers, AI researchers, and HCI researchers.

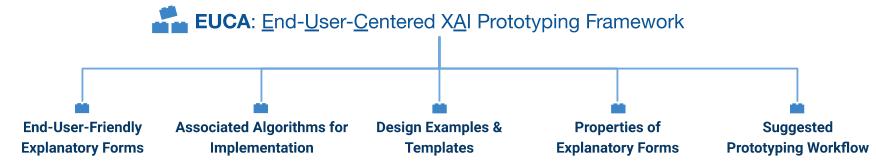

The main component of EUCA is the set of 12 end-user-friendly explanatory forms, together with:

- their associated design examples/templates,

- the corresponding algorithms for implementation, and

- their properties identified from our user study findings (strengths, weaknesses, UI/UX design implications, and applicable explanation needs).

See the explanatory forms page for the above details and design examples.

In addition, EUCA provides a suggested prototyping method and an analysis of end-users' diverse explanation needs (such as calibrating trust, detecting bias, and resolving disagreement with AI).

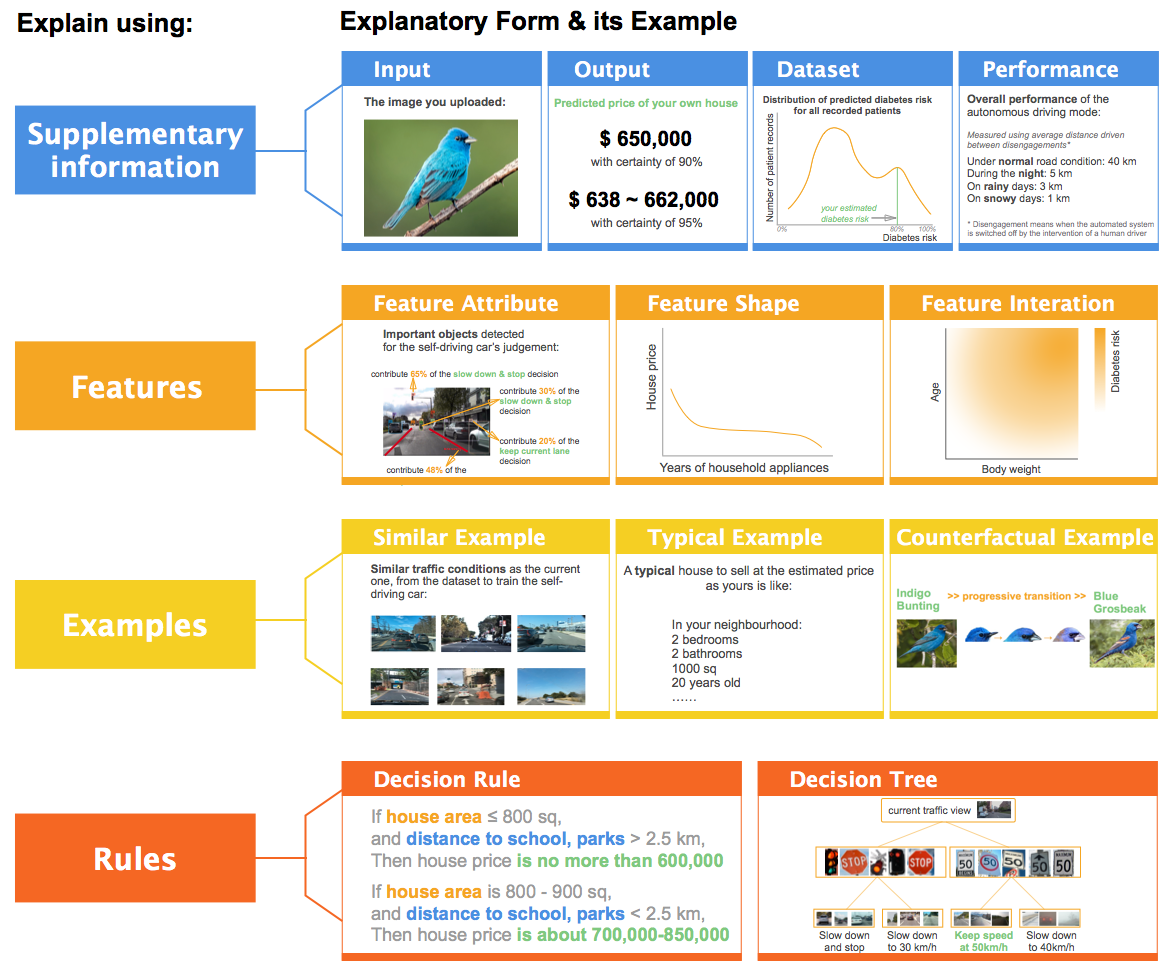

The 12 explanatory forms in the EUCA framework are:

Comparison table:

| Explanatory form | User-friendly level (3 stars = most friendly) | Local/global | Data type | Visual representations | Pros | Cons | UI/UX design implications | Applicable explanation needs | XAI algorithms |
|---|---|---|---|---|---|---|---|---|---|
| Feature Attribute | ★ ★ ★ | Local/Global | Tabular/Img/Txt | Saliency map; Bar chart | Simple and easy to understand; Can answer how and why AI reaches its decisions | Illusion of causality; Confirmation bias | Alert users to the illusion of causality; Allow setting a threshold on importance scores; Show details on demand | To verify AI's decision | LIME, SHAP, CAM, LRP, TCAV |
| Feature Shape | ★ ★ | Global | Tabular | Line plot | Graphical representation; Easy to understand the relationship between one feature and the prediction | Lacks feature interactions; Information overload if multiple feature shapes are presented | Users can inspect the plots of features they are interested in; May indicate the position of local data points (usually users' input data) | To control and improve the outcome; To reveal bias | PDP, ALE, GAM |
| Feature Interaction | ★ | Global | Tabular | 2D or 3D heatmap | Shows feature-feature interactions | Diagrams of multiple features are difficult to interpret | Users may select feature pairs of interest and check their interactions, or the XAI system can prioritize significant feature interactions | To control and improve the outcome | PDP, ALE, GA2M |
| Similar Example | ★ ★ ★ | Local | Tabular/Img/Txt | Data instances as examples | Easy to comprehend; Users intuitively verify AI's decision using analogical reasoning on similar examples | Does not highlight features within examples to enable users' side-by-side comparison | Support side-by-side, feature-based comparison among examples | To verify the decision | Nearest neighbour, CBR |
| Typical Example | ★ ★ | Local/Global | Tabular/Img/Txt | Data instances as examples | Uses prototypical instances to show the learned representation; Reveals potential problems of the model | Users may not appreciate the idea of typical cases | May show within-class variations or edge cases | To verify the decision; To reveal bias | k-Medoids, MMD-critic, Generate prototype (Simonyan, Mahendran2014), CNN prototype (Li2017, Chen2019), Influential instance |
| Counterfactual Example | ★ ★ | Local | Tabular/Img/Txt | Two counterfactual data instances with their contrastive features highlighted, or a progressive transition between the two | Helps identify the differences between the current outcome and another contrastive outcome | Hard to understand; May cause confusion | Users can define the predicted outcome to be contrasted with and receive personalized counterfactual constraints; May show only controllable features | To differentiate between similar instances; To control and improve the outcome | Inverse classification, MMD-critic, Progression, Counterfactual Visual Explanations, Pertinent Negative |
| Decision Rules/Sets | ★ ★ | Global | Tabular/Img/Txt | Rules presented as text, a table, or a matrix | Presents decision logic, "like human explanation" | Needs a careful balance between completeness and simplicity of the explanation | Trim rules and show them on demand; Highlight local clauses related to the user's instances of interest | Facilitate users' learning, report generation, and communication with other stakeholders | Bayesian Rule Lists, LORE, Anchors |
| Decision Tree | ★ | Global | Tabular/Img/Txt | Tree diagram | Shows the decision process; Explains the differences | Too much information; Complicated to understand | Trim the tree and show it on demand; Support highlighting branches for the user's instances of interest | Comparison; Counterfactual reasoning | Model distillation, Disentangle CNN |
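To make the Feature Shape form more concrete: the PDP technique listed in the table plots the model's average prediction as one feature is varied while the other features keep their observed values. Below is a minimal NumPy sketch of a one-dimensional partial dependence curve, assuming the model is exposed as a `predict` function over a 2D array. The names `partial_dependence` and `toy_model` are hypothetical and used only for illustration; they are not part of EUCA or of any library.

```python
import numpy as np
from typing import Callable


def partial_dependence(
    predict: Callable[[np.ndarray], np.ndarray],
    X: np.ndarray,
    feature: int,
    grid: np.ndarray,
) -> np.ndarray:
    """Average prediction when one feature is forced to each grid value.

    For every value v in `grid`, column `feature` of a copy of X is set to v
    for all rows, and the model's predictions on that copy are averaged.
    """
    curve = np.empty(len(grid), dtype=float)
    for i, v in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = v  # intervene on one feature; other columns stay as observed
        curve[i] = predict(X_mod).mean()
    return curve


# Hypothetical toy model standing in for a trained regressor.
def toy_model(X: np.ndarray) -> np.ndarray:
    return 2.0 * X[:, 0] + X[:, 1]


X = np.array([[1.0, 0.0], [2.0, 2.0], [3.0, 4.0]])
grid = np.array([0.0, 1.0, 2.0])
pd_curve = partial_dependence(toy_model, X, feature=0, grid=grid)
print(pd_curve)  # [2. 4. 6.]
```

The returned curve is what a Feature Shape line plot would display. For real models, established libraries such as scikit-learn provide their own partial dependence tooling, which is preferable to this hand-rolled loop.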

- Think about your input and feature data type

- Get familiar with the end-user-friendly explanatory forms

- Manually extract several interpretable features

- Fill in the prototyping card template with the extracted features

- (optional) Prepare multiple cards with varying UI/UX

- (optional) Consider applying general human-AI interaction guidelines in your design

- Understand end-users' needs for explainability

- Talk with end-users to co-design prototypes
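When filling in a card, a quick plain-text mock-up can stand in for a designed card during early discussions. The sketch below renders a Feature Attribute card as a text bar chart from hand-picked features. `render_feature_card`, as well as the feature names, scores, and prediction in the usage example, are hypothetical placeholders, not EUCA templates or real model output.

```python
def render_feature_card(
    title: str, prediction: str, importances: dict[str, float], width: int = 20
) -> str:
    """Render a text-only Feature Attribute card with one bar per feature.

    Bar length is proportional to |score|; the sign shows whether the
    feature pushed the prediction up (+) or down (-).
    """
    lines = [title, f"Prediction: {prediction}", ""]
    max_abs = max(abs(s) for s in importances.values()) or 1.0  # guard against all-zero scores
    label_w = max(len(name) for name in importances)
    # List the most influential feature first.
    for name, score in sorted(importances.items(), key=lambda kv: abs(kv[1]), reverse=True):
        bar = "#" * round(width * abs(score) / max_abs)
        sign = "+" if score >= 0 else "-"
        lines.append(f"{name.ljust(label_w)} {sign} {bar} ({score:+.2f})")
    return "\n".join(lines)


# Hypothetical, hand-picked placeholder values for a low-fidelity prototype.
card = render_feature_card(
    "House price estimate",
    "$450,000",
    {"Living area": 0.60, "Distance to downtown": -0.40, "Year built": 0.25},
)
print(card)
```

Swapping the dictionary lets you prepare several card variants (for example, fewer features or a different threshold) to compare with end-users.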

Step 3: Based on the provided XAI techniques, implement a functional prototype
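As one example of moving to a functional prototype, the Similar Example form can be backed by nearest-neighbour retrieval, which the comparison table lists as an applicable algorithm. This is a minimal sketch assuming numeric tabular features and Euclidean distance on z-scored features; `similar_examples` and the toy data are hypothetical.

```python
import numpy as np


def similar_examples(X_train: np.ndarray, x_query: np.ndarray, k: int = 3) -> np.ndarray:
    """Return indices of the k training instances closest to x_query.

    Features are z-scored with the training mean and standard deviation so
    that no single feature dominates the Euclidean distance because of its units.
    """
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    Z_train = (X_train - mean) / std
    z_query = (x_query - mean) / std
    dists = np.linalg.norm(Z_train - z_query, axis=1)
    return np.argsort(dists, kind="stable")[:k]


# Hypothetical toy data: [living area in m^2, number of bedrooms].
X_train = np.array([[120.0, 3.0], [80.0, 2.0], [125.0, 3.0], [200.0, 5.0]])
query = np.array([118.0, 3.0])
nearest = similar_examples(X_train, query, k=2)
print(nearest)  # [0 2]
```

The returned indices select which training instances to show on the Similar Example card; a real system may need a distance that better reflects domain similarity.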

We provide the following prototyping materials:

- Templates: blank cards and card grids, labeled with their explanatory form names

- Examples: we demonstrate how to create prototypes for tabular or sequential input, and for image input:

  - House: house price prediction (tabular input)
  - Health: diabetes risk prediction (sequential or tabular input)
  - Car: self-driving car (image or video input)
  - Bird: bird species recognition (image input)
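For a tabular task like the House example, a Feature Attribute card could be filled from a linear model: each feature's contribution is its weight times the feature's deviation from a background mean, and the contributions add up to the difference between this prediction and the prediction for the mean input. This is a minimal sketch assuming a linear house-price model; the weights, intercept, and data are hypothetical and not taken from EUCA's materials.

```python
import numpy as np


def linear_attributions(weights: np.ndarray, x: np.ndarray, X_background: np.ndarray) -> np.ndarray:
    """Contribution of each feature for a linear model f(x) = weights @ x + bias.

    contribution_i = weights_i * (x_i - mean_i), with the mean taken over
    X_background. The contributions sum to f(x) - f(mean), so each one shows
    how far that feature moved this prediction away from the average-input prediction.
    """
    return weights * (x - X_background.mean(axis=0))


# Hypothetical linear house-price model: price = 3000 * area_m2 + 10000 * bedrooms + 50000.
weights = np.array([3000.0, 10000.0])
bias = 50000.0


def predict(v: np.ndarray) -> float:
    return float(weights @ v + bias)


X_background = np.array([[100.0, 2.0], [140.0, 4.0]])  # toy reference houses
x = np.array([130.0, 3.0])                             # the house being explained

contrib = linear_attributions(weights, x, X_background)
print(contrib)
print(contrib.sum(), predict(x) - predict(X_background.mean(axis=0)))
```

For non-linear models, the model-agnostic techniques in the table (such as LIME or SHAP) would replace this closed-form calculation.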

The materials are available in the following versions:

- Editable Sketch file

- Editable PPT files: PowerPoint, Google Slides, and PDF preview

- PDF print version: template, blank card, and all templates with individual card examples

You can also create your own cards, or even draw your explanatory cards on paper, using our tabular and image input examples for reference.

EUCA is created by Weina Jin, Jianyu Fan, Diane Gromala, Philippe Pasquier, and Ghassan Hamarneh.

Our research paper is available on arXiv:

EUCA: the End-User-Centered Explainable AI Prototyping Framework

    @article{jin2021euca,
      title={EUCA: A Practical Prototyping Framework towards End-User-Centered Explainable Artificial Intelligence},
      author={Weina Jin and Jianyu Fan and Diane Gromala and Philippe Pasquier and Ghassan Hamarneh},
      year={2021},
      eprint={2102.02437},
      archivePrefix={arXiv},
      primaryClass={cs.HC}
    }

For comments or feedback, please email Weina: weinaj at sfu dot ca.

You can inspire other designers by sharing the sketches, designs, and prototypes you create with EUCA. We will post your design on the EUCA page.

To share your design and input, report a typo, error, or outdated information, please feel free to contact weinaj at sfu dot ca, or open a pull request on the EUCA project repo.