This hands-on workshop, aimed at developers and solution builders, introduces how to leverage foundation models (FMs) through Amazon Bedrock.

Amazon Bedrock is a fully managed service that provides access to FMs from Amazon and third-party providers through an API. With Bedrock, you can choose from a variety of models to find the one best suited to your use case.

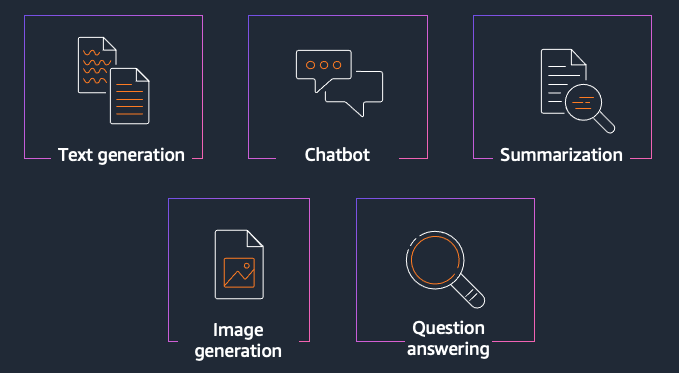

In this series of labs, you'll explore some of the most common generative AI usage patterns we see among our customers. We show techniques for generating text and images that help organizations improve productivity, using foundation models to compose emails, summarize text, answer questions, build chatbots, and create images. You will gain hands-on experience implementing these patterns through the Bedrock APIs and SDKs, as well as open-source software such as LangChain and FAISS.

Labs include:

- Text Generation [Estimated time to complete - 30 mins]

- Text Summarization [Estimated time to complete - 30 mins]

- Question Answering [Estimated time to complete - 45 mins]

- Chatbot [Estimated time to complete - 45 mins]

- Image Generation [Estimated time to complete - 30 mins]

You can also follow the step-by-step guided instructions on the workshop website.

This workshop is presented as a series of Python notebooks, which you can run from the environment of your choice:

- For a fully-managed environment with rich AI/ML features, we'd recommend using SageMaker Studio. To get started quickly, you can refer to the instructions for domain quick setup.

- For a fully-managed but more basic experience, you could instead create a SageMaker Notebook Instance.

- If you prefer to use your existing (local or other) notebook environment, make sure it has credentials for calling AWS.

The AWS identity you assume from your notebook environment (the Studio or notebook execution role in SageMaker, or a role or IAM user for self-managed notebooks) must have sufficient AWS IAM permissions to call the Amazon Bedrock service.
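If you are not sure which identity your notebook runs as, AWS STS can tell you, and the resulting ARN contains the role or user name to look for in the IAM console. A minimal sketch, assuming boto3 is installed and credentials are configured; `get_caller_identity` is the real STS operation, while `describe_caller_identity` and `iam_name_from_arn` are hypothetical helpers written for this example.

```python
from typing import Any, Dict


def describe_caller_identity(sts_client: Any) -> Dict[str, str]:
    """Return the account, ARN and user ID behind the current credentials.

    `sts_client` is expected to be boto3.client("sts"); any object with a
    compatible get_caller_identity() method (e.g. a test stub) also works.
    """
    response = sts_client.get_caller_identity()
    return {
        "account": response["Account"],
        "arn": response["Arn"],
        "user_id": response["UserId"],
    }


def iam_name_from_arn(arn: str) -> str:
    """Extract the role or user name to look up in the IAM console.

    Handles ARNs shaped like:
      arn:aws:iam::123456789012:user/alice                   -> "alice"
      arn:aws:iam::123456789012:role/service-role/MyRole     -> "MyRole"
      arn:aws:sts::123456789012:assumed-role/MyRole/session  -> "MyRole"
    """
    resource = arn.split(":", 5)[5]  # everything after the account ID
    parts = resource.split("/")
    if parts[0] == "assumed-role":
        return parts[1]  # role name; the last segment is the session name
    return parts[-1]  # user or role name, skipping any IAM path
```

In a notebook you might run `iam_name_from_arn(describe_caller_identity(boto3.client("sts"))["arn"])` and search for the printed name under Roles (or Users) in the IAM console.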

To grant Bedrock access to your identity, you can:

- Open the AWS IAM Console

- Find your role (if you use SageMaker or otherwise assume an IAM role), or else your IAM user

- Select Add Permissions > Create Inline Policy to attach new inline permissions, open the JSON editor, and paste in the following example policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "BedrockFullAccess",
      "Effect": "Allow",
      "Action": ["bedrock:*"],
      "Resource": "*"
    }
  ]
}

⚠️ Note: With Amazon SageMaker, your notebook execution role will typically be separate from the user or role that you log in to the AWS Console with. If you'd like to explore the AWS Console for Amazon Bedrock, you'll need to grant permissions to your Console user/role too.

For more information on the fine-grained action and resource permissions in Bedrock, check out the Bedrock Developer Guide.
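If you prefer to script the console steps instead of clicking through them, the same inline policy can be attached with the IAM API. A minimal sketch, assuming boto3 is installed and that the credentials you run it with are allowed to call `iam:PutRolePolicy`; `put_role_policy` is the real IAM operation, while `attach_inline_role_policy` and the policy name `BedrockFullAccess` are illustrative choices, not part of the workshop.

```python
import json
from typing import Any, Dict, Optional

# The example policy from this section, expressed as a Python dict.
BEDROCK_FULL_ACCESS_POLICY: Dict[str, Any] = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "BedrockFullAccess",
            "Effect": "Allow",
            "Action": ["bedrock:*"],
            "Resource": "*",
        }
    ],
}


def attach_inline_role_policy(
    iam_client: Any,
    role_name: str,
    policy_name: str = "BedrockFullAccess",  # illustrative name, pick your own
    policy: Optional[Dict[str, Any]] = None,
) -> str:
    """Attach `policy` (default: the example policy) inline on an IAM role.

    `iam_client` is expected to be boto3.client("iam"). For an IAM user, the
    equivalent call is put_user_policy(UserName=..., PolicyName=..., PolicyDocument=...).
    Returns the JSON document that was sent.
    """
    document = json.dumps(policy if policy is not None else BEDROCK_FULL_ACCESS_POLICY)
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=document,
    )
    return document
```

Passing a narrower `policy` dict (for example, one that only allows the specific Bedrock actions you need) is the place to apply the fine-grained permissions described in the Bedrock Developer Guide.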

ℹ️ Note: In SageMaker Studio, you can open a "System Terminal" to run these commands by clicking File > New > Terminal.

Once your notebook environment is set up, clone this workshop repository into it.

git clone https://github.com/aws-samples/amazon-bedrock-workshop.git

cd amazon-bedrock-workshop

Because the service is in preview, the Amazon Bedrock SDK is not yet included in standard releases of the AWS SDK for Python (boto3). Run the following script to download and extract custom SDK wheels for testing Bedrock:

bash ./download-dependencies.sh

This script will create a dependencies folder and download the relevant SDKs, but will not pip install them just yet.

You're now ready to explore the lab notebooks! Start with 00_Intro/bedrock_boto3_setup.ipynb for details on how to install the Bedrock SDKs, create a client, and start calling the APIs from Python.
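Once an SDK with Bedrock support is installed, a quick way to confirm that your client and permissions work is to list the foundation models visible to your account. A minimal sketch, assuming a boto3 release that includes the `bedrock` control-plane client and its `list_foundation_models` operation (generally available releases do; the preview wheels downloaded above may expose this differently); `list_model_ids` is a hypothetical helper and the Region below is an assumption.

```python
from typing import Any, Dict, List, Optional


def list_model_ids(bedrock_client: Any, output_modality: Optional[str] = None) -> List[str]:
    """Return the IDs of the foundation models visible to this account and Region.

    `bedrock_client` is expected to be boto3.client("bedrock"), the control-plane
    client; model invocation goes through a separate runtime client.
    `output_modality` may be "TEXT", "IMAGE" or "EMBEDDING" to filter the list.
    """
    kwargs: Dict[str, str] = {}
    if output_modality is not None:
        kwargs["byOutputModality"] = output_modality
    response = bedrock_client.list_foundation_models(**kwargs)
    return [summary["modelId"] for summary in response["modelSummaries"]]
```

For example, `list_model_ids(boto3.client("bedrock", region_name="us-east-1"), "TEXT")` would return the text model IDs you can pass as `modelId` in later calls; an `AccessDeniedException` at this point usually means the IAM permissions above are not yet in place.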

This repository contains notebook examples for the Bedrock Architecture Patterns workshop. The notebooks are organised by module as follows:

- Simple Bedrock Usage: This notebook shows how to set up the boto3 client and some basic usage of Bedrock.

- Simple use case with boto3: In this notebook, you generate text using Amazon Bedrock. We demonstrate consuming the Amazon Titan model directly with boto3.

- Simple use case with LangChain: We then perform the same task using the popular framework LangChain.

- Generation with additional context: We then take this further by enhancing the prompt with additional context in order to improve the response.

- Small text summarization: In this notebook, you use Bedrock to perform the simple task of summarizing a small piece of text.

- Long text summarization: The above approach may not work once the content to be summarized grows larger and exceeds the model's maximum token limit. In this notebook, we show an approach of breaking the file up into smaller chunks, summarizing each chunk, and then summarizing the summaries.

- Simple questions with context: This notebook shows a simple example answering a question with given context by calling the model directly.

- Answering questions with Retrieval Augmented Generation: We can improve the above process by implementing an architecture called Retrieval Augmented Generation (RAG). RAG retrieves data from outside the language model (non-parametric) and augments the prompt by adding the relevant retrieved data as context.

- Chatbot using Claude: This notebook shows a chatbot using Claude.

- Chatbot using Titan: This notebook shows a chatbot using Titan.

- Image Generation with Stable Diffusion: This notebook demonstrates image generation using the Stable Diffusion model.
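The boto3 text generation notebook calls the Titan model directly. The sketch below shows the general shape of such a call, assuming a boto3 release with the `bedrock-runtime` client (the preview SDK used in this workshop may name the client differently) and the model ID `amazon.titan-text-express-v1`, which is an assumption to check against the models listed in your Region. The request and response fields follow the Amazon Titan Text format; `build_titan_request` and `generate_text` are hypothetical helper names.

```python
import json
from typing import Any

# Assumed model ID; check list_foundation_models() for the IDs in your Region.
TITAN_TEXT_MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_request(prompt: str, max_tokens: int = 512,
                        temperature: float = 0.5, top_p: float = 0.9) -> str:
    """Serialize a request body in the Amazon Titan Text format."""
    body = {
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,  # upper bound on generated tokens
            "temperature": temperature,   # higher values give more varied text
            "topP": top_p,                # nucleus sampling cutoff
            "stopSequences": [],
        },
    }
    return json.dumps(body)


def generate_text(runtime_client: Any, prompt: str,
                  model_id: str = TITAN_TEXT_MODEL_ID) -> str:
    """Call invoke_model and return the first generated completion.

    `runtime_client` is expected to be boto3.client("bedrock-runtime").
    """
    response = runtime_client.invoke_model(
        modelId=model_id,
        body=build_titan_request(prompt),
        accept="application/json",
        contentType="application/json",
    )
    # The response body is a stream; read it and parse the JSON payload.
    payload = json.loads(response["body"].read())
    return payload["results"][0]["outputText"]
```

Usage might look like `generate_text(boto3.client("bedrock-runtime", region_name="us-east-1"), "Write a short email thanking a customer for their feedback.")`, with the Region again being an assumption.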
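The long text summarization approach (chunk the input, summarize each chunk, then summarize the summaries) can be sketched in plain Python. This is a minimal illustration, not the notebook's code: `chunk_text` and `summarize_long_text` are hypothetical names, character counts stand in for tokens, and `summarize` is any function you supply that sends a summarization prompt to a model, such as a wrapper around `invoke_model`.

```python
from typing import Callable, List


def chunk_text(text: str, max_chars: int = 4000, overlap: int = 200) -> List[str]:
    """Split text into overlapping windows of at most max_chars characters.

    Characters are a rough proxy for tokens; a real pipeline would size
    chunks with the model's token limit in mind.
    """
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be in [0, max_chars)")
    chunks: List[str] = []
    start = 0
    while start < len(text):
        end = start + max_chars
        chunks.append(text[start:end])
        if end >= len(text):
            break
        start = end - overlap  # overlap keeps some context across chunk boundaries
    return chunks


def summarize_long_text(
    text: str,
    summarize: Callable[[str], str],
    max_chars: int = 4000,
    overlap: int = 200,
) -> str:
    """Summarize each chunk, then summarize the concatenated chunk summaries."""
    chunks = chunk_text(text, max_chars=max_chars, overlap=overlap)
    if len(chunks) <= 1:
        return summarize(text)  # short enough for a single call
    partial_summaries = [summarize(chunk) for chunk in chunks]
    return summarize("\n".join(partial_summaries))
```

This does a single reduce step, matching the description above; if the joined partial summaries could themselves exceed the model's limit, one option is to apply the same function to them again.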
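A chatbot differs from single-shot generation mainly in that it resends recent conversation history with every request. The sketch below keeps a rolling window of turns and renders it in the alternating "Human:" / "Assistant:" format of Anthropic's legacy Claude Text Completions API (body fields `prompt` and `max_tokens_to_sample`). That format is an assumption about which Claude version you call: newer Claude models use a messages-based request instead, and the Titan chatbot uses its own prompt conventions. `ConversationMemory` is a hypothetical class, not a LangChain API.

```python
import json
from typing import List, Tuple


class ConversationMemory:
    """Rolling chat history rendered as a Claude text-completion prompt.

    Assumes the legacy Text Completions turn format; newer Claude models on
    Bedrock use a messages-based request format instead.
    """

    def __init__(self, max_turns: int = 5) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.max_turns = max_turns
        self.turns: List[Tuple[str, str]] = []  # (user message, assistant reply)

    def add_turn(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))
        # Keep only the most recent exchanges so the prompt fits the context window.
        self.turns = self.turns[-self.max_turns:]

    def render_prompt(self, new_message: str) -> str:
        history = "".join(
            f"\n\nHuman: {user}\n\nAssistant: {reply}" for user, reply in self.turns
        )
        return f"{history}\n\nHuman: {new_message}\n\nAssistant:"

    def request_body(self, new_message: str, max_tokens: int = 300) -> str:
        """JSON body to pass to invoke_model for a Claude text-completion model."""
        return json.dumps({
            "prompt": self.render_prompt(new_message),
            "max_tokens_to_sample": max_tokens,
            "stop_sequences": ["\n\nHuman:"],  # stop before the model invents a user turn
        })
```

After each `invoke_model` call, you would read the `completion` field from the response and record the exchange with `add_turn`, so the next request carries the updated history.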
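Image generation uses the same `invoke_model` call as text generation, but the response carries base64-encoded image data. A minimal sketch, assuming a boto3 `bedrock-runtime` client and the model ID `stability.stable-diffusion-xl-v1` (an assumption; the ID available to your account and SDK version may differ). The request fields follow the Stability AI text-to-image format on Bedrock; `build_sdxl_request` and `generate_image_bytes` are hypothetical helpers.

```python
import base64
import json
from typing import Any

# Assumed model ID; the ID available in your Region and SDK version may differ.
SDXL_MODEL_ID = "stability.stable-diffusion-xl-v1"


def build_sdxl_request(prompt: str, cfg_scale: float = 10.0,
                       seed: int = 0, steps: int = 50) -> str:
    """Request body in the Stability AI text-to-image format."""
    return json.dumps({
        "text_prompts": [{"text": prompt}],
        "cfg_scale": cfg_scale,  # how strongly the image should follow the prompt
        "seed": seed,            # fixed seed for reproducible output
        "steps": steps,          # number of diffusion steps
    })


def generate_image_bytes(runtime_client: Any, prompt: str,
                         model_id: str = SDXL_MODEL_ID) -> bytes:
    """Generate one image and return its decoded bytes.

    `runtime_client` is expected to be boto3.client("bedrock-runtime").
    """
    response = runtime_client.invoke_model(
        modelId=model_id,
        body=build_sdxl_request(prompt),
        accept="application/json",
        contentType="application/json",
    )
    payload = json.loads(response["body"].read())
    # Each artifact holds one generated image as a base64 string.
    return base64.b64decode(payload["artifacts"][0]["base64"])
```

Returning bytes rather than writing a file keeps the helper easy to test; in a notebook you could save the result with `open("image.png", "wb").write(...)` or display it with an imaging library.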