scraper

twint user attributes | twint tweet attributes | twint configuration options | minamotorin's fork

Table columns (optional ones are bracketed):

- ID
  - 09-[row number]

- Timestamp of inclusion

- Tweet URL

- Group
  - 09

- Collector
  - Daryll | Westin | Zandrew

- Category
  - RBRD

- Topic
  - Leni's incompetence as VP

- Keywords

- Account handle

- Account name

- Account bio

- Account type

- Joined

- Following

- Followers

- Location

- Tweet

- [Translated tweet]

- Tweet type

- Date posted

- [Screenshot]

- Content type

- Likes

- Replies

- Retweets

- [Quote tweets]

- [Views]

- [Rating]

- Reasoning

- [Remarks]

- Reviewer
  - leave blank

- Review
  - leave blank
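The column list above can be mirrored in code, for example to build an empty row with the fixed values already filled in. This is a minimal sketch: `COLUMNS`, `OPTIONAL`, `make_id`, and `template_row` are hypothetical names (not part of `scrape.py` or `process.py`), and it assumes IDs are `09`, a hyphen, and an unpadded row number.

```python
# Hypothetical mirror of the spreadsheet columns listed above, in sheet order.
COLUMNS = [
    "ID", "Timestamp of inclusion", "Tweet URL", "Group", "Collector",
    "Category", "Topic", "Keywords", "Account handle", "Account name",
    "Account bio", "Account type", "Joined", "Following", "Followers",
    "Location", "Tweet", "Translated tweet", "Tweet type", "Date posted",
    "Screenshot", "Content type", "Likes", "Replies", "Retweets",
    "Quote tweets", "Views", "Rating", "Reasoning", "Remarks",
    "Reviewer", "Review",
]

# Columns shown in brackets (optional) in the list above.
OPTIONAL = {"Translated tweet", "Screenshot", "Quote tweets", "Views", "Rating", "Remarks"}

# Fixed values taken from the list above.
GROUP = "09"
CATEGORY = "RBRD"
TOPIC = "Leni's incompetence as VP"
COLLECTORS = ("Daryll", "Westin", "Zandrew")


def make_id(row_number):
    """Build a row ID such as '09-61' (assumes no zero-padding)."""
    if row_number < 1:
        raise ValueError("row numbers start at 1")
    return f"{GROUP}-{row_number}"


def template_row(row_number, collector):
    """Return a dict with every column empty except the fixed values."""
    if collector not in COLLECTORS:
        raise ValueError(f"collector must be one of {COLLECTORS}")
    row = dict.fromkeys(COLUMNS, "")  # Reviewer and Review stay blank
    row.update({
        "ID": make_id(row_number),
        "Group": GROUP,
        "Collector": collector,
        "Category": CATEGORY,
        "Topic": TOPIC,
    })
    return row
```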

Instructions

Group 9 fodder spreadsheet | Group 9 final spreadsheet

Workflow:

- Scrape tweets using `scrape.py`

- Generate a processed CSV file using `process.py`

- Append the contents of that CSV file to the fodder spreadsheet

- Select rows in the fodder spreadsheet to copy-paste to the final spreadsheet
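As a rough sketch of how the first two workflow steps chain together, the hypothetical helpers below build and run both commands with Python's `subprocess` module. Because the arguments are passed as a list, no shell is involved and the search query needs no backslash escaping. This assumes both scripts sit in the current folder and accept the flags described in the steps below; the spreadsheet steps stay manual.

```python
import subprocess
import sys


def build_commands(search, user_code, first_index, username=None, limit=100):
    """Return the scrape.py and process.py command lines as argument lists.

    Hypothetical helper: flag names follow this README; nothing here is
    part of scrape.py or process.py themselves.
    """
    if limit <= 0 or limit % 20 != 0:
        raise ValueError("limit must be a positive multiple of 20")
    if user_code not in ("d", "w", "z"):
        raise ValueError("user_code must be d, w, or z")
    scrape = [sys.executable, "scrape.py", "-s", search, "-l", str(limit)]
    if username:
        scrape += ["-u", username]
    process = [sys.executable, "process.py", "-u", user_code,
               "-f", str(first_index), "-s", search]
    return scrape, process


def run_pipeline(search, user_code, first_index, **kwargs):
    """Run scrape.py, then process.py; raise if either exits with an error."""
    for cmd in build_commands(search, user_code, first_index, **kwargs):
        subprocess.run(cmd, check=True)
```

For example, `run_pipeline("leni walang ambag", "d", 61, limit=60)` would run the same two commands as the examples in the steps below, using whichever Python interpreter runs the helper.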

Steps:

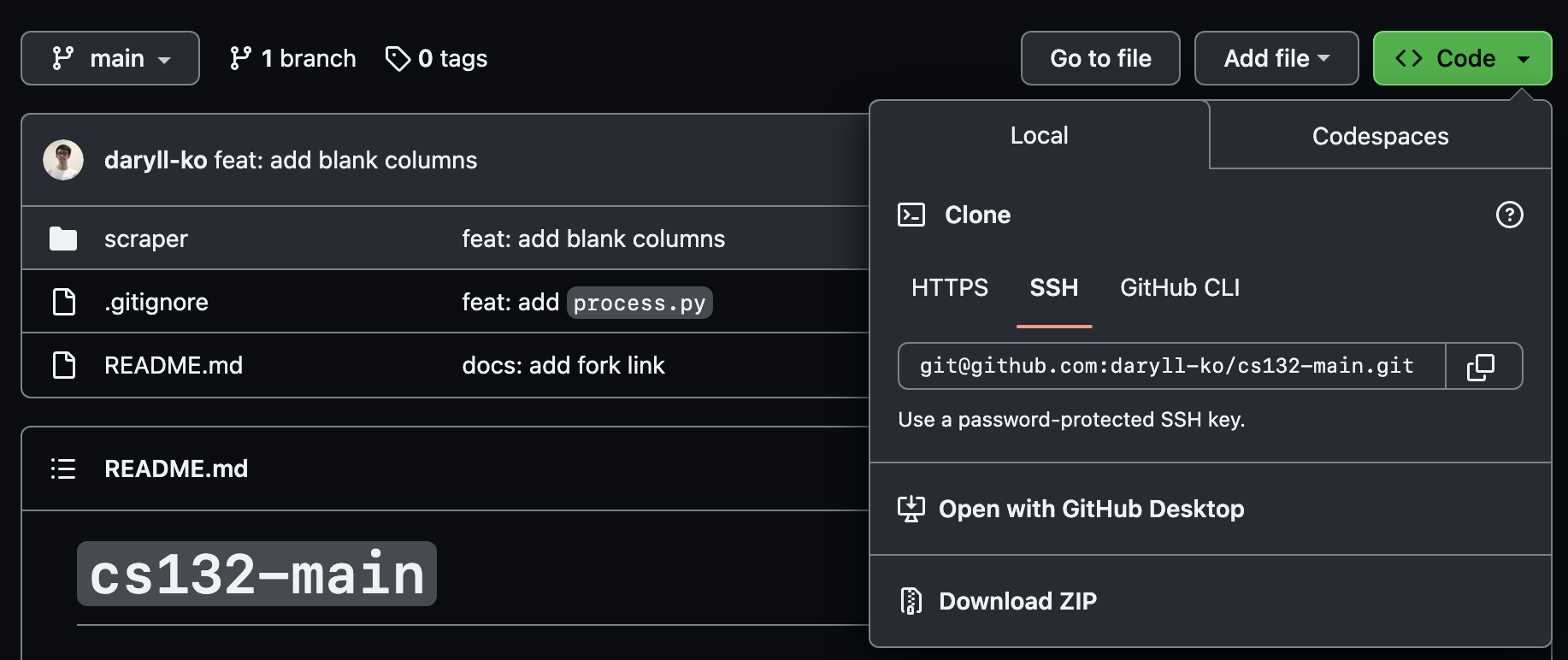

- Download the repository as a ZIP file:

- Extract the ZIP file, and in your terminal, `cd` (change directory) to the `scraper` folder:

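  a) Install twint:

  First, clone minamotorin's fork:

  ```
  git clone git@github.com:minamotorin/twint.git
  ```

  If you haven't set up an SSH key with GitHub, the HTTPS URL works too: `git clone https://github.com/minamotorin/twint.git`.

  Next, `cd` into the twint repository:

  ```
  cd twint
  ```

  Install twint like so:

  ```
  pip3 install . -r requirements.txt
  ```

  Then go back to the `scraper` folder with `cd ..`, since the remaining commands are run from there.

  b) Install snscrape and pandas:

  ```
  pip3 install snscrape pandas
  ```

- Scrape tweets using the `scrape.py` program: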

  ```
  python3 scrape.py [-u <username>] -s <search query> [-l <limit>]
  ```

  There are three command-line arguments you can pass in here:

  - `-u` or `--user`: optional; the username of the Twitter user whose tweets you want to scrape (e.g., `Official_UPD`)

  - `-s` or `--search`: required; the search terms or keywords used to find tweets (e.g., `leni`). Important: if your search terms contain spaces, add a backslash before each space:

    ```
    python3 scrape.py -s leni\ walang\ ginawa
    ```

  - `-l` or `--limit`: optional; the maximum number of tweets to scrape; the default is 100. Important: if given, the limit must be a multiple of 20:

    ```
    python3 scrape.py -s leni\ walang\ ambag -l 60
    ```

  Once you execute this step, an `output.csv` file should appear in the folder you are in. There's no need to touch this file; it will be processed by `process.py`.

  This step is relatively short (about 1 minute for 200 tweets).
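The flags above behave like a typical `argparse` setup. The following is only a sketch of how such a parser could enforce the documented rules (required `-s`, default limit of 100, multiples of 20); it is not the actual code of `scrape.py`, which may parse its arguments differently, and `multiple_of_20` is a hypothetical helper. It also uses `shlex.split`, which follows POSIX shell rules, to show why the backslashes matter: each escaped space stays inside one argument, whereas without them the shell splits the query into separate words and a parser like this one rejects the extras as unrecognized arguments.

```python
import argparse
import shlex


def multiple_of_20(value):
    """argparse type: accept positive integers that are multiples of 20."""
    n = int(value)
    if n <= 0 or n % 20 != 0:
        raise argparse.ArgumentTypeError(f"{value} is not a positive multiple of 20")
    return n


def make_parser():
    parser = argparse.ArgumentParser(prog="scrape.py")
    parser.add_argument("-u", "--user", help="Twitter username to scrape from (optional)")
    parser.add_argument("-s", "--search", required=True, help="search terms or keywords")
    parser.add_argument("-l", "--limit", type=multiple_of_20, default=100,
                        help="maximum number of tweets (multiple of 20)")
    return parser


# Split the command line the way a POSIX shell would: each backslash-escaped
# space becomes a literal space, so the query arrives as a single argument.
argv = shlex.split(r"-s leni\ walang\ ambag -l 60")
args = make_parser().parse_args(argv)
print(args.search, args.limit)  # prints: leni walang ambag 60
```

Quoting the whole query instead (`-s "leni walang ambag"`) gives the same single argument in a POSIX shell.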

- Process tweets using the `process.py` program:

  ```
  python3 process.py -u <program user> -f <first index> -s <search query>
  ```

  There are three command-line arguments you can pass in here:

  - `-u` or `--user`: required; one of three characters indicating who is running `process.py` right now: `d` (for Daryll), `w` (for Westin), or `z` (for Zandrew)

  - `-f` or `--first`: required; the row number for the first new row. To find it, look at the last row of the fodder spreadsheet: if its ID is `09-X`, pass in `X + 1`

  - `-s` or `--search`: required; this must be the same value you passed to the `-s` flag of `scrape.py`

  Example:

  ```
  python3 process.py -u d -f 61 -s leni\ walang\ ambag
  ```

  Once you execute this step, a `processed.csv` file should appear in the folder you are in. This is the file we'll import into the fodder spreadsheet!

  This step is the bottleneck of our workflow (roughly 8 minutes for 200 tweets).
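The `-f` value is the only input that needs a little arithmetic. Here is a sketch of how it and the other `process.py` flags could be checked, assuming IDs look exactly like `09-X` with an integer `X`. `next_first_index`, `COLLECTORS`, and the parser below are hypothetical illustrations, not the code inside `process.py`.

```python
import argparse
import re

# Single-letter codes accepted by -u, per this README.
COLLECTORS = {"d": "Daryll", "w": "Westin", "z": "Zandrew"}


def next_first_index(last_id):
    """Given the last ID in the fodder sheet (e.g. '09-60'), return X + 1."""
    match = re.fullmatch(r"09-(\d+)", last_id.strip())
    if match is None:
        raise ValueError(f"unexpected ID format: {last_id!r}")
    return int(match.group(1)) + 1


def make_parser():
    parser = argparse.ArgumentParser(prog="process.py")
    parser.add_argument("-u", "--user", required=True, choices=sorted(COLLECTORS),
                        help="who is running the program: d, w, or z")
    parser.add_argument("-f", "--first", required=True, type=int,
                        help="row number for the first new row (last X + 1)")
    parser.add_argument("-s", "--search", required=True,
                        help="same search query that was passed to scrape.py")
    return parser


# If the fodder sheet currently ends at 09-60, the next run starts at 61.
args = make_parser().parse_args(
    ["-u", "d", "-f", str(next_first_index("09-60")), "-s", "leni walang ambag"]
)
print(COLLECTORS[args.user], args.first)  # prints: Daryll 61
```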

- Import the generated `processed.csv` file into the fodder spreadsheet. Make sure to append to the current sheet, and turn off the setting that converts text to numbers, dates, or formulas.

  The CSV header row will still be there after the import; just delete that row.
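If you would rather not delete the header row by hand after every import, a small script could drop it from the file beforehand. This is a sketch using Python's `csv` module; the output name `processed_no_header.csv` is a made-up example, and it assumes `processed.csv` is UTF-8 with the header on its first row. Reading with `csv` rather than skipping the first text line keeps tweets that contain line breaks intact.

```python
import csv


def strip_header(src="processed.csv", dst="processed_no_header.csv"):
    """Copy src to dst without its first (header) row; return rows written."""
    # newline="" lets the csv module handle quoted fields with line breaks.
    with open(src, newline="", encoding="utf-8") as fin, \
         open(dst, "w", newline="", encoding="utf-8") as fout:
        reader = csv.reader(fin)
        next(reader, None)  # skip the header row, if there is one
        writer = csv.writer(fout)
        count = 0
        for row in reader:
            writer.writerow(row)
            count += 1
    return count
```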

- Select the rows we want from the fodder spreadsheet, copy-paste them into the final spreadsheet, then fill in the remaining required columns:

  - Account type

  - Content type

  - Reasoning
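Before handing in the final spreadsheet, it may help to check that none of the manually filled columns were missed. A minimal sketch, assuming the final sheet has been exported to CSV with its header row kept and the column names spelled as in the column list at the top of this README; `final.csv`, `MANUAL_COLUMNS`, and `missing_fields` are hypothetical names.

```python
import csv

# Columns filled in by hand after copy-pasting rows into the final sheet.
MANUAL_COLUMNS = ("Account type", "Content type", "Reasoning")


def missing_fields(rows):
    """Return {row ID: [blank manual columns]} for rows that are incomplete."""
    problems = {}
    for row in rows:
        # Treat missing, empty, and whitespace-only cells as blank.
        blank = [col for col in MANUAL_COLUMNS if not (row.get(col) or "").strip()]
        if blank:
            problems[row.get("ID", "?")] = blank
    return problems


def check_export(path="final.csv"):
    """Read an exported copy of the final sheet and report incomplete rows."""
    with open(path, newline="", encoding="utf-8") as f:
        return missing_fields(csv.DictReader(f))
```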