A collection of scripts and notebooks from my exploration of neural networks. For this study, I'm consulting a collection of whitepapers and some lectures by Andrej Karpathy.

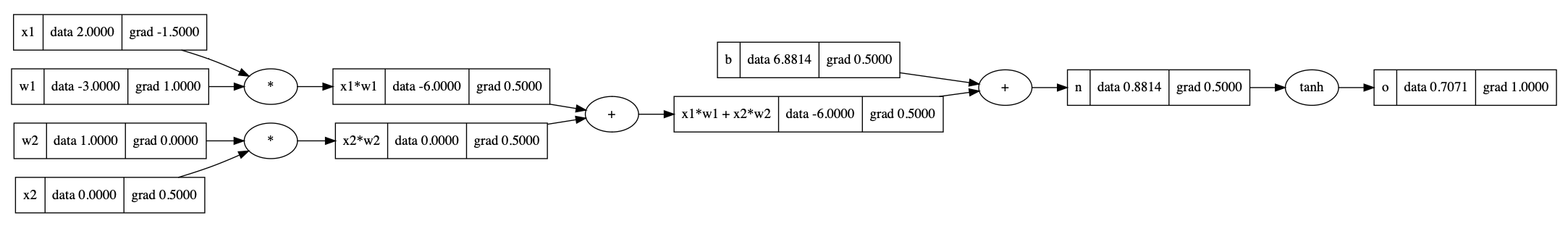

A basic autograd-style engine implementing backpropagation over a scalar DAG, plus a minimal neural network library. Inspired by micrograd and intended as a learning instrument.
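
To make the idea concrete, here is a minimal sketch of scalar reverse-mode autodiff in plain Python. It is not the code in this repo: the `Value` class, its `_parents` and `_backward` attributes, and the choice of supported ops (`+`, `*`, `tanh`) are assumptions made for illustration, loosely following the micrograd approach.

```python
import math


class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents          # nodes this value was computed from
        self._backward = lambda: None    # filled in by the op that creates this node

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward():
            # d tanh(a)/da = 1 - tanh(a)^2
            self.grad += (1.0 - t * t) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the DAG, then apply the chain rule from output to inputs.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


# A single tanh neuron with one input: y = tanh(x * w + b)
x, w, b = Value(2.0), Value(-3.0), Value(1.0)
y = (x * w + b).tanh()
y.backward()
print(y.data, x.grad, w.grad, b.grad)
```

Gradients are accumulated with `+=` so that a node used more than once in the graph receives the sum of the contributions from each use.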

Example NN value tree rendering

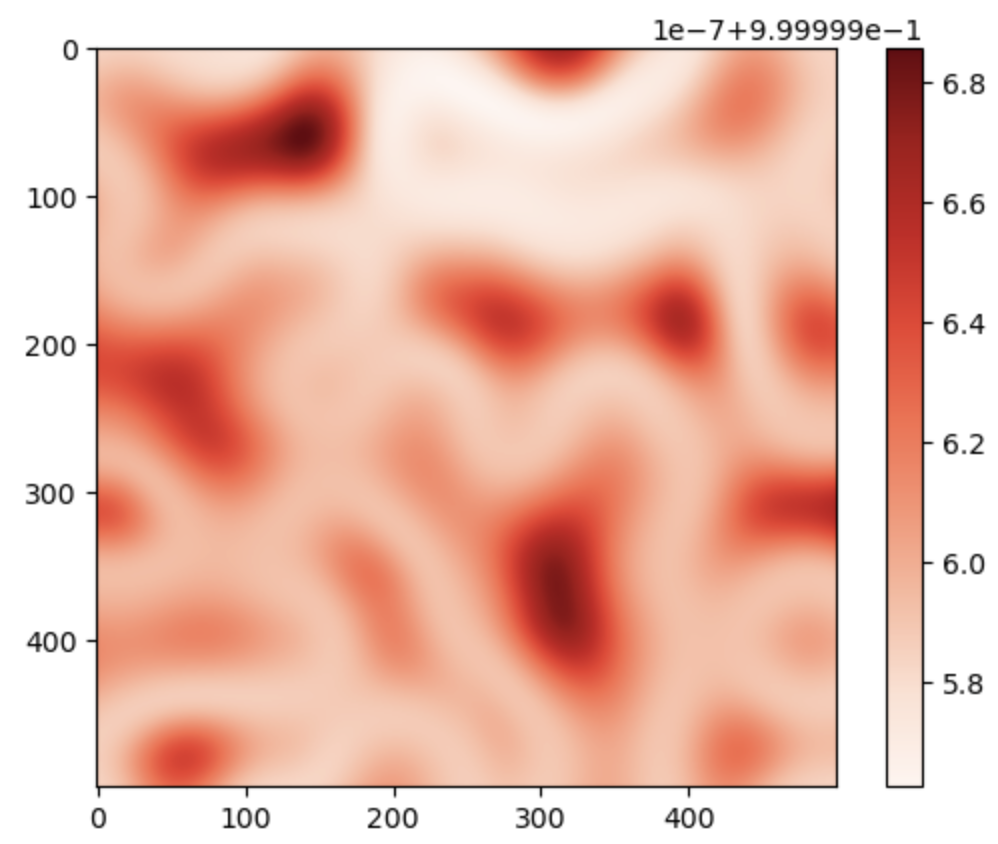

Score ground truth map

Model score prediction map

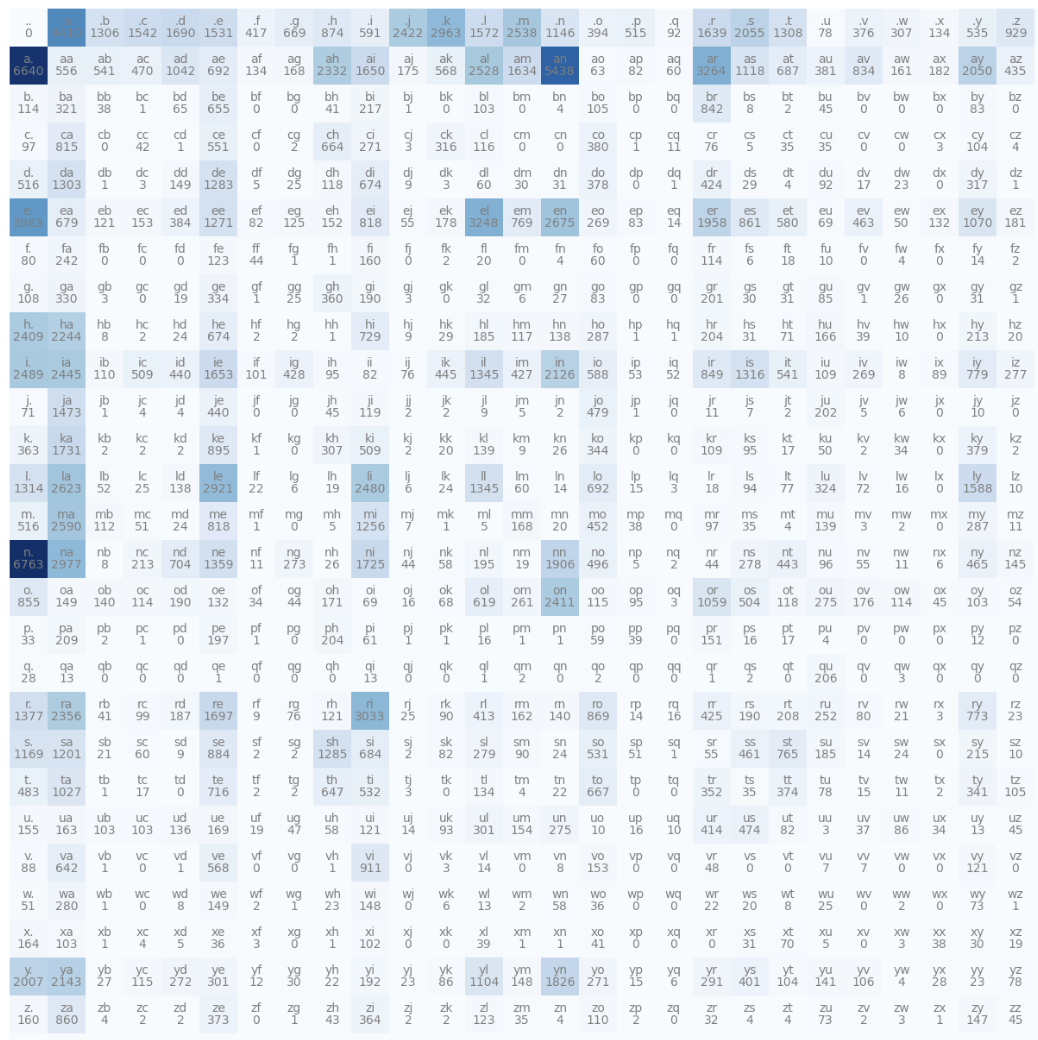

Implementation of a bigram (and trigram) model using PyTorch tensors for next-character prediction. Trained on SSA name data.
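
The counting approach behind a bigram model can be sketched as below. To keep the example dependency-free it uses plain Python dictionaries rather than PyTorch tensors, so it is a stand-in for the idea and not the notebook's implementation. The three-name list, the `.` start/end marker, and the function names are assumptions for illustration.

```python
import random
from collections import Counter

# Hypothetical stand-in for the SSA name data.
names = ["emma", "olivia", "ava"]


def bigram_counts(words):
    """Count adjacent character pairs, using '.' as a start/end marker."""
    counts = Counter()
    for word in words:
        chars = ["."] + list(word) + ["."]
        for prev, nxt in zip(chars, chars[1:]):
            counts[(prev, nxt)] += 1
    return counts


def next_char_probs(counts, prev):
    """Normalize the counts for one preceding character into probabilities."""
    row = {nxt: n for (p, nxt), n in counts.items() if p == prev}
    total = sum(row.values())
    return {nxt: n / total for nxt, n in row.items()}


def sample_name(counts, rng):
    """Sample characters one at a time until the end marker is drawn."""
    out, prev = [], "."
    while True:
        probs = next_char_probs(counts, prev)
        nxt = rng.choices(list(probs), weights=list(probs.values()))[0]
        if nxt == ".":
            return "".join(out)
        out.append(nxt)
        prev = nxt


counts = bigram_counts(names)
print(sample_name(counts, random.Random(0)))
```

A trigram variant would condition on the two preceding characters instead of one, which means keying the counts on a pair of characters plus the next character.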

Bigram frequency render

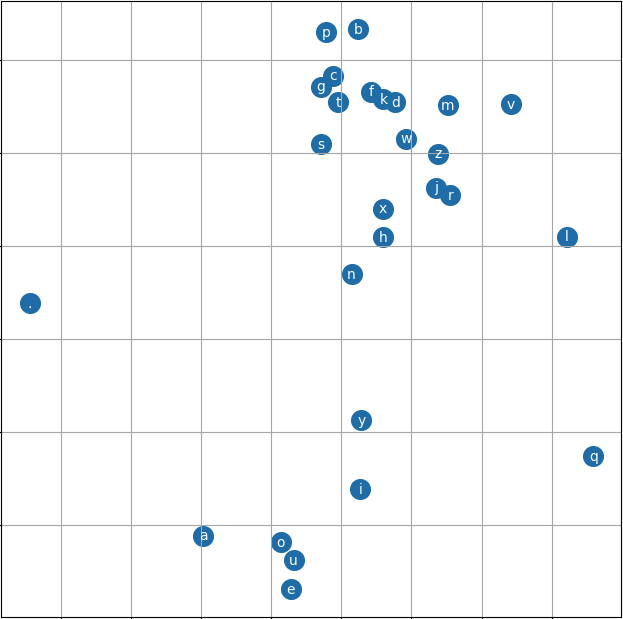

Continuation of name generation using SSA data, this time with an MLP based on the 2003 paper "A Neural Probabilistic Language Model" by Bengio et al. Includes exploration of how the embedding space is organized after training, experimental determination of a good learning rate, train/dev/test splits, and some hyperparameter tuning.
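
As a rough sketch of the data preparation such a model needs, the snippet below turns each name into (context, next character) pairs and splits the names into train/dev/test sets. The context length of 3, the 80/10/10 split, the `.` padding marker, and the function names are all assumptions for illustration, not values taken from the notebook.

```python
import random


def build_examples(words, block_size=3):
    """Turn each word into (context, target) pairs with a fixed-length context."""
    examples = []
    for word in words:
        context = ["."] * block_size              # pad the start with markers
        for ch in list(word) + ["."]:             # '.' also marks the end
            examples.append(("".join(context), ch))
            context = context[1:] + [ch]          # slide the window forward
    return examples


def split_words(words, seed=0, train=0.8, dev=0.1):
    """Shuffle, then cut into train/dev/test; the remainder goes to test."""
    words = list(words)
    random.Random(seed).shuffle(words)
    n_train = int(train * len(words))
    n_dev = int(dev * len(words))
    return (
        words[:n_train],
        words[n_train:n_train + n_dev],
        words[n_train + n_dev:],
    )


for context, target in build_examples(["ava"]):
    print(context, "->", target)
```

Splitting by name before building examples keeps every context window from a given name in the same split.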

The model's 2D embedding space rendered as a scatter plot